Benchmarking Catalysis: How Standardized Databases Are Revolutionizing Catalyst Discovery and Development

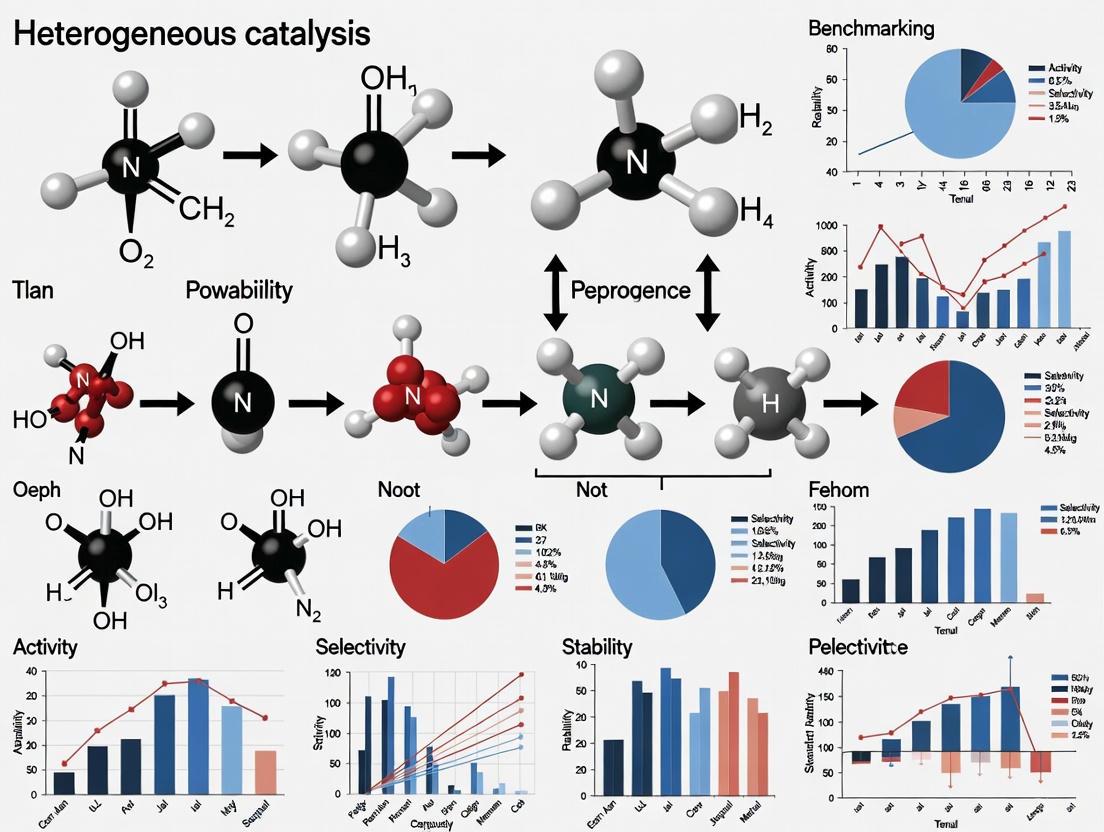

This article explores the critical role of benchmarking databases in addressing reproducibility challenges and accelerating innovation in heterogeneous catalysis research.

Abstract

This article explores the critical role of benchmarking databases in addressing reproducibility challenges and accelerating innovation in heterogeneous catalysis research. We examine how platforms like CatTestHub implement FAIR data principles to create standardized references for catalyst performance evaluation. The content covers foundational concepts of catalytic benchmarking, methodological frameworks for database implementation, strategies for overcoming data quality issues, and validation approaches for comparative analysis. For researchers and drug development professionals, this resource provides essential insights into leveraging standardized catalytic data to enhance research rigor, enable reliable comparisons, and accelerate materials discovery across biomedical and chemical applications.

The Catalysis Reproducibility Crisis: Why Benchmarking Databases Are Essential

Understanding the Heterogeneous Catalysis Data Challenge

The field of heterogeneous catalysis research, crucial for chemical manufacturing and energy technologies, is navigating a profound data challenge. While computational methods have advanced, allowing for the screening of materials in silico, the ultimate validation of new catalysts relies on experimental benchmarking [1]. However, the availability of consistent, high-quality experimental data is hindered by variability in reaction conditions, reporting procedures, and a lack of standardized protocols [2] [3]. This article objectively compares the traditional, fragmented approach to catalysis data with the emerging paradigm of centralized benchmarking databases, using the recently developed CatTestHub as a primary example [2].

Comparative Analysis of Catalysis Data Management Approaches

The following table contrasts the key characteristics of traditional data handling versus modern, structured database approaches.

| Feature | Traditional Fragmented Approach | Structured Database (CatTestHub) |
|---|---|---|
| Data Repository | Isolated systems, lab notebooks, individual publications [4] | Centralized, open-access database or spreadsheet [2] |
| Data Silos | Prevalent; prevent a holistic view and yield fragmented insights [4] | Integrated data from various sources into a unified format [2] |
| Data Quality | Often poor; includes duplicate, inaccurate, or incomplete information [4] | Automated quality checks and detailed metadata for context [2] |
| Benchmarking Ability | Difficult due to inconsistent conditions and reporting [2] | Enables direct comparison through standardized measurements [2] |
| FAIR Principles | Rarely followed, making data hard to find and reuse [5] | Informs design; ensures findability, accessibility, interoperability, reuse [2] |
| Community Aspect | Limited; data is not consolidated for community-wide use [2] | Serves as an open-access community platform for benchmarking [2] |

Experimental Protocols for Catalytic Benchmarking

A core requirement for generating reliable, comparable data is the use of rigorous and standardized experimental procedures. The methodologies below are cited as exemplars for producing high-quality catalysis data.

Protocol for "Clean" Catalyst Testing

This methodology, designed to account for the dynamic nature of catalysts under reaction conditions, ensures consistent and reproducible data generation [5].

- Catalyst Activation: A rapid activation procedure is first performed, exposing the fresh catalyst to harsh conditions (e.g., up to 450°C) for 48 hours to bring it quickly to a steady state [5].

- Systematic Kinetic Analysis: Following activation, the catalyst undergoes a three-step testing sequence [5]:

  - Temperature Variation: The reaction temperature is varied to determine the catalyst's performance across a thermal range.

  - Contact Time Variation: The feed flow rate is adjusted to understand the influence of reactant-catalyst contact time.

  - Feed Variation: The reactant composition is altered, which can include co-dosing reaction intermediates or varying the alkane/oxygen/steam ratios [5].

- Comprehensive Characterization: The catalysts are characterized using multiple techniques (e.g., N₂ adsorption, XPS) both before and after reaction to link physicochemical properties to performance [5].
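The temperature-variation step is typically analyzed by fitting rates to the Arrhenius equation. The sketch below is one minimal way to do that, assuming rates measured at several temperatures under otherwise fixed conditions; the function name and the synthetic data (generated from an assumed 80 kJ/mol barrier) are illustrative and do not come from the cited protocol.

```python
import math

R_GAS = 8.314  # gas constant, J/(mol*K)

def apparent_activation_energy(temps_k, rates):
    """Least-squares line through ln(rate) vs 1/T.

    Returns (Ea in J/mol, pre-exponential factor in the units of rate).
    """
    xs = [1.0 / t for t in temps_k]
    ys = [math.log(r) for r in rates]
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    slope = sxy / sxx                      # slope equals -Ea / R
    intercept = y_mean - slope * x_mean    # intercept equals ln(A)
    return -slope * R_GAS, math.exp(intercept)

# Synthetic, hypothetical data: Ea = 80 kJ/mol, A = 1e6 (arbitrary rate units)
temps = [500.0, 520.0, 540.0, 560.0]
rates = [1.0e6 * math.exp(-80000.0 / (R_GAS * t)) for t in temps]

ea, a = apparent_activation_energy(temps, rates)
print(f"Apparent Ea = {ea / 1000:.1f} kJ/mol")
```

With real measurements the fit is only meaningful if the rates are taken at low conversion and steady state, which is why the protocol places activation before the kinetic sequence.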

CatTestHub Benchmarking Workflow

This protocol outlines the general workflow for contributing to and using a community benchmarking database [2].

- Source Benchmark Catalyst: Obtain a well-characterized, widely available catalyst, such as those from commercial vendors or standardized materials like EuroPt-1 [2].

- Perform Standardized Reaction Protocol: Conduct catalytic tests (e.g., methanol decomposition, Hofmann elimination) using agreed-upon reaction conditions to ensure data comparability [2].

- Characterize Catalyst Structure: Perform structural characterization (e.g., surface area, acidity) to provide nanoscopic context for the macroscopic kinetic data [2].

- Report Data & Reactor Configuration: Document all kinetic data, reaction conditions, and details of the reactor configuration as per database requirements [2].

- Submit to Central Database: Contribute the structured data to the open-access database (e.g., CatTestHub) [2].

- Community Validation: With repeated measurements by independent researchers, the data for a given catalyst and reaction becomes a validated community benchmark [2].
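The community-validation step can be pictured as pooling repeated measurements from independent laboratories. The following sketch assumes each submission is a `(lab_id, rate)` pair for the same catalyst, reaction, and conditions; the `min_labs` threshold and all numbers are hypothetical and are not a rule stated by CatTestHub.

```python
from statistics import mean, stdev

def summarize_benchmark(measurements, min_labs=3):
    """Pool rates reported by independent labs for one catalyst/reaction pair.

    measurements: list of (lab_id, rate) tuples.
    min_labs: assumed number of distinct labs needed before the value is
              treated as a community benchmark (illustrative only).
    """
    labs = {lab for lab, _ in measurements}
    rates = [rate for _, rate in measurements]
    avg = mean(rates)
    spread = stdev(rates) if len(rates) > 1 else 0.0
    return {
        "n_labs": len(labs),
        "mean_rate": avg,
        "rel_std": spread / avg,
        "validated": len(labs) >= min_labs,
    }

# Hypothetical turnover rates (s^-1) from three labs
submissions = [("lab_a", 0.10), ("lab_b", 0.12), ("lab_c", 0.11)]
summary = summarize_benchmark(submissions)
print(summary)
```

The relative standard deviation gives a rough sense of how tightly the independent measurements agree.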

The Scientist's Toolkit: Key Research Reagents & Materials

The table below details essential materials and their functions as derived from the featured experiments and databases.

| Research Reagent / Material | Function in Catalysis Research |
|---|---|
| Vanadyl Pyrophosphate (VPO) | An industrial benchmark catalyst for n-butane oxidation to maleic anhydride; used for performance comparison [5]. |
| MoVTeNbOx (M1 phase) | A mixed-metal oxide catalyst extensively researched for propane oxidation; serves as a reference material [5]. |
| EuroPt-1 | A standardized platinum catalyst historically developed to enable efficient comparisons between researchers [2]. |
| H-ZSM-5 Zeolite | A standardized solid acid catalyst used for probe reactions like Hofmann elimination to benchmark acid site activity [2]. |
| Pt/SiO₂ | A common supported metal catalyst used in benchmarking reactions such as methanol decomposition [2]. |
| Methanol & Formic Acid | Small, well-understood probe molecules whose decomposition reactions are used to benchmark the activity of metal sites [2]. |
| Alkylamines | Probe molecules (e.g., for Hofmann elimination) used to characterize the acid site strength and concentration in solid acids like zeolites [2]. |

The "Heterogeneous Catalysis Data Challenge" stems from a legacy of non-standardized, inaccessible data that hinders progress. The comparative analysis indicates that structured, community-driven databases like CatTestHub offer a more rigorous pathway for experimental catalysis research than fragmented data handling. By adopting standardized experimental protocols and contributing to centralized benchmarks, researchers can overcome data silos and quality issues. This shift enables true performance validation, accelerates the development of advanced materials, and is fundamental to establishing a robust, data-centric future for catalytic science.

Catalysis research is a cornerstone of modern chemical and biochemical technologies, fundamental to societal needs for chemicals, fuels, and pharmaceuticals [6] [7]. The ultimate goal of much of this research is the selective acceleration of the rate of catalytic turnover beyond the state-of-the-art [6]. However, a persistent challenge has been answering a fundamental question: how can a newly reported catalytic activity be verified to outperform existing standards? [6] The concept of benchmarking—the evaluation of a quantifiable observable against an external standard—provides the solution [6]. In catalysis science, this involves community-based consensus on making reproducible, fair, and relevant assessments of key performance metrics like activity, selectivity, and deactivation profile [8]. This guide traces the evolution of catalytic benchmarking from its early beginnings with standardized materials like EuroPt-1 to the modern, open-access databases that are revolutionizing the field, providing an objective comparison of the methodologies, capabilities, and applications of these critical resources.

The Early Era of Benchmarking: Standard Reference Catalysts

The first significant efforts in catalytic benchmarking emerged from the need for common materials that would enable reproducible and comparable experimental measurements between different research laboratories.

Table 1: Early Standard Reference Catalysts

| Catalyst Name | Material Type | Developing Body | Primary Purpose | Key Strength | Inherent Limitation |
|---|---|---|---|---|---|
| EuroPt-1 [6] | Platinum on Silica | Johnson Matthey | Provide a common Pt-based material for comparing experimental measurements. | Availability of a well-characterized, common material. | No standard procedure for catalytic activity measurement. |
| EuroNi-1 [6] | Nickel Catalyst | EUROCAT | Enable comparisons between researchers for nickel-catalyzed reactions. | Abundantly available and reliably synthesized. | Lack of agreed-upon reaction conditions for testing. |
| World Gold Council Standards [6] | Gold Catalysts | World Gold Council | Enable efficient performance comparisons between researchers using gold catalysts. | Synthesized with the explicit goal of being a standard. | Limited to specific gold-catalyzed reactions. |
| International Zeolite Association Standards [6] | MFI and FAU Zeolites | International Zeolite Association | Provide standard zeolite materials to any researcher by request. | Readily available to the global research community. | No unified activity measurement protocol. |

These early initiatives established the foundational principle of using well-characterized and abundantly available catalysts from commercial vendors or consortia [6]. While a critical first step, these programs met with limited success. The primary shortcoming was that, despite the availability of a common material, no standard procedure or set of conditions for measuring catalytic activity was universally implemented [6]. Consequently, while researchers could use the same catalyst, differences in reaction setups, conditions, and data reporting made truly quantitative comparisons across studies difficult. Furthermore, these efforts lacked a centralized, open-access database for uniformly reporting catalytic data measured by independent researchers [6].

The Rise of Modern Benchmarking Databases

Driven by the limitations of early approaches and the contemporary focus on data-centric science, the field has witnessed the development of more sophisticated benchmarking platforms. These modern resources aim not only to provide reference materials but also to standardize data reporting and provide open-access community platforms.

CatTestHub: A Contemporary Solution

CatTestHub represents a modern response to the challenges of experimental benchmarking in heterogeneous catalysis [6] [3]. It is an online, open-access database specifically designed to standardize data reporting and provide a community-wide benchmark [6].

Table 2: Modern Catalytic Benchmarking Database: CatTestHub

| Feature | Description | Advantage over Historical Predecessors |
|---|---|---|
| Database Design | Informed by FAIR principles (Findable, Accessible, Interoperable, Reusable); uses a simple spreadsheet format [6]. | Ensures long-term accessibility and ease of use, unlike proprietary or complex formats. |
| Hosted Catalyst Classes | Metal catalysts and solid acid catalysts [6]. | Provides benchmarks for distinct classes of active sites. |
| Probe Reactions | Methanol & formic acid decomposition (metal catalysts); Hofmann elimination of alkylamines (zeolites) [6] [3]. | Uses well-understood chemistries to probe specific catalytic functionalities. |
| Data Curation | Macroscopic kinetic data, material characterization, and reactor configuration details [6]. | Contextualizes macroscopic rates with nanoscopic active site information, enabling deeper insights. |
| Access & Accountability | Available online (cpec.umn.edu/cattesthub); uses DOIs, ORCID, and funding acknowledgements [6]. | Provides electronic means for accountability, intellectual credit, and traceability, which earlier efforts lacked. |

The core mission of CatTestHub is to house experimentally measured chemical rates of reaction free from corrupting influences like catalyst deactivation or heat/mass transfer limitations, which is essential for establishing a reliable benchmark [6]. Its architecture is designed to be a living resource, where the quality and utility of the benchmark are improved through the continuous addition of kinetic information by the global catalysis community [6] [3].
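Comparing rates across catalysts usually means normalizing a measured rate by the number of active sites to obtain a turnover frequency (TOF). The sketch below shows that arithmetic for a supported metal, assuming the site count is estimated from metal loading and dispersion; the function names and input numbers are hypothetical and are not taken from the database.

```python
def site_density_from_dispersion(metal_wt_fraction, dispersion, molar_mass_g_per_mol):
    """Surface metal sites in mol per gram of catalyst.

    metal_wt_fraction: e.g. 0.01 for a 1 wt% catalyst
    dispersion: fraction of metal atoms exposed at the surface (0 to 1)
    """
    return metal_wt_fraction * dispersion / molar_mass_g_per_mol

def turnover_frequency(rate_mol_per_g_s, sites_mol_per_g):
    """TOF in s^-1: moles converted per mole of active sites per second."""
    if sites_mol_per_g <= 0:
        raise ValueError("site density must be positive")
    return rate_mol_per_g_s / sites_mol_per_g

# Hypothetical example: 1 wt% Pt (195.08 g/mol), 50% dispersion,
# measured rate of 2.0e-6 mol per gram of catalyst per second
sites = site_density_from_dispersion(0.01, 0.5, 195.08)
tof = turnover_frequency(2.0e-6, sites)
print(f"TOF = {tof:.4f} s^-1")
```

A TOF computed this way only reflects intrinsic activity if the underlying rate is free of deactivation and transport artifacts, which is the condition the database is designed to document.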

Experimental Protocols for Benchmarking

The credibility of any benchmark hinges on the rigor and reproducibility of the experimental methods used to generate the data. The following section outlines the standardized protocols and key reagents essential for reliable catalytic benchmarking.

Workflow for Establishing a Catalytic Benchmark

The process of creating and validating a community-accepted benchmark follows a structured workflow that integrates material selection, kinetic measurement, and data sharing. The diagram below visualizes this multi-step methodology.

The Scientist's Toolkit: Essential Reagents for Benchmarking

The following table details key reagents and materials commonly used in catalytic benchmarking experiments, as derived from established protocols [6].

Table 3: Essential Research Reagent Solutions for Catalytic Benchmarking

| Reagent/Material | Function in Benchmarking | Example from Literature |
|---|---|---|
| Reference Catalysts (e.g., Pt/SiO₂, H-ZSM-5) | Serve as the common standard against which new catalysts or technologies are evaluated. | EuroPt-1; Commercial 1% Pt/SiO₂ (Sigma Aldrich 520691) used in CatTestHub [6]. |
| Probe Molecules (e.g., Methanol, Formic Acid, Alkylamines) | Undergo well-understood reactions (decomposition, elimination) to probe specific active sites on catalysts. | Methanol (>99.9%, Sigma Aldrich) for metal site benchmarking; Alkylamines for solid acid site benchmarking [6]. |
| High-Purity Gases (e.g., H₂, N₂) | Act as reactants, carriers, or purge gases; purity is critical to avoid catalyst poisoning. | Hydrogen (99.999%) and Nitrogen (99.999%) procured from industrial gas suppliers [6]. |
| Supported Metal Catalysts | Provide a consistent and dispersed form of the active metal for reactions like hydrogenation and decomposition. | Pt/C, Pd/C, Ru/C, Rh/C, and Ir/C from commercial sources (Strem Chemicals, Thermo Fisher) [6]. |

Comparative Analysis: From Past to Present

The evolution from standard catalysts to modern databases represents a significant leap in ensuring rigorous and reproducible catalysis research. The table below provides a consolidated comparison of this progression.

Table 4: Comparative Analysis: Standard Catalysts vs. Modern Benchmarking Databases

| Aspect | Era of Standard Catalysts (e.g., EuroPt-1) | Era of Modern Databases (e.g., CatTestHub) |
|---|---|---|
| Core Offering | Physical reference material [6]. | Integrated platform of material, standardized data, and protocols [6]. |
| Standardization Focus | Catalyst composition and structure [6]. | Catalyst + Reaction conditions + Data reporting format [6]. |
| Data Accessibility | Data scattered across literature, difficult to compare [6]. | Centralized, open-access repository with uniform data [6]. |
| Community Role | Passive: use the provided material. | Active: contribute to and validate the growing benchmark [6]. |
| Overcoming Limitations | Did not fully solve the problem of data comparability [6]. | Directly addresses reproducibility and comparability via FAIR data principles [6]. |

The journey of catalytic benchmarking from EuroPt-1 to CatTestHub marks a fundamental shift in the culture of experimental catalysis research. The early standard catalysts laid the groundwork by emphasizing the need for common materials, but they failed to create a unified framework for measuring and reporting performance. Modern databases have learned from these limitations, building upon the foundation of well-characterized materials and integrating them with standardized protocols, community-driven data collection, and open-access platforms designed around the FAIR principles [6]. This evolution empowers researchers to make rigorous, quantitative comparisons of catalytic activity, truly contextualizing their results against the state-of-the-art. By providing a structured and ever-improving benchmark, these modern tools are not just reflecting the progress of the field—they are actively accelerating it by ensuring that new discoveries in catalyst materials and technologies are built upon a solid, verifiable, and communal foundation of data.

The field of experimental heterogeneous catalysis has long faced a significant challenge: the inability to quantitatively compare new catalytic materials and technologies due to inconsistent data collection and reporting practices across research institutions. While certain catalytic chemistries have been studied for decades, quantitative comparisons based on literature information remain hindered by variability in reaction conditions, types of reported data, and reporting procedures [3]. This lack of standardization makes it difficult to verify whether newly reported catalytic activities truly outperform established benchmarks, ultimately slowing progress in catalyst development for sustainable energy and chemical production [6].

CatTestHub emerges as a direct response to this challenge, providing an open-access community platform for benchmarking experimental catalysis data. Designed according to FAIR data principles (Findability, Accessibility, Interoperability, and Reuse), this database represents a paradigm shift toward data-centric approaches in catalysis research [6] [9]. By combining systematically reported catalytic activity data for selected probe chemistries with relevant material characterization and reactor configuration information, CatTestHub establishes a much-needed foundation for rigorous comparison of catalytic performance across different laboratories and research programs [9].

Understanding the Catalysis Database Landscape

The movement toward open-access databases in catalysis research has gained significant momentum in recent years, primarily driven by the increasing volume of computational data and the need for organized repositories. Before examining CatTestHub's specific contributions, it is essential to understand the existing landscape of catalysis databases and their respective specializations.

Table: Comparison of Catalysis Database Platforms

| Database | Primary Focus | Data Type | Key Features | Access |
|---|---|---|---|---|
| CatTestHub | Experimental benchmarking | Experimental kinetics | Probe reactions, material characterization, reactor details | Open-access spreadsheet |
| Catalysis-Hub.org | Surface reactions | Computational data | >100,000 chemisorption/reaction energies, atomic geometries | Web interface & Python API |
| Open Catalyst Project | Catalyst discovery | Computational data | DFT calculations for renewable energy applications | Open-access datasets |
| CatApp | Surface reactions | Computational data | ~3,000 reactions on transition metal surfaces | Web browser access |

The table above highlights a significant gap in the existing ecosystem: while multiple platforms serve computational data, CatTestHub is uniquely focused on standardized experimental measurements [6] [10]. This distinction is crucial because experimental validation remains the ultimate benchmark for catalytic performance, despite the valuable predictive capabilities of computational approaches. Catalysis-Hub.org, for instance, hosts over 100,000 chemisorption and reaction energies obtained from electronic structure calculations but explicitly cautions that results from different datasets "are not necessarily directly comparable" due to variations in DFT codes, exchange-correlation functionals, and calculation parameters [10]. Similarly, the Open Catalyst Project focuses primarily on computational data for renewable energy applications [6].

Unlike these computational repositories, CatTestHub addresses the experimental benchmarking challenge through standardized probe reactions and consistent reporting protocols across contributing researchers [6]. This experimental focus, combined with detailed material characterization and reactor configuration data, provides a critical bridge between computational predictions and real-world catalytic performance.

CatTestHub's Architectural Framework and Design Principles

Database Structure and Implementation

CatTestHub employs a deliberately simple spreadsheet-based format that prioritizes long-term accessibility and ease of use. This practical design choice ensures that the database remains usable regardless of future software evolution, addressing a common limitation of more complex database architectures that may become obsolete with technological changes [6]. The database structure encompasses three primary domains: experimentally measured chemical reaction rates, comprehensive material characterization data, and detailed reactor configuration information relevant to chemical reaction turnover on catalytic surfaces [6].

The curation process intentionally focuses on collecting observable macroscopic quantities measured under well-defined reaction conditions, supported by detailed descriptions of reaction parameters and characterization information for each catalyst investigated [6]. This systematic approach ensures that each data entry contains sufficient information for experimental reproduction and validation—a critical requirement for establishing reliable benchmarks. The database also incorporates metadata where appropriate to provide essential context for the reported data, enhancing both interpretability and reusability [6].
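To make the three data domains concrete, the sketch below models a single database row as a Python dataclass. It is only an illustration of the structure described above: the class name, field names, and example values are assumptions, not the actual CatTestHub column layout.

```python
from dataclasses import dataclass, field, asdict

@dataclass
class BenchmarkEntry:
    """One hypothetical benchmark row spanning the three described domains."""
    catalyst: str
    reaction: str
    temperature_k: float
    rate: float
    rate_units: str
    characterization: dict = field(default_factory=dict)  # e.g. surface area
    reactor: dict = field(default_factory=dict)           # e.g. reactor type
    metadata: dict = field(default_factory=dict)          # e.g. DOI, contributor

    def missing_domains(self):
        """Names of required domains that were left empty."""
        return [name for name in ("characterization", "reactor")
                if not getattr(self, name)]

# Hypothetical entry with no reactor details supplied
entry = BenchmarkEntry(
    catalyst="Pt/SiO2",
    reaction="methanol decomposition",
    temperature_k=473.0,
    rate=0.012,
    rate_units="mol/(mol_site*s)",
    characterization={"surface_area_m2_per_g": 300.0},
)
print(entry.missing_domains())
print(sorted(asdict(entry).keys()))
```

Flagging empty domains at entry time is one simple way to enforce the requirement that every data point carries enough context to be reproduced.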

Adherence to FAIR Data Principles

CatTestHub's architecture is explicitly informed by the FAIR guiding principles for scientific data management, which emphasize Findability, Accessibility, Interoperability, and Reuse [6] [9]. Each of these principles translates to specific implementation features within the database:

- Findability: The spreadsheet format provides inherent organizational structure, while unique digital object identifiers (DOIs) associated with each dataset enable precise citation and tracking [6].

- Accessibility: Hosted online as a spreadsheet at cpec.umn.edu/cattesthub, the database ensures immediate access without specialized software or computational expertise [6].

- Interoperability: The use of common, non-proprietary formats facilitates data exchange and integration with other resources, while metadata provides essential context for cross-referencing [6].

- Reuse: Comprehensive documentation of experimental conditions, material properties, and reactor configurations enables meaningful repurposing of data for various research applications beyond their original intent [6].
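A contributor-side check of the accountability metadata might look like the sketch below. It assumes each dataset carries a DOI, the contributor's ORCID iD, and a funding acknowledgement, and it validates only the general shape of those identifiers; the dictionary keys and placeholder values are hypothetical, and the source does not describe how CatTestHub itself validates submissions.

```python
import re

# DOIs start with "10.", a 4-9 digit registrant code, a slash, then a suffix.
DOI_PATTERN = re.compile(r"^10\.\d{4,9}/\S+$")
# ORCID iDs are four hyphen-separated groups of four; the last character may be X.
ORCID_PATTERN = re.compile(r"^\d{4}-\d{4}-\d{4}-\d{3}[\dX]$")

def metadata_problems(meta):
    """Return the names of metadata fields that are missing or malformed."""
    problems = []
    if not DOI_PATTERN.match(meta.get("doi", "")):
        problems.append("doi")
    if not ORCID_PATTERN.match(meta.get("orcid", "")):
        problems.append("orcid")
    if not meta.get("funding"):
        problems.append("funding")
    return problems

# Placeholder identifiers for illustration only
complete = {
    "doi": "10.1234/example.dataset",
    "orcid": "0000-0000-0000-0000",
    "funding": "Hypothetical grant acknowledgement",
}
print(metadata_problems(complete))
print(metadata_problems({"doi": "not-a-doi"}))
```

A pattern check like this catches formatting mistakes only; it does not confirm that an identifier resolves to a real record.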

This principled approach to database design represents a significant advancement over prior benchmarking attempts in experimental heterogeneous catalysis, which—despite offering standardized materials—failed to establish standardized measurement procedures or centralized data repositories [6].

Experimental Methodologies and Benchmarking Protocols

Probe Reactions and Catalytic Systems

CatTestHub's current iteration employs carefully selected probe reactions designed to provide fundamental insights into different classes of catalytic functionality. For metal catalysts, the database features methanol decomposition and formic acid decomposition as benchmark chemistries, both of which provide sensitive probes of metallic active sites [6] [3]. For solid acid catalysts, the Hofmann elimination of alkylamines over aluminosilicate zeolites serves as a benchmark reaction that specifically characterizes Brønsted acidity [6] [3].

These reactions were selected based on several criteria: they provide clear mechanistic insights into specific catalytic functionalities, they can be conducted under well-defined conditions that minimize transport limitations, and they generate reproducible kinetic data suitable for cross-laboratory comparison [6]. The initial database release spans over 250 unique experimental data points collected across 24 distinct solid catalysts, demonstrating substantial coverage despite being an emerging resource [9].

Standardized Experimental Workflow

The experimental methodology supporting CatTestHub follows a rigorous, standardized workflow designed to ensure data quality and comparability. The process begins with catalyst selection, prioritizing materials that are either commercially available (from sources like Zeolyst or Sigma Aldrich), consortium-provided, or reliably synthesizable by individual researchers [6]. This accessibility focus ensures that benchmark materials can be widely utilized across the research community.

Diagram: CatTestHub Experimental Workflow

Following catalyst characterization, kinetic measurements are conducted under carefully controlled conditions specifically designed to avoid convoluting catalytic activity with transport phenomena [6]. The database specifically documents whether reported turnover rates are free from corrupting influences such as catalyst deactivation, heat/mass transfer limitations, and thermodynamic constraints—a critical consideration often overlooked in conventional catalytic studies [6]. This methodological rigor ensures that the resulting data reflect intrinsic catalytic properties rather than experimental artifacts.
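One widely taught diagnostic for internal mass-transfer limitations is the Weisz-Prater criterion, which compares the observed rate with the rate of diffusion inside a catalyst particle. The source does not say which diagnostics CatTestHub contributors apply, so the sketch below is an illustrative example of such a check rather than the database's method; the input values and the 0.1 cut-off are assumptions.

```python
def weisz_prater(rate_obs, pellet_density, particle_radius, d_eff, c_surface):
    """Weisz-Prater number (dimensionless).

    rate_obs:        observed rate, mol/(kg_cat*s)
    pellet_density:  catalyst particle density, kg/m^3
    particle_radius: m
    d_eff:           effective diffusivity in the particle, m^2/s
    c_surface:       reactant concentration at the particle surface, mol/m^3
    """
    return rate_obs * pellet_density * particle_radius ** 2 / (d_eff * c_surface)

def internal_diffusion_negligible(c_wp, threshold=0.1):
    """True when the number is well below 1; the threshold is an assumed cut-off."""
    return c_wp < threshold

# Hypothetical operating point
c_wp = weisz_prater(
    rate_obs=1.0e-3,
    pellet_density=1000.0,
    particle_radius=1.0e-4,
    d_eff=1.0e-6,
    c_surface=10.0,
)
print(f"C_WP = {c_wp:.2e}, negligible = {internal_diffusion_negligible(c_wp)}")
```

A value much smaller than 1 suggests that the measured rate is not limited by diffusion within the particle; external transport and heat effects need separate checks.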

Essential Research Reagents and Materials

The experimental data within CatTestHub relies on carefully selected catalytic materials and reagents that ensure reproducibility across different laboratories. The following table details key research reagents referenced in the CatTestHub methodology, along with their specific functions in catalytic benchmarking:

Table: Essential Research Reagents for Catalytic Benchmarking

| Reagent/Material | Source | Function in Benchmarking |
|---|---|---|
| Pt/SiO₂ | Sigma Aldrich (520691) | Benchmark platinum catalyst for methanol decomposition |
| Pt/C | Strem Chemicals (7440-06-04) | Supported platinum catalyst for comparative studies |
| Pd/C | Strem Chemicals (7440-05-03) | Palladium catalyst for hydrogenation/dehydrogenation |
| Ru/C | Strem Chemicals (7440-18-8) | Ruthenium catalyst for comparison with Pt/Pd |
| Methanol (>99.9%) | Sigma Aldrich (34860-1L-R) | Probe molecule for metal catalyst benchmarking |
| H-ZSM-5 Zeolite | Various sources | Standard solid acid catalyst for amine elimination |
| Nitrogen (99.999%) | Ivey Industries/Airgas | Inert carrier gas for flow reactions |
| Hydrogen (99.999%) | Ivey Industries/Airgas | Reducing agent and reaction component |

These carefully selected materials represent a strategic mix of commercially available catalysts and high-purity reagents that can be sourced consistently by researchers worldwide, forming the foundation for reproducible benchmarking measurements [6].

Comparative Analysis: CatTestHub vs. Computational Databases

Data Quality and Reproducibility

The most significant distinction between CatTestHub and computational databases lies in their fundamental data types and associated reproducibility considerations. Computational databases like Catalysis-Hub.org face inherent challenges in direct comparability because results "are not necessarily directly comparable, even though trends within a dataset are well-converged" due to variations in DFT codes, exchange-correlation functionals, and calculation parameters [10]. This limitation necessitates careful curation when combining datasets from different sources for quantitative analysis.

In contrast, CatTestHub's experimental focus provides direct empirical measurements of catalytic performance under standardized conditions. However, it introduces different methodological considerations related to experimental reproducibility, including catalyst synthesis reproducibility, reactor configuration effects, and measurement precision. The database addresses these challenges through detailed documentation of material properties, reactor geometries, and experimental protocols that enable identification and control of potential variability sources [6].

Accessibility and Usability

CatTestHub's spreadsheet-based format offers distinct practical advantages for researchers without specialized computational training or resources. The straightforward structure allows immediate access and data manipulation using ubiquitous software tools, lowering the barrier to entry for experimental researchers [6]. This approach contrasts with platforms like Catalysis-Hub.org, which, despite offering powerful API access and advanced query capabilities, requires greater technical sophistication to use effectively [10].

This usability advantage comes with potential limitations in scalability and advanced data mining capabilities. As the database grows, maintaining the spreadsheet format may present organizational challenges that structured database systems are specifically designed to address. However, for the current scope of benchmarking data, the practical accessibility benefits likely outweigh these potential limitations.
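Because the data live in a plain spreadsheet, a CSV export can be queried with nothing more than a language's standard library. The sketch below assumes such an export with hypothetical column names (`catalyst`, `reaction`, `temperature_k`, `rate`) and made-up values; the real CatTestHub sheet layout may differ.

```python
import csv
import io

# Stand-in for a CSV export of the spreadsheet; columns and numbers are invented.
SAMPLE = """catalyst,reaction,temperature_k,rate
Pt/SiO2,methanol decomposition,473,0.012
Pt/C,methanol decomposition,473,0.020
H-ZSM-5,Hofmann elimination,473,0.005
"""

def rates_for_reaction(csv_text, reaction):
    """Map catalyst name to reported rate for one probe reaction."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return {
        row["catalyst"]: float(row["rate"])
        for row in reader
        if row["reaction"] == reaction
    }

methanol_rates = rates_for_reaction(SAMPLE, "methanol decomposition")
print(methanol_rates)
```

For a file on disk, the same function works if the file contents are read into a string first; larger collections would be the point at which a structured database starts to pay off.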

Future Directions and Community Implementation

Expansion Roadmap

While CatTestHub currently focuses on metal catalysts and solid acid materials with three probe reactions, its architectural framework is designed for systematic expansion across multiple dimensions. The developers envision continuous addition of kinetic information on select catalytic systems by the broader heterogeneous catalysis community, gradually building a more comprehensive benchmarking resource [9]. This expansion could encompass additional catalyst classes (such as metal oxides, sulfides, or single-atom catalysts), broader reaction networks (including tandem reactions and complex feedstock conversions), and emerging catalytic technologies that leverage non-thermal stimuli [6].

The database's simple structure facilitates this community-driven growth model, allowing researchers to contribute new datasets following the established formatting conventions without requiring complex data transformation or specialized computational tools. This low-barrier contribution model is essential for building a truly comprehensive benchmarking resource that reflects the diversity of contemporary catalysis research.

Implementation in Research Workflows

For individual researchers, CatTestHub provides two primary functionalities: benchmark validation of new catalytic materials against established standards, and methodological calibration of experimental systems using reference catalysts with well-documented performance characteristics [6]. Research groups developing novel catalytic materials can use the database to contextualize their performance claims against standardized benchmarks under comparable conditions, adding credibility to reported advancements.
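The calibration use case amounts to asking whether a lab's own measurement on a reference catalyst agrees with the community value. A minimal sketch follows, assuming the benchmark is summarized by a mean and standard deviation; the tolerance of two standard deviations and all numbers are illustrative choices, not a CatTestHub rule.

```python
def within_benchmark(measured, benchmark_mean, benchmark_std, k=2.0):
    """True if the measurement lies within k standard deviations of the benchmark."""
    return abs(measured - benchmark_mean) <= k * benchmark_std

def relative_deviation(measured, benchmark_mean):
    """Signed fractional difference from the benchmark mean."""
    return (measured - benchmark_mean) / benchmark_mean

# Hypothetical benchmark: mean 0.11 s^-1 with standard deviation 0.01 s^-1
print(within_benchmark(0.105, 0.11, 0.01))   # close to the benchmark
print(within_benchmark(0.150, 0.11, 0.01))   # far from the benchmark
print(f"{relative_deviation(0.105, 0.11):+.1%}")
```

A measurement that falls outside the tolerance points to a setup issue worth investigating, such as temperature calibration or transport effects, before any new catalyst is compared against the benchmark.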

For the broader catalysis community, consistent use of CatTestHub benchmarks promises to enhance cross-study comparability, potentially accelerating the identification of truly promising catalytic materials and strategies. Educational institutions can also leverage this resource for training purposes, providing students with standardized datasets for developing skills in kinetic analysis and catalytic performance evaluation.

CatTestHub represents a significant step toward addressing the long-standing challenge of standardization in experimental heterogeneous catalysis. By providing an open-access platform for curated benchmarking data that follows FAIR principles, this initiative enables meaningful quantitative comparisons across research laboratories and experimental programs [6] [9]. While computational databases offer valuable insights into reaction mechanisms and catalytic trends, CatTestHub fills the critical need for standardized experimental benchmarks against which computational predictions and novel catalytic concepts can be validated [10].

As the catalysis research community increasingly embraces data-centric approaches, resources like CatTestHub will play an essential role in ensuring that experimental advancements are grounded in rigorous, comparable measurements. The continued expansion and adoption of this benchmarking platform promises to accelerate the development of more efficient, selective, and sustainable catalytic technologies for addressing global energy and chemical production challenges.

The field of heterogeneous catalysis currently lacks structured repositories for experimental data, and the publication of machine-readable datasets remains uncommon [11]. This poses a significant challenge for research transparency and knowledge building. The FAIR principles—Findable, Accessible, Interoperable, and Reusable—provide a framework to optimize data sharing and reuse by both humans and machines [12] [13]. Implementing these principles is particularly crucial in catalysis research, where the complexity of data encompassing kinetics, material characterization, and reaction parameters demands careful stewardship to enable scientific progress.

Catalyst discovery has historically relied on serendipity rather than systematic design, as exemplified by the accidental discovery of chlorine as a promoter for ethylene epoxidation on silver in the 1930s [14]. The adoption of FAIR data practices represents a transformative opportunity to add rationale to this otherwise serendipitous discovery process, creating a foundation for advanced data analytics and machine learning applications that can accelerate the development of new catalytic materials [14] [11].

Understanding the FAIR Principles

The FAIR principles describe how research outputs should be organized so they can be more easily accessed, understood, exchanged, and reused [13]. Table 1 outlines the core components of each FAIR dimension.

Table 1: The Four Components of FAIR Data Principles

| FAIR Component | Core Requirements | Implementation Examples |
|---|---|---|
| Findable | Persistent identifiers, rich metadata, searchable resources | Digital Object Identifiers (DOIs), descriptive titles with keywords, complete metadata fields [13] [15] |
| Accessible | Standardized retrieval protocols, clear access permissions, authentication if needed | Public components in repositories, README files with access instructions, "as open as possible, as closed as necessary" approach [13] [15] |
| Interoperable | Common formats, controlled vocabularies, community standards | Non-proprietary file formats (CSV, TXT, PDF/A), documented variables and units, ontologies and thesauri [13] [15] |
| Reusable | Clear licenses, detailed provenance, comprehensive documentation | Appropriate usage licenses, methodology protocols, version control, data dictionaries [13] [15] |

It is essential to recognize that FAIR does not necessarily mean "open." Data can be FAIR but not openly accessible if restrictions are necessary, while openly available data may lack sufficient documentation to be truly FAIR [13]. The emphasis is on machine-actionability—the capacity of computational systems to find, access, interoperate, and reuse data with minimal human intervention—which is crucial given the increasing volume, complexity, and creation speed of research data [12].
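Machine-actionability starts with metadata that software can inspect. As a minimal sketch, the Python below groups a handful of metadata fields under the four FAIR components and reports which ones a record lacks. The field names are assumptions chosen for illustration; they are not taken from any FAIR specification or repository schema.

```python
# Illustrative field names, loosely mapped onto the four FAIR components.
FAIR_FIELDS = {
    "findable": ["identifier", "title", "keywords"],
    "accessible": ["access_instructions"],
    "interoperable": ["file_format", "units"],
    "reusable": ["license", "provenance"],
}


def missing_fair_fields(record):
    """Map each FAIR component to the metadata fields that are absent or empty."""
    gaps = {}
    for component, fields in FAIR_FIELDS.items():
        missing = [name for name in fields if not record.get(name)]
        if missing:
            gaps[component] = missing
    return gaps


# Invented record with a placeholder DOI; the license is deliberately omitted.
record = {
    "identifier": "10.0000/example",
    "title": "Methanol decomposition over Pt/SiO2",
    "keywords": ["benchmark", "methanol"],
    "access_instructions": "See README",
    "file_format": "CSV",
    "units": "documented in data dictionary",
    "provenance": "fixed-bed reactor, protocol v1",
}
print(missing_fair_fields(record))
```

A record that passes such a check is not automatically FAIR; the check only catches missing fields, not poor documentation behind them.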

Platform Comparisons for FAIR Data Implementation

Researchers in catalysis have multiple options for implementing FAIR data practices, ranging from general-purpose repositories to domain-specific solutions. Table 2 compares key platforms and their applicability to catalysis research.

Table 2: Comparison of Platforms for FAIR Catalysis Data Management

| Platform | Primary Focus | Key FAIR Features | Catalysis-Specific Capabilities |
|---|---|---|---|
| NOMAD with Catalysis Plugin [11] | Domain-specific catalysis data | Structured data upload aligned with Voc4Cat vocabulary, built-in visualization, intuitive search | Specifically designed for catalytic reactions, catalyst materials, reaction conditions, and kinetic properties |
| Open Science Framework (OSF) [15] | General research project management | DOI generation, metadata fields, version control, license selection, component organization | Suitable for general catalysis research data with customizable metadata and components |
| CPEC Chemical Catalysis Database [16] | Experimental catalytic surfaces | Houses kinetic, thermodynamic, and characterization data from experiments and literature | Growing list of catalytic chemistries with experimentally measured parameters |
| Zenodo & Harvard Dataverse [13] | General-purpose repository | Persistent identifiers, metadata schemes, community standards | Broad applicability but lacks catalysis-specific structure |

The NOMAD platform's catalysis plugin (nomad_catalysis) represents a significant advancement as it enables the upload of structured experimental data and metadata with built-in visualization and alignment to the community-developed vocabulary Voc4Cat, ensuring long-term interpretability [11]. This domain-specific approach addresses the unique challenges of catalysis data, which often involves complex relationships between material properties, reaction conditions, and catalytic performance.

Experimental Protocols for Generating FAIR Catalysis Data

The "Clean Data" Approach for Catalytic Testing

High-quality FAIR data begins with rigorous experimental design. The "clean data" approach employs standardized procedures to account for the dynamic nature of catalysts during testing [5]. This methodology includes:

- Consistent Catalyst Activation: Implementing a rapid activation procedure under harsh reaction conditions to quickly bring catalysts into a steady state, typically over 48 hours, with temperature limits to minimize gas-phase reactions [5].

- Systematic Testing Protocol: Following activation with three sequential steps: (1) temperature variation, (2) contact time variation, and (3) feed variation with intermediate co-dosing, alkane/oxygen ratio changes, and water concentration adjustments [5].

- Comprehensive Characterization: Measuring numerous physicochemical parameters (e.g., via N₂ adsorption, XPS, in situ XPS) to capture properties under reaction conditions rather than just standard conditions [5].

This approach ensures consistent formation of active states and generates data suitable for identifying meaningful property-function relationships through AI analysis [5].

Catalyst Synthesis and Preparation Methods

Various methodologies exist for preparing catalyst layers, particularly in microreactor applications where the catalytic coating significantly influences performance [17]:

- Sol-Gel Method: Utilizing chemical precursor solutions or colloidal dispersions that undergo gelation to form thicker catalyst layers on reactor surfaces, followed by calcination treatment [17].

- Suspension Method: Dispersing pre-prepared catalyst materials in solution to form a sol, which is introduced into microreactors to form catalyst layers through drying processes [17].

- Bio-inspired Electroless Deposition and Layer-by-Layer Self-Assembly: Emerging strategies that offer potential for creating high-performance catalytic coatings with improved efficiency [17].

These synthesis approaches highlight the importance of detailed methodological documentation—a key aspect of reusability in FAIR data—as different preparation methods significantly impact catalytic activity and stability [17].

Visualization of FAIR Data Implementation Workflow

The following diagram illustrates the integrated workflow for implementing FAIR data principles throughout the catalysis research lifecycle, combining experimental and data management components:

[Figure: FAIR Data Implementation Workflow in Catalysis Research]

This workflow demonstrates how experimental processes in catalysis research integrate with FAIR data management practices to create a continuous cycle of knowledge generation and reuse. The red arrows highlight critical transition points where experimental data must be transformed into FAIR-compliant digital assets.

Essential Research Reagent Solutions for Catalysis Data Generation

The implementation of FAIR data standards requires not only data management infrastructure but also consistent experimental materials and protocols. Table 3 details key research reagents and their functions in generating reliable, comparable catalysis data.

Table 3: Essential Research Reagent Solutions for Catalytic Testing

| Reagent Category | Specific Examples | Research Function | FAIR Data Consideration |
|---|---|---|---|
| Redox-Active Elements | Vanadium, Manganese compounds [5] | Serve as catalytic centers for oxidation reactions | Document precursor sources, purity, and synthesis conditions |
| Catalyst Supports | Al₂O₃, SiO₂, TiO₂, zeolites, carbon materials [17] | Provide high surface area, thermal stability, and mechanical strength | Specify surface area, pore structure, and pretreatment methods |
| Metallic Catalysts | Nickel, Copper, Platinum, Palladium [17] | Enable various hydrogenation/dehydrogenation reactions | Record dispersion metrics, particle size, and loading percentages |
| Sol-Gel Precursors | Al[OCH(CH₃)₂]₃, Ni(NO₃)₂·6H₂O, La(NO₃)₃·6H₂O [17] | Form thicker catalyst layers through gelation processes | Document aging times, temperatures, and calcination conditions |
| Polymer Stabilizers | Polyvinyl alcohol (PVA), Hydroxyethyl cellulose [17] | Enhance suspension stability in catalyst deposition | Note concentrations and molecular weights in methodology |

The careful documentation of these research reagents—including sources, preparation methods, and characterization parameters—is essential for ensuring the reusability of catalysis data, enabling other researchers to reproduce and build upon published results.

Impact and Future Perspectives

The implementation of FAIR data standards in catalysis research enables the identification of key "materials genes": physicochemical descriptive parameters correlated with catalytic performance [5]. By applying symbolic regression AI analysis to consistent, high-quality datasets, researchers can identify nonlinear property-function relationships that reflect the intricate interplay of processes governing catalytic behavior, such as local transport, site isolation, surface redox activity, adsorption, and dynamic restructuring of the material under reaction conditions [5].

This data-centric approach indicates the most relevant characterization techniques for catalyst design and provides "rules" on how catalyst properties may be tuned to achieve desired performance [5]. As the field progresses, the phase boundary perspective in catalyst design—which suggests that optimal catalysts exist at boundaries between phases, compositions, or coverages—can be more systematically explored through FAIR data principles that enable the accumulation and analysis of diverse experimental results [14].

The development of specialized infrastructure like the NOMAD catalysis plugin represents a significant step toward a new era for open and FAIR data in catalysis research [11]. By facilitating efficient data sharing with intuitive search functionality, such tools help researchers quickly identify relevant catalytic reactions, catalyst materials, reaction conditions, and kinetic properties, ultimately supporting more efficient and reproducible catalyst development.

Benchmarking Database of Experimental Heterogeneous Catalysis

The field of heterogeneous catalysis research has evolved significantly over the past century, with continuous advancements in material synthesis, characterization techniques, and fundamental understanding of reaction mechanisms. Despite this progress, a persistent challenge has been the lack of standardized benchmarks for comparing catalytic performance across different laboratories and studies. The ability to quantitatively compare newly evolving catalytic materials and technologies is hindered by the lack of widely available catalytic data collected in a consistent manner. While certain catalytic chemistries have been widely studied across decades of scientific research, the quantitative utilization of available literature information remains challenging due to variability in reaction conditions, types of reported data, and reporting procedures. This limitation has prompted the development of CatTestHub, an experimental catalysis database that seeks to standardize data reporting across heterogeneous catalysis, providing an open-access community platform for benchmarking [6] [3].

The concept of benchmarking dates back centuries. Its specifics vary by field, but it ultimately represents the evaluation of a quantifiable observable against an external standard. Access to a reliable benchmark lets individual contributors assess their own measurements relative to an agreed-upon standard, helping contextualize the relevance of their results. For heterogeneous catalysis, a benchmarking comparison can take multiple forms: determining if a newly synthesized catalyst is more active than existing predecessors, verifying if a reported turnover rate is free of corrupting information such as diffusional limitations, or assessing if the application of an energy source has accelerated a catalytic cycle [6].

CatTestHub: Database Architecture and Design Principles

Core Design Philosophy

CatTestHub was designed with specific architectural principles to ensure its utility and longevity within the catalysis research community. The database architecture was informed by the FAIR principles (findability, accessibility, interoperability, and reuse), helping ensure relevance to members of the heterogeneous catalysis community at large. A spreadsheet-based database format was implemented, offering ease of findability while curating key reaction condition information required for reproducing reported experimental measures of catalytic activity, along with details of reactor configurations in which experimental measures were performed [6].

To allow reported macroscopic measures of catalytic activity to be contextualized on the nanoscopic scale of active sites, structural characterization was provided for each unique catalyst material. In both the structural and functional portions of CatTestHub, metadata was used where appropriate to provide context for the reported data. Unique identifiers in the form of digital object identifiers (DOI), ORCID, and funding acknowledgements are reported for all data, providing electronic means for accountability, intellectual credit, and traceability. The database is available online as a spreadsheet, giving users ease of access and the ability to download and reuse data, while its common format and structure support longevity [6].
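Identifiers only support traceability if they are entered correctly. As one illustration, an ORCID iD carries a final check character computed with the ISO 7064 MOD 11-2 algorithm, so a transcription error can often be caught automatically. The Python sketch below implements that check. It is an illustrative helper, not part of CatTestHub, and it validates only the checksum, not whether the iD is actually registered.

```python
def orcid_check_character(base_digits):
    """Compute the ISO 7064 MOD 11-2 check character for the first 15 digits."""
    total = 0
    for ch in base_digits:
        total = (total + int(ch)) * 2
    result = (12 - total % 11) % 11
    return "X" if result == 10 else str(result)


def is_valid_orcid(orcid):
    """Return True if a 16-character ORCID iD has a consistent check character."""
    compact = orcid.replace("-", "").upper()
    base = compact[:15]
    if len(compact) != 16 or not (base.isascii() and base.isdigit()):
        return False
    return orcid_check_character(base) == compact[15]


# 0000-0002-1825-0097 is a widely used sample iD.
print(is_valid_orcid("0000-0002-1825-0097"))
print(is_valid_orcid("0000-0002-1825-0098"))  # last digit altered
```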

Data Curation and Organization

The database was designed to house experimentally measured chemical rates of reaction, material characterization, and reactor configuration relevant to chemical reaction turnover on catalytic surfaces. The curation largely involved the intentional collection of observable macroscopic quantities measured under well-defined reaction conditions, detailed descriptions of reaction conditions and parameters, supported by characterization information for the various catalysts investigated. This approach ensures that the database contains sufficient information for researchers to reproduce experimental findings and make meaningful comparisons between different catalytic systems [6].

The organization of CatTestHub addresses a critical gap in previous benchmarking attempts. Prior efforts at benchmarking in experimental heterogeneous catalysis have been met with limited success. In the early 1980s, catalyst manufacturers made available materials with established structural and functional characterization, providing researchers with a common material for comparing experimental measurements. However, despite the availability of common materials, no standard procedure or condition at which catalytic activity is measured has been implemented. CatTestHub represents a comprehensive solution that addresses both material standardization and measurement consistency [6].

Experimental Methodologies and Benchmarking Protocols

Probe Reactions and Catalyst Classes

Currently, CatTestHub hosts two primary classes of catalysts: metal catalysts and solid acid catalysts. For metal catalysts, the decomposition of methanol and of formic acid has been used as benchmarking chemistry. For solid acid catalysts, the Hofmann elimination of alkylamines over aluminosilicate zeolites has been used as a benchmark. These reactions were selected based on their well-understood mechanisms and relevance to broader catalytic applications [6].

The methanol decomposition reaction over metal catalysts serves as a particularly valuable benchmark system because it provides insights into the ability of metals to catalyze the cleavage of C-H and O-H bonds, while simultaneously offering information about the catalyst's selectivity toward various decomposition pathways. Similarly, the Hofmann elimination reaction on solid acid catalysts probes the strength and density of acid sites, which are critical parameters for numerous industrial catalytic processes including cracking, isomerization, and alkylation reactions [6].

Standardized Experimental Workflow

The experimental workflow for catalytic benchmarking in CatTestHub follows a systematic approach that ensures data consistency and reproducibility across different research groups. The process begins with catalyst selection and characterization, followed by carefully controlled reaction conditions, and concludes with comprehensive data analysis and reporting.

Materials and Reagent Specifications

The experimental protocols in CatTestHub require precise specification of materials and reagents to ensure reproducibility. For methanol decomposition studies, high-purity methanol (>99.9%) is typically used, along with high-purity nitrogen and hydrogen (99.999%) for carrier and reaction gases. Various metal catalysts are obtained from commercial sources including Pt/SiO₂, Pt/C, Pd/C, Ru/C, Rh/C, and Ir/C. Similarly, for zeolite catalysts, specific framework types such as MFI and FAU are utilized with standardized preparation methods [6].

The attention to material specifications addresses a critical aspect of catalytic benchmarking: the need for well-characterized and abundantly available catalysts. These can be sourced through commercial vendors (e.g., Zeolyst, Sigma-Aldrich), consortia of researchers, or through reliable synthesis protocols that can be reproduced by individual researchers. Combined with well-defined reaction conditions, this approach helps ensure that benchmarked rates of catalytic turnover are free of other influences such as catalyst deactivation, heat/mass transfer limitations, and thermodynamic constraints [6].

Comparative Performance Analysis of Catalytic Systems

Metal Catalyst Performance in Methanol Decomposition

The performance of various metal catalysts for methanol decomposition has been systematically evaluated and cataloged in CatTestHub. The following table summarizes the key performance metrics for representative metal catalysts:

Table 1: Metal Catalyst Performance in Methanol Decomposition

| Catalyst | Support | Temperature Range (°C) | Turnover Frequency (TOF) (s⁻¹) | Primary Products | Stability Profile |
|---|---|---|---|---|---|
| Pt/SiO₂ | Silica | 200-300 | 0.45-1.20 | CO, H₂ | High stability (>100 h) |
| Pt/C | Carbon | 200-300 | 0.38-1.05 | CO, H₂ | Moderate stability (>50 h) |
| Pd/C | Carbon | 250-350 | 0.25-0.75 | CO, H₂, CH₄ | Deactivation observed |
| Ru/C | Carbon | 200-300 | 0.15-0.45 | CO, H₂, CH₄ | Stable with time |
| Rh/C | Carbon | 200-300 | 0.35-0.95 | CO, H₂ | High stability |
| Ir/C | Carbon | 250-350 | 0.20-0.60 | CO, H₂ | Moderate stability |

The data show significant differences in catalytic performance across metal systems, with platinum-based catalysts generally exhibiting higher turnover frequencies than the other metals. The product distribution also varies: some catalysts produce primarily CO and H₂, while others (Pd/C and Ru/C) show a tendency toward methane formation, indicating different reaction pathways and selectivity patterns [6].
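To show how tabulated ranges like these might be used, the Python sketch below computes a turnover frequency as the molar reaction rate divided by the moles of active sites, then reports whether it falls below, within, or above the tabulated range for the same catalyst. The TOF ranges are copied from the table above. The function names and the example rate and site count are invented for illustration.

```python
# TOF ranges (s^-1) copied from the methanol decomposition table above.
TOF_RANGES = {
    "Pt/SiO2": (0.45, 1.20),
    "Pt/C": (0.38, 1.05),
    "Pd/C": (0.25, 0.75),
    "Ru/C": (0.15, 0.45),
    "Rh/C": (0.35, 0.95),
    "Ir/C": (0.20, 0.60),
}


def turnover_frequency(rate_mol_per_s, active_sites_mol):
    """TOF in s^-1: moles converted per second per mole of active sites."""
    if active_sites_mol <= 0:
        raise ValueError("active_sites_mol must be positive")
    return rate_mol_per_s / active_sites_mol


def compare_to_benchmark(catalyst, tof):
    """Return 'below', 'within', or 'above' relative to the tabulated range."""
    low, high = TOF_RANGES[catalyst]
    if tof < low:
        return "below"
    if tof > high:
        return "above"
    return "within"


# Invented measurement: 2.0e-6 mol/s converted over 2.5e-6 mol of surface sites.
tof = turnover_frequency(2.0e-6, 2.5e-6)
print(f"TOF = {tof:.2f} s^-1, {compare_to_benchmark('Pt/SiO2', tof)} the Pt/SiO2 range")
```

Each tabulated range spans a temperature window, so such a comparison is only meaningful for measurements taken at comparable temperatures and conditions.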

Solid Acid Catalyst Performance in Hofmann Elimination

Zeolite catalysts have been evaluated for the Hofmann elimination of alkylamines, providing insights into their acid site strength and distribution. The following table compares the performance of different zeolite frameworks for this model reaction:

Table 2: Zeolite Catalyst Performance in Hofmann Elimination

| Zeolite Framework | SiO₂/Al₂O₃ Ratio | Temperature (°C) | Amine Conversion (%) | Alkene Selectivity (%) | Acid Site Density (mmol/g) |
|---|---|---|---|---|---|
| H-ZSM-5 (MFI) | 30 | 250 | 85-92 | 88-94 | 0.45-0.52 |
| H-ZSM-5 (MFI) | 50 | 250 | 78-85 | 90-95 | 0.32-0.38 |
| H-Y (FAU) | 5.1 | 200 | 65-75 | 82-88 | 0.85-0.95 |
| H-Y (FAU) | 12 | 200 | 55-65 | 85-90 | 0.45-0.55 |
| H-Beta (BEA) | 25 | 225 | 70-80 | 86-92 | 0.40-0.48 |
| H-MOR (MOR) | 20 | 275 | 80-88 | 80-86 | 0.50-0.58 |

The data demonstrates clear structure-activity relationships, with the zeolite framework type and silica-alumina ratio significantly influencing both conversion and selectivity. The higher acid site density in low-silica zeolites correlates with increased activity but does not always translate to improved selectivity, highlighting the complex interplay between acid strength, site accessibility, and reaction pathway specificity [6].
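One simple way to weigh conversion against selectivity is the alkene yield, the product of the two. The Python sketch below bounds the yield for two rows of the table above by multiplying the range endpoints. This assumes conversion and selectivity vary independently within their reported ranges, which the source does not state, and the helper name is illustrative.

```python
def yield_bounds(conversion_range, selectivity_range):
    """Return (low, high) alkene yield in percent from two percent ranges."""
    conv_lo, conv_hi = conversion_range
    sel_lo, sel_hi = selectivity_range
    return conv_lo * sel_lo / 100, conv_hi * sel_hi / 100


# (conversion %, selectivity %) ranges copied from the Hofmann elimination table above.
ZEOLITES = {
    "H-ZSM-5 (MFI), SiO2/Al2O3 = 30": ((85, 92), (88, 94)),
    "H-Y (FAU), SiO2/Al2O3 = 5.1": ((65, 75), (82, 88)),
}

for name, (conversion, selectivity) in ZEOLITES.items():
    low, high = yield_bounds(conversion, selectivity)
    print(f"{name}: alkene yield {low:.1f}-{high:.1f} %")
```

Because the rows were measured at different temperatures, the resulting yields describe each entry on its own and are not a like-for-like ranking of the frameworks.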

Advanced Catalyst Design Strategies and Computational Integration

Descriptor-Based Approaches for Catalyst Design

Contemporary catalyst design increasingly employs descriptor-based approaches where key parameters such as adsorption energies or transition state energies serve as proxies for estimating catalytic performance. Many studies are based on the volcano-plot paradigm, wherein the binding strength of one (or a few) simple adsorbates is used to estimate the rate, with the idea that the binding strength should be neither too strong nor too weak. For example, volcano plots have been developed for NH₃ electrooxidation based on bridge- and hollow-site N adsorption energies, correctly predicting that Pt₃Ir and Ir are more active than Pt [18].
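The volcano idea can be made concrete with a toy model. In the Python sketch below, log-activity falls off linearly on both sides of an optimum binding energy, and candidates are ranked by their position on that curve. The peak position, slope, candidate labels, and binding energies are all invented for illustration; they do not come from the cited NH₃ electrooxidation study or from any real calculation.

```python
def volcano_activity(binding_energy_eV, peak_eV=-0.5, slope=1.0):
    """Toy log-activity: highest at peak_eV, falling linearly on both branches."""
    return -slope * abs(binding_energy_eV - peak_eV)


def rank_candidates(binding_energies):
    """Sort candidate names from most to least active on the toy volcano."""
    return sorted(
        binding_energies,
        key=lambda name: volcano_activity(binding_energies[name]),
        reverse=True,
    )


# Hypothetical candidates: A binds too strongly, C too weakly, B sits near the peak.
candidates = {"A": -1.2, "B": -0.6, "C": 0.1}
print(rank_candidates(candidates))
```

Real volcano plots derive the two branches from microkinetic models or scaling relations rather than from a symmetric absolute-value function, so this sketch conveys only the qualitative "neither too strong nor too weak" logic.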

This approach has been successfully applied to discover novel heterogeneous catalysts for alkane dehydrogenation. For ethane dehydrogenation, the C and CH₃ adsorption energies were chosen as computationally facile descriptors. Through this approach, Ni₃Mo was identified as a promising catalyst and subsequently experimentally validated. The Ni₃Mo/MgO catalyst achieved an ethane conversion of 1.2%, three times the 0.4% conversion of Pt/MgO under the same reaction conditions, demonstrating the power of descriptor-based design strategies [18].

Meta-Analysis of Catalytic Literature Data

Advanced meta-analysis approaches have been developed to identify correlations between a catalyst's physico-chemical properties and its performance in particular reactions. This method unites literature data with textbook knowledge and statistical tools. Starting from a researcher's chemical intuition, a hypothesis is formulated and tested against the data for statistical significance. Iterative hypothesis refinement yields simple, robust, and interpretable chemical models [19].
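The hypothesis-testing step can be illustrated with a permutation test, one of several statistical tools that could fill this role; the source does not say which tests the authors used. The Python sketch below asks whether catalysts with a hypothesized property outperform those without it by more than random relabeling would explain. The performance values are synthetic.

```python
import random


def mean(values):
    return sum(values) / len(values)


def permutation_p_value(with_property, without_property, n_resamples=5000, seed=0):
    """One-sided p-value for mean(with_property) > mean(without_property)."""
    rng = random.Random(seed)
    observed = mean(with_property) - mean(without_property)
    pooled = list(with_property) + list(without_property)
    n_with = len(with_property)
    hits = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)  # randomly reassign the property labels
        difference = mean(pooled[:n_with]) - mean(pooled[n_with:])
        if difference >= observed - 1e-12:  # tolerance guards against float rounding
            hits += 1
    return (hits + 1) / (n_resamples + 1)


# Synthetic yields (%) for catalysts with and without a hypothesized property.
with_property = [20, 22, 25, 21, 24]
without_property = [10, 12, 11, 9, 13]
p = permutation_p_value(with_property, without_property)
print(f"p = {p:.4f}")
```

A small p-value supports keeping the hypothesis for the next refinement round, while a large one suggests the property does not separate good from poor performers in the data at hand.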

This approach has been successfully demonstrated for the oxidative coupling of methane (OCM) reaction. The analysis of 1802 distinct catalyst compositions revealed that only well-performing catalysts provide, under reaction conditions, two independent functionalities: a thermodynamically stable carbonate and a thermally stable oxide support. This insight, derived from statistical analysis of large datasets, provides guidance for the discovery of improved OCM catalysts and illustrates the power of data-driven approaches in catalysis research [19].

Research Reagent Solutions and Essential Materials

The experimental benchmarking of catalytic materials requires specific reagents and materials with carefully controlled properties. The following table details key research reagent solutions and their functions in catalytic testing:

Table 3: Essential Research Reagents for Catalytic Benchmarking

| Reagent/Material | Specification | Function in Testing | Commercial Sources |
|---|---|---|---|
| Methanol | >99.9% purity | Probe molecule for metal catalyst evaluation | Sigma-Aldrich |
| Formic Acid | High purity | Alternative probe for decomposition studies | Various suppliers |
| Alkylamines | Specific chain length | Probe molecules for acid site characterization | Various suppliers |
| Pt/SiO₂ | Well-dispersed | Reference metal catalyst | Sigma-Aldrich |
| H-ZSM-5 | Standardized SiO₂/Al₂O₃ | Reference acid catalyst | Zeolyst International |
| H-Y Zeolite | Standardized framework | Reference large-pore zeolite | Zeolyst International |
| Supported Metal Catalysts | Various metals on standardized supports | Benchmarking materials | Strem Chemicals, ThermoFisher |

The careful selection and specification of these materials ensures that experimental results are comparable across different laboratories and studies. The use of commercially available reference materials allows researchers to contextualize their findings against established benchmarks, facilitating more meaningful comparisons and accelerating catalyst development [6].

Reaction Pathways and Mechanisms

The catalytic reactions implemented in CatTestHub involve well-defined mechanisms that can be described as sequences of elementary steps. The methanol decomposition reaction on metal surfaces follows one such sequence, while the Hofmann elimination on solid acid catalysts proceeds through distinct acid-base mediated pathways.

The methanol decomposition pathway on metal surfaces typically begins with methanol adsorption, followed by sequential O-H and C-H bond cleavage steps, ultimately yielding CO and H₂ as products. The rate-determining step varies across different metal catalysts, with some metals favoring O-H bond cleavage while others are rate-limited by C-H bond activation. This fundamental understanding of reaction mechanisms enables more rational interpretation of the performance data cataloged in benchmarking databases [6].

Similarly, the Hofmann elimination on solid acid catalysts involves adsorption of alkylamines on Brønsted acid sites, followed by β-hydrogen abstraction, C-N bond cleavage, and eventual formation and desorption of alkene and ammonia products. The relative rates of these elementary steps, influenced by zeolite framework structure and acid site density, determine the overall activity and selectivity patterns observed in the benchmarking data [6].

CatTestHub represents a significant advancement in the field of experimental heterogeneous catalysis by providing a standardized platform for catalytic benchmarking. The database's design, informed by FAIR principles and community needs, addresses critical challenges in comparing catalytic performance across different studies and laboratories. Through the selection of appropriate probe reactions, comprehensive material characterization, and systematic reporting of kinetic information, CatTestHub provides a collection of catalytic benchmarks for distinct classes of active sites [6] [3].

The continued expansion of such benchmarking databases, coupled with advanced data analysis approaches including meta-analysis and descriptor-based design strategies, promises to accelerate catalyst discovery and development. As the catalysis research community increasingly adopts standardized benchmarking practices, the ability to quantitatively compare new catalytic materials and technologies will improve, ultimately advancing the field toward more efficient and sustainable chemical processes [6] [18] [19].

The integration of computational design with experimental validation, facilitated by reliable benchmarking data, represents a particularly promising direction for future catalysis research. As demonstrated by recent successes in descriptor-based catalyst design, the combination of fundamental understanding, computational prediction, and experimental benchmarking creates a powerful framework for discovering and optimizing next-generation catalytic materials [18].

Building Effective Catalysis Databases: Architecture and Implementation

In the field of experimental heterogeneous catalysis, the ability to quantitatively compare new catalytic materials and technologies is fundamental to scientific progress. However, this endeavor is often hindered by a critical challenge: the lack of widely available, consistently collected catalytic data [6]. While numerous catalytic chemistries have been studied for decades, the quantitative utility of this vast literature is compromised by significant variability in reaction conditions, types of reported data, and reporting procedures themselves [3]. This inconsistency makes it difficult to define a true "state-of-the-art" against which new catalytic activities can be verified [6].

The CatTestHub database emerges as a direct response to this challenge. It is an open-access, experimental catalysis database designed to standardize data reporting across heterogeneous catalysis, thereby providing a community-wide platform for benchmarking [6]. Its design is informed by the FAIR principles (Findability, Accessibility, Interoperability, and Reuse), ensuring its relevance and utility to the broader research community [6]. By offering a structured repository for well-characterized catalytic data, CatTestHub exemplifies the modern approach to database design that does not merely capture comprehensive data but makes it genuinely accessible and actionable for researchers, thus bridging the critical gap between data collection and scientific insight.

Core Design Principles for Accessible and Comprehensive Databases

Designing a database that successfully balances deep data capture with ease of use requires adherence to several foundational principles. These principles ensure the database is not only a repository of information but a robust tool for research.

- Understanding Data and Requirements: The first step involves a clear analysis of data requirements and defining objectives. This means understanding not just the immediate data needs but also anticipating future growth and usage patterns, ensuring the design can scale and adapt [20].

- Prioritizing Data Integrity and Consistency: The accuracy and reliability of data are paramount. This is achieved through:

  - Normalization: A technique to organize data into tables to minimize redundancy and dependency, which conserves storage and simplifies maintenance [20].

  - Constraints and Data Types: Using primary keys, foreign keys, and check constraints enforces relationship rules and validates data, while appropriate data types (e.g., dates, integers) ensure accuracy and optimize storage [20] [21].

- Ensuring Scalability and Performance: A well-designed database must handle increasing loads. Effective indexing strategies speed up data retrieval, while design techniques like partitioning and sharding help manage larger datasets gracefully [20].

- Implementing Security Measures: Protecting sensitive research data is crucial. This involves access control mechanisms, such as role-based permissions, and encryption for data both at rest and in transit [20]. Regular security audits and updates further mitigate risks [20].

These principles provide the framework for creating a database that is both a trustworthy source of information and a performant tool for the scientific community.
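
The integrity and performance principles above can be illustrated with a small relational sketch. This is a hypothetical schema, not CatTestHub's actual layout (CatTestHub is distributed as a spreadsheet); the table names, column names, and the `build_db` helper are assumptions made for illustration. It uses Python's standard `sqlite3` module to show normalization (catalysts stored once and referenced by key), primary and foreign keys, check constraints, and an index for common lookups.

```python
import sqlite3

# Hypothetical schema: all table and column names are illustrative assumptions.
SCHEMA = """
CREATE TABLE catalyst (
    catalyst_id INTEGER PRIMARY KEY,          -- primary key
    name        TEXT NOT NULL UNIQUE,         -- each catalyst is stored once
    supplier    TEXT
);

CREATE TABLE measurement (
    measurement_id INTEGER PRIMARY KEY,
    catalyst_id    INTEGER NOT NULL
                   REFERENCES catalyst(catalyst_id),      -- foreign key
    reaction       TEXT NOT NULL,
    temperature_k  REAL NOT NULL CHECK (temperature_k > 0),  -- check constraint
    tof_per_s      REAL NOT NULL CHECK (tof_per_s >= 0)
);

-- Index to speed up the typical "all data for catalyst X, reaction Y" query.
CREATE INDEX idx_measurement_lookup ON measurement (catalyst_id, reaction);
"""


def build_db(path=":memory:"):
    """Create the sketch database and return an open connection."""
    conn = sqlite3.connect(path)
    # SQLite only enforces foreign keys when this pragma is enabled.
    conn.execute("PRAGMA foreign_keys = ON")
    conn.executescript(SCHEMA)
    return conn
```

With this layout, inserting a measurement that points to a nonexistent catalyst, or one with a non-physical temperature, raises `sqlite3.IntegrityError` instead of silently storing inconsistent data.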

Comparative Analysis: CatTestHub as a Benchmarking Tool

The following table summarizes the key features of the CatTestHub database, comparing its approaches to data capture, accessibility, and design against traditional, often less structured, methods of data dissemination.

Table 1: Comparison of Database Design and Accessibility Features in Catalysis Benchmarking

| Feature | CatTestHub Database Approach | Traditional / Ad-hoc Data Approaches |
|---|---|---|
| Data Structure & Integrity | Structured spreadsheet format; data curated for minimal redundancy [6]. | Highly variable; often dispersed across publications in inconsistent formats [3]. |
| Data Accessibility | Open-access online platform (spreadsheet); simple format ensures longevity and ease of access [6]. | Locked in proprietary formats or behind paywalls; access can be difficult and non-uniform. |
| Metadata & Context | Rich metadata provided for reaction conditions and catalyst characterization; includes reactor configuration details [6]. | Often incomplete, requiring assumptions and making reproduction difficult. |
| FAIR Compliance | Explicitly designed around FAIR principles, enhancing findability and reuse [6]. | Rarely designed with FAIR principles as a primary goal. |
| Benchmarking Foundation | Aims to establish a community standard through repeated measurements on well-characterized catalysts [6] [3]. | Benchmarking is challenging due to variability in conditions and reporting. |
| Scalability & Community Role | Designed for growth through continuous community contributions [6]. | Static; limited to the data presented in a single publication or study. |

This comparison highlights CatTestHub's systematic methodology. It moves beyond simply being a data container to becoming a curated knowledge resource. By providing structural and contextual consistency, it enables reliable comparison—the very essence of benchmarking [6] [3]. Its simple, open-access spreadsheet format is a strategic choice to lower barriers to both contribution and use, thereby fostering community adoption and long-term viability [6].

Experimental Protocols: Methodologies for Catalytic Benchmarking

The integrity of the CatTestHub database is underpinned by standardized experimental protocols for its featured probe reactions. These detailed methodologies are critical for ensuring that the data captured is not only comprehensive but also reproducible and comparable across different laboratories. Below is a detailed protocol for one of its key benchmark reactions.

Methanol Decomposition over Metal Catalysts

This protocol outlines the experimental procedure for measuring the catalytic activity of metal catalysts (e.g., Pt, Pd, Ru supported on SiO₂ or C) for methanol decomposition, a key probe reaction in CatTestHub [6].

1. Materials and Reagents:

- Catalysts: Commercial metal catalysts (e.g., Pt/SiO₂, Pd/C) are procured from standard suppliers like Sigma-Aldrich or Strem Chemicals [6].

- Chemicals: Methanol (>99.9%, Sigma-Aldrich), Nitrogen (99.999%), Hydrogen (99.999%) [6].

- Equipment: Tubular packed-bed reactor, mass flow controllers for gases, liquid syringe pump for methanol delivery, online gas chromatograph (GC) for product analysis.

2. Experimental Workflow: The logical flow of the experimental process, from preparation to data analysis, is visualized in the following diagram.

3. Detailed Procedures:

- Catalyst Preparation: A precise mass of catalyst (typically 10-100 mg) is loaded into the reactor tube. The catalyst bed may be diluted with an inert material like quartz sand to manage heat and mass transfer effects.

- Reaction Conditions: The catalyst is first activated in situ, often under a hydrogen flow at elevated temperature. The methanol decomposition reaction is then conducted under standardized conditions, for example:

  - Temperature: Ramped or held at isothermal temperatures (e.g., 150-300°C).

  - Pressure: Atmospheric pressure.

  - Feed Composition: Methanol introduced via a carrier gas (H₂ or N₂) using a saturator or direct liquid injection.

  - Weight Hourly Space Velocity (WHSV): Controlled to ensure kinetic regime operation [6].

- Product Analysis: The reactor effluent is analyzed by an online GC equipped with a suitable column (e.g., Hayesep) and a detector (TCD or FID) to quantify products (H₂, CO, CO₂, dimethyl ether).

4. Data Processing and Validation:

- Kinetic Analysis: Reaction rates are calculated based on methanol conversion and product distribution.

- Validation Checks: Data is validated to be free of corrupting influences such as internal or external diffusional limitations and thermodynamic constraints. This often involves tests like varying catalyst particle size and flow rate [6].

- Turnover Frequency (TOF) Calculation: The ultimate output is the TOF (molecules per site per second), which requires an accurate measure of the number of active sites, often determined by chemisorption or other characterization techniques.
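
The data-processing steps above can be sketched as a few small functions. This is a minimal illustration under stated assumptions, not the CatTestHub procedure: it assumes differential (low-conversion) operation so that the rate can be approximated as inlet molar flow times conversion divided by catalyst mass, and it assumes the active-site density comes from a separate chemisorption measurement. All function and parameter names are hypothetical.

```python
def methanol_conversion(f_in_mol_s, f_out_mol_s):
    """Fractional conversion X = (F_in - F_out) / F_in from molar flows."""
    if f_in_mol_s <= 0:
        raise ValueError("inlet molar flow must be positive")
    return (f_in_mol_s - f_out_mol_s) / f_in_mol_s


def rate_per_gram(f_in_mol_s, conversion, catalyst_mass_g):
    """Mass-normalized rate in mol g^-1 s^-1.

    Assumes differential operation (low conversion), so r ~ F_in * X / m_cat.
    """
    if catalyst_mass_g <= 0:
        raise ValueError("catalyst mass must be positive")
    return f_in_mol_s * conversion / catalyst_mass_g


def turnover_frequency(rate_mol_g_s, sites_mol_per_g):
    """TOF in s^-1: moles converted per mole of active sites per second,
    which is numerically equal to molecules per site per second."""
    if sites_mol_per_g <= 0:
        raise ValueError("site density must be positive")
    return rate_mol_g_s / sites_mol_per_g


def whsv_per_hour(feed_mass_flow_g_h, catalyst_mass_g):
    """Weight hourly space velocity in h^-1: feed mass flow / catalyst mass."""
    if catalyst_mass_g <= 0:
        raise ValueError("catalyst mass must be positive")
    return feed_mass_flow_g_h / catalyst_mass_g
```

Keeping the site density as an explicit input makes the dependence of TOF on the characterization step visible: an error in the chemisorption-derived site count propagates directly into the reported TOF.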

The Scientist's Toolkit: Essential Research Reagent Solutions

The following table details key materials and reagents essential for conducting the benchmark catalytic experiments described in the CatTestHub database.

Table 2: Key Research Reagent Solutions for Catalytic Benchmarking Experiments

| Reagent/Material | Function in Experiment | Specific Example & Sourcing |
|---|---|---|
| Standard Reference Catalysts | Serves as the benchmark material for activity comparison; ensures consistency across different labs. | EuroPt-1, EUROCAT's EuroNi-1, World Gold Council standard Au catalysts [6]. |
| Probe Molecule Gases | High-purity gases used as reactants or carrier gases to avoid catalyst poisoning and ensure reproducible kinetics. | Hydrogen (99.999%), Nitrogen (99.999%) [6]. |
| Liquid Probe Molecules | High-purity liquid reactants used to study specific catalytic reactions and mechanisms. | Methanol (>99.9%, Sigma-Aldrich), Formic Acid [6]. |
| Supported Metal Catalysts | Commercial catalysts used to generate baseline activity data for metal-catalyzed reactions. | Pt/SiO₂ (Sigma-Aldrich 520691), Pt/C, Pd/C, Ru/C (Strem Chemicals) [6]. |
| Solid Acid Catalysts (Zeolites) | Standard zeolite materials used to benchmark acid-catalyzed reactions. | Zeolites with MFI and FAU frameworks from the International Zeolite Association [6]. |
| Characterization Gases | Gases used for quantifying the number of active sites on the catalyst surface. | High-purity H₂, CO, for pulse chemisorption experiments. |

Data Presentation and Visualization for Enhanced Accessibility

Effective data presentation is critical for transforming raw data into an accessible and interpretable format. CatTestHub's spreadsheet-based structure is a foundational step, but the principles of statistical visualization can be further applied to maximize clarity.

- The Design Plot Principle: The primary confirmatory visualization should "show the design" of the experiment [22]. This means the key dependent variable (e.g., Turnover Frequency) should be broken down by all the key experimental manipulations (e.g., catalyst type, temperature), exactly as they were designed in the experimental plan. This avoids the visual equivalent of p-hacking and provides a transparent first look at the estimated effects [22].

- Facilitating Comparison: Visual variables should be chosen to facilitate accurate comparison. Our visual system is better at comparing the positions of points or bars than comparing areas or colors [22]. For a catalysis database, this means using positional encodings (e.g., in a bar chart of TOF for different catalysts) is preferable to a color-coded heatmap for conveying the primary quantitative message.

- Adherence to Accessibility Standards: All visualizations, including charts and diagrams, must meet minimum color contrast ratio thresholds (at least 4.5:1 for normal text) as defined by WCAG guidelines [23] [24]. This ensures that information is perceivable by users with low vision or color vision deficiencies. The DOT scripts provided in this article use a color palette pre-verified for sufficient contrast.
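
The contrast threshold mentioned in the last point can be checked programmatically. The sketch below follows the WCAG definitions of relative luminance and contrast ratio for sRGB colors; the function names and the choice of six-digit hex strings as input are assumptions made for this example.

```python
def _linearize(channel_8bit):
    """Convert an 8-bit sRGB channel to its linear value (WCAG definition)."""
    c = channel_8bit / 255.0
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color):
    """Relative luminance of a color given as '#RRGGBB' or 'RRGGBB'."""
    h = hex_color.lstrip("#")
    if len(h) != 6:
        raise ValueError("expected a six-digit hex color")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(color_a, color_b):
    """WCAG contrast ratio (L_lighter + 0.05) / (L_darker + 0.05); range 1 to 21."""
    l_a, l_b = relative_luminance(color_a), relative_luminance(color_b)
    lighter, darker = max(l_a, l_b), min(l_a, l_b)
    return (lighter + 0.05) / (darker + 0.05)


def passes_normal_text(foreground, background, threshold=4.5):
    """True if the pair meets the 4.5:1 threshold for normal text."""
    return contrast_ratio(foreground, background) >= threshold
```

A check like this can be run over every text and background pair in a chart palette before a figure is published.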

The following diagram illustrates the high-level architecture of a benchmarking database like CatTestHub, showing how different components interact to balance comprehensive data capture with user accessibility.

The design philosophy exemplified by CatTestHub—one that deliberately balances comprehensive data capture with user-centric accessibility—provides a powerful strategic advantage in experimental heterogeneous catalysis and beyond. By implementing a structure that is both rigorous in its data integrity and simple in its accessibility, it transforms a collection of data points into a true community resource.

This approach directly supports the core tenets of modern science: reproducibility, collaboration, and accelerated discovery. An accessible database lowers the barrier for researchers to validate their findings against established benchmarks and to build upon the work of others with confidence. As the volume and complexity of scientific data continue to grow, the principles of FAIR data management and thoughtful database design will become increasingly critical. Investing in such systems is not merely an investment in data management, but an investment in the very infrastructure of scientific progress.

The field of experimental heterogeneous catalysis is undergoing a profound transformation, driven by the growing power of data-centric research and artificial intelligence. However, the ability to quantitatively compare new catalytic materials and technologies has been historically hindered by inconsistent data reporting across research studies [6]. Variability in reported reaction conditions, material characterization data, and reactor configurations makes it difficult to validate new catalytic claims against established benchmarks or to aggregate data for machine learning applications [5]. This reproducibility challenge spans multiple dimensions: minor variations in synthetic procedures can significantly alter catalyst properties [25], differences in testing protocols affect kinetic measurements [6], and insufficient reporting of reactor configurations prevents proper interpretation of mass and heat transport effects [7]. In response to these challenges, the catalysis research community has developed new standardized reporting frameworks, benchmarking databases, and data extraction tools that collectively aim to establish FAIR (Findable, Accessible, Interoperable, and Reusable) data principles as the foundation for future catalysis research [6] [9].

Standardized Reporting Frameworks and Guidelines

Comprehensive Reporting Recommendations

Recent community-driven initiatives have established detailed guidelines for reporting catalytic research to ensure reproducibility. These recommendations address the full experimental workflow from catalyst synthesis to reactivity testing.

Table 1: Essential Reporting Parameters for Reproducible Catalyst Synthesis

| Category | Specific Parameters to Report | Impact on Reproducibility |
|---|---|---|
| Reagent Preparation | Purity, lot numbers, supplier, contamination checks, pretreatment history of supports | Residual impurities (S, Na) can poison active sites; purity variations affect nanoparticle synthesis [25] |
| Synthesis Procedure | Temperature, concentration, pH, mixing time/rate, order of addition, aging time | Mixing time during deposition precipitation affects metal particle size distribution [25] |
| Post-Treatment | Drying conditions, calcination temperature/atmosphere/ramp rate, reduction conditions | Activation procedure (fluidized vs. fixed bed) creates different active species in Phillips catalysts [25] |
| Storage Conditions | Duration, atmosphere, container type | TiO₂ surfaces accumulate atmospheric carboxylic acids; ppb-level H₂S exposure deactivates Ni catalysts [25] |
| Characterization | Minimum characterization set: surface area, elemental analysis, crystallographic structure | Enables confirmation of successful replication and comparison to benchmark materials [25] |

For reactor configuration and kinetic testing, critical parameters include full reactor geometry, catalyst bed dimensions, dilution ratios, thermocouple placement and accuracy, gas flow rates and controllers, pressure regulation, analytical methods and calibration, and demonstrated absence of transport limitations [25] [7]. The reporting of observed phenomena during experiments—such as color changes, precipitation rates, or unexpected results—is equally valuable for reproducibility but often omitted from formal publications [25].
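
One lightweight way to act on such a checklist is to test each submitted record for missing entries before it is accepted. The sketch below is a hypothetical completeness check: the field names are assumptions derived from the parameters listed above, not an established schema from the cited guidelines.

```python
# Hypothetical field names, one per reporting parameter listed in the text.
REQUIRED_REACTOR_FIELDS = (
    "reactor_geometry",
    "catalyst_bed_dimensions",
    "dilution_ratio",
    "thermocouple_placement",
    "thermocouple_accuracy",
    "gas_flow_rates",
    "pressure_regulation",
    "analytical_method",
    "calibration",
    "transport_limitation_check",
)


def missing_fields(record):
    """Return the required fields that are absent or blank in a record (dict)."""
    missing = []
    for field in REQUIRED_REACTOR_FIELDS:
        value = record.get(field)
        # Treat None and whitespace-only strings as "not reported".
        if value is None or (isinstance(value, str) and not value.strip()):
            missing.append(field)
    return missing
```

An empty return value means every listed parameter was reported; it says nothing about whether the reported values are correct, which still requires expert review.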

Machine-Readable Protocol Standards