Catalytic Performance Benchmarking: Essential Protocols for Accelerated Research and Drug Development

Abstract

This article provides a comprehensive guide to catalytic performance benchmarking, tailored for researchers and drug development professionals. It explores the foundational principles of community-based benchmarking and its critical role in ensuring reproducible, fair, and relevant catalyst assessments. The scope extends from establishing standard metrics for activity, selectivity, and stability to the application of advanced methodologies including AI-driven platforms, experimental design, and standardized databases. It further addresses common troubleshooting scenarios, optimization strategies for predictive modeling, and robust frameworks for the validation and comparative analysis of catalytic data, ultimately serving as a key resource for accelerating discovery in biomedical and clinical research.

The Foundations of Catalytic Benchmarking: Principles, Standards, and Community Initiatives

Catalytic benchmarking is a systematic process for evaluating and comparing the performance of catalysts against established standards. The core purpose is to provide a rigorous, reproducible framework that enables researchers to contextualize new catalytic findings against a validated baseline. In the field of heterogeneous catalysis, defining the "state-of-the-art" has remained challenging without community-wide standards for activity verification [1]. The practice of benchmarking extends beyond simple performance comparison; it establishes metrology for catalytic turnover, helping to distinguish genuine catalytic acceleration from artifacts such as diffusional limitations or catalyst deactivation [1].

The fundamental need for catalytic benchmarking stems from the proliferation of catalytic materials and activation strategies. New catalyst compositions are continuously emerging, while existing catalysts are being enhanced through novel energetic stimuli including non-thermal plasma, electrical charge, electric fields, strain, or light [1]. Without standardized benchmarking protocols, claims of enhanced activity remain difficult to verify independently. Community-driven benchmarking addresses this challenge through open-access data sharing and standardized testing methodologies aligned with FAIR principles (Findability, Accessibility, Interoperability, and Reuse) [1].

Community-Driven Benchmarking Frameworks

Established Catalytic Benchmarking Initiatives

Several organized efforts have emerged to standardize catalytic performance assessment. These initiatives provide structured frameworks for comparing catalytic data across different laboratories and research groups, as summarized in Table 1.

Table 1: Community-Driven Catalytic Benchmarking Platforms

| Platform Name | Scope & Focus | Key Features | Reference Materials |
|---|---|---|---|
| CatTestHub | Experimental heterogeneous catalysis; metal and solid acid catalysts | Spreadsheet-based database; structural & functional characterization; reactor configuration details | Pt/SiO₂, Pd/C, Ru/C, H-ZSM-5 zeolite [1] |
| JARVIS-Leaderboard | Broad materials design methods (AI, electronic structure, force-fields, quantum computation, experiments) | Open-source platform; multiple data modalities (structures, images, spectra, text); 274 benchmarks | Various computational and experimental methods [2] |
| CatBench | Machine learning interatomic potentials for adsorption energy predictions | Focus on computational catalysis; benchmarking framework for ML potentials | Catalyst screening datasets [3] |
| Historical Standards | Early catalyst benchmarking efforts | Commercially available reference catalysts | EuroPt-1, EuroNi-1, World Gold Council catalysts [1] |

The CatTestHub database exemplifies modern approaches to experimental benchmarking, housing data on catalytic reaction rates, material characterization, and reactor configurations [1]. Its design emphasizes curation of macroscopic quantities measured under well-defined reaction conditions, supported by comprehensive characterization data for various catalysts. Similarly, the JARVIS-Leaderboard provides an extensive benchmarking platform spanning multiple methodologies, from artificial intelligence to electronic structure calculations and experimental measurements [2]. This platform addresses the critical need for reproducibility in materials science, where more than 70% of research has been shown to be non-reproducible according to some estimates [2].

Benchmarking Workflow and Database Architecture

A standardized workflow is essential for generating comparable catalytic benchmarking data. The process begins with well-characterized, widely available catalysts, proceeds through controlled activity measurements under agreed-upon conditions, and culminates in data sharing through accessible repositories.

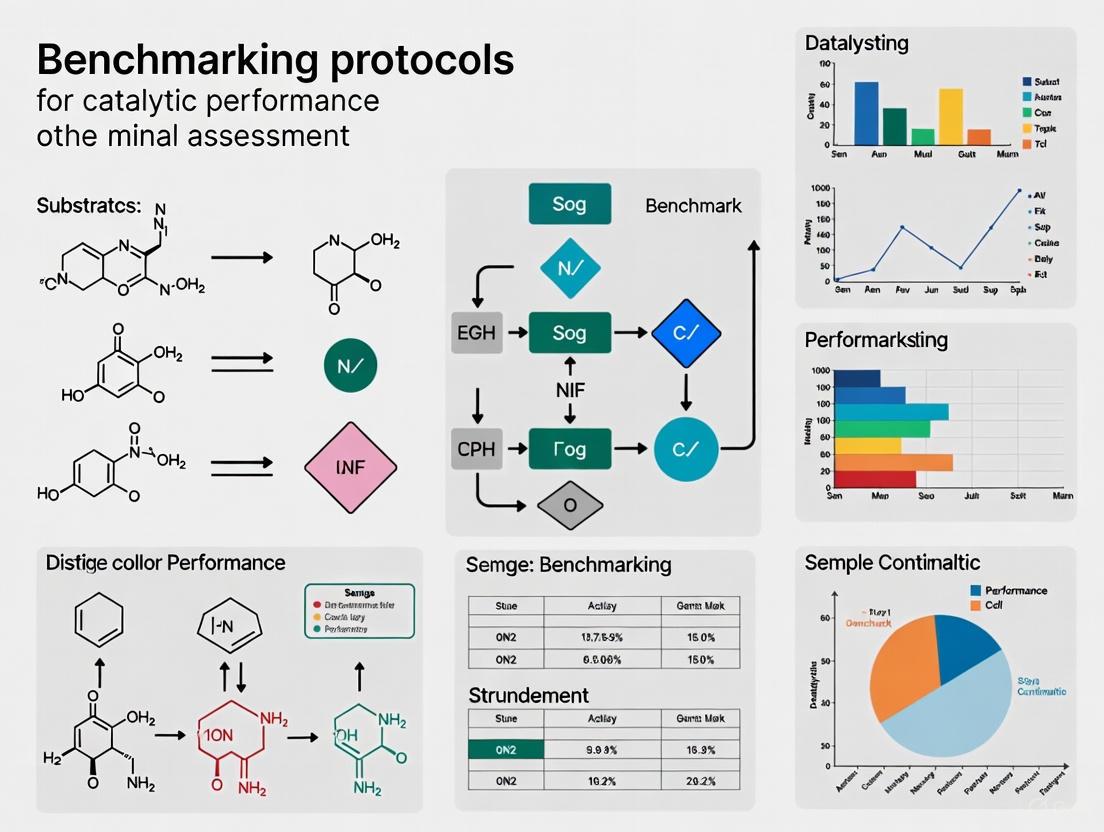

Diagram 1: Catalytic benchmarking workflow showing the community-driven process from catalyst selection to established benchmark.

The database architecture for catalytic benchmarking must balance comprehensive data capture with accessibility. CatTestHub implements a spreadsheet-based format to ensure long-term accessibility and ease of use, while containing sufficient detail to enable experimental reproduction [1]. This includes reaction condition parameters, catalyst characterization data, reactor configuration details, and critical metadata for traceability. Each data entry is linked to unique identifiers such as digital object identifiers (DOI) and researcher ORCIDs to ensure accountability and proper attribution [1].
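To make the idea of a traceable database entry concrete, the following is a minimal sketch of how one record might be represented in Python. The class name, field names, and the placeholder DOI are hypothetical and are not taken from the CatTestHub schema; the sketch only illustrates linking measured quantities to reaction conditions and to DOI/ORCID identifiers.

```python
from dataclasses import dataclass, field, asdict


@dataclass
class BenchmarkEntry:
    """One hypothetical benchmark record; all field names are illustrative."""
    catalyst: str
    reaction: str
    temperature_c: float
    pressure_atm: float
    rate_mol_per_g_h: float
    reactor_type: str
    doi: str      # identifier of the source publication or dataset
    orcid: str    # identifier of the contributing researcher
    characterization: dict = field(default_factory=dict)

    def missing_fields(self) -> list:
        """Return the names of text fields that were left empty."""
        return [
            name for name, value in asdict(self).items()
            if isinstance(value, str) and not value.strip()
        ]


entry = BenchmarkEntry(
    catalyst="5% Pt/SiO2",
    reaction="methanol decomposition",
    temperature_c=200.0,
    pressure_atm=1.0,
    rate_mol_per_g_h=0.35,
    reactor_type="fixed-bed",
    doi="10.0000/placeholder",  # placeholder string, not a real DOI
    orcid="",                    # deliberately left blank for the check below
)
print(entry.missing_fields())
```

A check of this kind could be run before an entry is accepted, so that records without attribution are flagged rather than silently stored.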

Experimental Protocols for Catalytic Benchmarking

Standard Catalyst Systems and Test Reactions

Effective benchmarking requires well-defined catalyst systems and representative test reactions. Established benchmark catalysts include commercially available materials such as Pt/SiO₂, Pd/C, Ru/C, and standardized zeolites (H-ZSM-5) [1]. These materials provide consistent baseline performance for comparison.

For metal-catalyzed reactions, methanol decomposition and formic acid decomposition serve as valuable benchmark reactions due to their sensitivity to catalyst properties and relative simplicity [1]. For solid acid catalysts, the Hofmann elimination of alkylamines over aluminosilicate zeolites provides a reliable probe reaction [1]. These reactions exhibit well-understood mechanisms and respond predictably to catalyst variations, making them ideal for benchmarking purposes.

Table 2: Experimental Catalytic Benchmarking Data for Methanol Decomposition

| Catalyst | Temperature (°C) | Methanol Conversion (%) | Reaction Rate (mol/g·h) | Turnover Frequency (h⁻¹) |
|---|---|---|---|---|
| 5% Pt/SiO₂ | 200 | 87.5 | 0.35 | 14.2 |
| 5% Pd/C | 200 | 23.1 | 0.09 | 3.7 |
| 5% Ru/C | 200 | 10.5 | 0.04 | 1.7 |
| 5% Rh/C | 200 | 15.8 | 0.06 | 2.5 |
| 5% Ir/C | 200 | 8.9 | 0.04 | 1.4 |

Table 3: Experimental Catalytic Benchmarking Data for Formic Acid Decomposition

| Catalyst | Temperature (°C) | Formic Acid Conversion (%) | Reaction Rate (mol/g·h) | Turnover Frequency (h⁻¹) |
|---|---|---|---|---|
| 5% Pt/SiO₂ | 150 | 95.2 | 0.41 | 16.8 |
| 5% Pd/C | 150 | 44.7 | 0.19 | 7.8 |
| 5% Ru/C | 150 | 28.9 | 0.12 | 5.1 |
| 5% Rh/C | 150 | 35.4 | 0.15 | 6.2 |
| 5% Ir/C | 150 | 18.3 | 0.08 | 3.2 |

Protocol: Methanol Decomposition Over Metal Catalysts

Materials and Equipment

- Catalyst Materials: Commercially sourced metal catalysts (e.g., 5% Pt/SiO₂ from Sigma Aldrich 520691; 5% Pd/C from Strem Chemicals 7440-05-03; 5% Ru/C from Strem Chemicals 7440-18-8) [1]

- Chemicals: Methanol (>99.9%, Sigma Aldrich 34860-1L-R) [1]

- Gases: Nitrogen (99.999%), Hydrogen (99.999%) for pretreatment and carrier gas [1]

- Equipment: Fixed-bed reactor system with temperature control, online gas chromatograph for product analysis

Catalyst Pretreatment Procedure

- Load 50-100 mg of catalyst into the fixed-bed reactor

- Purge the system with inert gas (N₂) at room temperature for 15 minutes

- Program the furnace to increase temperature to 300°C at a ramp rate of 5°C/min

- Switch to hydrogen flow (50 mL/min) at 300°C and maintain for 2 hours for catalyst reduction

- Cool the catalyst to the target reaction temperature (200°C) under hydrogen flow

- Switch to reaction feed conditions

Reaction Testing Protocol

- Prepare methanol feed by saturating carrier gas (N₂) with methanol vapor at 0°C

- Set total flow rate to achieve a weight hourly space velocity (WHSV) of 2.0 h⁻¹

- Maintain reactor at 200°C and 1 atm pressure

- Allow system to stabilize for 30 minutes before data collection

- Collect product stream samples at 30-minute intervals for analysis

- Analyze products using gas chromatography with flame ionization detector (GC-FID)

- Continue testing for a minimum of 4 hours to verify steady-state performance
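The WHSV setting in the protocol above fixes the methanol feed once the catalyst mass is known. The sketch below assumes WHSV is defined on the methanol mass flow alone (not the total gas flow); that definition, the function name, and the 100 mg loading used in the example are assumptions for illustration.

```python
METHANOL_MOLAR_MASS = 32.04  # g/mol


def methanol_feed_for_whsv(whsv_per_h: float, catalyst_mass_g: float):
    """Return (mass flow in g/h, molar flow in mol/h) of methanol.

    Assumes WHSV = methanol mass flow / catalyst mass.
    """
    if whsv_per_h <= 0 or catalyst_mass_g <= 0:
        raise ValueError("WHSV and catalyst mass must be positive")
    mass_flow_g_per_h = whsv_per_h * catalyst_mass_g
    molar_flow_mol_per_h = mass_flow_g_per_h / METHANOL_MOLAR_MASS
    return mass_flow_g_per_h, molar_flow_mol_per_h


# Example: WHSV of 2.0 h^-1 with a 100 mg catalyst bed
mass_flow, molar_flow = methanol_feed_for_whsv(2.0, 0.100)
print(f"{mass_flow:.3f} g/h, {molar_flow:.5f} mol/h")
```

The carrier gas flow needed to deliver this amount through the saturator depends on the methanol vapor pressure at the saturator temperature, which is not computed here.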

Data Analysis and Calculations

- Calculate methanol conversion: \( X = \frac{C_{in} - C_{out}}{C_{in}} \times 100\% \)

- Determine reaction rate: \( r = \frac{F \times X}{m_{cat}} \), where \( F \) is the methanol molar flow rate, \( m_{cat} \) is the catalyst mass, and \( X \) is expressed as a fraction rather than a percentage

- Compute turnover frequency (TOF) based on exposed metal sites determined by CO chemisorption
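The three calculations above can be chained as in the following sketch. The function names and the numerical inputs are illustrative assumptions and are not taken from Tables 2 or 3; the site density stands in for a value that would come from CO chemisorption.

```python
def conversion_percent(c_in: float, c_out: float) -> float:
    """Methanol conversion X in percent from inlet and outlet concentrations."""
    return (c_in - c_out) / c_in * 100.0


def reaction_rate(f_mol_per_h: float, x_percent: float, m_cat_g: float) -> float:
    """Rate in mol per gram of catalyst per hour; X is converted to a fraction."""
    return f_mol_per_h * (x_percent / 100.0) / m_cat_g


def turnover_frequency(rate_mol_per_g_h: float, sites_mol_per_g: float) -> float:
    """TOF in h^-1: rate divided by the molar density of exposed metal sites."""
    return rate_mol_per_g_h / sites_mol_per_g


# Illustrative inputs (assumed values, arbitrary concentration units)
x = conversion_percent(c_in=1.00, c_out=0.75)
r = reaction_rate(f_mol_per_h=0.006, x_percent=x, m_cat_g=0.100)
tof = turnover_frequency(r, sites_mol_per_g=1.0e-4)
print(f"X = {x:.1f} %, r = {r:.4f} mol/g/h, TOF = {tof:.0f} 1/h")
```

Keeping the percent-to-fraction conversion inside `reaction_rate` avoids the common factor-of-100 error when conversion is stored as a percentage.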

Protocol: Hofmann Elimination Over Solid Acid Catalysts

Materials and Preparation

- Catalyst: H-ZSM-5 zeolite (SiO₂/Al₂O₃ = 30), pelletized and sieved to 180-250 μm

- Reactant: n-Propylamine (≥99%) as the probe molecule

- Equipment: Fixed-bed reactor, GC-MS system for amine and product analysis

Catalyst Activation

- Load 100 mg of zeolite catalyst into reactor

- Heat to 500°C at 5°C/min under dry air flow (50 mL/min)

- Maintain at 500°C for 4 hours to remove moisture and contaminants

- Cool to reaction temperature (250°C) under inert gas

Reaction Procedure

- Introduce n-propylamine using a syringe pump at 0.1 mL/h

- Dilute with helium carrier gas at 30 mL/min total flow

- Maintain reactor at 250°C and atmospheric pressure

- Analyze effluent stream using online GC-MS

- Identify propylene and amine products through retention time and mass spectra

- Continue monitoring until steady-state conversion is achieved (typically 2-3 hours)
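For reporting purposes, the liquid feed rate in the procedure above can be converted to a molar feed rate and a space velocity. The sketch below assumes a liquid density of about 0.719 g/mL for n-propylamine near room temperature and a molar mass of 59.11 g/mol; both values should be checked against the supplier's data sheet, and the function name is hypothetical.

```python
PROPYLAMINE_DENSITY_G_PER_ML = 0.719   # assumed value near 25 degC
PROPYLAMINE_MOLAR_MASS = 59.11         # g/mol for C3H9N


def amine_feed(volumetric_ml_per_h: float, catalyst_mass_g: float):
    """Return (molar feed in mol/h, WHSV in 1/h) for a liquid amine feed."""
    mass_flow_g_per_h = volumetric_ml_per_h * PROPYLAMINE_DENSITY_G_PER_ML
    molar_flow = mass_flow_g_per_h / PROPYLAMINE_MOLAR_MASS
    whsv = mass_flow_g_per_h / catalyst_mass_g
    return molar_flow, whsv


# Protocol values: 0.1 mL/h over 100 mg of zeolite
amine_molar_flow, amine_whsv = amine_feed(0.1, 0.100)
print(f"{amine_molar_flow:.3e} mol/h, WHSV = {amine_whsv:.3f} 1/h")
```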

The Scientist's Toolkit: Essential Research Reagent Solutions

Successful implementation of catalytic benchmarking requires standardized materials and analytical approaches. Table 4 details essential research reagents and their functions in catalytic benchmarking experiments.

Table 4: Essential Research Reagent Solutions for Catalytic Benchmarking

| Reagent/Material | Specifications | Function in Benchmarking | Example Sources |
|---|---|---|---|
| Platinum on Silica (Pt/SiO₂) | 5 wt% loading, 2-3 nm particle size | Reference catalyst for dehydrogenation reactions; baseline for metal-catalyzed reactions | Sigma Aldrich (520691) [1] |
| Palladium on Carbon (Pd/C) | 5 wt% loading, high dispersion | Benchmark for hydrogenation/dehydrogenation; comparison to Pt catalysts | Strem Chemicals (7440-05-03) [1] |
| H-ZSM-5 Zeolite | SiO₂/Al₂O₃ = 30, specific surface area >400 m²/g | Standard solid acid catalyst; acid site density reference | International Zeolite Association [1] |
| Methanol (CH₃OH) | >99.9% purity, anhydrous | Probe molecule for decomposition reactions; activity comparison | Sigma Aldrich (34860-1L-R) [1] |
| n-Propylamine | ≥99% purity | Hofmann elimination reactant; acid site strength probe | Commercial suppliers [1] |
| Formic Acid (HCOOH) | ≥95% purity, analytical grade | Decomposition reaction probe; comparison of metal catalysts | Various suppliers [1] |


Data Reporting and Metadata Standards

Comprehensive metadata collection is essential for reproducible catalytic benchmarking. The following elements must be documented for each benchmark experiment:

Catalyst Characterization Metadata

- Structural properties: Surface area (BET method), pore volume and distribution, crystallinity (XRD)

- Chemical composition: Elemental analysis, acid site density and strength (for solid acids)

- Morphological properties: Particle size distribution, metal dispersion (for supported metals)

- Surface properties: Active site characterization through chemisorption or spectroscopic methods

Reaction Condition Metadata

- Temperature: Reactor bed temperature with measurement method specified

- Pressure: System pressure with uncertainty range

- Feed composition: Exact reactant concentrations and purity specifications

- Flow conditions: Space velocity (WHSV/GHSV), flow rate, and dilution ratios

- Reactor type: Fixed-bed, continuous stirred tank, or other configuration details

Data Quality Assurance

- Stability testing: Minimum 4-hour time-on-stream to verify steady-state operation

- Mass balance closure: Requirement of 95-105% carbon balance

- Reproducibility: Multiple experimental runs with standard deviation reporting

- Transport limitations: Verification of absence of internal and external diffusion limitations
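Two of the quality checks above, carbon balance closure and replicate statistics, are simple enough to automate. The following is a minimal sketch; the function names and example numbers are assumptions, while the 95-105% acceptance window is taken from the list above.

```python
from statistics import mean, stdev


def carbon_balance_percent(carbon_in_mol: float, carbon_out_mol: float) -> float:
    """Carbon recovered in the effluent as a percentage of carbon fed."""
    return carbon_out_mol / carbon_in_mol * 100.0


def passes_carbon_balance(balance_percent: float,
                          low: float = 95.0, high: float = 105.0) -> bool:
    """True when the balance falls inside the acceptance window."""
    return low <= balance_percent <= high


def replicate_summary(values):
    """Mean and sample standard deviation of repeated measurements."""
    return mean(values), stdev(values)


# Illustrative numbers (assumed): one run's carbon balance, three replicate conversions
balance = carbon_balance_percent(carbon_in_mol=1.00, carbon_out_mol=0.97)
balance_ok = passes_carbon_balance(balance)
avg_conv, sd_conv = replicate_summary([24.8, 25.3, 24.9])
print(balance_ok, round(avg_conv, 2), round(sd_conv, 3))
```

Checks for transport limitations are not covered by this sketch because they require additional experiments or correlations beyond the measured flows.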

Implementation and Community Adoption

Successful implementation of catalytic benchmarking requires community-wide engagement. Researchers should:

- Select Appropriate Benchmark Reactions: Choose reactions relevant to their catalytic system from established benchmarks

- Incorporate Reference Catalysts: Include standard reference materials in experimental series

- Follow Standard Protocols: Adhere to established testing procedures for comparability

- Contribute to Open Databases: Share results through platforms like CatTestHub to expand benchmark data

- Provide Comprehensive Metadata: Ensure complete reporting of experimental conditions and catalyst characteristics

The integration of benchmarking into research workflows enhances scientific rigor and enables meaningful comparison across laboratories. As the database of benchmark results grows through community contributions, the reliability and statistical significance of catalytic performance standards increase accordingly [1].

Diagram 2: Implementation framework showing how individual research groups contribute to and benefit from community benchmarking.

This framework creates a virtuous cycle where individual researchers both contribute to and benefit from community benchmarking efforts. As more laboratories adopt these standards, the catalytic benchmarking ecosystem becomes increasingly robust and statistically significant, ultimately accelerating the development of improved catalytic materials and processes.

In both industrial applications and academic research, the systematic assessment of catalytic performance is paramount. The core properties of activity, selectivity, and stability form the fundamental triad for evaluating and benchmarking catalysts across diverse chemical processes [4]. Activity measures the catalyst's efficiency in accelerating a reaction, selectivity dictates its precision in directing the reaction toward the desired products, and stability determines its operational lifespan and resistance to deactivation [4]. A holistic benchmarking protocol must accurately quantify these metrics to enable meaningful comparisons between different catalytic systems, guide research and development efforts, and facilitate the transition from laboratory discovery to industrial application. The following sections detail the definitions, quantitative measures, and standardized experimental protocols for assessing these critical performance indicators, providing a structured framework for catalytic performance assessment research.

Defining the Core Metrics

Activity

Catalyst activity refers to the rate at which a catalyst accelerates a chemical reaction, fundamentally by lowering the activation energy required [4]. This metric directly impacts process efficiency, as a highly active catalyst allows reactions to proceed more swiftly and at lower temperatures or pressures, thereby reducing energy consumption.

Quantitative measures of activity include:

- Reaction Rate: The rate of reactant consumption or product formation, typically expressed in mol·time⁻¹·mass_cat⁻¹ or mol·time⁻¹·surface_area_cat⁻¹.

- Turnover Frequency (TOF): The number of reaction cycles catalyzed per active site per unit time. This is considered a more intrinsic measure of activity as it normalizes for the number of active sites.

- Conversion: The fraction or percentage of a key reactant that is converted into products under specified conditions.

Selectivity

Selectivity is a measure of a catalyst's ability to direct the chemical reaction toward a specific desired product, while minimizing the formation of unwanted byproducts [4]. High selectivity is crucial for achieving atom-efficient and economically viable processes, as it reduces the need for costly and energy-intensive downstream separation and purification steps. The selective nature of a catalyst is profoundly influenced by its structure and composition, which can be tailored to favor specific reaction pathways [4].

Quantitatively, selectivity is often expressed as:

- Product Selectivity (%): The fraction of the converted reactant that forms a specific product, typically calculated as (moles of desired product formed / total moles of all products formed) × 100%.

- Yield: A combined metric reflecting both activity and selectivity, calculated as (Conversion × Selectivity) / 100.
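The two expressions above translate directly into code. This is a minimal sketch with hypothetical function names and made-up product amounts; it follows the product-based definition of selectivity given in the list and ignores stoichiometric factors.

```python
def product_selectivity(desired_mol: float, all_products_mol) -> float:
    """Selectivity in percent: desired product over the sum of all products."""
    total = sum(all_products_mol)
    if total <= 0:
        raise ValueError("total product amount must be positive")
    return desired_mol / total * 100.0


def yield_percent(conversion_pct: float, selectivity_pct: float) -> float:
    """Yield in percent from conversion and selectivity, both in percent."""
    return conversion_pct * selectivity_pct / 100.0


# Illustrative amounts (assumed): 3 mol desired product, two 1 mol byproducts
sel = product_selectivity(3.0, [3.0, 1.0, 1.0])
yld = yield_percent(80.0, sel)
print(f"selectivity = {sel:.1f} %, yield = {yld:.1f} %")
```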

Stability

Stability refers to a catalyst's ability to maintain its activity and selectivity over time under operational conditions [4]. A stable catalyst resists deactivation, a critical consideration for industrial processes where catalyst replacement costs and process downtime have significant economic impacts [4].

Common causes of deactivation include:

- Poisoning: Strong chemisorption of species (e.g., sulfur, lead) that block active sites.

- Sintering: Loss of active surface area due to agglomeration of metal particles, often accelerated at high temperatures.

- Coking/Fouling: Deposition of carbonaceous materials on the catalyst surface.

- Leaching: Loss of active material from the catalyst into the reaction medium or stream.

Stability is quantified by monitoring conversion and selectivity as a function of time-on-stream (TOS). The catalyst's lifetime may be reported as the time until a specified drop in activity or selectivity occurs.
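As one way to turn a time-on-stream record into numbers, the sketch below reports a lifetime (the first sampled time at which conversion falls below a chosen fraction of its initial value) and an average deactivation rate. The 80% retention criterion, the function names, and the data are assumptions for illustration; the source does not prescribe a specific threshold.

```python
def lifetime_hours(tos_h, conversion_pct, retained_fraction=0.8):
    """First sampled time at which conversion drops below the threshold.

    Returns None if the threshold is never crossed within the record.
    """
    threshold = conversion_pct[0] * retained_fraction
    for t, x in zip(tos_h, conversion_pct):
        if x < threshold:
            return t
    return None


def mean_deactivation_rate(tos_h, conversion_pct):
    """Average loss of conversion in percentage points per hour."""
    return (conversion_pct[0] - conversion_pct[-1]) / (tos_h[-1] - tos_h[0])


# Illustrative time-on-stream record (assumed values)
tos = [0, 10, 20, 30, 40]
conv = [50.0, 48.0, 45.0, 41.0, 38.0]
life = lifetime_hours(tos, conv)
rate = mean_deactivation_rate(tos, conv)
print(life, rate)
```

Because the lifetime is read from discrete samples, its resolution is limited by the sampling interval; interpolation between points would be a refinement.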

The Interplay of Metrics

While activity, selectivity, and stability are distinct properties, they are deeply interconnected [4]. Optimizing a catalyst often involves navigating trade-offs; for instance, a highly active catalyst might promote undesirable side reactions, reducing selectivity, or a modification to improve stability might slightly suppress its intrinsic activity [4]. Therefore, benchmarking protocols must evaluate all three properties concurrently to provide a comprehensive view of catalyst performance and guide the development of optimally balanced systems.

The following tables consolidate key quantitative benchmarks and performance data from recent catalysis research, providing a reference for catalyst evaluation.

Table 1: Performance Benchmarks for Selected Catalytic Reactions

| Reaction | Catalyst System | Key Performance Metric | Reported Value | Conditions | Source/Ref |
|---|---|---|---|---|---|
| Higher Alcohol Synthesis | Fe65Co19Cu5Zr11 | Space-Time Yield (STY) | 1.1 g_HA h⁻¹ g_cat⁻¹ | H₂:CO=2.0, 533 K, 50 bar | [5] |
| Higher Alcohol Synthesis | Fe79Co10Zr11 (Seed Benchmark) | Space-Time Yield (STY) | 0.32 g_HA h⁻¹ g_cat⁻¹ | H₂:CO=2.0, 533 K, 50 bar | [5] |
| Nitro-to-Amine Reduction | 114-Catalyst Library | Completion Time / Yield | Varied (0-100% in 80 min) | Fluorescence assay, room temp. | [6] |

Table 2: Key Properties and Their Quantitative Measures

| Performance Metric | Quantitative Measures | Typical Units | Interdependence Notes |
|---|---|---|---|
| Activity | Reaction Rate, Turnover Frequency (TOF), Conversion | mol·s⁻¹·g⁻¹, s⁻¹, % | High activity can compromise selectivity. |
| Selectivity | Product Selectivity, Yield | %, % | Dictates product purity and separation costs. |
| Stability | Time-on-Stream (TOS), Deactivation Rate, Lifetime | h, %·h⁻¹, h | Crucial for economic viability; affects activity & selectivity over time. |

Experimental Protocols for Benchmarking

Standardized protocols are essential for generating comparable and reproducible catalyst performance data. The following sections outline a general protocol for a catalytic test and a specific high-throughput screening method.

Generalized Catalyst Testing Workflow

This workflow describes a standard laboratory-scale setup for evaluating solid catalysts in a continuous-flow fixed-bed reactor system, a common configuration for gas-phase reactions.

Diagram 1: Catalyst testing workflow.

Procedure:

- Catalyst Preparation and Characterization: Synthesize or procure the catalyst. Characterize its physical and chemical properties using techniques such as:

  - Surface Area and Porosity (BET): To determine specific surface area, pore volume, and pore size distribution.

  - X-ray Diffraction (XRD): For identifying crystalline phases and estimating crystallite size.

  - Scanning/Transmission Electron Microscopy (SEM/TEM): For visualizing morphology, particle size, and distribution.

- Reactor Loading and System Check: Weigh a specific amount of catalyst (dilution with an inert material like silicon carbide may be used to manage heat transfer). Load it into the isothermal zone of a fixed-bed reactor. Ensure the entire system is pressure-tight and purge with an inert gas (e.g., N₂, Ar).

- In-Situ Pre-treatment: Activate the catalyst inside the reactor under a specific gas flow and temperature program (e.g., calcination in air to remove impurities, followed by reduction in H₂ flow to generate active metal sites).

- Reaction and Stabilization: Switch the gas flow to the reactant mixture (e.g., syngas for Fischer-Tropsch synthesis). Adjust the pressure, temperature, and flow rates to the desired reaction conditions. Allow the system to stabilize for a predetermined period (e.g., 1-2 hours).

- Product Sampling and Analysis: After stabilization, periodically sample the effluent stream. Analyze the product stream using appropriate analytical techniques:

  - Gas Chromatography (GC) with FID/TCD: For separation and quantification of hydrocarbons and permanent gases.

  - Mass Spectrometry (MS): For identifying and quantifying specific compounds.

  - Ensure calibration with standard mixtures for accurate quantification.

- Data Processing: Calculate key performance metrics (Conversion, Selectivity, Yield, STY) based on the analytical data and flow rates.

- Stability Test: For medium- to long-term stability assessment, continue the experiment over an extended period (dozens to hundreds of hours), periodically sampling and analyzing the product stream to track performance over time.

- Post-Reaction Characterization: Recover the spent catalyst for characterization (e.g., TGA for coke deposition, TEM for sintering, XPS for surface composition) to understand deactivation mechanisms.

High-Throughput Fluorogenic Assay for Catalyst Screening

This protocol details a specific high-throughput experimentation (HTE) method for rapidly screening catalyst libraries, as described in recent literature [6].

Principle: The assay utilizes a fluorogenic probe where the non-fluorescent nitro-moiety (NN) is reduced to a strongly fluorescent amine (AN). The reaction progress is monitored in real-time by tracking the increase in fluorescence intensity, allowing for simultaneous screening of multiple catalysts [6].

Diagram 2: High-throughput screening protocol.

Procedure:

- Well Plate Set-Up:

  - Use a 24-well polystyrene plate.

  - In each reaction well (S), prepare a mixture containing:

    - Catalyst: 0.01 mg/mL.

    - Fluorogenic Probe: 30 µM NN.

    - Reducing Agent: 1.0 M aqueous hydrazine (N₂H₄).

    - Additive: 0.1 mM acetic acid.

    - Solvent: H₂O to a total volume of 1.0 mL [6].

  - In paired reference wells (R), prepare an identical mixture but replace the NN probe with the anticipated amine product (AN) to serve as a standard for fluorescence and stability [6].

- Reaction Initiation and Monitoring:

  - Place the prepared plate into a multi-mode microplate reader.

  - Program the reader to execute a cycle every 5 minutes for a total of 80 minutes:

    - Orbital Shaking: 5 seconds to ensure mixing.

    - Fluorescence Measurement: Read intensity (Excitation: 485 nm, Bandwidth: 20 nm; Emission: 590 nm, Bandwidth: 35 nm).

    - Absorption Spectrum Scan: Scan from 300 nm to 650 nm [6].

- Data Processing and Scoring:

  - Convert raw plate reader data into structured formats (e.g., CSV files, SQL database).

  - For each catalyst, generate kinetic profiles from the fluorescence and absorbance data.

  - Calculate performance scores based on multiple criteria, which may include:

    - Reaction completion time (from kinetic curves).

    - Final yield.

    - Selectivity (monitored via stable isosbestic points in absorption spectra and absence of intermediate peaks).

    - Incorporation of sustainability biases (e.g., catalyst abundance, cost, recoverability) into the final score [6].
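The scoring step can be sketched as follows. This is not the scoring scheme of the cited work: normalizing each sample well by its paired reference well, the 95% completion threshold, the equal weights, and the function names are all assumptions chosen to show how a completion time and a final yield might be combined into one number.

```python
def completion_time(times_min, sample_f, reference_f, threshold=0.95):
    """First time at which sample fluorescence reaches the threshold
    fraction of the paired reference well; None if never reached."""
    for t, s, r in zip(times_min, sample_f, reference_f):
        if r > 0 and s / r >= threshold:
            return t
    return None


def composite_score(t_complete, final_yield, total_time=80.0,
                    w_speed=0.5, w_yield=0.5):
    """Weighted score in [0, 1]; faster completion and higher yield score higher."""
    speed = 0.0 if t_complete is None else 1.0 - t_complete / total_time
    return w_speed * speed + w_yield * final_yield


# Illustrative kinetic profile (assumed intensities, arbitrary units)
times = [0, 5, 10, 15, 20]
sample = [0.0, 300.0, 700.0, 960.0, 1000.0]
reference = [1000.0] * 5
t_done = completion_time(times, sample, reference)
final_yield = sample[-1] / reference[-1]
score = composite_score(t_done, final_yield)
print(t_done, score)
```

Selectivity and sustainability terms could be added as further weighted components in the same way, provided each is first scaled to a common range.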

The Scientist's Toolkit: Essential Research Reagents and Materials

This section lists key reagents, materials, and tools essential for conducting catalyst benchmarking experiments, as featured in the cited protocols and the broader field.

Table 3: Essential Reagents and Materials for Catalyst Benchmarking

| Item Name | Function / Role | Example from Protocols |
|---|---|---|
| Benchmark Catalysts | Standardized materials for cross-study performance comparison and validation. | EuroPt-1, EUROCAT standards, zeolites with MFI and FAU frameworks [1]. |
| Fluorogenic Probe (NN/AN) | A "switch-on" fluorescent reporter for high-throughput kinetic screening of redox reactions. | Nitronaphthalimide (NN) probe and its amine (AN) form for nitro-reduction assays [6]. |
| Microplate Reader | Instrument for automated, real-time optical monitoring of multiple parallel reactions. | Biotek Synergy HTX multi-mode reader for fluorescence and absorption in well plates [6]. |
| Fixed-Bed Reactor System | Standard laboratory setup for testing solid catalysts under continuous-flow conditions. | Tubular reactors for testing FeCoCuZr catalysts in syngas conversion [5]. |
| Analytical Instruments (GC/MS) | For precise separation, identification, and quantification of reaction products and reactants. | Gas Chromatography (GC) for analyzing hydrocarbon mixtures in reactor effluents [5]. |
| Characterization Tools | To determine physical and chemical properties of fresh and spent catalysts. | BET surface area analyzer, XRD, SEM/TEM [1]. |
| Active Learning & Data Software | Machine learning platforms to guide experimental design and analyze complex data. | Gaussian Process & Bayesian Optimization algorithms for optimizing catalyst composition [5]. |


The Critical Need for Standardization in Data and Metrics

The field of heterogeneous catalysis is undergoing a profound transformation, moving from traditional empirical approaches toward data-driven scientific discovery. However, this transition is hampered by a critical lack of standardization in experimental data and performance metrics, creating significant reproducibility challenges and impeding meaningful cross-comparison of research findings. The catalysis research community faces a benchmarking crisis where newly reported catalytic activities cannot be reliably verified against established state-of-the-art materials [1]. This standardization deficit affects every facet of catalyst development—from fundamental research to industrial application—and demands immediate, coordinated solutions.

Traditional catalyst research has relied heavily on trial-and-error experimentation and theoretical simulations, both increasingly limited in their ability to address complex catalytic systems and vast chemical spaces [7]. While computational resources have fueled the growth of large calculated catalysis datasets, experimental datasets face greater variability due to inconsistent reporting standards, reactor configurations, and testing protocols [1]. This lack of standardized benchmarking makes it difficult to answer fundamental questions: Is a newly synthesized catalyst truly more active than its predecessors? Are reported turnover rates free of corrupting influences like diffusional limitations? Has the application of an energy source genuinely accelerated a catalytic cycle? [1] Without community-wide standards, individual researchers cannot adequately contextualize their results against agreed-upon references, slowing the pace of innovation across energy, environmental, and materials sciences where catalysis serves as a cornerstone discipline [7].

Current Challenges and Consequences of Non-Standardization

The Reproducibility Challenge

Catalysis research suffers from significant reproducibility issues stemming from minimal reporting standards for both catalyst synthesis and performance evaluation. Minute variations in catalyst production (including glassware specifics, chemical lot numbers, reagent addition sequences, aging times, and pretreatment conditions) profoundly influence catalyst properties such as surface area, metal dispersion, and oxidation states, leading to irreproducibility between batches [8]. These synthesis variables are frequently omitted from literature reports, making experimental replication exceptionally challenging. Furthermore, the active form of a catalyst is generally achieved only under specific reaction conditions, creating complex relationships between initial catalyst properties and ultimate catalytic activity that are poorly understood without standardized characterization protocols [8].

The problem extends to performance evaluation, where differing reactor configurations, analytical methods, and data processing approaches generate results that cannot be meaningfully compared across laboratories. As noted in assessments of experimental heterogeneous catalysis, prior attempts at benchmarking have achieved limited success because, despite the occasional availability of common reference materials, no standard procedures or conditions for measuring catalytic activity have been widely implemented [1]. Standard methods from organizations like ASTM do exist for specific applications but often focus on conditions where catalytic activity is likely convoluted with transport phenomena, and many are not available as open-access resources [1].

Data Management and Integration Hurdles

The interdisciplinary nature of catalysis research—spanning inorganic, organic, analytical, and physical chemistry, alongside chemical engineering and materials science—generates data in diverse formats that resist integration into unified databases [8]. Catalysis data encompasses two broad categories: (1) catalyst synthesis and characterization data (catalyst-centric), and (2) reaction performance data (reaction-centric), each with distinct metadata requirements [8]. The absence of standardized data frameworks prevents effective mining of the collective research output, limiting the potential of artificial intelligence and machine learning approaches that require large, well-curated datasets [7] [8].

Performance metrics for catalysts exhibit tremendous variability in reporting standards, with researchers using different units, normalization methods, and experimental conditions that preclude direct comparison. Even basic information such as temperature and pressure conditions, feed composition, conversion rates, and selectivity measurements may be reported inconsistently or with insufficient metadata to assess their relevance to other systems [9] [1]. This problem is particularly acute in emerging fields like biomass conversion, where the complex nature of lignocellulosic feedstocks and their component constituents creates additional challenges for standardized assessment [8].
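A small part of this problem, inconsistent rate units, can be handled mechanically once the reported time and mass bases are recorded. The sketch below converts a mass-normalized rate to mol·g⁻¹·s⁻¹; the function name and the set of supported units are assumptions for illustration.

```python
_TIME_TO_SECONDS = {"s": 1.0, "min": 60.0, "h": 3600.0}
_MASS_TO_GRAMS = {"mg": 1e-3, "g": 1.0, "kg": 1e3}


def to_mol_per_g_s(value: float, time_unit: str, mass_unit: str) -> float:
    """Convert a rate in mol per (mass unit x time unit) to mol per gram per second."""
    try:
        seconds = _TIME_TO_SECONDS[time_unit]
        grams = _MASS_TO_GRAMS[mass_unit]
    except KeyError as exc:
        raise ValueError(f"unsupported unit: {exc.args[0]}") from None
    return value / seconds / grams


# 0.35 mol/(g.h) and 3.6 mol/(kg.h) expressed on a common basis
rate_a = to_mol_per_g_s(0.35, "h", "g")
rate_b = to_mol_per_g_s(3.6, "h", "kg")
print(f"{rate_a:.3e}  {rate_b:.3e}")
```

Unit conversion alone does not make two measurements comparable; differences in temperature, feed composition, and conversion level still have to be reported and accounted for.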

Table: Key Challenges in Catalysis Data Standardization

| Challenge Category | Specific Issues | Impact on Research Progress |

|---|---|---|

| Synthesis Reporting | Unrecorded variables in catalyst preparation (aging time, pretreatment conditions, chemical lots) | Prevents replication of catalyst materials and properties |

| Performance Testing | Inconsistent reactor configurations, analytical methods, reaction conditions | Hinders cross-comparison of catalytic activity and selectivity |

| Data Management | Diverse formats across characterization techniques, lack of metadata standards | Impedes data integration, mining, and machine learning applications |

| Reference Materials | Limited availability of standard catalysts without standardized testing protocols | Undermines benchmarking against state-of-the-art materials |

Emerging Solutions and Standardization Frameworks

The FAIR Data Principles and Digital Catalysis Frameworks

The adoption of FAIR data principles (Findable, Accessible, Interoperable, and Reusable) represents a foundational approach to addressing catalysis's standardization challenges [8]. These principles prioritize making data machine-readable and autonomously accessible while still supporting human users, enabling the creation of standardized datasets that are truly useful, reproducible, and shareable across the research community [8]. FAIR data promotes cross-disciplinary research by establishing common standards that allow data from one field to be applied to new contexts, such as leveraging semiconductor research methodologies for catalysis studies [8].

The German Catalysis Society (GeCATS) has proposed a comprehensive framework based on five essential pillars for the meaningful description of catalytic processes: (1) data exchange with theory, (2) performance data, (3) synthesis data, (4) characterization data, and (5) operando data [8]. This integrated approach recognizes that catalyst properties evolve under reaction conditions and emphasizes the importance of capturing operando characterization data to understand the complex relationship between catalyst properties and activity [8]. Implementing such frameworks requires deciding which metadata to record, because that choice shapes database design, optimization, governance, and integration for specific applications.

Community Benchmarking Initiatives: CatTestHub

The recently introduced CatTestHub database represents a significant advancement in experimental catalysis standardization, providing an open-access community platform for benchmarking that intentionally houses experimental reaction rates, material characterization, and reactor configuration details [1]. Designed according to FAIR data principles, CatTestHub employs a spreadsheet-based format that ensures longevity, ease of access, and download capability for data reuse [1]. The database incorporates unique identifiers (DOIs, ORCID) and funding acknowledgements to provide electronic means for accountability, intellectual credit, and traceability—essential elements for a sustainable community resource.

CatTestHub's architecture addresses the benchmarking gap by curating key reaction condition information necessary for reproducing reported experimental measures of catalytic activity, alongside detailed reactor configuration specifications [1]. To contextualize macroscopic catalytic activity measurements at the nanoscopic scale of active sites, the database includes structural characterization for each catalyst material [1]. Currently focusing on metal and solid acid catalysts with decomposition of methanol and formic acid as benchmarking chemistries, CatTestHub demonstrates the potential for community-wide standards to emerge through coordinated data collection on well-characterized, commercially available catalyst materials [1].

Standardization Framework Diagram

Machine Learning and Data-Driven Approaches

The integration of machine learning (ML) and artificial intelligence (AI) in catalysis research offers powerful incentives for standardizing data and metrics, as these data-driven approaches require large, high-quality, consistently formatted datasets to build accurate predictive models [7] [8]. ML has evolved from being merely a predictive tool to becoming a "theoretical engine" that contributes to mechanistic discovery and the derivation of general catalytic laws [7]. However, the performance of ML models in catalysis remains highly dependent on data quality and volume, with data acquisition and standardization representing major challenges for ML applications in this domain [7].

Recent advances have demonstrated ML's potential to bridge data-driven discovery with physical insight through a three-stage application framework: (1) data-driven screening, (2) physics-based modeling, and (3) symbolic regression and theory-oriented interpretation [7]. This hierarchical approach enables more efficient exploration of catalytic materials while generating insights that feed back into improved standardization protocols. The emergence of large language models (LLMs) offers promising solutions for database development and curation, potentially overcoming traditional bottlenecks in data standardization [7].

Table: Standardized Testing Protocols for Catalyst Evaluation

| Testing Phase | Standardized Protocol Elements | Required Data Reporting |

|---|---|---|

| Sample Preparation | Defined sampling methods from steady points in catalyst setup; matching production materials and coatings [9] | Catalyst source, composition, sampling methodology, pretreatment conditions |

| Testing Environment | Reactor systems matching real-world conditions; gas mixtures mirroring actual plant environment [9] | Temperature, pressure, feed composition, gas concentrations, reactor type |

| Performance Evaluation | Standardized test procedures with controlled conditions; analytical instrument calibration [9] | Conversion rates, product selectivity, long-term stability, turnover frequencies |

| Data Interpretation | Statistical analysis for reliability; benchmark comparisons against standards [9] | Full reaction conditions, catalyst characterization data, uncertainty estimates |

Experimental Protocols for Standardized Catalyst Assessment

Catalyst Testing and Performance Evaluation

Standardized catalyst testing follows well-defined protocols to generate reproducible, accurate, and comparable data across different laboratories and experimental setups. A basic configuration consists of a tube reactor with a temperature-controlled furnace and mass flow controllers, with the reactor output connected directly to analytical instruments like gas chromatographs, FID hydrocarbon detectors, CO detectors, and FTIR systems [9]. Such systems can replicate established EPA Test Method 25A protocols for emissions testing, providing a foundation for standardized assessment [9].

Proper testing begins with clear objective definition aligned with operational needs, thoughtful catalyst sample selection that represents the entire catalyst system and matches production materials, and careful preparation of testing environments that mirror real-world operating conditions [9]. Performance evaluation should encompass both new catalysts (to verify they match required specifications) and used catalysts (to determine remaining activity levels and optimal regeneration or replacement timing) [9]. This systematic approach helps maintain consistent product quality and prevents unexpected production shutdowns.

High-Throughput Screening Protocols

Advanced screening methodologies combine computational and experimental approaches to accelerate catalyst discovery while maintaining standardized assessment protocols. The high-throughput computational-experimental screening protocol demonstrated for bimetallic catalyst discovery employs electronic density of states (DOS) patterns as a screening descriptor to identify promising candidate materials [10]. This approach quantitatively compares DOS patterns between candidate alloys and reference catalysts using defined similarity metrics, enabling efficient prioritization of experimental targets [10].

The protocol involves several standardized stages: (1) first-principles calculations to screen thermodynamic stability of candidate structures, (2) quantitative DOS similarity analysis relative to reference catalysts, (3) synthetic feasibility evaluation, and (4) experimental synthesis and testing of prioritized candidates [10]. This methodology successfully identified several bimetallic catalysts with performance comparable to palladium references, including the previously unreported Ni61Pt39 catalyst that demonstrated a 9.5-fold enhancement in cost-normalized productivity [10]. Such integrated protocols demonstrate how standardization can accelerate discovery while ensuring consistent performance metrics.
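The DOS-similarity stage of this workflow can be illustrated with a short sketch. The metric below, a Gaussian-weighted root-mean-square difference that emphasizes states near the Fermi level, is an assumption chosen for illustration and is not necessarily the similarity measure used in [10]. The function names, the `sigma` parameter, and the synthetic DOS curves are all hypothetical.

```python
import math


def dos_distance(energies, dos_candidate, dos_reference, sigma=1.0):
    """Gaussian-weighted RMS difference between two DOS curves.

    Assumes both curves share one uniform energy grid (eV, relative to
    the Fermi level). Smaller values mean more similar DOS patterns.
    """
    if not (len(energies) == len(dos_candidate) == len(dos_reference)):
        raise ValueError("energy grid and DOS curves must have equal length")
    step = energies[1] - energies[0]
    weighted_sq = 0.0
    for e, dc, dr in zip(energies, dos_candidate, dos_reference):
        weight = math.exp(-(e ** 2) / (2 * sigma ** 2))  # emphasize E near 0
        weighted_sq += weight * (dc - dr) ** 2 * step
    return math.sqrt(weighted_sq)


def rank_candidates(energies, reference, candidates, sigma=1.0):
    """Return (name, distance) pairs sorted from most to least similar."""
    scored = [(name, dos_distance(energies, dos, reference, sigma))
              for name, dos in candidates.items()]
    return sorted(scored, key=lambda pair: pair[1])


def synthetic_dos(energies, center, width=0.5):
    """Synthetic single-peak DOS, for illustration only (not real data)."""
    return [math.exp(-((e - center) ** 2) / (2 * width ** 2)) for e in energies]


energies = [-5.0 + 0.1 * i for i in range(101)]
reference = synthetic_dos(energies, center=-1.0)
candidates = {
    "alloy_A": synthetic_dos(energies, center=-1.2),
    "alloy_B": synthetic_dos(energies, center=-3.0),
}
ranking = rank_candidates(energies, reference, candidates)
print(ranking)
```

In a real screening campaign the curves would come from first-principles calculations, and the ranking would only prioritize candidates for the later feasibility and experimental stages.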

Catalyst Testing Workflow Diagram

Essential Materials and Research Reagent Solutions

The implementation of standardized catalyst testing protocols requires specific research reagents and materials that enable consistent, reproducible experimental outcomes across different laboratories. The following table details key solutions essential for reliable catalyst performance assessment.

Table: Essential Research Reagent Solutions for Standardized Catalyst Testing

| Research Reagent | Function in Catalyst Testing | Application Examples |

|---|---|---|

| Standard Reference Catalysts (EuroPt-1, EuroNi-1, World Gold Council standards) [1] | Benchmark materials for cross-laboratory performance comparison and method validation | Establishing baseline activity measurements; calibrating testing protocols |

| Tube Reactor Systems with temperature-controlled furnaces [9] | Controlled environment for catalyst performance evaluation under defined conditions | Standardized activity testing; stability assessments; kinetic studies |

| Calibrated Gas Mixtures (specific concentrations matching plant environments) [9] | Standardized feed streams for reproducible activity and selectivity measurements | Conversion rate determination; selectivity profiling; poisoning studies |

| Analytical Instrumentation (GC, FID hydrocarbon detectors, CO detectors, FTIR systems) [9] | Quantitative analysis of reaction products and catalyst performance metrics | Product identification and quantification; conversion calculations; mechanistic studies |

| Metal Supported Catalysts (Pt/SiO₂, Pt/C, Pd/C, Ru/C, Rh/C, Ir/C) [1] | Reference materials for specific catalytic reactions and processes | Hydrogenation/dehydrogenation reactions; biomass conversion; emissions control |

Implementation Roadmap and Future Perspectives

The catalysis research community stands at a pivotal moment where the adoption of comprehensive standardization protocols will determine the pace of innovation in coming decades. Implementation requires coordinated action across multiple stakeholders: academic researchers must adopt FAIR data principles and standardized reporting practices; journal publishers should enforce minimum information standards for publication; funding agencies need to prioritize projects that contribute to community resources; and industrial partners must participate in benchmark development and validation [1] [8].

The digital transformation of catalysis research through artificial intelligence and machine learning offers powerful incentives for standardization, as these technologies require large, consistent datasets to reach their full potential [7] [8]. Future developments will likely include increased automation in data collection and curation, wider adoption of high-throughput experimentation integrated with computational screening, and the emergence of AI-assisted experimental design that leverages standardized data to propose optimal catalyst formulations and testing conditions [10] [8].

As the field advances, standardization efforts must expand beyond conventional catalytic materials and reactions to encompass emerging areas such as single-atom catalysts, electrocatalytic systems, and plasma-catalytic processes [1] [11]. This expansion will require developing new benchmark materials and protocols tailored to these specialized applications while maintaining alignment with broader standardization frameworks. Through coordinated community action, the catalysis research field can overcome its reproducibility challenges and accelerate the discovery of next-generation catalysts essential for sustainable energy, environmental protection, and chemical production.

The exponential growth in volume, complexity, and creation speed of catalytic performance data presents significant challenges for research reproducibility and cross-study comparison. The FAIR Guiding Principles (Findable, Accessible, Interoperable, and Reusable), formally defined in 2016, provide a framework to address these challenges by enhancing data management and stewardship practices [12] [13]. These principles emphasize machine-actionability—the capacity of computational systems to find, access, interoperate, and reuse data with minimal human intervention—which is crucial for handling the large-scale datasets characteristic of modern catalysis research [12] [14].

Within catalytic performance assessment, consistent benchmarking enables meaningful evaluation of new catalyst materials against established standards. The implementation of FAIR principles directly supports this goal by ensuring that experimental data are sufficiently well-described, structured, and accessible to enable reliable comparison and verification across different laboratories and research initiatives [1]. The CatTestHub database exemplifies this approach, implementing FAIR principles to create an open-access community platform for benchmarking experimental heterogeneous catalysis data [1].

The Four FAIR Principles: Detailed Breakdown

Findability

Findability represents the foundational step in data reuse. For catalytic performance data to be findable, both humans and computers must be able to easily discover the relevant datasets and their associated metadata [12].

- Persistent Identifiers: All datasets and significant digital objects must be assigned a globally unique and persistent identifier (PID), such as a Digital Object Identifier (DOI) [15] [14]. This provides a stable reference link that persists over time.

- Rich Metadata: Data must be described with comprehensive, machine-readable metadata. This includes detailed information on catalyst synthesis, reaction conditions, characterization methods, and performance metrics [14].

- Indexed in Searchable Resources: The (meta)data must be registered or indexed in a searchable resource, such as a disciplinary data repository, to facilitate discovery [12] [14].

In practice, for catalysis research, findability requires depositing datasets in repositories like CatTestHub or Zenodo that assign DOIs and ensure the data is discoverable through platform and domain-specific search engines [1] [14].

Accessibility

The Accessibility principle ensures that once users find the required data and metadata, they can retrieve them using standardized, open protocols [12].

- Retrieval via Identifier: Data and metadata should be retrievable by their persistent identifier using a standardized communications protocol (e.g., HTTPS, API) [16] [14].

- Open Protocols: The access protocol should be open, free, and universally implementable. Where necessary, the protocol should also support an authentication and authorization procedure [14]. This means access can be restricted for proprietary or sensitive data, but the pathway to obtain access must be clear.

- Metadata Persistence: Metadata should remain accessible even if the underlying data is no longer available, for instance, due to retention policies [14].

For benchmarking protocols, this implies that even if full catalytic datasets are under embargo, the descriptive metadata (e.g., catalyst type, reaction studied, measured properties) should remain accessible to inform other researchers of the experiment's existence and scope.
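Retrieval by identifier over an open protocol can be sketched as follows. The example builds an HTTPS request to the `https://doi.org/` resolver and asks for machine-readable metadata through content negotiation, assuming the DOI's registration agency supports that mechanism. The DOI shown is a placeholder, not a real dataset, and the function name is hypothetical.

```python
import urllib.request

DOI_RESOLVER = "https://doi.org/"


def build_metadata_request(doi):
    """Build (but do not send) a request for machine-readable DOI metadata."""
    return urllib.request.Request(
        DOI_RESOLVER + doi,
        # Ask for citation metadata as JSON instead of the landing page.
        headers={"Accept": "application/vnd.citationstyles.csl+json"},
    )


# Placeholder DOI for illustration only.
request = build_metadata_request("10.5281/zenodo.0000000")
print(request.full_url)

# Sending the request requires network access and a real DOI:
# with urllib.request.urlopen(request) as response:
#     metadata = response.read().decode("utf-8")
```

Because the metadata request is separate from any data download, this pattern fits the case where descriptive metadata stays open while the dataset itself is embargoed or access-controlled.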

Interoperability

Interoperability refers to the ability of data to be integrated with other data, applications, and workflows for analysis, storage, and processing [12].

- Formal Knowledge Representation: Data and metadata should use a formal, accessible, shared, and broadly applicable language for knowledge representation [14]. This avoids ambiguity and ensures clear meaning.

- Standardized Vocabularies and Ontologies: Using FAIR-compliant vocabularies, ontologies, and thesauri is critical. In catalysis, this could involve using standard terms for catalyst nomenclature (e.g., IUPAC naming), reaction classes, and units of measurement [13] [14].

- Qualified References: Datasets should include qualified references to other (meta)data, such as linking a catalytic performance dataset to the DOI of the catalyst characterization data or a related research publication [14].

The CatTestHub implementation uses a standardized spreadsheet format and controlled vocabularies to describe catalysts, reactors, and reaction conditions, enabling direct comparison and integration of data from multiple sources [1].

Reusability

Reusability is the ultimate goal of the FAIR principles, aiming to optimize the future reuse of data [12]. This requires data and metadata to be so well-described that they can be replicated, combined, or repurposed in different settings.

- Rich Description: Metadata must include a plurality of accurate and relevant attributes to provide comprehensive context [14].

- Clear Usage License: Data must be released with a clear and accessible data usage license (e.g., Creative Commons licenses) that specifies the terms of reuse [15] [17].

- Detailed Provenance: The provenance of the data—how it was generated, processed, and derived—must be thoroughly documented [14]. This is essential for reproducing experimental results in catalysis.

- Community Standards: Data and metadata should meet domain-relevant community standards, ensuring acceptance and utility within the field [14]. For catalysis, this includes reporting standards for turnover frequency (TOF), conversion, selectivity, and stability.

Table 1: Summary of Core FAIR Principles and Catalysis-Specific Implementation Examples

| Principle | Core Objective | Key Technical Requirements | Catalysis Research Implementation Example |

|---|---|---|---|

| Findable | Easy discovery by humans and machines | Persistent Identifier (DOI), Rich Metadata, Resource Indexing | Depositing catalyst performance data in CatTestHub with a DOI and detailed metadata on catalyst structure and test conditions [1]. |

| Accessible | Retrievable upon discovery | Standardized Protocol (e.g., HTTPS/API), Metadata permanence | Providing data via a repository API, with metadata always accessible even if data download requires login [16] [14]. |

| Interoperable | Integration with other data and tools | Standard Vocabularies, Formal Languages, Qualified References | Using IUPAC terminology and linking catalytic activity data to separate characterization datasets via their DOIs [1] [14]. |

| Reusable | Replication and combination in new studies | Clear License, Detailed Provenance, Domain Standards | Reporting data with a CC-BY license, including full experimental procedure and adherence to benchmarking protocols like those for methanol decomposition [1] [15]. |

Implementing FAIR Principles: A Protocol for Catalysis Research

The following section provides a practical, step-by-step protocol for implementing FAIR principles in catalytic performance assessment research.

Pre-Experimental Planning and Data Management

A robust FAIR data practice begins before data generation.

- Step 1: Create a Data Management Plan (DMP). The DMP should outline the data types to be generated, the metadata standards to be used, the responsible parties, and the selected data repository. Funding agencies often require a DMP [16].

- Step 2: Identify and Adopt Community Standards. Early identification of relevant community standards is crucial. This includes:

- Metadata Schemas: Define the minimal required information for reporting catalytic data.

- Controlled Vocabularies: Use standardized terms for catalyst names (e.g., "Pt/SiO₂"), reactor types (e.g., "fixed-bed"), and measured properties (e.g., "turnover frequency").

- Data Formats: Use open, non-proprietary file formats (e.g., CSV, JSON, XML) for data to ensure long-term accessibility [16] [14].
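A controlled vocabulary and an open-format rule are easiest to enforce when they are checked automatically before deposit. The sketch below is one minimal way to do that. The term lists, field names, and file names are hypothetical; a real project would take them from a community-agreed schema.

```python
import os

# Hypothetical controlled vocabularies, hard-coded only for illustration.
CONTROLLED_TERMS = {
    "reactor_type": {"fixed-bed", "batch", "plug-flow"},
    "measured_property": {"turnover frequency", "conversion", "selectivity"},
}
OPEN_FORMATS = {".csv", ".json", ".xml"}


def check_record(record):
    """Return a list of human-readable problems found in one metadata record."""
    problems = []
    for field, allowed in CONTROLLED_TERMS.items():
        value = record.get(field)
        if value not in allowed:
            problems.append(f"{field}: '{value}' is not a controlled term")
    extension = os.path.splitext(record.get("data_file", ""))[1].lower()
    if extension not in OPEN_FORMATS:
        problems.append(f"data_file: '{extension}' is not an open format")
    return problems


good = {"reactor_type": "fixed-bed",
        "measured_property": "turnover frequency",
        "data_file": "methanol_decomposition.csv"}
bad = {"reactor_type": "Fixed Bed Reactor",
       "measured_property": "TOF",
       "data_file": "run_042.xlsx"}

print(check_record(good))  # no problems for the conforming record
print(check_record(bad))   # one problem per non-conforming field
```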

The FAIRification Workflow for Experimental Data

The process of making data FAIR, known as "FAIRification," can be visualized as a workflow encompassing the entire research lifecycle.

Diagram 1: The FAIRification workflow for catalytic data, from experimental planning to repository deposit.

Protocol: Generating and Publishing FAIR-Compliant Catalytic Benchmarking Data

This protocol uses the catalytic decomposition of methanol on metal catalysts, as referenced in the CatTestHub database, as a model experiment [1].

3.3.1 Objective: To measure and report the catalytic activity of a standard Pt/SiO₂ catalyst for methanol decomposition, generating a FAIR dataset for community benchmarking.

3.3.2 Experimental Procedure:

- Catalyst Preparation: Load a fixed mass (e.g., 50 mg) of commercial Pt/SiO₂ catalyst (e.g., Sigma Aldrich 520691) into a fixed-bed reactor. Pre-reduce the catalyst in flowing H₂ (e.g., 50 sccm) at 400°C for 2 hours [1].

- Reaction Testing: After reduction, switch the feed to a mixture of methanol and inert gas (N₂) at a defined weight hourly space velocity (WHSV). Maintain the reactor at the target reaction temperature (e.g., 250°C).

- Product Analysis: Analyze the reactor effluent using an online gas chromatograph (GC) equipped with a flame ionization detector (FID) or mass spectrometer (MS).

- Data Acquisition: Measure methanol conversion and product selectivity at steady-state conditions (typically after 1 hour on stream). Record all raw data from the GC.
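Setting the feed for a target WHSV is simple arithmetic, sketched below with illustrative values (50 mg of catalyst, a WHSV of 10 h⁻¹, and 5 mol% methanol in N₂). The sketch assumes WHSV is defined on a methanol mass basis (grams of methanol per gram of catalyst per hour); if a laboratory defines it on total feed mass, the calculation changes accordingly.

```python
METHANOL_MOLAR_MASS = 32.04  # g/mol


def methanol_feed_g_per_h(whsv_per_h, catalyst_mass_g):
    """Methanol mass flow for a target WHSV (methanol basis assumed)."""
    return whsv_per_h * catalyst_mass_g


def total_molar_flow_mol_per_h(methanol_g_per_h, methanol_mole_fraction):
    """Total molar feed (methanol + inert) for a given methanol mole fraction."""
    methanol_mol_per_h = methanol_g_per_h / METHANOL_MOLAR_MASS
    return methanol_mol_per_h / methanol_mole_fraction


feed = methanol_feed_g_per_h(whsv_per_h=10.0, catalyst_mass_g=0.050)
total = total_molar_flow_mol_per_h(feed, methanol_mole_fraction=0.05)
print(f"methanol feed: {feed:.3f} g/h")        # 0.500 g/h
print(f"total molar flow: {total:.4f} mol/h")  # about 0.312 mol/h
```

Converting the total molar flow to a mass flow controller setpoint (for example in sccm) depends on the reference temperature and pressure the controller uses, so that step is left out here.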

3.3.3 Data Processing and Metadata Generation:

- Process Raw Data: Convert raw GC counts to molar concentrations using calibration curves. Calculate methanol conversion and carbon-containing product selectivities.

- Compile Required Metadata: Create a README file or metadata sheet using the following table as a guide.

Table 2: Essential Metadata for Catalytic Benchmarking Data Reuse

| Metadata Category | Specific Attribute | Example Entry | Function/Importance for Reuse |

|---|---|---|---|

| Catalyst Identifier | Material Name | Pt/SiO₂ | Uniquely identifies the catalyst material. |

| | Supplier & Catalog No. | Sigma Aldrich, 520691 | Allows other researchers to source the same material. |

| | Characterization Data (DOI) | 10.xxxx/zenodo.xxxxx | Links to surface area, metal dispersion, etc. |

| Reaction Conditions | Reaction Type | Methanol Decomposition | Defines the chemical transformation. |

| | Temperature | 250 °C | Critical for kinetic comparisons and reproducibility. |

| | Pressure | 1 atm | Defines the reaction environment. |

| | WHSV | 10 h⁻¹ | Allows normalization of activity data. |

| Reactor System | Reactor Type | Fixed-Bed, Quartz | Defines the reactor geometry and material. |

| | Catalyst Mass | 50 mg | Necessary for rate calculations. |

| | Feed Composition | 5% CH₃OH in N₂ | Defines the reactant partial pressures. |

| Performance Data | Methanol Conversion | 45% | Primary performance metric. |

| | Selectivity to CO | 95% | Defines product distribution. |

| | Turnover Frequency (TOF) | 0.15 s⁻¹ | Intrinsic activity metric, requires metal dispersion. |

| Provenance & Admin | License | CC-BY 4.0 | Dictates terms of reuse. |

| | ORCID of Contributors | 0000-0002-... | Provides credit and accountability. |

| | Funding Source | DE-SC0023464 | Acknowledges financial support. |
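The performance entries in the table follow from the calibrated molar flows by simple definitions. The sketch below shows one way to compute conversion, carbon-based selectivity, and turnover frequency. All flow values are hypothetical, chosen to reproduce the 45% conversion and 95% CO selectivity example entries, and the TOF inputs are placeholders rather than measured quantities.

```python
def conversion(flow_in, flow_out):
    """Fractional reactant conversion from inlet and outlet molar flows."""
    return (flow_in - flow_out) / flow_in


def carbon_selectivities(product_flows, carbon_numbers):
    """Carbon-based selectivity of each carbon-containing product."""
    carbon = {name: flow * carbon_numbers[name]
              for name, flow in product_flows.items()}
    total = sum(carbon.values())
    return {name: value / total for name, value in carbon.items()}


def turnover_frequency(rate_mol_per_s, metal_mol, dispersion):
    """TOF (1/s): reaction rate divided by moles of surface metal atoms.

    Dispersion is the fraction of metal atoms exposed at the surface and
    must come from characterization (e.g. chemisorption).
    """
    return rate_mol_per_s / (metal_mol * dispersion)


# Hypothetical molar flows in arbitrary but consistent units.
x = conversion(flow_in=1.00, flow_out=0.55)
sel = carbon_selectivities(
    {"CO": 0.4275, "CO2": 0.0090, "CH4": 0.0135},
    {"CO": 1, "CO2": 1, "CH4": 1},
)
tof = turnover_frequency(rate_mol_per_s=1.0e-6, metal_mol=1.0e-5, dispersion=0.5)

print(f"conversion: {x:.0%}")            # 45%
print(f"CO selectivity: {sel['CO']:.0%}")  # 95%
print(f"TOF: {tof:.2f} 1/s")
```

Working with molar flows rather than concentrations avoids having to correct for the change in total moles as methanol decomposes into CO and H₂.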

3.3.4 Data Deposition and Publication:

- Select a Repository: Choose a suitable repository such as a domain-specific option (e.g., CatTestHub), an institutional repository, or a general-purpose platform like Zenodo or Figshare that provides persistent identifiers (DOIs) [1] [14].

- Upload Data and Metadata: Upload the processed data table(s) and the comprehensive metadata file. The data files should be in open formats (e.g., .csv, .txt).

- Finalize and Publish: Finalize the dataset entry, obtaining a permanent DOI. Use this DOI to cite the dataset in related publications.
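Before upload, the metadata and processed results can be serialized to open formats and checked for completeness. The following is a minimal sketch using only the Python standard library. The field names and the list of required fields are assumptions for illustration, not a CatTestHub or Zenodo schema; the example values mirror the metadata table above.

```python
import csv
import io
import json

# Hypothetical minimal field list; a real schema would be community-defined.
REQUIRED_FIELDS = [
    "material_name", "supplier_catalog_no", "reaction_type", "temperature_C",
    "pressure_atm", "reactor_type", "catalyst_mass_mg", "feed_composition",
    "license",
]


def missing_fields(metadata):
    """Return required fields that are absent or empty."""
    return [f for f in REQUIRED_FIELDS if metadata.get(f) in (None, "")]


def metadata_to_json(metadata):
    """Serialize metadata as UTF-8 friendly JSON text."""
    return json.dumps(metadata, indent=2, ensure_ascii=False)


def results_to_csv(rows):
    """Serialize a list of result dictionaries as CSV text."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0].keys()),
                            lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


metadata = {
    "material_name": "Pt/SiO₂",
    "supplier_catalog_no": "Sigma Aldrich, 520691",
    "reaction_type": "Methanol Decomposition",
    "temperature_C": 250,
    "pressure_atm": 1,
    "reactor_type": "Fixed-Bed, Quartz",
    "catalyst_mass_mg": 50,
    "feed_composition": "5% CH₃OH in N₂",
    "license": "CC-BY 4.0",
}
rows = [{"temperature_C": 250, "methanol_conversion_pct": 45,
         "co_selectivity_pct": 95}]

print(missing_fields(metadata))  # empty list when the record is complete
metadata_json = metadata_to_json(metadata)
results_csv = results_to_csv(rows)
print(results_csv)
```

The two strings can then be written to `.json` and `.csv` files and uploaded together, so the data and its description travel as one deposit.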

The Scientist's Toolkit for FAIR Catalysis Data

Successfully implementing FAIR principles requires a combination of reagents, tools, and infrastructure. The following table details key resources.

Table 3: Essential Research Reagent Solutions and Tools for FAIR Catalysis Data

| Item / Tool | Category | Specific Example / Standard | Function in FAIR Implementation |

|---|---|---|---|

| Reference Catalysts | Research Reagent | EuroPt-1, Zeolyst zeolites [1] | Provides a benchmark material for comparing catalytic performance across different labs, ensuring interoperability of results. |

| Persistent Identifier | Infrastructure Service | Digital Object Identifier (DOI) [16] | Provides a permanent, unique identifier for a dataset, making it Findable and citable. |

| Metadata Standard | Documentation Tool | Domain-specific schema, README.txt template [16] | Provides a structured format for describing data, enabling Interoperability and Reusability. |

| Controlled Vocabulary | Documentation Tool | IUPAC terminology, OntoCat [14] | Standardizes terminology used in metadata and data, preventing ambiguity and ensuring Interoperability. |

| Data Repository | Infrastructure Service | CatTestHub [1], Zenodo, Figshare [14] | Hosts data, provides a DOI, indexes metadata for search, and facilitates Accessibility. |

| Standard Data Format | Data File | .csv, .json, .xml [14] | Ensures data is in an open, machine-readable format that remains Accessible and Interoperable over time. |

| Usage License | Legal Tool | Creative Commons (CC-BY, CC0) [17] | Legally encodes the terms for Reuse, removing ambiguity about how others may use the data. |

Data Relationships and Provenance in Catalysis Benchmarking

A key aspect of reusability is understanding how different digital objects in a research project are interconnected. The following diagram maps these critical relationships.

Diagram 2: Relationships between key digital objects in a FAIR catalysis dataset.

Application Note: Fundamentals of Catalytic Benchmarking

Background and Significance

Benchmarking serves as a critical methodology in catalytic research, enabling direct comparison of catalyst performance across different laboratories and research groups. The process involves systematic comparison of performance metrics against established standards, industry averages, or competitor results to identify strengths, weaknesses, and improvement opportunities [18]. For catalytic studies, this provides indispensable validation of new catalyst materials and processes, ensuring research quality and reproducibility.

According to established benchmarking principles, an effective benchmark report must include several key components: a clearly defined objective or KPI being measured, reliable benchmark data from both internal and external sources, a robust analysis framework, appropriate visual representation of data, and actionable insights with recommendations [18]. These elements ensure that catalytic benchmarking moves beyond simple data collection to provide meaningful guidance for research direction.

Key Benchmarking Initiatives in Catalysis

The evolution of catalytic benchmarking has seen several landmark initiatives, each addressing specific needs within the research community. The EUROPT series of conferences and special issues, including the upcoming EUROPT 2025, represents one sustained effort in advancing continuous optimization methodologies relevant to catalytic research [19]. These forums emphasize the importance of "advanced computational techniques, consistently supported by thorough and well-designed experimental validation" – a principle that directly applies to catalytic benchmarking protocols.

Contemporary research in single-atom catalysts (SACs) for the two-electron oxygen reduction reaction (2e- ORR) exemplifies modern benchmarking applications. As noted in recent reviews, "Single-atom catalysts (SACs) consist of individual metal atoms dispersed on a support, allowing for high structural tunability and cost-effectiveness" [11]. The unsaturated coordination environments and unique electronic structures of SACs significantly enhance their catalytic activity, while isolated active sites improve selectivity for hydrogen peroxide production, making systematic benchmarking particularly crucial for comparing performance across different SAC architectures.

Experimental Protocols and Methodologies

Protocol: Catalyst Performance Benchmarking for 2e- Oxygen Reduction Reaction

Objective and Scope

This protocol establishes standardized procedures for evaluating and benchmarking catalyst performance specifically for the two-electron oxygen reduction reaction (2e- ORR), which enables electrochemical hydrogen peroxide synthesis under ambient conditions [11]. The methodology allows for direct comparison of catalytic activity, selectivity, and stability across different catalyst materials, particularly single-atom catalysts (SACs).

Materials and Equipment

Table 1: Essential Research Reagents and Equipment for 2e- ORR Benchmarking

| Item | Specification | Function/Purpose |

|---|---|---|

| Working Electrode | Glassy carbon electrode (5 mm diameter) | Platform for catalyst ink deposition and electrochemical testing |

| Catalyst Ink | 5 mg catalyst, 750 μL isopropanol, 250 μL water, 40 μL Nafion | Uniform dispersion of catalyst material on electrode surface |

| Reference Electrode | Reversible Hydrogen Electrode (RHE) | Potential reference and calibration |

| Counter Electrode | Platinum wire | Completes electrochemical circuit |

| Electrolyte | 0.1 M KOH or 0.1 M HClO₄ (O₂-saturated) | Reaction medium with controlled pH and O₂ concentration |

| Rotating Ring-Disk Electrode (RRDE) | Pine Research Instrumentation | Measures disk current and ring current simultaneously |

| Electrochemical Workstation | Bi-potentiostat configuration | Controls electrode potentials and records current responses |

Detailed Experimental Procedure

Step 1: Catalyst Ink Preparation

- Precisely weigh 5.0 mg of catalyst material using analytical balance.

- Disperse catalyst in 750 μL isopropanol and 250 μL deionized water mixture.

- Add 40 μL of 5% Nafion solution as binder.

- Sonicate mixture for 60 minutes using ultrasonic bath to achieve homogeneous dispersion.

Step 2: Working Electrode Preparation

- Polish glassy carbon electrode sequentially with 0.3 μm and 0.05 μm alumina slurry on microcloth.

- Rinse thoroughly with deionized water between polishing steps.

- Deposit 10 μL of catalyst ink onto polished glassy carbon surface.

- Dry at room temperature for 15 minutes followed by 40°C for 10 minutes.

- Calculate catalyst loading mass based on ink concentration and deposition volume.
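The loading calculation in the last step can be worked through with the quantities given in this protocol (5 mg catalyst; 750 μL isopropanol, 250 μL water, and 40 μL Nafion solution; a 10 μL drop on a 5 mm disk). The sketch assumes the liquid volumes are additive and neglects the mass of Nafion solids, so the result is an estimate of catalyst loading only.

```python
import math


def ink_concentration_mg_per_ml(catalyst_mg, liquid_volumes_ul):
    """Catalyst concentration in the ink, assuming additive liquid volumes."""
    total_ml = sum(liquid_volumes_ul) / 1000.0
    return catalyst_mg / total_ml


def loading_mg_per_cm2(concentration_mg_per_ml, drop_ul, disk_diameter_mm):
    """Catalyst loading on the disk from drop volume and geometric area."""
    deposited_mg = concentration_mg_per_ml * drop_ul / 1000.0
    radius_cm = disk_diameter_mm / 2.0 / 10.0
    area_cm2 = math.pi * radius_cm ** 2
    return deposited_mg / area_cm2


conc = ink_concentration_mg_per_ml(5.0, [750, 250, 40])
loading = loading_mg_per_cm2(conc, drop_ul=10, disk_diameter_mm=5)
print(f"ink concentration: {conc:.2f} mg/mL")  # about 4.81 mg/mL
print(f"loading: {loading:.3f} mg/cm^2")       # about 0.245 mg/cm^2
```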

Step 3: Electrochemical Cell Assembly

- Assemble standard three-electrode system in electrochemical cell.

- Fill cell with appropriate electrolyte solution (0.1 M KOH for alkaline or 0.1 M HClO₄ for acidic conditions).

- Saturate electrolyte with oxygen by bubbling for 30 minutes prior to measurements.

- Maintain oxygen blanket above electrolyte during measurements.

Step 4: RRDE Measurements

- Set rotation speed to 1600 rpm for all measurements.

- Apply collection efficiency factor (N = 0.37) for hydrogen peroxide quantification.

- Perform cyclic voltammetry from 0.2 to 1.2 V vs. RHE at scan rate of 10 mV/s.

- Record both disk current (I_d) and ring current (I_r) simultaneously.

- Calculate hydrogen peroxide selectivity using the formula: H₂O₂% = 200 × (I_r/N) / (I_d + I_r/N)
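
The selectivity formula in Step 4 can be applied point by point to the recorded currents. The sketch below assumes current magnitudes are used (disk current is cathodic, ring current anodic) and takes N = 0.37 from the protocol. The electron transfer number, n = 4 × I_d / (I_d + I_r/N), is the usual companion RRDE relation; it is not stated in the protocol and is included here only as an illustrative addition. The example currents are invented.

```python
def h2o2_selectivity(i_disk, i_ring, n_collection=0.37):
    """H2O2 % = 200 * (I_r/N) / (I_d + I_r/N), using current magnitudes."""
    i_d = abs(i_disk)
    ring_corrected = abs(i_ring) / n_collection
    return 200.0 * ring_corrected / (i_d + ring_corrected)


def electron_transfer_number(i_disk, i_ring, n_collection=0.37):
    """n = 4 * I_d / (I_d + I_r/N); standard RRDE relation, not part of the protocol text."""
    i_d = abs(i_disk)
    ring_corrected = abs(i_ring) / n_collection
    return 4.0 * i_d / (i_d + ring_corrected)


# Hypothetical currents in mA at a single potential.
i_disk_ma, i_ring_ma = -0.50, 0.15
selectivity = h2o2_selectivity(i_disk_ma, i_ring_ma)
n_electrons = electron_transfer_number(i_disk_ma, i_ring_ma)
print(f"H2O2 selectivity: {selectivity:.1f} %")
print(f"Electron transfer number: {n_electrons:.2f}")
```

The two quantities are linked by n = 4 − H₂O₂%/50, so a pure 2e⁻ pathway (100% selectivity) corresponds to n = 2.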

Step 5: Stability Testing

- Perform accelerated durability test via potential cycling between 0.6 and 1.0 V vs. RHE.

- Conduct chronoamperometry at constant potential for minimum 10 hours.

- Measure catalyst performance retention after stability testing.

Data Analysis and Benchmarking Framework

Key Performance Indicators (KPIs)

Table 2: Catalytic Performance Benchmarking Metrics for 2e⁻ ORR

| Performance Metric | Calculation Method | Benchmark Reference | Target Value |

|---|---|---|---|

| Onset Potential | Potential at current density of 0.1 mA/cm² | Compared to standard catalysts (PtHg) | > 0.8 V vs. RHE |

| Half-wave Potential | Potential at half of diffusion-limited current | Industry benchmark: < 0.7 V vs. RHE | > 0.75 V vs. RHE |

| H₂O₂ Selectivity | H₂O₂% = 200 × (I_r/N) / (I_d + I_r/N) | Highest reported: >95% | >90% across potential range |

| Mass Activity | Current normalized to catalyst mass at 0.65 V | Commercial catalyst benchmarks | >50 A/g at 0.65 V |

| Turnover Frequency | Molecules converted per active site per second | SACs reference: 1-10 e⁻/site/s | >5 e⁻/site/s |

| Stability | Current/selectivity retention after 10,000 cycles | Industry standard: <40% degradation | <20% performance loss |
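
The onset and half-wave potentials in Table 2 are both "potential at a given current" quantities, so they can be read off a polarization curve by interpolation. The sketch below uses linear interpolation between adjacent points and takes the largest current magnitude in the trace as the diffusion-limited current; both choices are assumptions, and the polarization data are synthetic.

```python
def potential_at_current(potentials, current_densities, target):
    """Interpolate the potential at which |j| first reaches `target`,
    scanning from the highest potential downward. Returns None if never reached."""
    points = sorted(
        zip(potentials, (abs(j) for j in current_densities)), reverse=True
    )
    for (e_hi, j_hi), (e_lo, j_lo) in zip(points, points[1:]):
        if j_hi < target <= j_lo:
            fraction = (target - j_hi) / (j_lo - j_hi)
            return e_hi + fraction * (e_lo - e_hi)
    return None


# Synthetic disk polarization curve: potential in V vs. RHE, j in mA/cm^2.
potentials_v = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3]
j_ma_cm2 = [0.0, -0.05, -0.3, -1.2, -2.4, -2.9, -3.0]

onset_v = potential_at_current(potentials_v, j_ma_cm2, 0.1)  # 0.1 mA/cm^2 criterion
j_limiting = max(abs(j) for j in j_ma_cm2)  # assumed diffusion-limited current
half_wave_v = potential_at_current(potentials_v, j_ma_cm2, j_limiting / 2.0)

print(f"Onset potential: {onset_v:.3f} V vs. RHE")
print(f"Half-wave potential: {half_wave_v:.3f} V vs. RHE")
```

On real data the trace is usually background-corrected first, and the limiting current is taken from the plateau rather than from a single maximum.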

Benchmarking Analysis Protocol

- Data Normalization: Normalize all current values to geometric surface area, electroactive surface area, and catalyst loading mass.

- Statistical Validation: Perform minimum three independent measurements for each catalyst; report mean values with standard deviations.

- Reference Comparison: Include standard catalyst (commercial Pt/C or PtHg) in each experimental set for cross-validation.

- Uncertainty Quantification: Calculate measurement uncertainties for all reported performance metrics.
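
The normalization and statistical validation steps above can be sketched with the standard library alone. The example assumes the sample standard deviation (n − 1 denominator) is the reported spread and covers only geometric-area and mass normalization; normalization to electroactive surface area would need a separately measured area. The replicate values and function names are hypothetical.

```python
import statistics


def summarize_replicates(values, min_replicates=3):
    """Return (mean, sample standard deviation) for independent measurements."""
    if len(values) < min_replicates:
        raise ValueError(f"need at least {min_replicates} independent measurements")
    return statistics.mean(values), statistics.stdev(values)


def normalize_current(current_ma, geometric_area_cm2, catalyst_mass_mg):
    """Normalize a raw current to geometric area and to catalyst mass."""
    return {
        "mA_per_cm2_geo": current_ma / geometric_area_cm2,
        "A_per_g": current_ma / catalyst_mass_mg,  # mA/mg is numerically A/g
    }


# Hypothetical H2O2 selectivities (%) from three independent electrodes.
selectivity_runs = [91.2, 92.8, 90.4]
mean_sel, sd_sel = summarize_replicates(selectivity_runs)
print(f"H2O2 selectivity: {mean_sel:.1f} +/- {sd_sel:.1f} % (n = {len(selectivity_runs)})")

normalized = normalize_current(current_ma=0.5, geometric_area_cm2=0.196, catalyst_mass_mg=0.048)
print(normalized)
```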

Visualization of Benchmarking Workflows

Figure: Catalyst benchmarking workflow (diagram of the catalyst benchmarking protocol).

Figure: SACs optimization pathways (diagram of SACs optimization strategies).

Advanced Benchmarking Implementation

Protocol: Community-Based Benchmarking Initiative

Initiative Establishment

- Stakeholder Engagement: Identify and recruit key research laboratories and institutions with complementary expertise in catalytic research.

- Standard Development: Establish consensus on standardized testing protocols, reference materials, and data reporting formats.

- Data Infrastructure: Create centralized repository for benchmark data with controlled access and version management.

Implementation Framework

- Reference Materials: Develop and distribute certified reference catalyst materials to all participating laboratories.

- Interlaboratory Studies: Coordinate round-robin testing of standardized materials across multiple laboratories.

- Performance Validation: Establish statistical methods for evaluating interlaboratory reproducibility and data quality.

- Knowledge Transfer: Hold regular workshops and publish results to disseminate best practices and benchmark outcomes.
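
One way to evaluate interlaboratory reproducibility in a round-robin study is a one-way variance-components analysis, which separates within-laboratory repeatability from between-laboratory variation. The sketch below assumes a balanced design (the same number of replicates in every laboratory); the laboratory names and values are hypothetical, and this is one possible statistical method, not one prescribed by the initiative.

```python
import math
import statistics


def precision_components(results_by_lab):
    """Return (repeatability SD, between-lab SD, reproducibility SD)
    for a balanced round-robin data set."""
    replicate_counts = {len(values) for values in results_by_lab.values()}
    if len(replicate_counts) != 1:
        raise ValueError("this sketch assumes the same number of replicates per lab")
    n = replicate_counts.pop()

    # Repeatability variance: average of the within-lab variances.
    var_within = statistics.mean(
        statistics.variance(values) for values in results_by_lab.values()
    )
    # Between-lab variance: variance of lab means minus the share due to repeatability.
    lab_means = [statistics.mean(values) for values in results_by_lab.values()]
    var_between = max(0.0, statistics.variance(lab_means) - var_within / n)

    var_reproducibility = var_within + var_between
    return math.sqrt(var_within), math.sqrt(var_between), math.sqrt(var_reproducibility)


# Hypothetical H2O2 selectivities (%) for one reference catalyst.
round_robin = {
    "lab_A": [90.0, 91.0, 92.0],
    "lab_B": [88.0, 89.0, 90.0],
    "lab_C": [93.0, 94.0, 95.0],
}
s_r, s_L, s_R = precision_components(round_robin)
print(f"repeatability SD = {s_r:.2f}, between-lab SD = {s_L:.2f}, reproducibility SD = {s_R:.2f}")
```

A reproducibility SD much larger than the repeatability SD, as in this invented data set, points to systematic differences between laboratories rather than measurement noise.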

Data Management and Reporting Standards

Minimum Reporting Requirements

Table 3: Catalytic Benchmarking Reporting Standards

| Data Category | Required Information | Reporting Format |

|---|---|---|

| Catalyst Synthesis | Precursors, synthesis method, thermal treatment conditions | Detailed experimental section |

| Physical Characterization | BET surface area, metal loading, coordination structure | Quantitative values with uncertainty |

| Electrochemical Conditions | Electrolyte composition, pH, temperature, mass loading | Standardized metadata template |

| Performance Metrics | Onset potential, selectivity, mass activity, stability | Table with mean ± standard deviation |

| Testing History | Electrode preparation date, cell assembly details, reference electrodes | Laboratory notebook references |

| Data Processing | Background subtraction methods, normalization procedures | Transparent description of calculations |
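
The reporting requirements in Table 3 lend themselves to a machine-checkable metadata template. The sketch below encodes the table's categories as a dictionary of required fields and lists what a submitted record is missing. All field names are hypothetical choices made for illustration; the source does not define a schema.

```python
# Hypothetical field names derived from the categories in Table 3.
REQUIRED_FIELDS = {
    "catalyst_synthesis": ["precursors", "synthesis_method", "thermal_treatment"],
    "physical_characterization": [
        "bet_surface_area_m2_g", "metal_loading_wt_pct", "coordination_structure",
    ],
    "electrochemical_conditions": [
        "electrolyte", "ph", "temperature_c", "mass_loading_ug_cm2",
    ],
    "performance_metrics": [
        "onset_potential_v_rhe", "h2o2_selectivity_pct", "mass_activity_a_g", "stability",
    ],
    "testing_history": [
        "electrode_preparation_date", "cell_assembly", "reference_electrode",
    ],
    "data_processing": ["background_subtraction", "normalization"],
}


def missing_fields(record, required=REQUIRED_FIELDS):
    """Return 'category.field' entries that are absent or empty in `record`."""
    missing = []
    for category, fields in required.items():
        section = record.get(category, {})
        for field in fields:
            if section.get(field) in (None, ""):
                missing.append(f"{category}.{field}")
    return missing


# Deliberately incomplete example record.
example_record = {
    "catalyst_synthesis": {
        "precursors": ["metal salt", "carbon support"],
        "synthesis_method": "impregnation",
        "thermal_treatment": "900 C, 2 h, Ar",
    },
    "electrochemical_conditions": {
        "electrolyte": "0.1 M KOH", "ph": 13.0, "mass_loading_ug_cm2": 245.0,
    },
}
for entry in missing_fields(example_record):
    print("missing:", entry)
```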

Future Perspectives and Development

The evolution of community benchmarking initiatives continues to address emerging challenges in catalytic performance assessment. Current research focuses on developing more sophisticated benchmarking protocols that account for operational stability, scalability potential, and economic viability alongside fundamental performance metrics [11]. The integration of computational screening with experimental validation represents the next frontier in catalytic benchmarking, enabling more efficient identification of promising catalyst materials before extensive laboratory testing.

As the field advances, benchmarking initiatives must adapt to incorporate emerging characterization techniques and standardized testing protocols. Future developments will likely include automated high-throughput screening platforms, machine-learning assisted data analysis, and more sophisticated accelerated durability testing protocols that better predict long-term catalyst performance under practical operating conditions.

Advanced Methodologies and Tools: From AI to Standardized Experimental Protocols

The integration of artificial intelligence (AI) and machine learning (ML) is revolutionizing the field of catalytic kinetics, moving research beyond traditional trial-and-error approaches and theoretical simulations [7]. Accurate prediction of kinetic constants is fundamental for catalyst screening, reaction optimization, and mechanistic understanding [20]. This document outlines application notes and protocols for developing and benchmarking predictive ML models for kinetic constants, framed within the broader context of establishing robust benchmarking protocols for catalytic performance assessment [21].

A Hierarchical Framework for ML in Catalytic Kinetics

Machine learning applications in catalysis can be viewed as a hierarchical framework progressing from initial screening to physical insight, with each stage offering distinct capabilities for kinetic constant prediction [7].

Stage 1: Data-Driven Catalyst Screening and Kinetic Prediction

At this first level, ML models serve mainly as rapid surrogates for experiments or density functional theory (DFT) calculations, predicting catalytic activity and kinetic parameters from existing datasets [7]. This approach is particularly valuable for high-throughput screening across vast chemical spaces where first-principles calculations would be prohibitively expensive [22].

Key Applications:

- Prediction of adsorption energies, a critical parameter in kinetic models, using ML interatomic potentials [3]

- Forecasting catalytic activity and selectivity from catalyst composition and reaction conditions [23]

- Rapid screening of catalyst libraries for specific kinetic performance metrics [7]

Stage 2: Physics-Based Modeling and Feature Integration

Intermediate-level applications integrate physical laws and constraints into ML models, enhancing their predictive power and transferability [7]. This hybrid approach ensures predictions are consistent with fundamental catalytic principles.

Key Applications:

- Incorporating microkinetic modeling with ML predictions to maintain thermodynamic consistency [20]

- Using symbolic regression to discover physically meaningful expressions for rate constants [7]

- Integrating ML force fields with kinetic Monte Carlo (kMC) simulations to capture complex effects like site heterogeneity [20]
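
As a concrete illustration of thermodynamic consistency, consider an elementary step for which an ML model predicts a forward barrier and a reaction energy. Rather than predicting forward and reverse rate constants independently, the reverse barrier can be derived from the two predicted quantities so that the ratio k_f/k_r always equals the equilibrium constant. The sketch below assumes transition-state-theory (Eyring) rate constants with a transmission coefficient of 1 and treats the predicted energies as free energies; the numerical inputs are hypothetical.

```python
import math

KB_EV_PER_K = 8.617333262e-5  # Boltzmann constant, eV/K
H_EV_S = 4.135667696e-15      # Planck constant, eV*s


def eyring_rate(barrier_ev, temperature_k):
    """Rate constant k = (kB*T/h) * exp(-barrier / (kB*T)), in 1/s."""
    kt = KB_EV_PER_K * temperature_k
    return (kt / H_EV_S) * math.exp(-barrier_ev / kt)


def consistent_rate_pair(forward_barrier_ev, reaction_energy_ev, temperature_k):
    """Return (k_forward, k_reverse) with the reverse barrier fixed by thermodynamics."""
    reverse_barrier_ev = forward_barrier_ev - reaction_energy_ev
    if reverse_barrier_ev < 0:
        raise ValueError("predicted barrier is lower than the reaction energy")
    return (
        eyring_rate(forward_barrier_ev, temperature_k),
        eyring_rate(reverse_barrier_ev, temperature_k),
    )


# Hypothetical ML predictions for one elementary step.
barrier_ev, reaction_energy_ev, temperature_k = 0.75, -0.30, 298.15
k_f, k_r = consistent_rate_pair(barrier_ev, reaction_energy_ev, temperature_k)
k_eq = math.exp(-reaction_energy_ev / (KB_EV_PER_K * temperature_k))
print(f"k_f = {k_f:.3e} 1/s, k_r = {k_r:.3e} 1/s, k_f/k_r = {k_f / k_r:.3e}, K_eq = {k_eq:.3e}")
```

The check inside `consistent_rate_pair` also flags unphysical predictions in which the barrier would be smaller than the reaction energy of an endothermic step.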

Stage 3: Symbolic Regression and Theory-Oriented Interpretation

The most advanced applications use ML not merely for prediction but for mechanistic discovery and deriving general catalytic laws [7]. These approaches aim to extract fundamental knowledge about catalytic systems.

Key Applications:

- Automated generation of reaction networks and mechanisms [20]

- Identification of key descriptors governing catalytic kinetics through techniques like SISSO [7]

- Development of "self-driving models" that automatically construct, refine, and validate multiscale catalysis models against experimental data [20]

Machine Learning Algorithms and Workflows for Kinetic Prediction

Algorithm Selection for Kinetic Modeling

The selection of appropriate ML algorithms depends on dataset size, problem complexity, and interpretability requirements.

Table 1: ML Algorithms for Kinetic Constant Prediction

| Algorithm Category | Specific Methods | Best Use Cases for Kinetic Prediction | Interpretability |

|---|---|---|---|

| Tree-Based Methods | Random Forest, XGBoost, Gradient Boosting [22] | Medium-sized datasets, feature importance analysis | Medium (feature importance available) |

| Kernel Methods | Gaussian Process Regression (GPR), Support Vector Regression (SVR) [22] | Small datasets, uncertainty quantification | Medium to Low |

| Neural Networks | Artificial Neural Networks (ANN), Convolutional Neural Networks (CNN), Graph Neural Networks (GNN) [7] [22] | Large, complex datasets, image/spectral data, molecular structures | Low (require explainable AI techniques) |

| Ensemble Methods | Bayesian Inference-Chemical Reaction Neural Networks (B-CRNN) [22] | Microkinetic model parameter optimization, uncertainty propagation | Medium |

Standardized Model Development Workflow

A robust workflow is essential for developing reliable ML models for kinetic prediction.

Figure 1: Standardized workflow for developing ML models predicting kinetic constants.

Data Acquisition and Curation

- Data Sources: High-throughput experimental data, computational datasets (e.g., DFT calculations), structured databases, and literature mining [7] [22]

- Data Quality: Performance of ML models is highly dependent on data quality and volume [7]. Implement rigorous data validation and cleaning protocols

- Standardization: Adopt FAIR (Findable, Accessible, Interoperable, and Reusable) data principles [22]

- Preprocessing: Apply appropriate normalization, handling of missing data, and outlier detection
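
The preprocessing step can be illustrated with a short standard-library sketch. It assumes median imputation for missing values, an interquartile-range rule (1.5 × IQR) for outlier detection, and z-score normalization; these are common defaults chosen for illustration, not methods prescribed by the cited work. The activation barriers are invented.

```python
import statistics


def impute_median(values):
    """Replace None entries with the median of the observed values."""
    median = statistics.median(v for v in values if v is not None)
    return [median if v is None else v for v in values]


def iqr_outlier_indices(values, k=1.5):
    """Return indices of values outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    low, high = q1 - k * (q3 - q1), q3 + k * (q3 - q1)
    return [i for i, v in enumerate(values) if v < low or v > high]


def zscore(values):
    """Scale values to zero mean and unit sample standard deviation."""
    mean, sd = statistics.mean(values), statistics.stdev(values)
    return [(v - mean) / sd for v in values]


# Hypothetical activation barriers in eV, with one missing entry and one suspicious value.
barriers_ev = [0.62, 0.58, None, 0.71, 0.66, 3.90, 0.60]

filled = impute_median(barriers_ev)
outliers = iqr_outlier_indices(filled)
cleaned = [v for i, v in enumerate(filled) if i not in outliers]
scaled = zscore(cleaned)

print("outlier indices:", outliers)
print("scaled values:", [round(z, 2) for z in scaled])
```

Flagged points are better inspected than silently dropped, since an apparent outlier in kinetic data can reflect a genuinely different mechanism.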

Feature Engineering and Descriptor Selection

- Catalyst Descriptors: Electronic structure parameters (d-band center), geometric descriptors (coordination number), elemental properties (electronegativity, atomic radius) [7]

- Reaction Descriptors: Reactant/product descriptors, thermodynamic parameters (reaction energy, activation barrier) [7]

- Process Conditions: Temperature, pressure, concentration [22]

- Automated Feature Engineering: Utilize techniques like Automatic Feature Engineering (AFE) and Sure Independence Screening and Sparsifying Operator (SISSO) for high-dimensional descriptor spaces [7]

Model Training and Validation

- Training Approaches: Supervised learning for labeled kinetic data, unsupervised learning for pattern discovery in kinetic datasets [7]

- Validation Techniques: k-fold cross-validation, leave-one-out cross-validation (LOOCV), temporal validation for time-series kinetic data [22]

- Performance Metrics: Mean Absolute Error (MAE), Root Mean Square Error (RMSE), coefficient of determination (R²) for kinetic predictions [22]

- Uncertainty Quantification: Essential for reliable kinetic predictions; implemented through Bayesian methods or ensemble approaches [20]
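
The validation techniques and performance metrics listed above can be sketched without external libraries. The example fits a linearized Arrhenius model, ln k = ln A − E_a/(R·T), by least squares and evaluates it with k-fold cross-validation, reporting MAE, RMSE, and R² on the held-out points. The data are synthetic (E_a = 50 kJ/mol, A = 10⁷ s⁻¹, with a small deterministic perturbation), and the folds are interleaved rather than shuffled so the result is reproducible; both are assumptions made for illustration.

```python
import math

R_J_PER_MOL_K = 8.314462618  # gas constant


def fit_line(xs, ys):
    """Ordinary least-squares fit y = slope * x + intercept."""
    mean_x, mean_y = sum(xs) / len(xs), sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sxx
    return slope, mean_y - slope * mean_x


def regression_metrics(y_true, y_pred):
    """Return (MAE, RMSE, R^2)."""
    errors = [p - t for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errors) / len(errors)
    ss_res = sum(e * e for e in errors)
    rmse = math.sqrt(ss_res / len(errors))
    mean_true = sum(y_true) / len(y_true)
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return mae, rmse, 1.0 - ss_res / ss_tot


def k_fold_cv(xs, ys, k=5):
    """Pool held-out predictions over k interleaved folds and score them."""
    indices = list(range(len(xs)))
    y_true, y_pred = [], []
    for fold in range(k):
        test = indices[fold::k]
        train = [i for i in indices if i not in test]
        slope, intercept = fit_line([xs[i] for i in train], [ys[i] for i in train])
        y_true += [ys[i] for i in test]
        y_pred += [slope * xs[i] + intercept for i in test]
    return regression_metrics(y_true, y_pred)


# Synthetic ln(k) data between 300 K and 570 K.
temperatures_k = [300 + 30 * i for i in range(10)]
inv_t = [1.0 / t for t in temperatures_k]
ln_k = [
    math.log(1e7) - 50_000.0 / (R_J_PER_MOL_K * t) + 0.05 * (-1) ** i
    for i, t in enumerate(temperatures_k)
]

mae, rmse, r2 = k_fold_cv(inv_t, ln_k, k=5)
print(f"MAE = {mae:.3f}, RMSE = {rmse:.3f}, R^2 = {r2:.4f} (in ln k units)")
```

Setting k equal to the number of data points turns the same function into leave-one-out cross-validation.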

Benchmarking Protocols for Kinetic Constant Prediction

Establishing standardized benchmarking protocols is critical for fair comparison of different ML approaches and ensuring research reproducibility [21].

Benchmarking Datasets and Metrics

Table 2: Essential Components for Benchmarking ML Models in Catalytic Kinetics

| Benchmarking Component | Description | Examples/Standards |

|---|---|---|

| Standardized Datasets | Curated datasets for training and testing | CatBench framework for adsorption energy prediction [3], Open Catalyst Project datasets |

| Performance Metrics | Quantitative measures for model evaluation | MAE, RMSE, R² for kinetic parameters; computational efficiency metrics [22] |

| Baseline Models | Standard reference models for comparison | DFT calculations, experimental measurements, traditional kinetic models [21] |

| Uncertainty Quantification | Assessment of prediction reliability | Bayesian methods, ensemble approaches, confidence intervals [20] |
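
The "ensemble approaches" entry for uncertainty quantification can be illustrated with a bootstrap ensemble: the same model is refitted on resampled data, and the spread of the ensemble predictions serves as an uncertainty estimate. The sketch below reuses a linearized Arrhenius fit on synthetic data; the ensemble size, random seed, and the requirement of at least three distinct points per resample are arbitrary choices made for illustration.

```python
import math
import random
import statistics

R_J_PER_MOL_K = 8.314462618  # gas constant


def fit_line(xs, ys):
    """Ordinary least-squares fit y = slope * x + intercept."""
    mean_x, mean_y = sum(xs) / len(xs), sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sxx
    return slope, mean_y - slope * mean_x


def bootstrap_prediction(xs, ys, x_new, n_models=200, seed=0):
    """Return (mean, standard deviation) of predictions from a bootstrap ensemble."""
    rng = random.Random(seed)
    n = len(xs)
    predictions = []
    while len(predictions) < n_models:
        sample = [rng.randrange(n) for _ in range(n)]
        if len(set(sample)) < 3:  # skip degenerate resamples
            continue
        slope, intercept = fit_line([xs[i] for i in sample], [ys[i] for i in sample])
        predictions.append(slope * x_new + intercept)
    return statistics.mean(predictions), statistics.stdev(predictions)


# Synthetic ln(k) data (E_a = 50 kJ/mol, A = 1e7 1/s, small deterministic perturbation).
temperatures_k = [300 + 30 * i for i in range(10)]
inv_t = [1.0 / t for t in temperatures_k]
ln_k = [
    math.log(1e7) - 50_000.0 / (R_J_PER_MOL_K * t) + 0.05 * (-1) ** i
    for i, t in enumerate(temperatures_k)
]

ln_k_mean, ln_k_sd = bootstrap_prediction(inv_t, ln_k, x_new=1.0 / 450.0)
ln_k_true = math.log(1e7) - 50_000.0 / (R_J_PER_MOL_K * 450.0)
print(f"predicted ln k at 450 K: {ln_k_mean:.2f} +/- {ln_k_sd:.2f} (noise-free value {ln_k_true:.2f})")
```

Reporting the ensemble spread alongside MAE or RMSE lets a benchmark compare models on how well calibrated their uncertainties are, not only on their point accuracy.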

The CatBench Framework for ML Interatomic Potentials

The CatBench framework provides a specialized benchmarking approach for ML interatomic potentials in predicting adsorption energies, a critical parameter for kinetic constant estimation [3]. This framework addresses:

- Standardized evaluation across diverse catalyst structures and adsorbates