CatTestHub: The Open-Access Benchmarking Database Revolutionizing Experimental Catalysis Research

Abstract

This article explores CatTestHub, an innovative open-access database designed to standardize and benchmark experimental data in heterogeneous catalysis. Aimed at researchers and scientists, we cover its foundational FAIR data principles and core structure, provide a methodological guide for its application in data reporting and analysis, address common data troubleshooting and performance optimization strategies, and validate its role as a community-wide standard for comparing catalytic materials and technologies. Together, these sections show how CatTestHub addresses critical reproducibility challenges and accelerates data-driven discovery in catalysis and related fields.

Understanding CatTestHub: A Foundational Guide to the FAIR Catalysis Database

The field of experimental catalysis is undergoing a profound transformation, driven by the increasing complexity of catalytic materials and the emergence of data-driven research paradigms. The ability to quantitatively compare new catalytic materials and technologies is fundamentally hindered by the widespread lack of consistently collected catalytic data [1]. While certain catalytic chemistries have been studied across decades of scientific research, meaningful quantitative comparisons based on literature information remain challenging due to significant variability in reaction conditions, types of reported data, and reporting procedures [1]. This reproducibility crisis represents a critical bottleneck in catalyst discovery and development, particularly as the field faces growing demands for sustainable energy technologies and carbon-neutral chemical processes [2]. The CatTestHub database emerges as a strategic response to these challenges, providing an open-access platform dedicated to benchmarking experimental heterogeneous catalysis data through systematically reported catalytic activity information for selected probe chemistries [1].

CatTestHub: A Community-Wide Benchmarking Resource

Database Architecture and Design Principles

CatTestHub is designed as an open-access database that combines systematically reported catalytic activity data with relevant material characterization and reactor configuration information [1]. This integrated approach provides a collection of catalytic benchmarks for distinct classes of active site functionality, addressing a critical gap in the catalysis research infrastructure. Through key choices in data access, availability, and traceability, CatTestHub seeks to balance the fundamental information needs of chemical catalysis with the FAIR (Findable, Accessible, Interoperable, and Reusable) data design principles that are essential for modern scientific discovery [1]. The database's current iteration spans over 250 unique experimental data points, collected over 24 solid catalysts and covering 3 distinct catalytic chemistries [1]. This curated collection serves as a foundation for a broader community-wide benchmarking effort, with a roadmap for continuous expansion, primarily through the addition of kinetic information on select catalytic systems by members of the heterogeneous catalysis community.

Current Scope and Expansion Roadmap

Table: CatTestHub Database Current Scope and Metrics

| Aspect | Current Capacity | Expansion Target |
|---|---|---|
| Experimental Data Points | 250+ unique points | Continuous community addition |
| Catalyst Systems | 24 solid catalysts | Expanded material classes |
| Catalytic Chemistries | 3 distinct reactions | Broad probe reaction set |
| Data Types | Activity, characterization, reactor config | Multi-modal data integration |

The architectural framework of CatTestHub is specifically engineered to overcome the limitations of conventional literature-based data extraction, which is increasingly challenging due to the rapid pace of publication. As noted in recent analyses, catalysis practitioners face a daunting task of keeping abreast of the latest developments in their respective fields, with traditional literature searches often spanning several weeks or months [2]. This challenge is particularly acute for fast-growing catalyst families like single-atom catalysts (SACs), which have seen exponential growth in publications over the past decade [2]. CatTestHub's structured data collection approach significantly reduces this burden by providing standardized, machine-readable data formats that enable more efficient literature analysis and data reuse.

The Standardization Imperative: Challenges and Solutions

The Data Reporting Gap in Experimental Catalysis

A critical issue hampering machine-assisted analysis in catalysis is the profound lack of standardization in reporting protocols [2]. Conventional synthesis procedures are typically reported within the "Methods" sections of scientific articles as unstructured natural language-based textual descriptions, creating significant barriers to automated extraction and analysis [2]. This problem is particularly evident in the synthesis of complex catalyst systems like single-atom heterogeneous catalysts (SACs), where synthetic approaches encompass various steps including mixing, wet deposition, pyrolysis, filtering, washing, and annealing, each with multiple relevant parameters [2]. The absence of consistent reporting standards for these parameters fundamentally limits the reproducibility and machine-readability of catalytic data, ultimately slowing the pace of discovery and validation in the field.

Language Models and Protocol Standardization

Recent advances in natural language processing offer promising solutions to the data extraction challenges in catalysis research. Transformer models have demonstrated capability in converting unstructured synthesis descriptions into structured, machine-readable sequences of information [2]. In one proof-of-concept application, a specialized transformer model (ACE) was shown to convert single-atom catalyst protocols into action sequences with associated parameters, covering all steps required for replicating the synthesis [2]. This approach achieved an overall Levenshtein similarity of 0.66, capturing approximately 66% of information from synthesis protocols into correct action sequences [2]. The implementation of such models can dramatically accelerate literature review processes, reducing the time investment for comprehensive literature analysis by over 50-fold according to some estimates [2].
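
To make the reported metric concrete, the following minimal sketch computes a Levenshtein similarity between a reference action sequence and a predicted one. It assumes the similarity is normalized as one minus the edit distance divided by the longer sequence length; the exact normalization used in [2] is not stated here, and the action names are illustrative only.

```python
from typing import Sequence


def levenshtein_distance(a: Sequence[str], b: Sequence[str]) -> int:
    """Minimum number of insertions, deletions, and substitutions turning a into b."""
    previous = list(range(len(b) + 1))
    for i, item_a in enumerate(a, start=1):
        current = [i]
        for j, item_b in enumerate(b, start=1):
            cost = 0 if item_a == item_b else 1
            current.append(min(
                previous[j] + 1,         # delete item_a
                current[j - 1] + 1,      # insert item_b
                previous[j - 1] + cost,  # substitute or match
            ))
        previous = current
    return previous[-1]


def levenshtein_similarity(a: Sequence[str], b: Sequence[str]) -> float:
    """Assumed normalization: 1 - distance / length of the longer sequence."""
    longest = max(len(a), len(b))
    if longest == 0:
        return 1.0
    return 1.0 - levenshtein_distance(a, b) / longest


# Hypothetical action sequences (not taken from the source).
reference = ["mix", "dry", "pyrolyze", "wash", "dry"]
predicted = ["mix", "pyrolyze", "wash", "anneal"]
similarity = levenshtein_similarity(reference, predicted)
```

Operating on whole action tokens rather than characters means a missed or mislabeled synthesis step counts as one edit, which matches the idea of scoring action sequences.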

Guidelines for Machine-Readable Synthesis Procedures

To address the critical issue of non-standardized synthesis reporting, researchers have proposed specific guidelines for writing protocols to significantly improve machine-readability [2]. These guidelines emphasize consistent terminology for synthesis steps, explicit reporting of all relevant parameters, and structured formatting that facilitates automated extraction. Comparative analyses demonstrate that when synthesis protocols are modified according to these guidelines, transformer models show significant performance enhancement in information extraction accuracy [2]. This standardization enables more reliable statistical inference of synthesis trends and applications, ultimately expediting literature review and analysis while fostering better reproducibility across the research community.

Experimental Protocols and Data Curation Framework

Data Collection and Curation Methodology

The CatTestHub database employs a systematic approach to data collection and curation designed to ensure consistency and reliability across experimental measurements. Each data entry incorporates detailed information on reaction conditions, material properties, and performance metrics, ensuring transparency and interoperability [1]. This multimodal data organization includes structured information on reactor configurations, catalyst characterization results, and kinetic performance measurements, creating a comprehensive resource for benchmarking and validation studies. The database structure is specifically designed to bridge the gap between experimental and computational research, allowing for improved benchmarking and predictive modeling [3].

Action-Term Annotation for Synthesis Protocols

A key innovation in the CatTestHub framework is the implementation of action-term annotation for synthesis procedures. This approach identifies the most commonly used synthetic steps that serve as action terms for annotation purposes [2]. Each step involves capturing relevant parameters such as temperature, temperature ramp, atmosphere, and duration for thermal treatments like pyrolysis. These details are clearly defined and can be customized depending on the required level of experimental detail. The annotation process involves manually labeling synthesis paragraphs using dedicated software, creating a structured dataset that facilitates machine learning and automated analysis [2]. This structured representation of synthesis protocols enables more effective knowledge transfer and experimental replication across different research groups.

Table: Essential Parameters for Catalysis Synthesis Protocol Reporting

| Synthesis Step | Critical Parameters | Reporting Standard |
|---|---|---|
| Pyrolysis | Temperature, ramp rate, atmosphere, duration | °C, °C/min, gas composition, hours |
| Annealing | Temperature, atmosphere, duration | °C, gas composition, hours |
| Precursor Mixing | Concentrations, solvents, mixing method | mol/L, solvent identity, rpm/time |
| Washing | Solvent, volume, cycles, temperature | Solvent identity, mL, number, °C |
| Drying | Temperature, atmosphere, pressure | °C, gas composition, mbar |
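
As an illustration of how one row of this table could be captured in machine-readable form, the sketch below models a pyrolysis step as a small record and lists which parameters were left unreported. The class name, field names, and example values are hypothetical and are not part of CatTestHub or of the annotation scheme in [2].

```python
from dataclasses import dataclass, asdict
from typing import Optional


@dataclass
class PyrolysisStep:
    """One annotated thermal step; units follow the table (°C, °C/min, hours)."""
    temperature_c: Optional[float] = None
    ramp_rate_c_per_min: Optional[float] = None
    atmosphere: Optional[str] = None
    duration_h: Optional[float] = None


def missing_parameters(step: PyrolysisStep) -> list[str]:
    """Return the names of parameters that were not reported."""
    return [name for name, value in asdict(step).items() if value is None]


# Hypothetical protocol text: "pyrolyzed at 900 °C under N2 for 2 h" (no ramp rate given).
step = PyrolysisStep(temperature_c=900.0, atmosphere="N2", duration_h=2.0)
missing = missing_parameters(step)
```

A record like this makes an omitted ramp rate visible at annotation time instead of leaving it implicit in free text.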

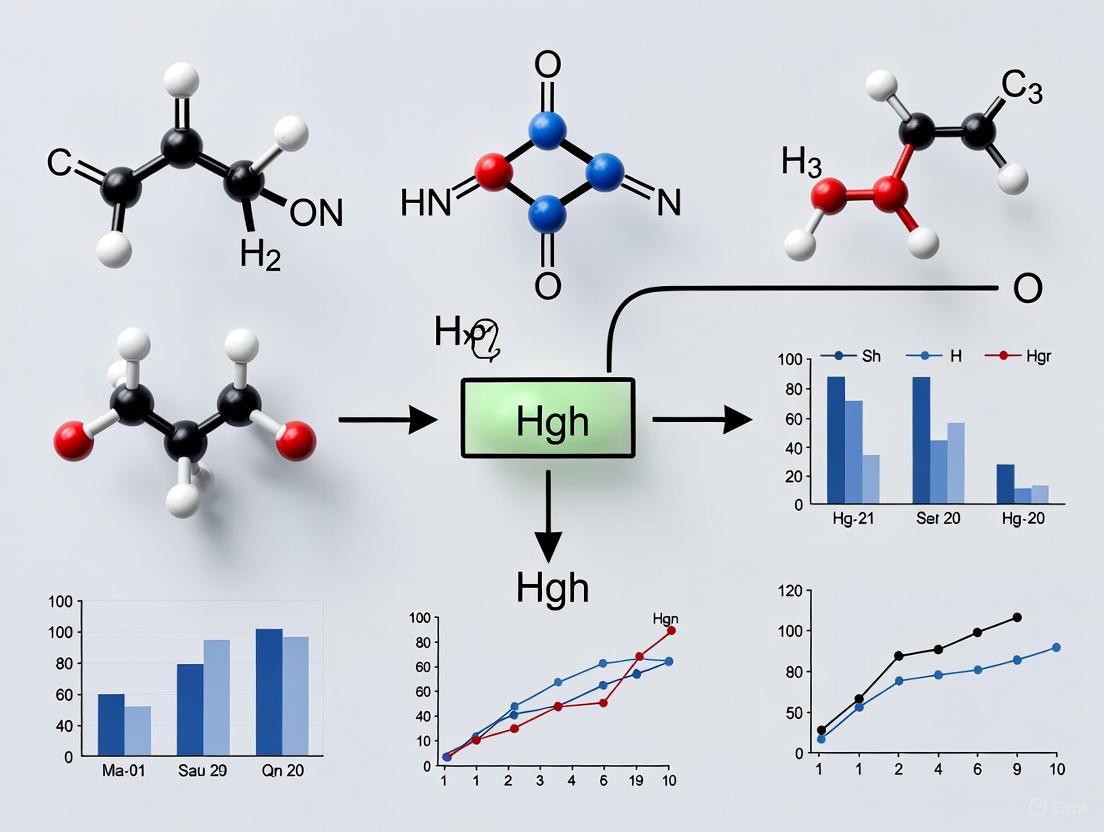

Visualization of Catalysis Data Workflow

The following diagram illustrates the integrated workflow for catalysis data standardization, from experimental synthesis to data repository integration, as implemented in the CatTestHub framework:

The Researcher's Toolkit: Essential Materials and Reagents

Table: Key Research Reagent Solutions for Catalysis Experimental Validation

| Reagent/Material | Function | Application Example |
|---|---|---|
| Zeolitic Imidazolate Frameworks (ZIF-8) | High-surface-area catalyst support | Single-atom catalyst carrier for oxygen reduction reaction [2] |
| Metal Precursors (Chlorides, Nitrates) | Active site formation | Fe-based SAC precursors for electrocatalytic applications [2] |
| Alloy Nanoparticles (Pt3Ir, Pt3Ru1/2Co1/2) | Bimetallic active sites | NH3 electrooxidation catalysts identified via volcano plots [4] |
| Single-Atom Alloys (Rh1Cu) | Isolated active sites | Propane dehydrogenation with enhanced selectivity [4] |
| Metal-Organic Frameworks (PCN-250) | Tunable porous scaffolds | Light alkane C–H bond activation with N2O oxidant [4] |
| Ion-doped CoP | Hydrogen evolution catalyst | Alkaline HER with optimized H adsorption free energy [4] |

The CatTestHub database represents a transformative approach to addressing the critical challenges of standardization and reproducibility in experimental catalysis. By providing a structured, open-access platform for benchmarking catalytic materials, CatTestHub enables more rigorous comparison of catalytic performance across different laboratories and experimental conditions [1]. The integration of standardized synthesis protocols, comprehensive material characterization data, and detailed reaction kinetic information creates a foundation for accelerated catalyst discovery and validation. As the catalysis research community continues to embrace these standardized approaches to data collection, metadata inclusion, and accessibility, the field will be better positioned to overcome existing reproducibility challenges and fully leverage emerging opportunities in machine learning and data-driven catalyst design [3]. The continued expansion and adoption of platforms like CatTestHub will be essential for advancing the development of next-generation catalytic technologies needed for sustainable energy and chemical processing.

The field of heterogeneous catalysis research faces a significant challenge: the inability to quantitatively compare newly developed catalytic materials and technologies due to inconsistent data reporting across the scientific literature. Even for extensively studied catalytic chemistries, quantitative comparisons based on existing literature are hindered by substantial variability in reaction conditions, types of reported data, and reporting procedures [1] [5]. This lack of standardization prevents researchers from definitively determining whether a newly synthesized catalyst genuinely outperforms existing materials or if a reported rate of turnover is free from corrupting influences like diffusional limitations [5].

CatTestHub emerges as a direct response to this challenge, presenting an open-access database specifically dedicated to benchmarking experimental heterogeneous catalysis data [1]. By combining systematically reported catalytic activity data for selected probe chemistries with relevant material characterization and reactor configuration information, this platform provides a collection of catalytic benchmarks for distinct classes of active site functionality [1] [6]. The database is designed to balance the fundamental information needs of chemical catalysis with the FAIR data principles (Findability, Accessibility, Interoperability, and Reuse), so that its contents remain usable by the entire research community [5].

The CatTestHub Mission: Core Principles and Design Philosophy

Core Architectural Framework

The design of CatTestHub's database architecture was fundamentally informed by the need for practical utility and long-term accessibility. Unlike specialized database structures that may become obsolete, CatTestHub implements a simple spreadsheet-based format that offers ease of findability and is likely to remain readily accessible for the foreseeable future [5]. This intentional design choice reflects the platform's commitment to serving as a persistent community resource rather than a specialized tool with limited accessibility.

The database curation process involves the intentional collection of observable macroscopic quantities measured under well-defined reaction conditions, detailed descriptions of reaction conditions and parameters, and supporting characterization information for the various catalysts investigated [5]. This comprehensive approach ensures that researchers can not only access catalytic performance data but also understand the material properties and experimental contexts that produced those results.

Implementation of FAIR Data Principles

CatTestHub embodies its open-access philosophy through rigorous implementation of FAIR data principles:

Findability and Accessibility: The database is available online as a spreadsheet (cpec.umn.edu/cattesthub), providing users with ease of access and the capability to download and reuse data [5]. This open-access model ensures that economic and institutional barriers do not prevent researchers from accessing critical benchmarking data.

Interoperability and Reuse: Through the use of unique identifiers in the form of digital object identifiers (DOI), ORCID, and funding acknowledgements for all data, CatTestHub provides electronic means for accountability, intellectual credit, and traceability [5]. This infrastructure ensures that data contributors receive appropriate credit while maintaining clear provenance for all hosted information.
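
Identifiers are only useful for traceability if they are entered correctly. As a minimal sketch of a check a contributor could run before submitting data, the code below validates the final check character of an ORCID iD, which is computed with the ISO 7064 MOD 11-2 algorithm. The function names are illustrative; nothing here is confirmed to be part of CatTestHub's own tooling.

```python
def orcid_check_character(base_digits: str) -> str:
    """Compute the ISO 7064 MOD 11-2 check character for the first 15 digits."""
    total = 0
    for ch in base_digits:
        total = (total + int(ch)) * 2
    result = (12 - total % 11) % 11
    return "X" if result == 10 else str(result)


def is_valid_orcid(orcid: str) -> bool:
    """Check length, digit content, and check character of a hyphenated ORCID iD."""
    compact = orcid.replace("-", "").upper()
    base = compact[:15]
    if len(compact) != 16 or not (base.isascii() and base.isdigit()):
        return False
    return orcid_check_character(base) == compact[15]


# 0000-0002-1825-0097 is the sample iD used in ORCID's own documentation.
sample_ok = is_valid_orcid("0000-0002-1825-0097")
```

A checksum test catches typing errors only; it does not confirm that the iD belongs to the named contributor.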

The philosophy behind CatTestHub aligns with the broader ethical imperative of open scholarship, which views knowledge as a public good that should be accessible to all researchers regardless of their institutional affiliations or geographic location [7] [8]. By removing economic and institutional barriers, CatTestHub enables more rapid scientific progress, fosters global collaboration, and increases the overall transparency of research methodologies in heterogeneous catalysis [7].

Quantitative Scope: Current Database Inventory

CatTestHub's current iteration encompasses a substantial foundation of experimental data for benchmarking purposes. The table below summarizes the quantitative scope of the database in its present form:

Table 1: Current Quantitative Scope of CatTestHub Database

| Database Component | Current Scale | Examples/Specifics |
|---|---|---|
| Experimental Data Points | Over 250 unique points [1] [6] | Systematically reported catalytic activity data |
| Solid Catalysts | 24 distinct materials [1] [6] | Metal and solid acid catalysts |
| Catalytic Chemistries | 3 distinct probe reactions [1] [6] | Methanol decomposition, formic acid decomposition, Hofmann elimination of alkylamines |

The database currently hosts two primary classes of catalysts with associated probe reactions designed to benchmark specific types of catalytic functionality:

Table 2: Catalyst Classes and Probe Reactions in CatTestHub

| Catalyst Class | Probe Reactions | Active Site Functionality Probed |
|---|---|---|
| Metal Catalysts | Decomposition of methanol [5] [9] | Metallic active sites for dehydrogenation |
| Metal Catalysts | Decomposition of formic acid [5] [9] | Metallic active sites for decomposition pathways |
| Solid Acid Catalysts | Hofmann elimination of alkylamines [5] [9] | Brønsted-acid sites in aluminosilicate zeolites |

Experimental Protocols and Methodologies

Workflow for Catalytic Benchmarking

The process of establishing reliable catalytic benchmarks in CatTestHub follows a systematic workflow that ensures data quality and reproducibility. The methodologies below describe that workflow for individual probe reactions.

Detailed Methodologies for Probe Reactions

Methanol Decomposition over Metal Catalysts

Objective: To measure the dehydrogenation activity of metal catalysts under standardized conditions [5].

Materials and Reagents:

- Methanol (>99.9%, Sigma-Aldrich 34860-1L-R) [5]

- Metal Catalysts: Pt/SiO₂ (Sigma-Aldrich 520691), Pt/C (Strem Chemicals 7440-06-04), Pd/C (Strem Chemicals 7440-05-03), Ru/C (Strem Chemicals 7440-18-8), Rh/C (Strem Chemicals 7440-16-6), Ir/C (ThermoFisher 10609149) [5]

- Gases: Nitrogen (99.999%, Ivey Industries), Hydrogen (99.999%, Airgas) [5]

Experimental Protocol:

- Catalyst Pretreatment: Reduce catalyst samples in flowing hydrogen at 573 K for 1 hour prior to reaction [5].

- Reaction Conditions: Maintain reactor temperature between 448-644 K with methanol partial pressure of 10 kPa in nitrogen balance [5].

- Product Analysis: Quantify hydrogen production rates using gas chromatography [5].

- Activity Calculation: Determine turnover frequencies (TOF) based on active metal sites quantified by H₂ chemisorption [5].
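
The activity calculation in the last step amounts to dividing a measured rate by a count of active sites. The sketch below shows that arithmetic under a loudly stated assumption: H₂ adsorbs dissociatively with one H atom per surface metal atom, so each mole of H₂ taken up counts two moles of sites. This stoichiometry is a common convention and is not confirmed by the source for these catalysts; the function name and numbers are illustrative.

```python
def turnover_frequency(rate_mol_per_g_s: float,
                       h2_uptake_mol_per_g: float,
                       h_atoms_per_site: float = 1.0) -> float:
    """Return TOF in s^-1 from a mass-normalized rate and an H2 chemisorption uptake.

    Assumes dissociative adsorption: sites = 2 * H2 uptake / (H atoms per site).
    """
    if h2_uptake_mol_per_g <= 0 or h_atoms_per_site <= 0:
        raise ValueError("uptake and stoichiometry must be positive")
    sites_mol_per_g = 2.0 * h2_uptake_mol_per_g / h_atoms_per_site
    return rate_mol_per_g_s / sites_mol_per_g


# Hypothetical values: 2.0e-6 mol g^-1 s^-1 rate, 5.0e-5 mol H2 g^-1 uptake.
tof = turnover_frequency(2.0e-6, 5.0e-5)
```

Reporting the assumed adsorption stoichiometry alongside the TOF lets other groups recompute the value under a different convention.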

Hofmann Elimination over Solid Acid Catalysts

Objective: To quantify Brønsted acid site density and strength in aluminosilicate zeolites [5].

Materials and Reagents:

- Alkylamines: Tetramethylammonium (TMA), trimethylpropylammonium (TMP), dimethyldipropylammonium (DMP) [5]

- Zeolite Catalysts: H-ZSM-5, H-Y, and other framework types with varying Si/Al ratios [5]

Experimental Protocol:

- Catalyst Activation: Pretreat zeolites under oxygen flow at 773 K for 1 hour to remove organic contaminants [5].

- Reaction Conditions: Conduct reactions at 473 K with alkylamine partial pressure of 0.5 kPa in helium balance [5].

- Product Analysis: Monitor alkene products (propylene, butene) using online mass spectrometry [5].

- Acidity Quantification: Calculate Brønsted acid site densities from alkene production rates [5].

Research Reagent Solutions

Table 3: Essential Research Reagents for Catalytic Benchmarking

| Reagent/Catalyst | Source/Provider | Function in Benchmarking |
|---|---|---|
| Methanol (≥99.9%) | Sigma-Aldrich (34860-1L-R) [5] | Probe molecule for metal-catalyzed dehydrogenation |
| Pt/SiO₂ | Sigma-Aldrich (520691) [5] | Reference metal catalyst for activity comparison |
| H-ZSM-5 Zeolite | Commercial sources or standardized synthesis [5] | Reference solid acid catalyst for acidity measurements |
| Tetramethylammonium Ions | Various chemical suppliers [5] | Probe molecules for quantifying Brønsted acid sites |
| Nitrogen Carrier Gas (99.999%) | Ivey Industries [5] | Inert carrier gas for reaction studies |

Database Structure and Community Integration

CatTestHub employs a structured architecture designed to accommodate diverse data types while maintaining consistency and searchability.

Community-Driven Expansion Model

CatTestHub operates on a community-driven model where the quality and utility of the benchmark is continuously improved through additions of kinetic information on select catalytic systems by members of the heterogeneous catalysis community at large [1] [9]. This approach mirrors successful community benchmarking efforts in other scientific fields, where collective participation ensures comprehensive coverage and validation.

The platform's roadmap for expansion relies primarily on this continuous addition of kinetic information by the broader research community [1] [6]. This strategy allows CatTestHub to evolve beyond its initial scope of over 250 experimental data points across 24 solid catalysts and 3 catalytic chemistries, gradually encompassing a wider range of materials, reactions, and conditions.

Future Directions and Community Impact

The development of CatTestHub represents a significant step toward addressing the reproducibility crisis in experimental catalysis by providing standardized benchmarks that all researchers can use to validate their experimental systems and methodologies [5]. This function is particularly valuable for contextualizing new catalytic discoveries and validating novel measurement techniques.

As the database expands through community contributions, it has the potential to enable large-scale comparative studies across different catalyst classes and reaction types, potentially revealing underlying relationships between material properties and catalytic function that would be difficult to discern from disconnected literature reports. Furthermore, the systematically collected experimental data in CatTestHub provides an essential validation resource for computational catalysis efforts, creating opportunities for closer integration between theoretical predictions and experimental measurements in catalyst design [5].

By maintaining its commitment to open-access principles and community governance, CatTestHub establishes itself not merely as a static repository of data, but as a dynamic community infrastructure that supports the advancement of heterogeneous catalysis research through standardized benchmarking, transparent reporting, and collaborative knowledge building.

The management of experimental catalysis data presents a significant challenge for researchers, scientists, and drug development professionals. The variability in reaction conditions, types of reported data, and reporting procedures often hinders the quantitative comparison of catalytic materials and technologies based on literature information [1]. The CatTestHub database emerges as a solution to this problem, providing an open-access platform dedicated to benchmarking experimental heterogeneous catalysis data [1]. This document outlines the application notes and protocols for navigating and utilizing its foundational structure, which, for many research groups, begins as an organized spreadsheet-based system before potential migration to a full-scale database management system (DBMS). A well-designed spreadsheet architecture serves as the critical first step in ensuring data consistency, integrity, and accessibility, forming the basis for informed decision-making and reproducible scientific research [10].

Foundational Concepts of Database Architecture

At its core, a database is an organized system for collecting, storing, and managing data, replacing the need for separate, disconnected spreadsheets or documents by organizing information into linked tables [10]. Effective data management is the foundation for handling a wide range of business and research data, and its well-designed structure is crucial for ensuring the consistency, integrity, and accessibility of information [10].

While a simple, file-based architecture where data is stored in individual files (like spreadsheets) can be sufficient for smaller amounts of data, it can become unwieldy and harder to manage as the data volume grows [11]. The essential components of any database architecture, which can be mapped to a spreadsheet environment, include [10]:

- Data Model: Defines the logical structure of the data. In a spreadsheet, this translates to the schema of your worksheets and columns.

- Database Schema: The specific implementation of the data model. In a spreadsheet, this is the layout of your tabs, headers, and data validation rules.

- Query Language: The set of commands used to query and manipulate data. Within a spreadsheet, this is often achieved through functions (e.g., VLOOKUP, FILTER), pivot tables, and built-in filtering tools.

The CatTestHub Data Structure and Schema

CatTestHub is designed as a collection of catalytic benchmarks for distinct classes of active site functionality [1]. Its architecture combines systematically reported catalytic activity data for selected probe chemistries with relevant material characterization and reactor configuration information [1]. For the purpose of a spreadsheet-based implementation, the database structure can be broken down into a series of interrelated tables.

The core data entities in CatTestHub are related through shared identifiers: each experimental record references the catalyst it used, and the conditions and results for a run share one experiment identifier.

The following tables summarize the key quantitative and descriptive data points that must be captured within the spreadsheet structure to align with the CatTestHub benchmarking goals.

Table 1: Core Experimental Scope of CatTestHub [1]

| Data Category | Reported Metric | Description |
|---|---|---|
| Catalyst Scope | Number of solid catalysts | 24 unique catalysts |
| Reaction Scope | Number of distinct catalytic chemistries | 3 probe reactions |
| Data Volume | Number of unique experimental data points | Over 250 data points |

Table 2: Essential Data Tables for a Spreadsheet-Based Implementation

| Table Name | Primary Function | Key Data Fields (Examples) |
|---|---|---|
| Catalyst_Registry | Unique identification of all tested materials. | Catalyst_ID, Chemical_Composition, Synthesis_Method, BET_Surface_Area (m²/g) |
| Experimental_Setup | Detailed description of the reactor and conditions. | Experiment_ID, Reactor_Type, Catalyst_Mass (g), Reactant_Flow_Rate (mL/min) |
| Reaction_Conditions | Precise parameters for each experimental run. | Experiment_ID, Temperature (°C), Pressure (bar), Reactant_Concentration (mol%) |
| Kinetic_Data | Core performance metrics and results. | Experiment_ID, Conversion (%), Selectivity (%), Turnover_Frequency (TOF, s⁻¹), Timestamp |
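
The linked-table idea can be sketched outside a spreadsheet as a lookup keyed on the experiment identifier (written Experiment_ID here, as in the data-entry protocols), much like a VLOOKUP across tabs. The example below uses only the Python standard library; the inline CSV content is hypothetical and merely stands in for exported worksheet tabs.

```python
import csv
import io

# Hypothetical exports of two worksheet tabs.
REACTION_CONDITIONS_CSV = """Experiment_ID,Temperature (°C),Pressure (bar)
EXP_001,200,1.0
EXP_002,250,1.0
"""

KINETIC_DATA_CSV = """Experiment_ID,Conversion (%),Selectivity (%)
EXP_001,12.5,98.0
EXP_002,31.0,95.5
EXP_003,40.2,91.0
"""


def read_rows(text: str) -> list[dict]:
    """Parse CSV text into a list of row dictionaries keyed by column header."""
    return list(csv.DictReader(io.StringIO(text)))


def join_on_experiment_id(conditions: list[dict], kinetics: list[dict]):
    """Merge kinetic rows with their conditions; collect IDs that have no match."""
    by_id = {row["Experiment_ID"]: row for row in conditions}
    joined, orphans = [], []
    for row in kinetics:
        match = by_id.get(row["Experiment_ID"])
        if match is None:
            orphans.append(row["Experiment_ID"])
        else:
            joined.append({**match, **row})
    return joined, orphans


joined, orphans = join_on_experiment_id(
    read_rows(REACTION_CONDITIONS_CSV), read_rows(KINETIC_DATA_CSV)
)
```

Listing unmatched identifiers separately, rather than silently dropping them, surfaces kinetic results that were logged without their reaction conditions.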

Experimental Protocols for Data Entry and Curation

To ensure the database serves as a reliable community-wide benchmark, the following protocols for data entry and curation must be rigorously followed. These protocols are adapted from good practices for reporting experimental data to facilitate reproducibility [12].

Protocol: Reporting a New Catalyst and its Characterization Data

Objective: To consistently document the identity and properties of a new catalytic material within the Catalyst_Registry table.

Materials:

- Synthesized catalyst sample.

- Characterization equipment (e.g., BET analyzer, SEM, XRD).

Procedure:

- Assign a Unique ID: Generate a new, unique Catalyst_ID following the lab's naming convention (e.g., CAT_024_PtAl2O3).

- Record Composition: In the Chemical_Composition field, list all active metals and supports with their nominal loadings (e.g., "1% Pt on Al2O3").

- Document Synthesis: In the Synthesis_Method field, provide a concise yet descriptive protocol (e.g., "Wet impregnation, calcined at 500°C for 4h").

- Input Quantitative Characterization: Enter numerical data from characterization techniques into the appropriate columns (e.g., BET_Surface_Area).

- Cross-Reference: Ensure the Catalyst_ID is used consistently in all subsequent experimental data sheets that utilize this material.

Troubleshooting:

- Ambiguous ID: If a Catalyst_ID is duplicated or unclear, refer to the original lab notebook for synthesis details to resolve the conflict.

- Missing Data: If a characterization value is not available, enter "N/A" to distinguish from an oversight. Do not leave the cell blank.
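
Both troubleshooting rules can be checked mechanically. The following minimal sketch flags duplicated catalyst identifiers and replaces blank characterization cells with "N/A". The row layout, helper names, and all values other than the CAT_024_PtAl2O3 example are hypothetical.

```python
from collections import Counter


def duplicate_catalyst_ids(rows: list[dict]) -> list[str]:
    """Return every Catalyst_ID that appears more than once, sorted."""
    counts = Counter(row["Catalyst_ID"] for row in rows)
    return sorted(cid for cid, n in counts.items() if n > 1)


def fill_missing(row: dict, fields: list[str], placeholder: str = "N/A") -> dict:
    """Copy the row, replacing absent or blank values in the given fields."""
    filled = dict(row)
    for field in fields:
        value = filled.get(field)
        if value is None or str(value).strip() == "":
            filled[field] = placeholder
    return filled


# Hypothetical registry rows with one blank cell and one repeated ID.
registry = [
    {"Catalyst_ID": "CAT_024_PtAl2O3", "BET_Surface_Area": "150"},
    {"Catalyst_ID": "CAT_025_PdC", "BET_Surface_Area": ""},
    {"Catalyst_ID": "CAT_024_PtAl2O3", "BET_Surface_Area": "148"},
]
cleaned = [fill_missing(row, ["BET_Surface_Area"]) for row in registry]
duplicates = duplicate_catalyst_ids(cleaned)
```

The check only reports duplicates; deciding which entry is correct still requires the lab notebook, as the protocol states.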

Protocol: Logging Catalytic Performance Data

Objective: To accurately record the conditions and results of a catalytic test experiment, linking it to a specific catalyst.

Materials:

- Reactor system with calibrated mass flow controllers and temperature sensors.

- Online or offline analytical equipment (e.g., GC, MS).

- Pre-defined spreadsheet template based on the Kinetic_Data table.

Procedure:

- Link to Catalyst: Enter the correct Catalyst_ID from the Catalyst_Registry.

- Create Experiment ID: Generate a unique Experiment_ID that links to this specific run (e.g., EXP_250_CAT_024).

- Record Conditions: Precisely enter all relevant parameters from the Reaction_Conditions table (Temperature, Pressure, etc.).

- Calculate and Input Kinetic Data: After analysis, calculate and enter performance metrics like Conversion and Selectivity. The Turnover_Frequency (TOF) must be calculated based on the number of active sites, if known.

- Metadata: Include a Timestamp for the experiment's completion.

Troubleshooting:

- Steady-State Assumption: Ensure all performance data is recorded only after the catalytic system has reached a verifiable steady state, as indicated by stable conversion readings over time.

- Data Integrity: Use spreadsheet data validation tools to restrict entries in numerical columns to prevent typographical errors.
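
One way to make the steady-state criterion verifiable is to require that the most recent conversion readings stay within a small relative band. The sketch below does this with a window of five readings and a 2% tolerance; both thresholds are assumptions chosen for illustration, not values prescribed by CatTestHub.

```python
def is_steady_state(conversions: list[float],
                    window: int = 5,
                    rel_tol: float = 0.02) -> bool:
    """True if the last `window` readings vary by at most rel_tol of their mean."""
    if window <= 0 or len(conversions) < window:
        return False
    recent = conversions[-window:]
    mean = sum(recent) / window
    if mean == 0:
        return all(value == 0 for value in recent)
    return (max(recent) - min(recent)) / abs(mean) <= rel_tol


# Hypothetical conversion trace (%), sampled at regular intervals.
trace = [5.0, 12.0, 18.0, 20.1, 20.0, 19.9, 20.0, 20.1]
steady = is_steady_state(trace)
```

A range-based test is deliberately simple; slow deactivation over many hours would need a trend test over a longer window.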

The Scientist's Toolkit: Essential Research Reagent Solutions

The following table details key materials and resources used in the construction and utilization of the CatTestHub database. Adherence to FAIR (Findable, Accessible, Interoperable, Reusable) data design principles is a core tenet of this toolkit [1].

Table 3: Key Research Reagent Solutions for Catalytic Database Management

| Item / Resource | Function / Description |
|---|---|
| FAIR Data Principles | A set of guiding principles to make data Findable, Accessible, Interoperable, and Reusable. This balances the information needs of chemical catalysis with modern data management [1]. |
| Resource Identification Initiative (RII) | Helps researchers sufficiently cite key resources (e.g., antibodies, plasmids) used to produce scientific findings by providing unique identifiers, promoting reproducibility [12]. |
| Structured, Transparent, Accessible Reporting (STAR) | An initiative that addresses the problem of structure and standardization when reporting methods, which can be adapted for protocol reporting in catalysis [12]. |
| SMART Protocols Ontology | An ontology for the semantic representation of experimental protocols and workflows, providing a machine-processable framework for detailed data description [12]. |
| JavaScript Object Notation (JSON) | A lightweight data-interchange format. A machine-readable checklist in JSON format can be used to measure the completeness of a reported protocol [12]. |
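
The last row describes measuring protocol completeness against a JSON checklist. A minimal sketch of that idea follows; the checklist layout (a single "required" list) and the field names are assumptions made for illustration and do not reproduce the checklist described in [12].

```python
import json

# Hypothetical checklist: fields a reported protocol is expected to contain.
CHECKLIST_JSON = """
{"required": ["Catalyst_ID", "Reactor_Type", "Temperature", "Pressure"]}
"""


def completeness(protocol: dict, checklist_json: str):
    """Return (fraction of required fields present, list of missing fields)."""
    required = json.loads(checklist_json)["required"]
    missing = [key for key in required if protocol.get(key) in (None, "")]
    if not required:
        return 1.0, missing
    return 1.0 - len(missing) / len(required), missing


# Hypothetical reported protocol with the reactor type omitted.
reported = {"Catalyst_ID": "CAT_024_PtAl2O3", "Temperature": 200, "Pressure": 1.0}
score, missing = completeness(reported, CHECKLIST_JSON)
```

Keeping the checklist in a separate JSON document lets a community revise the required fields without changing the checking code.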

Data Integrity and Validation Workflow

Maintaining the quality and reliability of data within a collaborative, spreadsheet-based system requires a defined validation workflow. This process ensures that only verified and accurate data is used for benchmarking and analysis.

The path from raw experimental data to a validated database entry involves several critical checkpoints, as shown in the following workflow:

Workflow Description:

- Data Entry: A researcher enters raw experimental data into the appropriate spreadsheet tabs following the established protocols.

- Automated Check: Spreadsheet functions and formulas are used to automatically flag outliers, check for mandatory field completion, and validate data formats (e.g., numerical ranges, date formats).

- Peer Review: A second researcher reviews the entered data and the experimental context for scientific soundness and consistency with reported methodologies.

- Curator Approval: A designated data curator gives final approval, after which the data row or sheet is locked to prevent further changes, ensuring its permanence for benchmarking purposes.
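
The automated check step could equally be run outside the spreadsheet, for example on an exported row. The sketch below is one possible arrangement; the column names, the allowed ranges, and the ISO date format are assumptions made for illustration.

```python
from datetime import datetime

# Hypothetical column names and limits, chosen only for this example.
MANDATORY = ("catalyst_id", "date", "temperature_K", "conversion_pct")
RANGES = {"temperature_K": (250.0, 1500.0), "conversion_pct": (0.0, 100.0)}

def check_row(row):
    """Return a list of human-readable problems found in one data row."""
    problems = []
    for field in MANDATORY:
        if row.get(field) in (None, ""):
            problems.append(f"missing {field}")
    for field, (low, high) in RANGES.items():
        value = row.get(field)
        if value in (None, ""):
            continue  # already reported as missing
        try:
            number = float(value)
        except (TypeError, ValueError):
            problems.append(f"{field} is not numeric")
            continue
        if not low <= number <= high:
            problems.append(f"{field} out of range")
    if row.get("date"):
        try:
            datetime.strptime(row["date"], "%Y-%m-%d")
        except ValueError:
            problems.append("date not in YYYY-MM-DD format")
    return problems

row = {"catalyst_id": "Pt/C-03", "date": "2024/05/01",
       "temperature_K": "523", "conversion_pct": 104.2}
print(check_row(row))
```

Rows that return an empty list would move on to peer review; anything else goes back to the researcher who entered it.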

The spreadsheet-based architecture of CatTestHub provides a pragmatic and powerful structure for navigating the complex landscape of experimental catalysis data. By implementing a carefully designed schema with linked tables, adhering to strict data entry protocols, and utilizing a robust validation workflow, researchers can build a high-quality, reproducible, and scalable resource. This structured approach directly supports the broader thesis of CatTestHub by creating a reliable foundation for benchmarking catalytic materials, thereby accelerating research and development in catalysis and related fields. Future work may involve the transition from a spreadsheet-based system to a formal DBMS to enhance query capabilities, security, and scalability as the community-driven database continues to expand [1].

The CatTestHub database represents a significant advancement in the field of experimental heterogeneous catalysis by providing an open-access, community-wide platform for benchmarking catalytic performance. Designed in accordance with the FAIR data principles (Findability, Accessibility, Interoperability, and Reuse), this database addresses a critical challenge in catalysis research: the inability to quantitatively compare newly evolving catalytic materials and technologies due to inconsistent data reporting across the scientific literature [5]. The platform serves as a centralized repository for experimental catalysis data, combining systematically reported catalytic activity data with comprehensive material characterization and detailed reactor configuration information. This integrated approach enables meaningful comparisons between catalytic studies and establishes reliable benchmarks for distinct classes of active site functionality [1].

The foundation of CatTestHub's architecture rests on three interconnected core data components that are essential for reproducing experimental results and validating catalytic performance. First, catalytic activity data provides quantitative measures of reaction kinetics and turnover rates under well-defined conditions. Second, material characterization offers nanoscopic-level insights into catalyst structure and composition, enabling the correlation of macroscopic performance with intrinsic material properties. Third, reactor configuration details document the experimental apparatus and conditions, ensuring that measurements are free from artifacts such as heat and mass transfer limitations [5]. Together, these components create a robust framework for evaluating catalytic materials, where the macroscopic measures of catalytic activity can be properly contextualized at the atomic scale of active sites.

Catalytic Activity Data

Quantitative Metrics and Reporting Standards

Catalytic activity data forms the quantitative foundation for evaluating catalyst performance in the CatTestHub database. This component encompasses kinetic parameters, conversion rates, selectivity measurements, and turnover frequencies (TOF) that collectively describe how effectively a catalyst facilitates a chemical transformation. These metrics must be measured under well-defined reaction conditions and reported with sufficient detail to enable direct comparison between different catalytic systems. The reporting of numerical data should follow established scientific guidelines, presenting results in a form as free from interpretation as possible and including estimates of both imprecision (random uncertainty) and inaccuracy (systematic error) [13].

A critical requirement for catalytic activity data is the demonstration that reported rates are free from corrupting influences such as diffusional limitations, catalyst deactivation, or thermodynamic constraints [5]. CatTestHub specifically curates observable macroscopic quantities measured under well-defined reaction conditions, supported by metadata that provides context for the reported data. The database employs unique identifiers in the form of digital object identifiers (DOI) and ORCID to ensure electronic means for accountability, intellectual credit, and traceability for all catalytic activity data [5].

Table 1: Key Quantitative Metrics for Reporting Catalytic Activity

| Metric | Description | Reporting Requirements | Units |

|---|---|---|---|

| Turnover Frequency (TOF) | Number of reactant molecules converted per active site per unit time | Type of active site counted, method of counting | s⁻¹ |

| Conversion | Fraction of reactant converted to products | Definition of conversion, basis for calculation | % |

| Selectivity | Fraction of converted reactant forming a specific product | Complete product distribution | % |

| Yield | Fraction of reactant converted to a specific product | Relationship to conversion and selectivity | % |

| Mass Balance | Closure of carbon (or other element) accounting | Range of acceptable balance (e.g., 96-101%) | % |

| Stability | Change in activity over time | Duration of test, deactivation rate | %/h |
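
The relationships among several of the metrics in Table 1 can be made explicit in a few lines. This is a sketch using molar flow rates of a single reactant and one product; the function names and example numbers are illustrative, and the deactivation rate is expressed as relative loss of conversion per hour, which is only one of several possible definitions.

```python
def conversion(n_in, n_out):
    """Fraction of reactant consumed (same molar units in and out)."""
    return (n_in - n_out) / n_in

def selectivity(n_product, n_in, n_out, stoich=1.0):
    """Fraction of converted reactant that ends up in the product.
    stoich = moles of reactant consumed per mole of product formed."""
    return stoich * n_product / (n_in - n_out)

def product_yield(n_product, n_in, stoich=1.0):
    """Fraction of fed reactant that ends up in the product."""
    return stoich * n_product / n_in

def deactivation_rate(x_start, x_end, hours):
    """Relative loss of conversion, in % per hour."""
    return 100 * (x_start - x_end) / x_start / hours

# Hypothetical flows in mol/h: reactant in, reactant out, product out
x = conversion(1.00, 0.80)
s = selectivity(0.18, 1.00, 0.80)
y = product_yield(0.18, 1.00)
print(f"X = {x:.2f}, S = {s:.2f}, Y = {y:.2f}")
```

With these definitions, yield equals conversion multiplied by selectivity, which is the relationship the table asks authors to state.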

Benchmarking Chemistries in CatTestHub

CatTestHub currently hosts benchmarking data for specific probe reactions selected for their ability to characterize distinct classes of active sites. For metal catalysts, the database includes the decomposition of methanol and formic acid, reactions that provide insights into dehydrogenation capability and metal functionality [5]. For solid acid catalysts, the Hofmann elimination of alkylamines over aluminosilicate zeolites serves as a benchmark reaction for quantifying acid site concentration and strength [9]. These specific reactions were selected because they enable clear differentiation between catalyst functionalities and provide fundamental insights into active site properties.

The selection of these benchmark chemistries follows a strategic approach to catalysis benchmarking. Each reaction serves as a probe reaction specifically chosen to interrogate a particular type of catalytic functionality. For example, alcohol decomposition reactions selectively probe metal sites, while amine elimination reactions selectively probe acid sites. This targeted approach allows researchers to extract specific information about active site properties from the measured kinetic data. The database currently spans over 250 unique experimental data points, collected over 24 solid catalysts, that facilitated the turnover of 3 distinct catalytic chemistries [1]. This growing body of data provides the statistical foundation for establishing reliable benchmarks in heterogeneous catalysis.

Material Characterization

Structural and Chemical Analysis Techniques

Material characterization provides the critical link between catalytic performance and the underlying physical and chemical properties of the catalyst. In the CatTestHub framework, characterization data enables researchers to understand why certain catalysts exhibit superior activity, selectivity, or stability by correlating macroscopic performance with nanoscopic structure. The database includes comprehensive characterization data for each catalyst, documenting both bulk properties and surface characteristics that influence catalytic behavior [5]. This multi-technique approach ensures that the structural features responsible for catalytic performance are properly identified and documented.

Key characterization techniques employed for catalyst analysis include both structural and spectroscopic methods. X-ray photoelectron spectroscopy (XPS) provides information about the elemental composition and chemical state of surface atoms, allowing researchers to quantify the relative abundance of different elements and their oxidation states [14]. Infrared (IR) and Raman spectroscopy identify surface chemical species and probe molecular adsorption and reaction mechanisms, with vibrational frequencies distinguishing between different adsorption modes and bonding configurations of molecules on surfaces [14]. Temperature-programmed desorption (TPD) measures the binding strength and coverage of adsorbates on catalytic surfaces, providing insights into the number and strength of surface adsorption sites that correlate with catalytic activity and selectivity [14].

Table 2: Essential Material Characterization Techniques for Catalysts

| Characterization Technique | Information Obtained | Relevance to Catalysis |

|---|---|---|

| Surface Area Analysis (BET) | Total specific surface area | Accessible active sites |

| X-ray Photoelectron Spectroscopy (XPS) | Elemental composition, oxidation states | Chemical state of active sites |

| Temperature-Programmed Reduction (TPR) | Reducibility, metal-support interactions | Activation conditions, stability |

| Temperature-Programmed Desorption (TPD) | Acid/base strength, active site density | Number and strength of active sites |

| Electron Microscopy (TEM/SEM) | Particle size, morphology, dispersion | Structure-property relationships |

| X-ray Diffraction (XRD) | Crystalline phases, particle size | Phase identification, stability |

Correlation of Characterization with Catalytic Performance

The ultimate goal of material characterization in the CatTestHub framework is to establish meaningful structure-activity relationships that guide the rational design of improved catalysts. By systematically correlating characterization data with catalytic performance metrics, researchers can identify the specific structural features that confer enhanced catalytic properties. For example, scanning tunneling microscopy (STM) enables atomic-resolution imaging of surface topography and electronic structure, allowing researchers to identify catalytically active sites such as step edges or defects and visualize adsorbate ordering and reaction intermediates [14]. Similarly, transmission electron microscopy (TEM) allows direct visualization of nanoparticle size, shape, and crystal structure, enabling correlations between these structural parameters and catalytic performance [14].

CatTestHub places particular emphasis on identifying and quantifying active sites, as these parameters directly enable the calculation of turnover frequencies (TOF) that facilitate meaningful comparison between different catalytic materials. For zeolite catalysts, techniques such as amine adsorption and temperature-programmed desorption can distinguish between Brønsted-acid sites in mixtures of different solid acids [5]. For metal catalysts, techniques such as chemisorption and electron microscopy enable the quantification of accessible metal sites. This rigorous approach to active site characterization ensures that reported activity data can be properly normalized to the true concentration of catalytic active sites, enabling valid comparisons between different catalyst formulations.
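
As a worked illustration of site normalization for a supported metal, the sketch below converts a chemisorption-derived dispersion into a count of surface metal atoms and then into a TOF. It assumes one active site per surface metal atom; the catalyst mass, loading, dispersion, and rate are hypothetical numbers.

```python
def count_surface_sites(mass_g, metal_weight_fraction, dispersion, molar_mass):
    """Moles of surface metal atoms, assuming one site per surface atom.
    dispersion = surface atoms / total metal atoms (e.g. from chemisorption)."""
    return mass_g * metal_weight_fraction * dispersion / molar_mass

def turnover_frequency(rate_mol_per_s, sites_mol):
    """TOF in s^-1: molecules converted per site per second."""
    return rate_mol_per_s / sites_mol

# Hypothetical 1 wt% Pt catalyst, 50 mg charge, 50% dispersion
sites = count_surface_sites(0.050, 0.01, 0.5, 195.08)  # Pt: 195.08 g/mol
tof = turnover_frequency(2.0e-7, sites)
print(f"sites = {sites:.3e} mol, TOF = {tof:.3f} s^-1")
```

Reporting which site-counting method produced the dispersion matters as much as the TOF itself, since a different method can shift the denominator substantially.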

Figure 1: Material Characterization Workflow for Catalytic Materials

Reactor Configuration and Experimental Protocols

Reactor Systems and Experimental Design

The reactor configuration component of CatTestHub documents the experimental apparatus and conditions under which catalytic activity data are obtained, ensuring that measurements are reproducible and free from artifacts. Different reactor types, including fixed-bed reactors, fluidized-bed reactors, and continuous-flow reactors, each present distinct advantages and limitations for catalytic testing [5]. The database captures essential details about reactor geometry, material of construction, heating method, and measurement capabilities that might influence the observed catalytic performance. This information is critical for identifying and minimizing transport limitations that could obscure the intrinsic kinetics of catalytic reactions.

A key consideration in reactor configuration is the demonstration that reported kinetic data are free from heat and mass transfer limitations that can corrupt measurements of intrinsic catalytic activity [5]. CatTestHub includes metadata that allows users to assess whether appropriate experimental protocols were followed to eliminate such limitations. For example, the Microactivity Test (MAT) reactor used for evaluating fluid catalytic cracking (FCC) catalysts employs a specially designed fixed-bed reactor with a pre-heater section and standardized operating conditions to ensure reproducible assessment of catalyst performance [15]. Similar standardization approaches are applied to other catalytic systems within the database to enhance the reliability and comparability of reported data.
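
One widely used screening test for internal (pore) diffusion limitations is the Weisz-Prater criterion. The source does not state which tests CatTestHub contributors apply, so the sketch below is offered only as an example of the kind of check the metadata could support; the 0.3 threshold is a common conservative convention, and all input values are hypothetical.

```python
def weisz_prater(rate_obs, rho_cat, radius, d_eff, c_surface):
    """Weisz-Prater number (dimensionless).
    rate_obs   observed rate per catalyst mass, mol/(kg s)
    rho_cat    catalyst particle density, kg/m^3
    radius     particle radius, m
    d_eff      effective diffusivity in the pores, m^2/s
    c_surface  reactant concentration at the particle surface, mol/m^3
    """
    return rate_obs * rho_cat * radius**2 / (d_eff * c_surface)

def pore_diffusion_negligible(n_wp, threshold=0.3):
    """Values well below 1 suggest intrinsic kinetics; threshold is a convention."""
    return n_wp < threshold

n_wp = weisz_prater(rate_obs=1e-3, rho_cat=1000.0, radius=1e-4,
                    d_eff=1e-6, c_surface=10.0)
print(f"N_WP = {n_wp:.1e}, negligible: {pore_diffusion_negligible(n_wp)}")
```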

Standardized Testing Protocols

CatTestHub implements standardized testing protocols to ensure that catalytic activity data are comparable across different laboratories and research groups. These protocols define specific parameters such as reactor configuration, catalyst pretreatment procedures, reaction conditions, and product analysis methods [5]. For example, in the evaluation of fluid catalytic cracking (FCC) catalysts using a Microactivity Test (MAT) unit, the ASTM Method D 3907 specifies reactor design, feed composition, catalyst charge, operating temperatures, and analytical procedures [15]. This standardization enables meaningful comparison of results obtained by different researchers using equivalent experimental approaches.

The application of standardized protocols extends to catalyst pretreatment and activation procedures. FCC catalysts are typically deactivated by hydrothermal treatment or steaming prior to MAT evaluation because the catalytic activity of a fresh catalyst does not accurately represent the behavior of a catalyst under commercial operating conditions [15]. Similarly, protocols for catalyst reduction, calcination, or other activation treatments are documented within CatTestHub to ensure consistent catalyst preparation across different research groups. This attention to procedural details enhances the reliability of the benchmarking data and facilitates more accurate comparisons between different catalytic materials.

Figure 2: Standardized Reactor Testing Protocol Workflow

Experimental Protocols and Methodologies

Methanol Decomposition Protocol

The methanol decomposition reaction serves as a key benchmark chemistry for metal catalysts in the CatTestHub database. This protocol begins with catalyst pretreatment, which typically involves reduction in flowing hydrogen at elevated temperatures to activate metal sites, followed by purging with inert gas to remove residual hydrogen. The reaction is conducted in a continuous-flow fixed-bed reactor system equipped with precise temperature control and vaporization capabilities for liquid reactants. Methanol is fed by a liquid syringe pump and vaporized before it contacts the catalyst bed, with typical reaction temperatures of 200-300°C at atmospheric pressure [5].

Product analysis for methanol decomposition employs online gas chromatography with appropriate detectors for quantifying both permanent gases (H₂, CO, CO₂) and organic products (unreacted methanol, dimethyl ether). The critical kinetic parameter obtained from this protocol is the turnover frequency (TOF) for hydrogen production, calculated based on the number of surface metal atoms determined through complementary characterization techniques. Mass balance closures between 96-101% are required for data validation, and tests for transport limitations must be performed to ensure intrinsic kinetic data are obtained [5]. This standardized approach allows direct comparison of metal catalysts across different compositions and structures.
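
The mass balance requirement can be expressed as a carbon balance over the species listed above. The sketch below assumes molar flow rates for each carbon-containing species are available from the GC analysis; the flow values are hypothetical, and the 96-101% window is the one quoted in the text.

```python
# Carbon atoms per molecule for the species named in the protocol
CARBON_ATOMS = {"CH3OH": 1, "CO": 1, "CO2": 1, "CH3OCH3": 2}

def carbon_balance_pct(methanol_in, outlet_flows):
    """Carbon leaving the reactor as a percentage of carbon fed.
    outlet_flows maps species name to molar flow (same units as methanol_in)."""
    carbon_out = sum(CARBON_ATOMS[s] * f for s, f in outlet_flows.items())
    return 100.0 * carbon_out / (CARBON_ATOMS["CH3OH"] * methanol_in)

def balance_acceptable(balance_pct, low=96.0, high=101.0):
    return low <= balance_pct <= high

# Hypothetical molar flows in mol/h
outlet = {"CH3OH": 0.78, "CO": 0.19, "CO2": 0.005, "CH3OCH3": 0.005}
balance = carbon_balance_pct(1.00, outlet)
print(f"carbon balance = {balance:.1f}%, acceptable: {balance_acceptable(balance)}")
```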

Hofmann Elimination Protocol

The Hofmann elimination of alkylamines over solid acid catalysts provides a benchmark reaction for quantifying acid site concentration and strength in the CatTestHub database. The protocol utilizes a pulse titration method where small, discrete quantities of an alkylamine (which must carry a β-hydrogen to undergo elimination) are injected into a carrier gas stream flowing through a catalyst bed maintained at precisely controlled temperature [5]. The alkylamine molecules selectively adsorb to Brønsted acid sites until saturation occurs, with the process monitored by downstream detection.

Following saturation, the temperature is systematically increased under controlled conditions to desorb the alkylamines through the Hofmann elimination mechanism, producing olefins and amines. The amount of olefin produced quantitatively corresponds to the number of Brønsted acid sites present on the catalyst surface [5]. This protocol is particularly valuable for characterizing zeolite catalysts and other solid acids, as it provides a direct measure of active site concentration that can be used to normalize catalytic activity data for meaningful comparisons between different materials.
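
The site count that follows from this protocol is a direct ratio. The sketch below assumes one olefin molecule is released per Brønsted acid site, in line with the correspondence described above; the olefin amount and catalyst mass are hypothetical.

```python
AVOGADRO = 6.02214076e23  # 1/mol

def bronsted_site_density(olefin_mol, catalyst_mass_g):
    """Brønsted acid site density in micromol per gram of catalyst,
    assuming a 1:1 olefin-to-site stoichiometry."""
    return olefin_mol * 1e6 / catalyst_mass_g

def sites_per_gram(density_umol_per_g):
    """Convert micromol/g to number of sites per gram."""
    return density_umol_per_g * 1e-6 * AVOGADRO

density = bronsted_site_density(olefin_mol=4.2e-6, catalyst_mass_g=0.010)
print(f"{density:.0f} umol/g = {sites_per_gram(density):.2e} sites/g")
```

This density is the denominator used when a measured rate per gram of solid acid is converted to a TOF.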

Microactivity Test (MAT) for FCC Catalysts

The Microactivity Test (MAT) provides a standardized protocol for evaluating fluid catalytic cracking (FCC) catalysts, which represents a more complex catalytic system involving rapid deactivation. This ASTM-standardized method (D 3907) employs a fixed-bed microreactor with a pre-heater section maintained at 900°F (482°C) [15]. The test uses approximately 4 grams of catalyst that is typically deactivated by hydrothermal treatment prior to evaluation to simulate commercial operating conditions. A standard gas oil feed is introduced at a precise rate of 1.33 mL over 75 seconds, followed by nitrogen flushing to remove residual hydrocarbons.

Product analysis in the MAT protocol involves comprehensive characterization of both liquid and gaseous products. Liquid products are collected in a chilled receiver and analyzed by gas chromatography using simulated distillation to determine conversion, defined as the weight percentage of material boiling below 216°C (421°F) [15]. Gaseous products are analyzed for H₂, H₂S, C₁-C₄ hydrocarbons, and C₅+ compounds, while coke deposits are quantified by combustion and CO/CO₂ analysis. The protocol requires mass balance closures between 96-101% for validity and provides information on both catalyst activity and selectivity to various product fractions.
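
The MAT bookkeeping can be sketched as follows. The calculation assumes conversion is taken as 100 minus the weight percent of feed recovered as liquid boiling above 216°C, which is one reading of the definition above; the ASTM text should be consulted for the normative formula. All masses are hypothetical and expressed in grams.

```python
def mat_summary(feed_g, liquid_g, heavy_fraction, gas_g, coke_g):
    """Return (conversion wt%, mass balance %, balance acceptable?).
    heavy_fraction = fraction of the liquid product boiling above 216 C,
    as obtained from simulated distillation."""
    heavy_g = liquid_g * heavy_fraction
    conversion_wt = 100.0 * (feed_g - heavy_g) / feed_g
    balance = 100.0 * (liquid_g + gas_g + coke_g) / feed_g
    return conversion_wt, balance, 96.0 <= balance <= 101.0

conv, bal, ok = mat_summary(feed_g=1.20, liquid_g=0.90, heavy_fraction=0.40,
                            gas_g=0.20, coke_g=0.07)
print(f"conversion = {conv:.1f} wt%, mass balance = {bal:.1f}%, valid: {ok}")
```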

Essential Research Reagents and Materials

Table 3: Essential Research Reagents and Materials for Catalytic Testing

| Material/Reagent | Function and Application | Specification Requirements |

|---|---|---|

| Standard Reference Catalysts | Benchmarking and validation of experimental setups | Commercial sources (e.g., Zeolyst, Sigma Aldrich); well-characterized properties [5] |

| Methanol (>99.9%) | Probe molecule for metal catalyst functionality | High purity, minimal water content; purchased from certified suppliers (e.g., Sigma Aldrich) [5] |

| Formic Acid | Alternative probe for dehydrogenation activity | High purity; standardized concentration solutions |

| Alkylamines | Probe molecules for acid site quantification | High purity; moisture-free handling |

| Gas Oil Feed | Standard feed for FCC catalyst evaluation (MAT testing) | ASTM-specified composition; consistent sourcing [15] |

| High-Purity Gases (H₂, N₂) | Catalyst pretreatment, reaction, and purging | 99.999% purity; proper gas filtration and purification |

| Calibration Standards | Quantitative analysis of reaction products | Certified reference materials for GC, MS, and other analytical methods |

The selection and specification of research reagents and materials play a critical role in ensuring the reproducibility of catalytic testing within the CatTestHub framework. Standard reference catalysts obtained from commercial sources such as Zeolyst or Sigma Aldrich provide well-characterized benchmark materials that enable cross-laboratory validation of experimental setups [5]. These materials typically include supported metal catalysts (e.g., Pt/SiO₂, Pt/C, Pd/C, Ru/C, Rh/C, Ir/C) with carefully controlled metal loadings and particle size distributions that have been characterized using multiple analytical techniques.

High-purity probe molecules including methanol (>99.9%), formic acid, and specific alkylamines are essential for generating reliable catalytic activity data [5]. These materials must be sourced from certified suppliers with documented purity specifications and handled under conditions that prevent contamination or decomposition. Similarly, high-purity gases (H₂, N₂, etc.) with 99.999% purity and proper filtration are necessary for catalyst pretreatment, reaction environments, and system purging to avoid introducing artifacts from impurities. The use of certified calibration standards for analytical instruments ensures quantitative accuracy in product analysis and enables valid comparisons between results obtained from different laboratory setups.

Catalytic probe reactions are fundamental tools for quantifying the performance and mechanistic pathways of catalysts. These reactions provide critical data on catalyst activity, selectivity, and stability, the three pillars of catalytic evaluation [16]. In industrial applications, over 75% of chemical processes utilize catalysts, a figure that exceeds 90% for newly developed processes [16]. The systematic characterization of catalysts through standardized probe reactions generates substantial volumes of quantitative data, requiring robust database architectures like CatTestHub for effective management and analysis. This document outlines key experimental methodologies, catalyst classification frameworks, and data handling protocols essential for modern catalysis research.

Experimental Probes of Reaction Dynamics

Spectroscopic Monitoring of Reactions

Spectroscopic methods provide the foundational approach for monitoring chemical reactions in real-time, allowing researchers to track reactant consumption and product formation kinetics. As established in physical chemistry, "To follow the rate of any chemical reaction, one must have a means of monitoring the concentrations of reactant or product molecules as time evolves. In the majority of current experiments... one uses some form of spectroscopic or alternative physical probe... to monitor these concentrations as functions of time" [17]. These techniques encompass a wide range of technologies including UV-Vis spectroscopy, IR spectroscopy, NMR, and mass spectrometric detection, each providing distinct advantages for specific reaction systems.

Table 1: Spectroscopic Techniques for Reaction Monitoring

| Technique | Measured Parameter | Time Resolution | Applications |

|---|---|---|---|

| UV-Vis Spectroscopy | Electronic transitions | Milliseconds to seconds | Reaction kinetics, concentration profiles |

| IR Spectroscopy | Molecular vibrations | Microseconds to seconds | Functional group transformation |

| NMR Spectroscopy | Magnetic nuclear properties | Seconds to minutes | Structural elucidation, mechanistic studies |

| Mass Spectrometry | Mass-to-charge ratio | Microseconds to milliseconds | Product identification, intermediate detection |

Fast Reaction Techniques

Conventional reaction monitoring faces significant challenges when studying rapid chemical processes. Specialized techniques have been developed to overcome two primary difficulties: (1) the time required to mix reactants or change temperature becoming significant compared to the reaction half-life, and (2) measurement times comparable to the reaction half-life [18]. These methods fall into two principal classes: flow methods and pulse/probe techniques.

Flow Methods involve rapidly introducing two gases or solutions into a mixing vessel, with the resulting mixture flowing quickly along a tube. Concentrations of reactants or products are measured via spectroscopic methods at various positions along the tube, corresponding to different reaction times [18]. The stopped-flow technique represents a modification where reactants are forced rapidly into a reaction chamber, then the flow is suddenly stopped, with amounts measured by physical methods after various short intervals. These flow methods are generally limited to reactions with half-lives greater than approximately 0.01 seconds due to mixing time constraints [18].
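
In a continuous-flow experiment, each observation point along the tube maps to a reaction time through the linear velocity of the mixture. The sketch below assumes ideal plug flow in a tube of uniform circular cross-section; the flow rate, tube diameter, and observation distance are hypothetical.

```python
import math

def reaction_time(distance_m, flow_m3_per_s, tube_diameter_m):
    """Time elapsed since mixing for fluid observed `distance_m` downstream,
    assuming plug flow: t = x / v with v = Q / A."""
    area = math.pi * (tube_diameter_m / 2) ** 2
    velocity = flow_m3_per_s / area
    return distance_m / velocity

# 10 mL/s through a 2 mm tube, observed 5 cm after the mixer
t = reaction_time(distance_m=0.05, flow_m3_per_s=1e-5, tube_diameter_m=2e-3)
print(f"reaction time = {t * 1e3:.1f} ms")
```

Moving the detector along the tube therefore scans reaction time without any fast electronics, which is the main appeal of the method.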

Pulse and Probe Methods overcome mixing limitations by using short radiation pulses followed by spectroscopic probes. The flash photolysis method, developed by Norrish and Porter (Nobel Prize, 1967), uses high-intensity, short-duration light flashes to generate atomic and molecular species whose reactions are studied kinetically through spectroscopy [18]. Modern implementations have reduced flash durations from milliseconds to femtoseconds, enabling the study of fundamental processes like bond length changes that occur on 100-femtosecond timescales [18].

The relaxation method (Eigen, Nobel Prize 1967) begins with a system at equilibrium, rapidly alters external conditions (typically temperature in T-jump methods), then measures the relaxation kinetics to the new equilibrium state [18]. Temperature jumps of several degrees can be achieved in under 100 nanoseconds, suitable for studying many chemical processes though not the fastest femtosecond-scale events [18].

Table 2: Techniques for Studying Fast Reactions

| Method | Time Resolution | Application Range | Key Principle |

|---|---|---|---|

| Stopped-Flow | ≥ 10 milliseconds | Solution-phase reactions | Rapid mixing followed by static observation |

| Flash Photolysis | Femtoseconds to milliseconds | Photochemical reactions, intermediates | Light pulse initiation with spectroscopic probing |

| Temperature-Jump | ≥ 100 nanoseconds | Equilibrium perturbations | Rapid temperature change and relaxation monitoring |

| Ultrasonic Methods | Microseconds to nanoseconds | Solvation dynamics, conformational changes | Pressure waves as perturbation source |

Catalyst Classification and Properties

Fundamental Catalyst Categories

Catalysts are broadly classified based on their phase relationship with reactants, with each category exhibiting distinct advantages and limitations for industrial applications [16].

Heterogeneous Catalysts exist in a different phase than the reactants, typically solids interacting with liquid or gaseous reactants. These catalysts dominate industrial applications due to their ease of separation and reusability. Their properties are characterized by high stability and straightforward reactor implementation, though they often exhibit lower selectivity compared to homogeneous systems [16].

Homogeneous Catalysts share the same phase (typically liquid) with reactants, enabling molecular-level interactions that frequently yield higher selectivity and activity under milder conditions. The primary challenge with homogeneous catalysts lies in separation and recovery from the reaction mixture, adding complexity and cost to industrial processes [16].

Key Performance Metrics

Catalyst evaluation relies on three fundamental properties that vary in relative importance depending on the specific process requirements [16]:

- Activity: The rate at which a catalyst accelerates a reaction, typically quantified through turnover frequency (TOF) ranging from 10⁻² to 10² s⁻¹ for industrial applications [16].

- Selectivity: The ability to direct reaction pathways toward desired products while minimizing byproduct formation.

- Stability: The resistance to deactivation over time, encompassing thermal, chemical, and mechanical stability.

Quantitative Data Management in Catalysis Research

Data Quality Assurance Framework

Quantitative data quality assurance represents the systematic processes and procedures used to ensure accuracy, consistency, reliability, and integrity throughout the research lifecycle [19]. Effective quality assurance helps identify and correct errors, reduce biases, and ensure data meets standards required for analysis and reporting [19]. The data management process follows a rigorous step-by-step approach where each stage requires researcher interaction with the dataset in an iterative process to extract relevant information transparently [19].

Data Cleaning Protocols must address several critical issues prior to analysis [19]:

- Checking for duplications: Identify and remove identical copies of data, particularly relevant for automated data collection systems.

- Managing missing data: Establish thresholds for questionnaire completion (e.g., 50-100% completeness) and apply statistical tests like Little's Missing Completely at Random (MCAR) test to determine patterns of missingness [19].

- Identifying anomalies: Run descriptive statistics for all measures to detect values outside expected ranges (e.g., Likert scales exceeding boundaries).

- Data summation: Follow instrument-specific guidelines for constructing composite scores or clinical classifications from raw data [19].
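
Applied to catalysis records, the first three cleaning steps might look like the sketch below. It treats "completeness" as the fraction of required fields that are filled, by analogy with the questionnaire thresholds above; the field names, ranges, and the 50% cutoff are illustrative assumptions.

```python
def clean(rows, required, ranges, min_completeness=0.5):
    """Drop exact duplicates and sparse rows; flag out-of-range values.
    Returns (kept_rows, flagged) where flagged holds (row, field) pairs."""
    seen, kept, flagged = set(), [], []
    for row in rows:
        key = tuple(sorted(row.items()))
        if key in seen:
            continue  # identical copy of an earlier row
        seen.add(key)
        filled = sum(row.get(f) is not None for f in required)
        if filled / len(required) < min_completeness:
            continue  # too incomplete to keep
        kept.append(row)
        for field, (low, high) in ranges.items():
            value = row.get(field)
            if value is not None and not low <= value <= high:
                flagged.append((row, field))
    return kept, flagged

rows = [
    {"id": "A", "T_K": 523, "X_pct": 20.1},
    {"id": "A", "T_K": 523, "X_pct": 20.1},    # duplicate
    {"id": "B", "T_K": None, "X_pct": None},   # mostly empty
    {"id": "C", "T_K": 523, "X_pct": 120.0},   # impossible conversion
]
kept, flagged = clean(rows, required=("id", "T_K", "X_pct"),
                      ranges={"X_pct": (0.0, 100.0)})
print([r["id"] for r in kept], [(r["id"], f) for r, f in flagged])
```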

Data Analysis Workflow

Quantitative data analysis proceeds through sequential stages, allowing researchers to build upon rigorous protocols before testing hypotheses [19]. The process involves two primary cycles:

Descriptive Analysis summarizes the dataset using frequencies, means, medians, and modes to identify trends and patterns [19]. This stage includes assessing normality of distribution through kurtosis and skewness (kurtosis values within ±2 are commonly taken as consistent with normality), supplemented by statistical tests like Kolmogorov-Smirnov and Shapiro-Wilk [19].
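
The shape statistics mentioned here can be computed without external libraries. The sketch below uses population (biased) moment estimators and reports excess kurtosis, so a normal distribution gives values near zero; statistical packages may apply small-sample corrections and return slightly different numbers. Formal tests such as Shapiro-Wilk are available in SciPy (`scipy.stats.shapiro`) and are not reimplemented here.

```python
def skewness_and_excess_kurtosis(values):
    """Return (skewness, excess kurtosis) from population central moments."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((v - mean) ** 2 for v in values) / n
    m3 = sum((v - mean) ** 3 for v in values) / n
    m4 = sum((v - mean) ** 4 for v in values) / n
    return m3 / m2 ** 1.5, m4 / m2 ** 2 - 3.0

def roughly_normal(values, limit=2.0):
    """Screen using the rule of thumb that both statistics lie within +/- limit."""
    skew, kurt = skewness_and_excess_kurtosis(values)
    return abs(skew) <= limit and abs(kurt) <= limit

# Hypothetical repeat TOF measurements in s^-1
tofs = [0.148, 0.152, 0.155, 0.156, 0.158, 0.161, 0.163]
skew, kurt = skewness_and_excess_kurtosis(tofs)
print(f"skewness = {skew:.2f}, excess kurtosis = {kurt:.2f}")
```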

Inferential Analysis employs statistical methods to compare groups, analyze relationships, and make predictions from data [19]. The choice between parametric and non-parametric tests depends on normality assessment and measurement type (nominal, ordinal, scale) [19].

Data Analysis Decision Workflow

Research Reagent Solutions

The following reagents and materials represent essential components for catalytic reaction studies, particularly those involving probe reactions and kinetic analysis.

Table 3: Essential Research Reagents for Catalytic Studies

| Reagent/Material | Function | Application Notes |

|---|---|---|

| Phage RNA Polymerases | In vitro transcription of RNA probes | Enables synthesis of single-stranded, strand-specific RNA probes of discrete length [20] |

| Klenow Enzyme | Random priming DNA probe synthesis | Efficiently incorporates low molar concentrations of high specific activity radiolabeled nucleotides [20] |

| Polynucleotide Kinase | 5' end-labeling of nucleic acids | Incorporates single [³²P]phosphate per molecule independent of sequence length [20] |

| High Specific Activity [³²P]NTPs | Radiolabeling for detection sensitivity | Critical parameter determining nucleic acid detection sensitivity; 800-6000 Ci/mmol ranges available [20] |

| CU Minus Vectors | Enhanced RNA probe synthesis | Reduces abortive transcription when using high specific activity nucleotides [20] |

Advanced Spectroscopic Probes for Challenging Reactions

Modern catalysis research increasingly relies on sophisticated spectroscopic techniques for investigating complex reduction reactions. As exemplified by research on "Spectroscopic methods for challenging reduction reactions - catalytic coupling of CO₂," these approaches provide critical information about working processes essential for optimization [21]. Spectroscopic investigations are particularly valuable for developing new organometallic, electrocatalytic, and photocatalytic pathways, enabling researchers to characterize transient intermediates and elucidate mechanistic details [21].

The integration of multiple spectroscopic techniques within research training programs offers excellent multidisciplinary education, strengthened through synergetic cooperation between academic institutions and catalysis research centers [21]. These approaches generate substantial volumes of quantitative data requiring sophisticated database infrastructure like CatTestHub for effective management, sharing, and analysis across research communities.

Probe reactions, systematic catalyst classification, and robust quantitative data management form the foundation of modern catalytic research. The experimental protocols outlined herein provide researchers with standardized methodologies for generating comparable, high-quality data on catalyst performance across diverse reaction systems. As catalysis continues to evolve—particularly in emerging areas like CO₂ utilization and sustainable chemistry—the integration of advanced spectroscopic techniques with comprehensive data management platforms like CatTestHub will accelerate discovery and optimization cycles. The structured approach to data quality assurance, analysis workflows, and reagent selection detailed in these application notes will support researchers in generating reliable, reproducible catalytic data for both fundamental studies and industrial application.

The FAIR Guiding Principles establish a framework to enhance the utility of digital assets by making them Findable, Accessible, Interoperable, and Reusable [22]. Originally developed within the biomedical community, these principles have gained widespread adoption across scientific disciplines to address challenges posed by increasing data volume and complexity [23]. In the specific context of experimental catalysis research, implementing FAIR principles enables researchers to overcome barriers in data comparison, validation, and reuse that traditionally hinder progress in materials development and catalyst optimization.

The CatTestHub database represents an applied implementation of these principles, serving as an open-access platform for benchmarking experimental heterogeneous catalysis data [1]. This database specifically addresses the critical need for consistently reported catalytic activity data across different research groups and experimental conditions. By structuring data according to FAIR principles, CatTestHub facilitates quantitative comparisons between catalytic materials and technologies, which is essential for advancing the field beyond qualitative observations toward predictive catalyst design.

The FAIR Principles Framework

The FAIR principles emphasize machine-actionability as a core requirement, recognizing that computational systems increasingly handle data discovery, integration, and analysis without human intervention [22]. This capability becomes particularly important in catalysis research where high-throughput experimentation generates vast datasets that exceed manual processing capacity. The principles are organized across four interconnected dimensions, each with specific requirements for implementation.

Core FAIR Principles

Table 1: The FAIR Guiding Principles for Scientific Data Management [22] [23]

| Principle | Key Requirements | Implementation Focus |
|---|---|---|
| Findable | F1: Globally unique and persistent identifiers; F2: Rich metadata; F3: Metadata includes identifier for data; F4: (Meta)data in searchable resource | Data discovery through descriptive metadata and indexing |
| Accessible | A1: Retrievable by identifier using standard protocol; A1.1: Open, free, universally implementable protocol; A1.2: Authentication and authorization where necessary; A2: Metadata accessible even when data unavailable | Data retrieval with appropriate access controls |
| Interoperable | I1: Formal, accessible, shared language for knowledge; I2: FAIR-compliant vocabularies; I3: Qualified references to other (meta)data | Data integration and exchange across systems |
| Reusable | R1: Richly described with accurate attributes; R1.1: Clear data usage license; R1.2: Detailed provenance; R1.3: Meets domain community standards | Future reuse in different contexts |

Implementation Considerations for Catalysis Data

Successful application of FAIR principles in catalysis research requires attention to five critical technical aspects: (1) utilizing open, standardized file formats that ensure long-term readability; (2) creating rich, accurate metadata that uses controlled vocabularies; (3) implementing persistent unique identifiers for all referenced objects; (4) assigning clear usage licenses to define permissible applications; and (5) employing open, universal communication protocols for data access [23]. Each requirement addresses specific challenges in catalysis data management, particularly the fragmentation of reporting standards and inconsistent experimental documentation that complicate comparative analysis.

FAIR Implementation in CatTestHub Database Architecture

The CatTestHub database embodies FAIR principles through its architectural design and data management approach. As a benchmarking database for experimental heterogeneous catalysis, it specifically addresses the reproducibility crisis in catalytic research by providing systematically reported data for selected probe chemistries alongside relevant material characterization and reactor configuration information [1]. This integrated approach balances the fundamental information needs of chemical catalysis with FAIR data design principles.

Data Organization and Structure

CatTestHub's current iteration encompasses over 250 unique experimental data points collected across 24 solid catalysts that facilitated the turnover of 3 distinct catalytic chemistries [1]. This structured organization enables direct comparison of catalytic performance across different material systems and reaction conditions. The database architecture supports continuous expansion through community contributions, establishing a roadmap for incorporating additional kinetic information on select catalytic systems from the heterogeneous catalysis community at large.

The implementation of FAIR principles within CatTestHub directly addresses the critical challenge of data traceability in metrological sciences [23]. By maintaining explicit linkages between experimental results, material properties, and reactor configurations, the database enables full reconstruction of data provenance, an essential requirement for both scientific reproducibility and metrological traceability in catalysis research.
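
One way to picture the explicit linkages between results, material properties, and reactor configurations is as records that reference each other by identifier. The sketch below is an assumption made for illustration only: the class names, field names, and units are hypothetical and do not reproduce CatTestHub's actual schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Catalyst:
    catalyst_id: str          # hypothetical identifier field
    composition: str
    surface_area_m2_g: float


@dataclass(frozen=True)
class ReactorConfig:
    reactor_id: str           # hypothetical identifier field
    reactor_type: str
    volume_ml: float


@dataclass(frozen=True)
class Measurement:
    measurement_id: str
    catalyst_id: str          # link to a Catalyst record
    reactor_id: str           # link to a ReactorConfig record
    reaction: str
    temperature_k: float
    rate_mol_per_g_s: float


def trace(measurement: Measurement,
          catalysts: dict[str, Catalyst],
          reactors: dict[str, ReactorConfig]) -> dict:
    """Resolve a measurement's links so its experimental context can be rebuilt."""
    return {
        "measurement": measurement,
        "catalyst": catalysts[measurement.catalyst_id],   # KeyError if link is broken
        "reactor": reactors[measurement.reactor_id],
    }
```

Because every measurement carries the identifiers of its catalyst and reactor, a broken link surfaces immediately as a `KeyError` rather than as a silently incomplete record.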

Quantitative FAIR Assessment Metrics

Table 2: CatTestHub FAIR Implementation Metrics and Assessment [1]

| FAIR Component | Implementation in CatTestHub | Quantitative Metric |
|---|---|---|
| Findability | Digital Object Identifiers (DOIs) for datasets; Structured metadata schema; Repository indexing | 250+ unique experimental data points; 24 solid catalysts; 3 distinct catalytic chemistries |
| Accessibility | Open-access platform; Standardized HTTP protocols; Persistent resolution services | Publicly accessible interface; Metadata permanence guarantee |
| Interoperability | Standardized data formats; Controlled vocabularies; Cross-references to related data | Consistent reporting across platforms; Material and reaction taxonomy |
| Reusability | Detailed provenance tracking; Clear usage licenses; Community standards compliance | Experimental workflow documentation; Reaction condition standardization |

Experimental Protocols for FAIR Data Generation

Protocol: Catalytic Activity Measurement with FAIR Compliance

Purpose: To generate standardized, FAIR-compliant catalytic performance data for inclusion in benchmarking databases like CatTestHub.

Materials and Equipment:

- Catalytic testing reactor system with calibrated mass flow controllers

- Analytical instrumentation (GC, MS, or other appropriate detectors)

- Reference catalyst materials for validation

- Standard gas mixtures for calibration

- Data recording system with timestamp capability

Procedure:

- Pre-experiment Documentation
  - Assign unique laboratory identifier to experiment
  - Document reactor configuration parameters (reactor type, volume, geometry)
  - Record catalyst characterization data (surface area, porosity, composition)
- Experimental Conditions Standardization
  - Set and document temperature, pressure, and flow conditions
  - Establish feed composition using certified standards
  - Implement internal standard for analytical validation
- Data Acquisition
  - Collect time-series data for conversion, selectivity, yield
  - Record stability data over predetermined time-on-stream
  - Capture system parameters (pressure drop, temperature profiles)
- Post-experiment Processing
  - Calculate performance metrics using standardized formulas
  - Apply statistical analysis to determine measurement uncertainty
  - Correlate with characterization data where applicable
- FAIR Compliance Implementation
  - Generate comprehensive metadata file
  - Assign persistent identifier to dataset
  - Apply appropriate data usage license
  - Submit to repository with standardized protocol
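
The post-experiment processing step can be sketched with the usual textbook definitions of conversion, selectivity, and yield, plus a standard error from repeated runs. The protocol does not state which formulas it treats as standardized, so the definitions below are an assumption, as are the function names and the stoichiometric factor `nu` (moles of reactant consumed per mole of product, taken as 1 by default).

```python
import math
import statistics


def conversion(f_in: float, f_out: float) -> float:
    """Fractional reactant conversion from inlet and outlet molar flows."""
    if f_in <= 0:
        raise ValueError("inlet flow must be positive")
    return (f_in - f_out) / f_in


def selectivity(f_product: float, f_in: float, f_out: float, nu: float = 1.0) -> float:
    """Fraction of consumed reactant that ended up in the product."""
    consumed = f_in - f_out
    if consumed <= 0:
        raise ValueError("no reactant was consumed")
    return nu * f_product / consumed


def product_yield(f_product: float, f_in: float, nu: float = 1.0) -> float:
    """Fraction of fed reactant converted to the product (conversion x selectivity)."""
    if f_in <= 0:
        raise ValueError("inlet flow must be positive")
    return nu * f_product / f_in


def mean_and_standard_error(values: list[float]) -> tuple[float, float]:
    """Sample mean and standard error of the mean for repeated measurements."""
    if len(values) < 2:
        raise ValueError("need at least two repeats to estimate uncertainty")
    mean = statistics.mean(values)
    sem = statistics.stdev(values) / math.sqrt(len(values))
    return mean, sem
```

All flows must share the same units (for example mol/s) so that the ratios are dimensionless. Reporting the standard error alongside each metric is one simple way to attach a measurement uncertainty; a fuller treatment would also propagate calibration and analytical errors.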

Protocol: Metadata Generation for Catalysis Data

Purpose: To create rich, structured metadata that enables discovery, interpretation, and reuse of catalytic data.

Procedure:

- Administrative Metadata Creation
  - Record creator information with ORCID identifiers
  - Document funding sources with grant numbers
  - Specify project context and objectives
- Technical Metadata Development
  - Describe experimental apparatus with manufacturer and model
  - Document analytical methods with parameters and conditions
  - Record data processing algorithms and software versions
- Scientific Metadata Compilation
  - Characterize catalyst materials with standardized descriptors
  - Document reaction conditions with appropriate units
  - Report performance metrics with uncertainty estimates
- Provenance Tracking
  - Record data lineage from raw measurements to reported values
  - Document processing steps and transformations
  - Note quality control procedures and validation checks
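
The four metadata groups in this protocol could be assembled into a single machine-readable file along the following lines. This is a minimal sketch that assumes JSON as the open format; the function name `build_metadata` and the top-level key names are hypothetical and are not a schema prescribed by CatTestHub.

```python
import json


def build_metadata(administrative: dict, technical: dict,
                   scientific: dict, provenance: list) -> str:
    """Combine the four metadata groups into one JSON document.

    Raises ValueError if any group is empty, so an incomplete record
    is caught before it is submitted anywhere.
    """
    record = {
        "administrative": administrative,  # creators, funding, project context
        "technical": technical,            # apparatus, methods, software versions
        "scientific": scientific,          # catalyst, conditions, metrics
        "provenance": provenance,          # ordered processing steps
    }
    missing = [name for name, content in record.items() if not content]
    if missing:
        raise ValueError("empty metadata groups: " + ", ".join(missing))
    # sort_keys gives a stable layout that is easier to compare between versions
    return json.dumps(record, indent=2, sort_keys=True)
```

A call such as `build_metadata({"creator_orcid": "0000-0000-0000-0000"}, {"detector": "GC"}, {"temperature_K": 500}, ["raw signal", "calibrated"])` returns a JSON string; every value in that call is a placeholder, not real data.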

Research Reagent Solutions for Catalysis Data Management

Table 3: Essential Research Reagents and Solutions for FAIR Catalysis Data [1] [24]

| Reagent/Solution | Function in FAIR Implementation | Application Example |
|---|---|---|
| Persistent Identifier Systems | Provides globally unique, permanent references to digital objects | DOI assignment to catalytic datasets in CatTestHub |
| Metadata Standards | Defines structured format for data description | Catalyst characterization metadata schema |
| Controlled Vocabularies | Ensures consistent terminology across datasets | Ontology for reaction types and catalyst materials |
| Data Repository Platforms | Provides indexed, searchable storage for data | CatTestHub institutional repository implementation |
| Standard Communication Protocols | Enables machine-to-machine data access | HTTP API for programmatic data retrieval |
| Provenance Tracking Tools | Documents data lineage and processing history | Workflow system capturing experimental steps |
| Data Licensing Frameworks | Specifies permissible reuse conditions | Creative Commons licenses for catalysis data |

Quality Control and Validation Methods

Data Quality Assessment Protocol

Purpose: To ensure that FAIR-compliant catalysis data maintains scientific rigor and reliability alongside improved accessibility.

Procedure:

- Technical Validation
  - Implement internal standard validation for analytical measurements
  - Conduct reproducibility testing with reference materials
  - Perform statistical analysis of measurement uncertainty
- Metadata Quality Control
  - Verify completeness against minimum information standards
  - Validate terminology against controlled vocabularies
  - Check identifier resolution and linkage
- FAIRness Assessment
  - Evaluate findability through search engine testing
  - Verify accessibility via protocol implementation checking
  - Assess interoperability through format validation
  - Confirm reusability via provenance and license verification
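
Parts of the metadata quality control step lend themselves to automation. The sketch below checks completeness and vocabulary use only; the required-field list and the function name are assumptions chosen for illustration, not a minimum information standard defined by CatTestHub. Identifier resolution is left out because it needs network access.

```python
# Hypothetical minimum field set; a real standard would define its own list.
REQUIRED_FIELDS = ("identifier", "license", "creator", "reaction", "catalyst", "provenance")


def check_metadata(record: dict, reaction_vocabulary: set[str]) -> list[str]:
    """Return a list of quality issues; an empty list means all checks passed."""
    issues = []
    # Completeness: every required field must be present and non-empty
    for field in REQUIRED_FIELDS:
        if not record.get(field):
            issues.append(f"missing field: {field}")
    # Terminology: the reaction name must come from the controlled vocabulary
    reaction = record.get("reaction")
    if reaction and reaction not in reaction_vocabulary:
        issues.append(f"uncontrolled reaction term: {reaction}")
    return issues
```

Returning a list of issues rather than a single pass/fail flag lets a contributor fix every problem in one revision before resubmitting.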

Performance Benchmarking Methodology

Purpose: To establish quantitative benchmarks for catalytic performance that enable meaningful comparison across different experimental systems.

Procedure:

- Reference Catalyst Testing
  - Select appropriate reference materials for specific reactions
  - Establish standardized testing protocols
  - Determine performance ranges and acceptance criteria
- Cross-Validation Implementation
  - Conduct interlaboratory comparison studies
  - Implement blind testing with known samples
  - Establish statistical confidence intervals
- Benchmark Integration
  - Incorporate reference data into CatTestHub
  - Develop normalization procedures for cross-study comparison
  - Create visualization tools for performance assessment
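
The normalization and confidence-interval steps above might look like the following sketch. It assumes normalization by a reference catalyst measured under the same conditions and a normal-approximation interval; neither choice is specified by the methodology itself, and all function names are hypothetical. For small numbers of repeats a t-distribution quantile would give a wider, more appropriate interval.

```python
import math
import statistics


def normalize_to_reference(rate: float, reference_rate: float) -> float:
    """Express a measured rate relative to the reference catalyst's rate."""
    if reference_rate <= 0:
        raise ValueError("reference rate must be positive")
    return rate / reference_rate


def confidence_interval(values: list[float], level: float = 0.95) -> tuple[float, float]:
    """Normal-approximation confidence interval for the mean of repeated runs."""
    if len(values) < 2:
        raise ValueError("need at least two repeats")
    mean = statistics.mean(values)
    sem = statistics.stdev(values) / math.sqrt(len(values))
    # Two-sided quantile of the standard normal distribution (about 1.96 for 95%)
    z = statistics.NormalDist().inv_cdf(0.5 + level / 2)
    return mean - z * sem, mean + z * sem


def within_acceptance(value: float, low: float, high: float) -> bool:
    """Check a result against acceptance limits set from reference testing."""
    return low <= value <= high
```

Normalized values from different laboratories can then be compared directly, and a laboratory whose reference-catalyst result falls outside the acceptance limits can be flagged before its data enter a cross-study comparison.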

The implementation of FAIR principles through specialized databases like CatTestHub represents a transformative approach to catalysis research data management. By making catalytic data findable through rich metadata and persistent identifiers, accessible through standardized protocols, interoperable through common formats and vocabularies, and reusable through clear licensing and provenance, these initiatives address critical challenges in reproducibility and knowledge integration. The structured approach outlined in these application notes provides researchers with practical methodologies for generating FAIR-compliant catalysis data that can accelerate materials discovery and optimization.