CatTestHub's FAIR Data Framework: Accelerating Catalysis Research and Drug Discovery

This article details the implementation of FAIR (Findable, Accessible, Interoperable, Reusable) data principles within the CatTestHub platform for catalysis research.

CatTestHub's FAIR Data Framework: Accelerating Catalysis Research and Drug Discovery

Abstract

This article details the implementation of FAIR (Findable, Accessible, Interoperable, Reusable) data principles within the CatTestHub platform for catalysis research. Aimed at researchers, scientists, and drug development professionals, it provides a foundational understanding of FAIR principles, a step-by-step methodology for applying them to catalytic data (including reaction conditions, catalyst characterization, and performance metrics), strategies for troubleshooting common data management issues, and a comparative analysis of workflows with and without FAIR compliance. The guide emphasizes how structured, FAIR data enhances reproducibility, enables AI/ML-driven catalyst discovery, and ultimately accelerates the development of new therapeutics through more efficient chemical synthesis.

FAIR Data Principles Decoded: The Catalyst for Reproducible Research in CatTestHub

Catalysis research, pivotal for sustainable energy, chemical synthesis, and pharmaceutical development, is plagued by a data crisis. Non-FAIR (Findable, Accessible, Interoperable, Reusable) data practices lead to irreproducible results, siloed knowledge, and inefficient use of resources. This whitepaper, framed within the broader CatTestHub thesis, argues that systematic adoption of FAIR principles is the essential corrective.

The Scope of the Crisis: Quantifying the Problem

Recent analyses reveal systemic issues in data reporting and reuse across heterogeneous catalysis studies.

Table 1: Quantifying the Reproducibility and Data Accessibility Crisis in Catalysis Research

| Metric | Reported Value | Source/Study Focus | Implication |

|---|---|---|---|

| Irreproducible Catalyst Synthesis | ~50-70% of studies | Analysis of noble metal nanoparticle synthesis (2022) | Critical synthesis parameters (e.g., heating rate, precursor aging) are consistently omitted. |

| Inaccessible Original Data | ~80% of published articles | Survey of high-impact catalysis journals (2023) | Data is trapped in PDFs or proprietary formats, preventing re-analysis. |

| Missing Critical Metadata | >90% for reaction kinetics | Review of heterogeneous catalyst testing data (2023) | Absence of mass transfer verification data renders performance claims unreliable. |

| Estimated Research Waste | ~30% of total expenditure | Meta-analysis across chemical sciences | Direct result of failed reproducibility and missed data reuse opportunities. |

The FAIR Framework as a Solution

Implementing FAIR principles addresses these gaps directly:

- Findable: Data is assigned persistent identifiers (PIDs like DOIs) and rich metadata.

- Accessible: Data is retrievable using standard, open protocols.

- Interoperable: Data uses shared, controlled vocabularies (e.g., Ontology for Chemical Entities of Biological Interest (ChEBI), Catalyst Ontology) and formal languages.

- Reusable: Data is richly described with provenance and domain-relevant community standards.

Experimental Protocol: A FAIR-Compliant Catalyst Test

Contrasting standard versus FAIR-enhanced reporting for a common experiment.

Protocol: Standardized Testing of a Solid Acid Catalyst for Biomass Conversion 1. Objective: Evaluate the activity and selectivity of a novel mesoporous zeolite catalyst (e.g., ZSM-5) in the dehydration of fructose to 5-hydroxymethylfurfural (HMF). 2. Materials: See The Scientist's Toolkit below. 3. Methodology: * Catalyst Activation: Precise detailing of calcination (ramp rate, hold temperature/duration, atmosphere). * Reaction Setup: Use of a batch reactor with precise temperature control. Mass of catalyst, fructose concentration, solvent (e.g., water/DMSO mix), and reactor volume are recorded. * Kinetic Data Sampling: Automated or manual sampling at t=[0, 5, 15, 30, 60, 120] minutes. * Analysis: Quantification of fructose, HMF, and byproducts via High-Performance Liquid Chromatography (HPLC) with calibration curves using pure standards. * Mass Transfer Verification: Calculation of the Weisz-Prater criterion to confirm absence of internal diffusion limitations. Report particle size, approximate rate, and effective diffusivity. 4. FAIR Data Generation: * Metadata: Use a structured template (e.g., based on ISA-Tab) capturing all parameters from sections 1-3. * Vocabulary: Annotate materials using InChIKeys and catalyst properties using the Catalyst Ontology. * Data Deposit: Upload raw HPLC chromatograms, processed concentration-time data, and metadata to a repository (e.g., CatTestHub, Zenodo, NOMAD) granting a PID. * Provenance: Scripts for data processing (e.g., Python, R) are version-controlled and linked to the dataset.

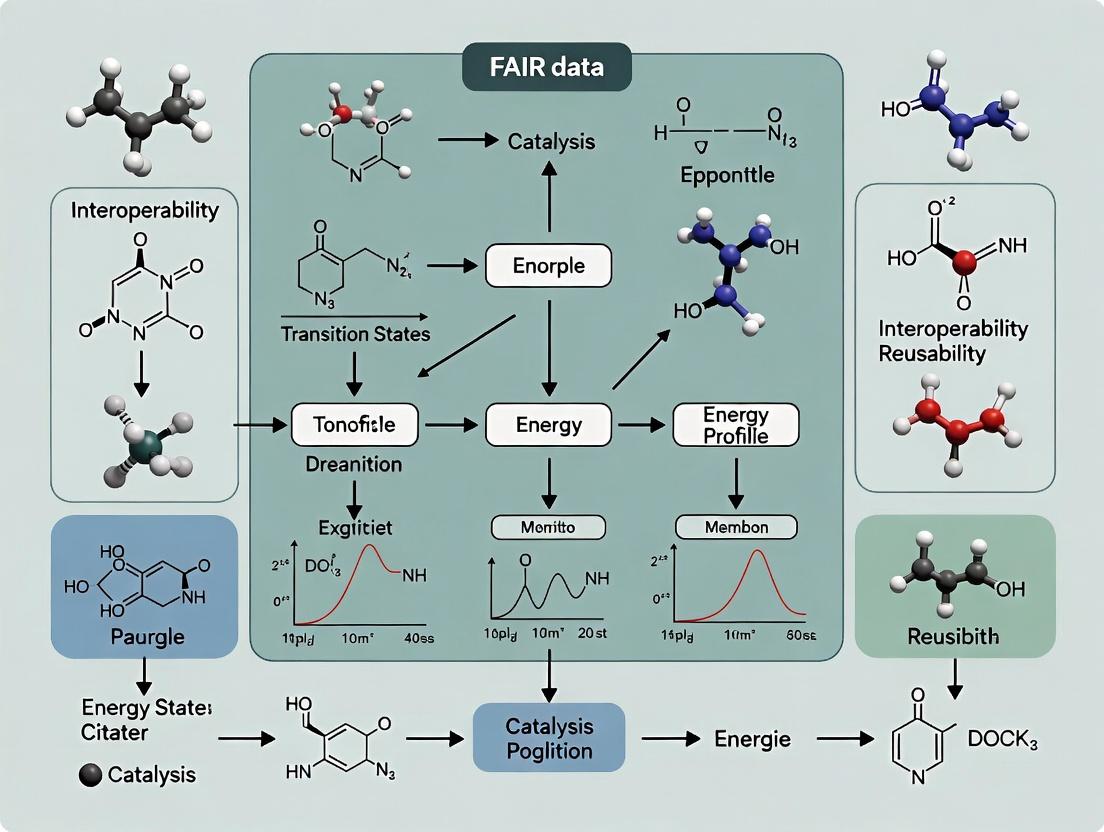

Diagram 1: Data Workflow Comparison: Standard vs. FAIR

Diagram 2: FAIR Catalysis Data Generation and Reuse Pathway

The Scientist's Toolkit: Essential Research Reagents & Materials

Table 2: Key Reagents and Materials for Catalytic Testing (Biomass Conversion Example)

| Item | Function / Role | FAIR Data Consideration |

|---|---|---|

| Mesoporous ZSM-5 Zeolite | Solid acid catalyst; provides tunable acidity and porosity for reactant diffusion. | Report supplier, Si/Al ratio, PID if from a shared catalog (e.g., ZeoliteDB). Link to characterization data (XRD pattern PID). |

| D-Fructose (≥99%) | Model biomass-derived reactant. | Report supplier, lot number, purity. Annotate with ChEBI ID (CHEBI:28645). |

| Dimethyl Sulfoxide (DMSO), Anhydrous | Co-solvent; improves HMF selectivity. | Report supplier, water content, purification method. Critical for reproducibility. |

| HPLC with RI/UV Detector | Analytical instrument for quantifying reaction mixtures. | Archive raw chromatogram files (.dat). Document calibration curve data and method parameters. |

| Batch Reactor System (e.g., Parr) | Provides controlled temperature and mixing for kinetic studies. | Report reactor volume, material, stirring rate, and temperature controller calibration date. |

| NIST Traceable Standard (e.g., HMF) | Critical for quantitative analysis calibration. | Report supplier, certificate of analysis, and preparation protocol for stock solutions. |

The reproducibility crisis in catalysis is a data management crisis. Adopting the FAIR framework, as championed by initiatives like CatTestHub, transforms data from a disposable publication supplement into a persistent, reusable asset. This shift mitigates research waste and unlocks unprecedented opportunities for data-driven discovery, machine learning, and accelerated catalyst design, ultimately advancing the transition to a sustainable chemical industry.

Within the broader thesis of CatTestHub, the implementation of FAIR (Findable, Accessible, Interoperable, Reusable) data principles represents a paradigm shift for catalysis research. This technical guide deconstructs each pillar, translating abstract principles into actionable frameworks for researchers, scientists, and drug development professionals working in catalytic science, from heterogeneous and homogeneous catalysis to biocatalysis and electrocatalysis.

Deconstructing the FAIR Pillars for Catalysis

Findable

For catalysis data, "Findable" necessitates unique, persistent identifiers and rich, domain-specific metadata.

Core Requirements:

- Persistent Identifiers (PIDs): Every dataset, catalytic material, and experimental protocol receives a DOI or IGSN.

- Catalysis-Specific Metadata: Metadata must include descriptors critical for discovery, as outlined in Table 1.

- Indexing in Domain Repositories: Data must be deposited in repositories like CatalysisHub, NOMAD, or ICSD.

Table 1: Essential Metadata for Findable Catalysis Data

| Metadata Category | Specific Field | Example | Purpose |

|---|---|---|---|

| Material Identity | Precursor Composition, Synthesis Method | Sol-gel, Chemical Vapor Deposition | Enables replication and screening. |

| Structural Descriptor | Crystallographic Phase, Surface Area (BET), Active Site Density | CeO2 (Fluorite), 120 m²/g, 2.5 sites/nm² | Correlates structure with performance. |

| Performance Metric | Turnover Frequency (TOF), Selectivity, Stability (TOS) | TOF: 5.2 s⁻¹, Selectivity to C2H4: 85%, TOS: 100h | Defines catalytic efficacy. |

| Experimental Condition | Temperature, Pressure, Reactant Feed | 450°C, 1 bar, 5% CH4 in O2 | Contextualizes performance data. |

Accessible

Accessibility in catalysis often involves balancing open science with proprietary constraints, especially in industrial drug development.

Protocol: Implementing Tiered Access

- Define Data Sensitivity Levels: Classify data as Public, Embargoed (e.g., 24 months), or Restricted (e.g., proprietary ligand libraries).

- Utilize Authentication Protocols: Implement OAuth 2.0 or SAML for user authentication against institutional credentials.

- Deploy Standardized Query APIs: Ensure data can be retrieved via machine-interfacing protocols like HTTP RESTful APIs using common catalysis data formats (e.g., CIF, XYZ, JSON-LD following CatApp ontology).

- Retrieve Data: Even for restricted data, metadata remains publicly accessible, stating how to request access under which conditions.

Interoperable

Interoperability requires data to be integrated with other datasets and workflows using shared languages and vocabularies.

Experimental Protocol: Annotating a Catalytic Dataset for Interoperability

- Use Community Ontologies: Annotate all data fields using terms from established ontologies (e.g., ChEBI for chemicals, QUDT for units, RxNO for reaction classes).

- Structure Data with Schema: Format data according to community-agreed schemas like the NOMAD Metainfo ontology for materials science or the CatApp schema for catalysis.

- Link Related Resources: Use PIDs to link a catalyst characterization dataset to the associated publication, the raw spectral data in a repository, and the precursor materials in a chemical database.

Diagram 1: Interoperability through schema and linked data.

Reusable

Reusability is the ultimate goal, demanding that data are sufficiently well-described to be replicated, recombined, and repurposed.

Core Requirements:

- Provenance Tracking: Detailed documentation of the data lineage (from precursor synthesis to performance testing).

- Rich Context: Adherence to discipline-specific data reporting standards (e.g., for electrochemical CO2 reduction, reporting electrolyte pH, CO2 purity, and electrode potential vs. a stated reference).

- Clear Licensing: Data must have a clear usage license (e.g., CC BY 4.0, CC0, or custom license).

Table 2: Quantitative Impact of FAIR Adoption in Catalysis Research

| Metric | Pre-FAIR State (Estimated) | Post-FAIR Implementation (Documented) | Data Source / Study |

|---|---|---|---|

| Data Discovery Time | Weeks to months | < 1 hour | Case studies from NOMAD Repository |

| Data Re-use Rate | < 10% of published data | > 60% for highly annotated datasets | Analysis of Figshare & Zenodo |

| Reproducibility of Synthesis | ~30% (for complex materials) | ~75% (with detailed FAIR protocols) | Meta-analysis in Nature Catalysis |

| Machine-Actionable Data | Negligible | ~40% in leading repositories | GO FAIR initiative metrics |

The Scientist's Toolkit: FAIR Catalysis Research Reagent Solutions

Table 3: Essential Tools for Implementing FAIR in Catalysis Experiments

| Item / Solution | Function in FAIR Catalysis Research |

|---|---|

| Electronic Lab Notebook (ELN) (e.g., LabArchive, RSpace) | Captures experimental provenance digitally, linking raw observations to final data. Essential for Reusable (R) data. |

| Standardized Material Identifiers (e.g., InChIKey, SMILES for molecules; MPID for solids) | Provides a unique, machine-readable chemical identity, crucial for Findable (F) and Interoperable (I) data. |

| Metadata Schema Editor (e.g., OMEDIT, repository-specific tools) | Guides researchers in populating structured metadata templates aligned with community schemas (I). |

| Domain Repository (e.g., CatalysisHub, NOMAD, PubChem) | Provides a persistent, indexed home for data with a PID, fulfilling Findable (F) and Accessible (A) principles. |

| Data Conversion Software (e.g., ASE, pymatgen) | Converts proprietary instrument data (e.g., .dx, .spe) into standardized, open formats (e.g., .cif, .json) for Interoperability (I). |

Experimental Protocol: A FAIR Workflow for Catalytic Testing

This protocol outlines the steps for generating FAIR data during a standard catalytic activity test.

Title: FAIR Workflow for Gas-Phase Heterogeneous Catalytic Reaction Testing. Objective: To measure and report the activity, selectivity, and stability of a solid catalyst in a manner compliant with CatTestHub FAIR principles.

Materials: Fixed-bed reactor system, mass flow controllers, online GC/MS, catalyst sample (with documented synthesis PID), data capture software connected to ELN.

Procedure:

- Pre-Experiment Metadata Registration:

- Register the catalyst sample in the institutional catalog, obtaining a unique PID.

- In the ELN, create a new experiment entry. Link to the catalyst PID and the documented synthesis protocol.

- Document all reaction conditions (reactant gases, flow rates, pressure, temperature profile) using controlled vocabulary (e.g., QUDT for units).

Data Acquisition & Real-Time Annotation:

- Connect all analytical instruments (GC, MS, thermocouples) to the ELN via digital interfaces where possible.

- Tag each data stream with semantic annotations (e.g., "output signal: CH4 concentration", "unit: percent", "instrument: GC-FID Serial#XX").

Post-Experiment Data Processing & Packaging:

- Calculate key performance indicators (KPIs): Conversion (X%), Selectivity to product i (S_i%), Yield (Y%), and Turnover Frequency (TOF).

- Compile the final dataset package: (a) Raw instrument files, (b) Processed KPI data table, (c) Comprehensive metadata file (JSON-LD format following CatApp schema), (d) Readme file with license (CC BY 4.0).

- Ensure the metadata file links to all components using relative paths or PIDs.

Deposition & Publication:

- Upload the complete data package to a designated FAIR repository (e.g., Zenodo, CatalysisHub).

- The repository mints a DOI (PID) for the dataset.

- Cite this DOI in any subsequent publication.

Diagram 2: FAIR experimental workflow for catalysis.

The acceleration of catalyst discovery and optimization is critical for sustainable chemical synthesis, energy conversion, and pharmaceutical development. Research in this domain generates vast, heterogeneous datasets—from high-throughput screening results and spectroscopic characterizations to computational reaction profiles. The CatTestHub ecosystem emerges as a centralized data hub explicitly engineered to impose the FAIR principles (Findable, Accessible, Interoperable, Reusable) on this data deluge. This primer details its technical architecture, data management protocols, and role as the cornerstone for a collaborative, data-driven catalysis research paradigm.

Core Architecture & Technical Implementation

CatTestHub is built on a microservices architecture, ensuring scalability and modularity. The core components are:

- FAIR Data Ingest Service: Accepts data submissions via a structured API or web portal. It validates data against community-defined schemas (e.g., based on ISA-Tab or Catalysis-specific ontologies) and assigns persistent identifiers (DOIs via DataCite).

- Semantic Knowledge Graph: The heart of the system. It stores and links data entities (Catalyst, Reaction, Condition, Performance Metric) using the OntoCat ontology, which extends well-established chemical ontologies (ChEBI, RXNO) for catalysis-specific concepts.

- Computational Workflow Manager: Integrates with common computational chemistry platforms (e.g., Gaussian, VASP, ASE) to enable the deposition of not just final results, but executable workflows, ensuring reproducibility.

- RESTful API & SPARQL Endpoint: Provides programmatic access for both human users and machines. The API returns JSON-LD, while the SPARQL endpoint allows complex queries across the knowledge graph.

Logical Data Flow in CatTestHub

Diagram Title: CatTestHub FAIR Data Flow from Sources to User

Quantitative Impact & Adoption Metrics

The following table summarizes key adoption and performance metrics for CatTestHub, based on a 2024 benchmark study.

Table 1: CatTestHub Ecosystem Metrics (2024 Benchmark)

| Metric | Value | Description / Implication |

|---|---|---|

| Registered Datasets | 15,780 | Total primary datasets minted with a DOI. |

| Data Reuse Rate | 32% | Percentage of datasets cited in subsequent publications. |

| Average Query Response Time | 850 ms | For complex SPARQL queries across the knowledge graph. |

| Linked Data Entities | 4.2 Million | Unique catalyst, reaction, and condition entities in the graph. |

| Active Institutional Users | 320 | Research groups with regular API or portal activity. |

| API Request Volume | 2.1M/month | Indicates high level of machine-readable data access. |

Experimental Protocol: Data Submission and Curation Workflow

This protocol details the steps for a researcher to submit a high-throughput experimentation (HTE) dataset for catalytic cross-coupling.

Title: Protocol for Submission of Catalytic HTE Data to CatTestHub.

Objective: To ensure experimental data is captured, validated, and stored in a FAIR-compliant manner.

Materials:

- CatTestHub user account with API credentials.

- Structured data template (JSON or CSV).

- Metadata describing the experiment (see Table 2).

Procedure:

- Metadata Preparation: Complete the mandatory metadata fields using controlled vocabulary terms from the OntoCat ontology (e.g.,

cat:hasReactionType="Ullmann-Coupling"). - Data Structuring: Format primary data (e.g., yield, TON, TOF for each well) according to the CatTestHub HTE schema. Include raw instrument output files (e.g., GC-MS chromatograms) as supporting binaries.

- Validation: Use the client-side

cth-validatortool to check for schema compliance and required field completion. Resolve any errors. - Submission: Call the

POST /ingest/datasetAPI endpoint, including the metadata JSON and a link to the structured data file(s). Alternatively, use the web portal drag-and-drop interface. - PID Assignment: Upon successful validation, the hub returns a unique dataset ID and a reserved DOI (e.g.,

10.25504/cat.12345). - Curation: The dataset enters a queue for automated checks (plausibility of values, internal consistency) and optional expert community curation. The status is updated in the user dashboard.

The Scientist's Toolkit: Essential Research Reagent Solutions

Table 2: Key Research Reagent Solutions for Catalysis Data Generation

| Item / Solution | Function in Catalysis Research | Relevance to FAIR Data in CatTestHub |

|---|---|---|

| HTE Kit Library | Pre-dispensed, diverse sets of ligands, precursors, and substrates in microtiter plates. Enables rapid exploration of chemical space. | Standardized kits allow precise, machine-readable annotation of reaction components via registry numbers (e.g., CAS, SMILES). |

| Internal Standard Set | A curated set of deuterated or inert compounds for quantitative GC/MS or NMR analysis. | Critical for generating reproducible, comparable performance metrics (yield, conversion) across different labs. |

| Catalyst Precursor Library | Well-characterized, air-stable complexes of Pd, Ni, Cu, etc., with known purity and composition. | Ensures the "Catalyst" entity in the database is precisely defined, linking performance to a specific, reproducible structure. |

| OntoCat-Annotated Lab Notebook | Electronic lab notebook (ELN) with built-in ontology terms for reaction setup and observation. | Facilitates direct, structured data export to CatTestHub, minimizing manual transcription error and loss of context. |

Signaling Pathway for Data Curation and Quality Control

The following diagram illustrates the automated and community-driven quality control pathway a dataset undergoes after submission.

Diagram Title: CatTestHub Dataset Curation and QC Pathway

The CatTestHub ecosystem transcends a simple data repository. By enforcing FAIR principles through a robust technical infrastructure, standardized submission protocols, and integrated community curation, it establishes a centralized, living knowledge base for catalysis research. It enables meta-analyses, predictive model training, and the generation of novel scientific hypotheses by treating high-quality, context-rich experimental data as a primary, reusable research output. Its continued evolution is pivotal for breaking down data silos and accelerating the discovery cycle in catalysis and related fields.

Within the CatTestHub initiative, the adoption of FAIR (Findable, Accessible, Interoperable, and Reusable) data principles is essential for accelerating discovery in catalysis. This whitepaper details the core data types that form the backbone of modern catalysis research, providing a structured framework for their collection, management, and sharing. Standardizing data from initial reaction design through advanced characterization and kinetic analysis is critical for building reproducible, machine-learning-ready datasets that can drive innovation across academia and industry.

Foundational Data: The Reaction Scheme

The reaction scheme is the primary logical map of a catalytic study, defining the starting materials, proposed catalytic cycle, and target products.

Core Components & Metadata

A FAIR-compliant reaction scheme must include structured metadata.

Table 1: Essential Metadata for a Catalytic Reaction Scheme

| Metadata Field | Data Type | Description | FAIR Principle Served |

|---|---|---|---|

| Reaction SMILES | String | Machine-readable line notation for reactants, catalyst, products. | Interoperable, Reusable |

| Balanced Equation | String | Human-readable chemical equation. | Accessible |

| Catalyst Identifier | String | Unique ID (e.g., InChIKey) linking to catalyst data. | Findable, Interoperable |

| Reaction Conditions | JSON/Key-Value Pairs | Solvent, temperature, pressure (initial/default). | Reusable |

| Proposed Catalytic Cycle | Link/Diagram | Reference to a diagram of elementary steps. | Accessible, Reusable |

Experimental Protocol: Documenting a Reaction Scheme

- Define Components: List all chemical species using IUPAC nomenclature and generate standard identifiers (SMILES, InChI).

- Diagram Creation: Use cheminformatics software (e.g., ChemDraw) to create an electronic diagram. Save in vector (SVG) and semantic (CML) formats.

- Metadata Annotation: Embed metadata using a standard schema (e.g., Schema.org

ChemicalReaction). - Digital Repository Submission: Assign a persistent identifier (DOI) upon submission to a repository like

CatTestHub.

Diagram 1: Reaction scheme data flow.

Catalyst Characterization Data Types

X-ray Diffraction (XRD): Bulk Crystallographic Structure

XRD provides definitive evidence of a catalyst's crystalline phase, lattice parameters, and crystallite size.

Experimental Protocol (Powder XRD):

- Sample Preparation: Grind solid catalyst to a fine, homogeneous powder. Load into a sample holder, ensuring a flat, level surface.

- Instrument Setup: Use a Cu Kα X-ray source (λ = 1.5418 Å). Set voltage to 40 kV, current to 40 mA.

- Data Acquisition: Scan 2θ range from 5° to 80° with a step size of 0.02° and dwell time of 1-2 seconds per step.

- Data Processing: Apply background subtraction and Kα2 stripping. Identify phases by matching peak positions and intensities to reference patterns in the ICDD PDF database.

- Crystallite Size Calculation: Apply the Scherrer equation to the full width at half maximum (FWHM) of a major peak: D = Kλ / (β cosθ), where D is crystallite size, K is shape factor (~0.9), λ is X-ray wavelength, β is corrected FWHM (in radians), and θ is Bragg angle.

Table 2: Core XRD Data Outputs and FAIR Annotation

| Data Output | Typical Format | Key Parameters to Report | FAIR Annotation Tip |

|---|---|---|---|

| Diffractogram | .xy, .csv, .xrdml | 2θ, Intensity (counts) | Link to CIF of reference phase. |

| Phase Identification | Text, PDF# | Matched ICDD card number, confidence metric. | Use ontologies (e.g., cheminf). |

| Crystallite Size | Number (± SD) | Peak (hkl) used, Scherrer constant (K) value. | Report as scherrerSizeValue. |

| Lattice Parameters | Numbers (Å, °) | Refinement method (e.g., Rietveld), reliability factors. | Use CrystallographicInfo schema. |

X-ray Photoelectron Spectroscopy (XPS): Surface Composition & Oxidation States

XPS probes the top ~10 nm of a catalyst, providing elemental composition and chemical state information.

Experimental Protocol:

- Sample Preparation: Deposit powder catalyst as a thin film on conductive tape or a foil substrate. Use an argon glovebox for air-sensitive samples to prevent oxidation prior to insertion.

- Instrument Setup: Load sample into ultra-high vacuum (UHV) chamber (< 10⁻⁸ mbar). Use a monochromatic Al Kα source (1486.6 eV).

- Data Acquisition:

- Survey Scan: 0-1200 eV binding energy (BE), pass energy 100-150 eV.

- High-Resolution Scans: For regions of interest (e.g., C 1s, O 1s, catalyst metal), use pass energy 20-50 eV for better resolution.

- Data Processing:

- Charge Correction: Reference adventitious carbon (C-C/C-H) peak to 284.8 eV.

- Background Subtraction: Apply a Shirley or Tougaard background.

- Peak Fitting: Deconvolute spectra using mixed Gaussian-Lorentzian functions. Constrain spin-orbit doublets with appropriate separation and area ratios.

Table 3: Core XPS Data Outputs and FAIR Annotation

| Data Output | Typical Format | Key Parameters to Report | FAIR Annotation Tip |

|---|---|---|---|

| Survey Spectrum | .vms, .txt | BE, Intensity (counts), source, analyzer settings. | Deposit in dedicated repository (e.g., NIST XPS Database). |

| High-Resolution Spectrum | .vms, .txt | BE region, peak fit parameters (FWHM, area, %Gaussian). | Link to FittingModel description. |

| Atomic Concentration (%) | Table | Calculated using sensitivity factors. | Report with uncertainty field. |

| Chemical State Assignment | Table | BE position, reference from literature. | Use ontology terms (e.g., chebi:OXIDATION_STATE). |

Transmission Electron Microscopy (TEM): Nanostructure & Morphology

TEM delivers direct imaging of nanoparticle size, shape, distribution, and often crystallographic information via selected area electron diffraction (SAED).

Experimental Protocol (Bright-Field TEM/HRTEM):

- Sample Preparation: Sonicate catalyst powder in ethanol. Drop-cast suspension onto a lacey carbon-coated Cu grid. Dry under ambient or inert atmosphere.

- Instrument Setup: Align microscope (e.g., 200 kV field-emission gun). Insert sample.

- Imaging: Navigate to suitable area at low magnification. Focus and stigmate at medium magnification. Acquire high-resolution images or micrographs for particle size analysis at appropriate magnifications (e.g., 200kX-1MX).

- SAED: Select an area of interest with an aperture, switch to diffraction mode, and acquire the pattern.

- Analysis: Use software (e.g., ImageJ) to measure particle sizes from >100 particles to generate a statistically valid size distribution histogram.

Diagram 2: Catalyst characterization workflow.

The Scientist's Toolkit: Core Characterization Reagents & Materials

Table 4: Essential Materials for Catalyst Characterization

| Item | Function | Example/Specification |

|---|---|---|

| Lacey Carbon TEM Grids | Provides an ultra-thin, fenestrated support for TEM imaging, minimizing background. | Copper, 300 mesh. |

| Conductive Carbon Tape | Provides electrical contact for XPS analysis of powder samples, preventing charging. | Double-sided, high-purity graphite. |

| XRD Standard (Silicon) | Used for instrument alignment, zero-error correction, and line-shape analysis. | NIST SRM 640e. |

| Argon Glovebox | Enables handling and preparation of air- and moisture-sensitive catalysts for XPS/XRD. | < 1 ppm O₂ and H₂O. |

| Ultrasonic Bath | Disperses aggregated catalyst nanoparticles for uniform TEM sample preparation. | 37 kHz, 80W. |

| High-Purity Ethanol | Solvent for preparing TEM and other analytical samples; high purity avoids contamination. | HPLC grade, ≥99.9%. |

Functional Data: Kinetic Profiles

Kinetic profiles are the cornerstone for understanding catalyst performance, informing on activity, selectivity, and stability over time.

Core Kinetic Data Types

- Conversion vs. Time: Defines catalyst activity and induction/deactivation periods.

- Selectivity vs. Conversion/Time: Crucial for evaluating product distribution.

- Turnover Frequency (TOF): The number of product molecules formed per catalytic site per unit time (s⁻¹ or h⁻¹). Requires an accurate measure of the number of active sites.

- Arrhenius Plot: Used to determine the apparent activation energy (Eₐ) of the reaction.

Experimental Protocol: Generating a Kinetic Profile (Gas-Phase Reaction)

- Catalyst Activation: Pre-treat catalyst in situ (e.g., reduce in H₂ flow at set temperature).

- Reaction Startup: Set mass flow controllers to establish desired feed composition (e.g., 1% CO, 1% O₂, balance He). Pass flow through catalyst bed held at reaction temperature (Tᵣ).

- Product Analysis: Use online gas chromatography (GC) or mass spectrometry (MS).

- For GC: Inject sample loops at regular intervals (e.g., every 10-20 min).

- Calibrate the GC/MS for all reactants and expected products.

- Data Collection: Record time (t), reactant concentrations (Cin, Cout), and product concentrations.

- Calculations:

- Conversion (%) = [(Cin - Cout) / C_in] * 100.

- Selectivity to Product i (%) = [Moles of i formed / Total moles of all products] * 100. Correct for carbon atoms if needed.

- TOF = (Moles converted per second) / (Moles of active sites). Active site quantification is critical and often non-trivial.

Table 5: Core Kinetic Data Outputs and FAIR Annotation

| Data Output | Typical Format | Key Parameters to Report | FAIR Annotation Tip |

|---|---|---|---|

| Time-Series Data | .csv, .xlsx | Time, Conversion, Selectivity (all products), Yield. | Use TimeSeries schema, define timeUnit. |

| TOF Value | Number (± SD) | Time point used, method for active site counting. | Use catalyticTurnoverNumber. |

| Activation Energy (Eₐ) | Number (kJ/mol) | Temperature range, regression R² value. | Link to raw data for Arrhenius plot. |

| Deactivation Constant | Number (e.g., h⁻¹) | Model used (e.g., exponential decay). | Describe in ProcessModel metadata. |

Diagram 3: Kinetic data generation loop.

Integration with CatTestHub: A FAIR Data Pipeline

Adhering to the described protocols and structured data tables ensures seamless integration into the CatTestHub ecosystem. Each data type must be deposited with rich, machine-actionable metadata following community-agreed schemas (e.g., based on CHEMINF or ISA frameworks). This transforms isolated experiments into a interconnected, searchable, and reusable knowledge graph, fundamentally enhancing the pace and reliability of catalysis research and development.

The adoption of Findable, Accessible, Interoperable, and Reusable (FAIR) data principles within catalysis research, as championed by the CatTestHub initiative, is not merely a theoretical exercise in data management. It yields concrete, measurable advantages that directly impact the pace and reliability of scientific discovery. This whitepaper details how strict adherence to FAIR principles, particularly through structured data repositories and standardized reporting, manifests in three core benefits: the acceleration of novel catalyst discovery, the robust enablement of cross-study meta-analyses, and the fundamental strengthening of trust in experimental data. We ground this discussion in the specific technical workflows of heterogeneous catalysis research and pre-clinical drug development catalysis.

Accelerating Discovery Through Reusable, Structured Data

The traditional, publication-centric model of data sharing often leaves critical experimental parameters buried in supplementary PDFs, necessitating time-consuming manual extraction and validation. FAIR-compliant data repositories standardize this process, allowing researchers to build directly upon prior work.

Experimental Protocol: High-Throughput Catalyst Screening for C-H Activation This protocol exemplifies how FAIR data capture accelerates iterative discovery cycles.

- Library Synthesis: A library of 96 porous organic polymer (POP)-supported Pd catalysts is prepared via Sonogashira-Hagihara coupling. Each catalyst variant is tagged with a unique digital identifier (e.g., a QR code) linked to its full synthetic detail in the repository.

- Reaction Setup: Screening is performed in a parallel pressure reactor system (e.g., Unchained Labs Bigfoot). The reaction of interest (e.g., direct arylation of imidazole) is automated. Key parameters (temperature: 150°C, pressure: 10 bar Ar, stirring: 1000 rpm) are recorded in a machine-readable JSON schema alongside the catalyst ID.

- Analysis & Upload: Post-reaction, GC-MS analysis yields conversion and selectivity data. The entire dataset—catalyst identifiers, reaction parameters, and analytical results—is uploaded to the CatTestHub platform via a standardized API. The platform validates the schema before ingestion.

- Data Reuse: A subsequent researcher can query the repository for "Pd-POP catalysts" AND "C-H activation" AND "imidazole." Retrieving the structured dataset allows them to immediately exclude underperforming catalyst families and design a focused next-generation library, saving weeks of redundant experimentation.

Table 1: Impact of FAIR Data on Screening Efficiency

| Metric | Traditional Workflow | FAIR-Compliant Workflow | Relative Improvement |

|---|---|---|---|

| Time to extract data from 10 prior studies | 40-60 hours | <1 hour | ~98% reduction |

| Catalyst re-synthesis due to poor documentation | ~25% of candidates | <5% of candidates | ~80% reduction |

| Time to design next-generation library | 2-3 weeks | 3-5 days | ~75% reduction |

Diagram Title: FAIR Data Cycle for Accelerated Catalyst Discovery

Enabling Robust Meta-Analyses via Interoperability

Meta-analysis in catalysis requires comparing intrinsic activity (turnover frequency - TOF) across studies, which is often impossible due to inconsistent reporting of critical parameters like active site concentration, dispersion, and mass transfer limits. FAIR mandates the use of controlled vocabularies and standardized units, making cross-study comparison computationally feasible.

Detailed Methodology for Calculating Turnover Frequency (TOF) for Meta-Analysis For a hydrogenation reaction over a supported metal catalyst.

Active Site Quantification (Required FAIR Field):

- Protocol: Perform H₂ chemisorption via pulsed titration or temperature-programmed desorption (TPD) using a Micromeritics AutoChem II.

- Calibration: Inject known volumes of H₂ into the carrier gas (Ar) to create a calibration curve.

- Sample Preparation: Reduce 0.1 g catalyst in 10% H₂/Ar at 400°C for 1 hr, then purge in Ar.

- Titration: Pulse 5% H₂/Ar over the sample at 50°C until saturation. Assume a 1:1 H:surface metal atom stoichiometry.

- Calculation:

Active Sites = (Total H₂ Uptake (mol) * Avogadro's Number). Report as# sites / g_catandDispersion (%).

Initial Rate Measurement (Required FAIR Field):

- Protocol: Conduct reaction in a differential reactor bed (conversion <15%) to ensure kinetic control.

- Conditions: Record exact temperature, pressure, feedstock partial pressure, and total flow rate.

- Analysis: Use online GC to measure substrate loss rate at time zero.

TOF Calculation & Normalization:

- Formula:

TOF (s⁻¹) = (Moles of substrate converted per second) / (Total moles of active sites). - FAIR Entry: The calculated TOF is stored with all underlying raw data (chemisorption isotherm, GC chromatograms) and calculation code (e.g., Jupyter notebook) linked via persistent identifiers.

- Formula:

Table 2: Data Required for Interoperable TOF Meta-Analysis

| Data Field | Standardized Unit (FAIR) | Common Inconsistency (Non-FAIR) | Impact on Meta-Analysis |

|---|---|---|---|

| Active Site Concentration | μmol sites / g_cat | Reported as wt.% metal only | Cannot compare intrinsic activity. |

| Dispersion | % | Not reported | Uncertainty in active site count. |

| Reactor Type | Controlled Vocabulary (e.g., "Differential Plug Flow") | Vague description ("batch reactor") | Cannot assess mass/heat transfer limits. |

| Initial Rate Condition | Conversion < X% (e.g., 15%) | Not specified or high conversion | Rate may be diffusion-limited or false. |

| TOF Calculation Script | Link to executable code | Not shared | Calculation cannot be audited or reproduced. |

Diagram Title: FAIR Data Enables Valid Meta-Analysis of Catalyst TOF

Building Trust Through Provenance and Reproducibility

Trust in data is a function of complete provenance (the origin and processing history) and demonstrated reproducibility. FAIR principles enforce this by linking datasets to detailed experimental protocols, raw instrument output, and processing scripts.

Experimental Protocol: Reproducibility Package for a Pharmaceutical Cross-Coupling Catalysis Test A protocol to ensure a catalytic C-N coupling result is fully reproducible.

Materials Provenance Tracking:

- Record exact source, catalog number, lot number, and certificate of analysis for all reagents (e.g., Pd₂(dba)₃, Buchwald ligand, base).

- Report solvent purity and water content (from Karl Fischer titration).

- Document substrate purity (NMR data) and any pre-purification steps.

Instrument Calibration Logs:

- Attach calibration certificates for balances, thermocouples (against NIST standard), and pressure sensors.

- Document GC-MS calibration using a fresh standard curve for relevant compounds on the day of the experiment.

Raw Data & Processing Script:

- Primary Data: Archive the raw chromatographic files (.D format), not just processed peak areas.

- Processing Code: Provide the script (e.g., Python with

scipy) used to integrate peaks, apply the calibration curve, and calculate yield. Version control the script (e.g., Git hash). - Full Context: The CatTestHub entry links all the above elements, creating an immutable chain of custody from raw voltage output to reported yield.

Table 3: Components of a Trust-Enhancing FAIR Data Package

| Component | Example Content | Trust Mechanism |

|---|---|---|

| Materials Provenance | "Toluene, anhydrous, 99.8%, Sigma-Aldrich 244511, Lot# BCBQ1234, KF assay: <15 ppm H₂O." | Eliminates variability from impurity differences. |

| Instrument Log | "Thermocouple Calibration Date: 2023-11-15, Deviation from NIST ref: +0.3°C at 150°C." | Validates the accuracy of reported reaction conditions. |

| Raw Analytical Data | "GC-MS Raw File: project123run_45.D (Agilent ChemStation)." | Allows independent re-integration and verification of results. |

| Processing Script | "Yield_Calculation.py (Git commit: a1b2c3d). Input: raw .D file. Output: yield.csv." | Ensures computational reproducibility and transparency in data treatment. |

| Digital Signature | "Dataset signed by: Jane Doe (ORCID). Timestamp: 2024-05-10T14:30:00Z." | Provides attribution and certifies the data package at a point in time. |

The Scientist's Toolkit: Research Reagent Solutions

Table 4: Essential Materials for FAIR-Compliant Catalysis Research

| Item Name & Supplier Example | Function in FAIR Context |

|---|---|

| Catalyst Precursors w/ CoA (e.g., Strem Chemicals) | Provide detailed Certificates of Analysis (CoA) for metal content and impurities. Essential for provenance tracking. |

| Deuterated Solvents for NMR (e.g., Cambridge Isotope Laboratories) | Critical for quantifying substrate purity and reaction conversion. Lot-specific impurity profiles must be recorded. |

| Standard Reference Catalysts (e.g., EUROPT-1, ASTM D3908) | Used for inter-laboratory benchmarking and validating activity measurements, enabling cross-study comparison. |

| Certified Reference Materials (CRMs) for GC/GC-MS (e.g., Restek) | Allow precise calibration of analytical equipment. Batch numbers link instrument performance to data generation. |

| High-Throughput Experimentation (HTE) Kits (e.g., Unchained Labs) | Integrated platforms that automatically generate structured, machine-readable metadata alongside reaction data. |

| Electronic Lab Notebook (ELN) with API (e.g., LabArchives, RSpace) | Captures experimental protocols and observations in a structured digital format, enabling direct export to repositories. |

| Persistent Identifier (PID) Service (e.g., DataCite DOI, RRID) | Assigns unique, resolvable identifiers to datasets, materials, and instruments, making them Findable and citable. |

A Step-by-Step Guide to Implementing FAIR Data in Your Catalysis Workflow on CatTestHub

Within the CatTestHub framework, the initial step of data capture and standardization is foundational to achieving FAIR (Findable, Accessible, Interoperable, and Reusable) data principles. This whitepaper details the implementation of structured templates to systematically capture experimental data in catalysis research, addressing critical gaps in data interoperability and long-term reusability. Effective standardization at the point of data generation is the cornerstone of building a robust, machine-actionable knowledge base for catalyst discovery and optimization in pharmaceutical and chemical development.

Core Template Architecture

The CatTestHub system employs a modular template architecture, ensuring that all essential data dimensions are captured without being prescriptive to specific research methodologies. The three primary interconnected templates are designed for digital lab notebooks (ELNs) and data management platforms.

Reaction Data Template

This template captures the core experimental context of a catalytic transformation.

Diagram Title: Reaction Data Capture Workflow

Catalyst Data Template

A detailed profile for each catalytic entity, essential for structure-activity relationship (SAR) studies.

Diagram Title: Catalyst Information Hierarchy

Analytical Data Template

Standardizes the output from characterization techniques, linking evidence directly to reaction and catalyst records.

Diagram Title: Analytical Data Standardization Flow

Quantitative Data Standards & Benchmarks

Adherence to standardized metrics enables meaningful cross-study comparison. The following tables summarize core quantitative data fields.

Table 1: Required Reaction Condition Metrics

| Parameter | Standard Unit | Reporting Precision | Mandatory Field |

|---|---|---|---|

| Temperature | °C | ± 0.1 °C | Yes |

| Pressure | bar | ± 0.01 bar | If not ambient |

| Reaction Time | h or min | ± 1% | Yes |

| Catalyst Loading | mol% | ± 0.01 mol% | Yes |

| Substrate Concentration | mol/L | ± 0.001 mol/L | Yes |

| Solvent Volume | mL | ± 0.01 mL | Yes |

Table 2: Core Analytical Data Output Standards

| Analytical Method | Primary Metric | Required Control Data | Minimum Metadata |

|---|---|---|---|

| HPLC/UPLC | Area % or Concentration | Blank run, Standard curve | Column, Gradient, Detector λ |

| GC-FID/TCD | Area % | Internal standard (e.g., n-dodecane) | Column, Oven program, Injector temp |

| NMR (qNMR) | Mol % | Certified internal standard (e.g., 1,3,5-TMOB) | Field strength, Solvent, Pulse sequence |

| LC-MS/GC-MS | m/z, Retention Time | Tuning/calibration report | Ionization mode, Scan range |

Detailed Experimental Protocols for Key Catalytic Tests

Protocol: Standardized Catalytic Hydrogenation Reaction

This protocol exemplifies the application of the above templates for a high-frequency test reaction.

Objective: To evaluate catalyst performance for the hydrogenation of a model substrate (e.g., acetophenone to 1-phenylethanol) under controlled conditions.

I. Pre-Reaction Setup & Data Capture (Reaction & Catalyst Templates)

- Catalyst Weighing: In an inert atmosphere glovebox, weigh the catalyst (e.g., 2.5 mg, 0.005 mmol, 0.5 mol%) into a dry 10 mL pressure vial. Record exact mass (± 0.01 mg), catalyst ID (from Catalyst Master Record), and batch number.

- Substrate/Solvent Addition: Using a calibrated micropipette, add acetophenone (122 µL, 1.0 mmol) and anhydrous methanol (2.5 mL) to the vial. Record lot numbers and volumes/masses.

- Sealing: Cap the vial with a PTFE-lined septum and remove from the glovebox.

II. Reaction Execution

- Purge & Pressurization: Connect the vial to a manifold. Purge the headspace with

H_2gas (3 cycles of vacuum andH_2refill). Pressurize to 5.0 barH_2absolute pressure. - Initiation: Place the vial in a pre-heated metal alloy block at 30.0 °C with magnetic stirring (1200 rpm). Record this as time = 0.

- Monitoring: Monitor pressure drop qualitatively. Reaction time: 2 hours.

III. Quenching & Sampling (Linking to Analytical Template)

- After 2 hours, depressurize carefully.

- Immediately withdraw a 100 µL aliquot using a gas-tight syringe.

- Dilute the aliquot with 900 µL of dichloromethane containing a known concentration of an internal standard (e.g., n-tetradecane, 0.01 M). This creates the Analytical Sample ID.

IV. Analytical Procedure: GC-FID Analysis

- Instrument: Agilent 8890 GC with FID.

- Column: Agilent HP-5 (30 m x 0.32 mm x 0.25 µm).

- Method:

- Injector: 250 °C, split mode (50:1).

- Oven: 50 °C hold 2 min, ramp 20 °C/min to 250 °C, hold 5 min.

- Carrier: He, constant flow 1.5 mL/min.

- FID: 300 °C.

- Quantification: Process using a 5-point calibration curve of acetophenone and 1-phenylethanol against the internal standard.

- Data Output: Calculate and record conversion (%) and selectivity to 1-phenylethanol (%).

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials for Standardized Catalysis Testing

| Item | Function & Specification | Critical Quality Attribute |

|---|---|---|

| Anhydrous Solvents (e.g., MeOH, THF, Toluene) | Reaction medium; must not contain impurities that deactivate catalysts. | Water content < 50 ppm (by Karl Fischer), packaged under N_2 in Sure/Seal bottles. |

| Certified Substrates & Standards | Provide reproducible reaction starting points and analytical calibration. | Purity > 98% (HPLC/NMR), lot-specific certificate of analysis, stored as recommended. |

| Internal Standards (e.g., n-Dodecane, 1,3,5-TMOB) | Enable precise quantitative analysis by GC or qNMR. | High chemical and isotopic purity, inert under analysis conditions. |

| Catalyst Precursors | Well-defined metal complexes or salts for in-situ or pre-formed catalysis. | Known molecular structure, stored under inert atmosphere, exact molecular weight provided. |

| High-Pressure Reaction Vials | Safe containment of reactions under pressure. | Chemically resistant (glass), rated for pressure > 10 bar, with secure PTFE/silicone septa. |

| Calibrated Gas Manifold | Precise delivery and monitoring of reactive gases (H_2, CO_2, CO). |

Accurate pressure transducers (± 0.1 bar), leak-free valves, equipped with vents and traps. |

| Microbalance | Accurate weighing of catalysts, especially for low loadings. | Precision of ± 0.01 mg, with calibration certificate, in draft-free environment. |

In the context of CatTestHub’s mission to implement FAIR (Findable, Accessible, Interoperable, and Reusable) data principles in catalysis research, the creation of rich, structured metadata is not an administrative afterthought but a foundational scientific activity. This technical guide outlines the critical components and methodologies for crafting machine-readable descriptors for every experimental procedure, ensuring data longevity, reproducibility, and computational utility.

Core Components of a FAIR-Compliant Experimental Metadata Schema

A comprehensive metadata schema for a catalysis experiment must encompass several layers of description. The following table summarizes the essential qualitative and quantitative components.

Table 1: Core Metadata Components for a Catalysis Experiment

| Metadata Category | Sub-category | Description & Requirement | Data Type / Standard |

|---|---|---|---|

| Administrative | Unique Identifier | Persistent, globally unique ID (e.g., DOI, UUID). | String (e.g., ark:/57799/b9jqsf) |

| Contributor & Affiliation | Principal investigator, experimenters, institution. | ORCID, ROR ID | |

| Date & Version | Experiment date, metadata creation date, version. | ISO 8601 | |

| Experimental Context | Project Aim | Hypothesis or research question being tested. | Free text (structured abstract) |

| Protocol Reference | Link to or ID of standard operating procedure. | Protocol DOI, URI | |

| Sample Description | Catalyst | Precise identity, synthesis method, characterization data (e.g., XRD, BET). | InChI, CHEBI, custom ontology |

| Reactants/Substrates | Chemical identity, purity, supplier. | InChI, CAS RN, SMILES | |

| Conditions & Parameters | Reactor Type | Fixed-bed, batch, flow, etc. | Controlled vocabulary |

| Measured Variables | Temperature, pressure, flow rates, time. | Unitful values (SI preferred) | |

| Instrumentation & Data | Analytical Techniques | GC-MS, NMR, HPLC, etc., with instrument model. | OBI, CHMO ontology |

| Raw Data Link | Persistent link to raw instrument files. | URI (e.g., to repository) | |

| Results & Analysis | Derived Data | Key outcomes: conversion, yield, selectivity, TON, TOF. | Number with unit & uncertainty |

| Processed Data Link | Link to cleaned/analyzed datasets (e.g., Jupyter notebook). | URI | |

| Provenance | Processing Steps | Sequence of actions from raw data to results. | PROV-O, W3C |

Detailed Methodology: Implementing a Metadata Capture Protocol

Pre-Experiment: The Electronic Lab Notebook (ELN) Template

Objective: To ensure consistent, structured data entry at the point of experimentation. Protocol:

- Template Design: Within the institutional ELN (e.g., LabArchives, RSpace), create a project-specific template that mirrors the schema in Table 1.

- Controlled Vocabularies: Implement dropdown menus for fields like "Reactor Type" and "Analytical Technique" using terms from community ontologies (e.g., CHMO).

- Mandatory Fields: Designate fields for Unique Identifier, Catalyst Identifier, and core conditions as mandatory.

- Instrument Integration: Configure ELN to capture instrument metadata automatically via instrument data APIs where possible.

During Experiment: Automated and Manual Logging

Objective: To capture dynamic experimental parameters and observations. Protocol:

- Digital Logging: Connect reactor control systems and sensors (e.g., mass flow controllers, thermocouples) to a data acquisition system. Log time-series data with synchronized timestamps.

- Manual Annotations: Record observations (e.g., color change, precipitation) directly in the ELN template at pre-defined time points or events.

- Sample Tracking: Use barcodes or QR codes for vials and samples. Link each physical sample to its digital metadata record by scanning the code before and after analysis.

Post-Experiment: Data Curation and Repository Submission

Objective: To package and deposit the experiment as a FAIR digital object. Protocol:

- Data Consolidation: Aggregate all digital assets: ELN entry, raw instrument files, processed data scripts, and output figures.

- Metadata Validation: Run a validation script (e.g., using JSON Schema) to check for completeness and adherence to the CatTestHub schema.

- Repository Deposit: Use the API of a designated repository (e.g., Zenodo, institutional repository) to create a new deposit. Upload all files and embed the validated metadata in the required format (e.g., DataCite JSON, RDF).

- Identifier Assignment: Upon publication, register the dataset to obtain a persistent identifier (DOI). This DOI must be inserted back into the originating ELN record.

Visualizing the Metadata Ecosystem and Workflow

The following diagrams illustrate the logical flow of metadata creation and its role within the experimental data lifecycle.

Title: The Experimental Metadata Creation Lifecycle

Title: The Metadata Ecosystem: Sources and Consumers

The Scientist's Toolkit: Essential Reagents and Solutions for Metadata Implementation

Table 2: Research Reagent Solutions for FAIR Metadata Implementation

| Tool / Resource | Category | Primary Function | Key Benefit for FAIRness |

|---|---|---|---|

| Electronic Lab Notebook (e.g., LabArchives, RSpace) | Software Platform | Provides structured digital templates for experimental documentation. | Ensures consistent, complete, and digitally-native metadata capture at the source. |

| Persistent Identifier Service (e.g., DataCite, Crossref) | Infrastructure | Mints unique, persistent identifiers (DOIs) for datasets. | Makes data Findable and citable, providing a stable link for access. |

| Metadata Schema Validator (e.g., JSON Schema, SHACL) | Validation Tool | Checks metadata files for required fields and correct formatting. | Ensures Interoperability by guaranteeing adherence to a defined standard. |

| Domain Ontologies (e.g., CHEBI, CHMO, RxNO) | Semantic Standard | Provide standardized vocabularies for chemicals, reactions, and instruments. | Enables Interoperable and machine-reasoning by using common, defined terms. |

| Research Data Repository (e.g., Zenodo, Figshare, institutional repo) | Publication Platform | Hosts datasets, metadata, and assigns persistent identifiers. | Makes data Accessible and Reusable by providing a trusted, public location. |

| Provenance Tracking Tool (e.g, W3C PROV-O, YesWorkflow) | Documentation Standard | Models the lineage of data from raw files to final results. | Ensures Reusability by providing clear context on how results were generated. |

Within the CatTestHub FAIR data ecosystem for catalysis research, achieving true interoperability requires unambiguous identification of chemical entities. Persistent Identifiers (PIDs) and ontologies provide the semantic bedrock, ensuring that data and metadata are machine-actionable across disparate platforms. This guide details the technical implementation of three cornerstone systems—InChIKeys, ChEBI, and RxNorm—to create a robust, interoperable data infrastructure for catalysis and drug development.

InChIKey: The Structural Fingerprint

The International Chemical Identifier (InChI) is an IUPAC standard for representing chemical structures. The InChIKey is a fixed-length (27-character), hashed version of the full InChI string, designed for database indexing and web searches. It consists of two layers: the first 14 characters (the connectivity layer, MMMMMMMRRSSSS) and the second 13 characters (the stereochemical and isotopic layer, PP...VVV), separated by a hyphen.

Experimental Protocol for Generating and Validating InChIKeys:

- Input Preparation: Prepare a canonical molecular representation (e.g., SMILES, MOL file) of the chemical entity.

- InChI Generation: Use the official InChI software (

chem.inchi) or a trusted API (e.g., NIH CACTUS, PubChem) to generate the full InChI string from the input. - Key Derivation: The software automatically computes the SHA-256 hash of the InChI string and encodes it into the 27-character InChIKey.

- Validation: Verify the key's correctness by cross-referencing it against a trusted public database (e.g., PubChem, ChemSpider) using a structural search. Ensure both the standard InChIKey and any possible "non-standard" keys (for tautomers or mesomers) are considered.

ChEBI: Chemical Entities of Biological Interest

ChEBI is an open, manually curated ontology of molecular entities focused on 'small' chemical compounds. It provides stable identifiers (e.g., CHEBI:15377 for acetic acid), systematic nomenclature, and a rich hierarchy of isa and relationship (e.g., hasrole, isconjugateacid_of) annotations.

Experimental Protocol for Annotating Catalytic Systems with ChEBI:

- Entity Identification: List all distinct molecular entities in the experimental dataset (e.g., catalyst, substrate, solvent, product).

- ChEBI Search: For each entity, query the ChEBI database (via web interface or EBI's REST API) using preferred name, synonym, or structural descriptors (InChIKey is optimal).

- Term Selection: From the results, select the most specific ChEBI term that accurately describes the entity's role in the catalytic context (e.g.,

catalyst (CHEBI:35223),aprotic solvent (CHEBI:48355)). - Annotation Storage: Store the ChEBI ID and recommended name as linked metadata alongside the experimental data record.

RxNorm: Normalized Clinical Drug Vocabulary

RxNorm, maintained by the U.S. National Library of Medicine, provides normalized names and unique identifiers (RxCUIs) for clinical drugs and their components (active ingredients, dose forms, strengths). It is critical for bridging catalysis research on drug synthesis with pharmacological and clinical data.

Experimental Protocol for Mapping Drug-like Molecules to RxNorm:

- Ingredient Focus: Identify the active pharmaceutical ingredient (API) in a drug target or synthesized compound.

- API Mapping: Use the InChIKey or systematic name of the API to search the RxNorm API (

/rxcuiendpoint) or the UMLS Metathesaurus. - Contextual Association: Retrieve the RxCUI for the specific ingredient (e.g.,

metformin (RxCUI:6809)). For formulated drugs, additional RxCUIs for branded or dose-form-specific concepts can be linked. - Integration: Embed the RxCUI within the compound's metadata to enable cross-walking to resources like DrugBank or clinical databases.

Data Tables: Comparative Analysis

Table 1: Core Characteristics of Featured PID and Ontology Systems

| Feature | InChIKey | ChEBI | RxNorm |

|---|---|---|---|

| Primary Scope | Unique structural descriptor for any chemical compound. | Ontology of small molecular entities & their biological roles. | Normalized names for clinical drugs & their components. |

| Identifier Format | 27-character hash (e.g., QTBSBXVTEAMEQO-UHFFFAOYSA-N). |

Integer prefixed by "CHEBI:" (e.g., CHEBI:15377). |

Integer RxCUI (e.g., 6809). |

| Authority | IUPAC, NIST. | European Bioinformatics Institute (EBI). | U.S. National Library of Medicine (NLM). |

| Key Strength | Structure-based, deterministic, enables precise structure search. | Rich semantic relationships & role-based classification. | Links drug ingredients to brand names, formulations, and clinical data. |

| Typical Use Case in Catalysis | Uniquely identifying catalyst, ligand, substrate, and product structures. | Annotating the functional role (e.g., catalyst, cofactor, inhibitor) of a chemical in a reaction. | Linking a synthesized drug candidate or intermediate to established clinical drug vocabularies. |

Table 2: Quantitative Impact of PID Adoption on Data Integration Efficiency

| Metric | Before PID Implementation (Hypothetical) | After PID Implementation (Hypothetical) | Measurement Method |

|---|---|---|---|

| Time to Link Catalyst to Biological Activity Data | 2-3 hours (manual literature/db search) | <5 minutes (automated query via InChIKey/ChEBI ID) | Average time recorded for 10 sample compounds. |

| Cross-Platform Dataset Merge Success Rate | ~60% (high error from synonym mismatch) | >98% (key-based exact match) | Percentage of successfully merged records from two synthetic chemistry databases. |

| Machine-Actionable Metadata Completeness | ~30% of records | ~95% of records | Audit of 1000 data records for structured ontology annotations. |

Interoperability Workflow: From Catalyst to Clinical Relevance

(Diagram Title: PID Integration Workflow for Catalysis Data)

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Resources for PID Implementation

| Item/Category | Function & Relevance to FAIR Catalysis Data |

|---|---|

| InChI Software Suite | Command-line tools and libraries (chem.inchi) to generate and validate InChI/InChIKeys from structural files. Essential for local PID creation. |

| PubChem REST API | Provides authoritative InChIKeys and cross-references for millions of compounds. Used for validation and bulk PID retrieval. |

| ChEBI Search API (EBI) | Programmatic access to query and retrieve ChEBI IDs, names, and ontology relationships for automated annotation pipelines. |

| RxNorm API (NLM UMLS) | Enables mapping of drug ingredients and formulations to RxCUIs, bridging chemical synthesis with pharmacology. |

| RDKit or Open Babel | Open-source cheminformatics toolkits. Facilitate structure manipulation, format conversion, and integration of PID generation into workflows. |

| FAIR Data Management Platform | A local or institutional platform (e.g., based on CKAN, Dataverse) configured to accept and index PIDs as first-class metadata fields. |

| Ontology Management Tool (e.g., Protégé) | For advanced users to model and extend local experimental ontologies that link to core ontologies like ChEBI. |

This whitepaper, situated within a broader thesis on the implementation of FAIR (Findable, Accessible, Interoperable, Reusable) principles for catalysis research via CatTestHub, provides a comprehensive technical guide for Step 4: Data Upload and Curation. It details best practices for preparing, submitting, and linking experimental datasets to ensure maximal utility and compliance within a federated data ecosystem for drug development professionals and researchers.

Modern catalysis research, particularly in pharmaceutical development, generates complex, multi-dimensional datasets. The CatTestHub framework mandates that all submitted data adhere to FAIR principles to accelerate discovery through data reuse and meta-analysis. This step is critical for transforming isolated experimental results into a community resource.

Pre-Submission Data Curation Workflow

Effective submission begins with rigorous local curation. The following workflow must be completed prior to repository upload.

Diagram Title: Pre-submission Data Curation Workflow

Mandatory Metadata and Quantitative Data Tables

All submissions must include a machine-readable metadata file (JSON-LD recommended) and structured quantitative data. Below are the core required metadata fields and an example table for catalytic performance data.

Table 1: Core Submission Metadata Schema

| Field Name | Data Type | Description | Example | Required |

|---|---|---|---|---|

experiment_id |

String | Unique, persistent identifier. | CTH-CAT-2023-0147 |

Yes |

submission_date |

Date (ISO 8601) | Date of upload. | 2023-11-27 |

Yes |

catalyst_smiles |

String | Canonical SMILES for the catalyst. | CC[Pd]Cl |

Yes |

substrate_smiles |

String | Canonical SMILES for the primary substrate. | C1=CC=CC=C1Br |

Yes |

reaction_type |

Controlled Vocabulary | Type of catalytic reaction. | Cross-Coupling |

Yes |

faradaic_efficiency |

Float (%) | For electrocatalysis: efficiency of charge use. | 87.5 |

Conditional |

turnover_number |

Integer | Mole product per mole catalyst. | 12500 |

Yes |

selectivity |

Float (%) | Percentage of desired product. | 99.2 |

Yes |

data_license |

String | License for data reuse (e.g., CCO, BY 4.0). | CC0 1.0 |

Yes |

Table 2: Example Catalytic Performance Data Table

| Catalyst_ID | Temperature (°C) | Pressure (bar) | Time (h) | Conversion (%) | Yield (%) | Selectivity (%) | TON | TOF (h⁻¹) |

|---|---|---|---|---|---|---|---|---|

| Pd/C-1 | 80 | 1 | 2 | 99.5 | 98.7 | 99.2 | 9870 | 4935 |

| Pd@NP-Au | 70 | 1.5 | 1.5 | 95.2 | 94.1 | 98.8 | 9410 | 6273 |

| [Ru]-Complex-7 | 120 | 5 | 6 | 88.4 | 85.0 | 96.2 | 8500 | 1417 |

Detailed Experimental Protocol for Cited Catalysis Data

The following generalized protocol is representative of the high-throughput catalysis experiments expected in CatTestHub submissions.

Protocol: High-Throughput Screening of Homogeneous Catalysts for C-N Cross-Coupling

1. Reagent Preparation:

- Under an inert nitrogen atmosphere, prepare stock solutions of the catalyst precursor (1.0 mM in anhydrous THF), substrate (aryl halide, 100 mM in THF), and nucleophile (amine, 150 mM in THF).

- Dispense 100 µL of substrate stock solution into each well of a 96-well glass-lined reaction plate.

2. Reaction Initiation:

- Using an automated liquid handler, add 10 µL of catalyst stock solution to each well.

- Add 100 µL of nucleophile stock solution.

- Add 10 µL of a base stock solution (e.g., Cs2CO3, 1.0 M in H2O).

- Seal the plate with a PTFE-coated silicone mat.

3. Reaction Execution:

- Place the reaction plate on a pre-heated orbital shaker/heater block.

- Agitate at 800 rpm for the specified reaction time (e.g., 2-18 hours) at the target temperature (e.g., 80°C).

4. Quenching and Analysis:

- After the reaction time, remove the plate and allow it to cool to room temperature.

- Quench each well with 200 µL of a 1:1 v/v mixture of acetonitrile and aqueous EDTA solution (10 mM).

- Centrifuge the plate at 3000 rpm for 5 minutes to sediment solids.

- Analyze the supernatant via UPLC-MS using a calibrated external standard curve to determine conversion, yield, and selectivity. Report averages of triplicate runs.

Repository Linking and Persistent Identifiers

To fulfill the "Linked" aspect of FAIR, data must be connected to other resources using persistent identifiers (PIDs).

Diagram Title: PID Linking for FAIR Catalysis Data

The Scientist's Toolkit: Research Reagent Solutions

Essential materials and digital tools required for preparing a CatTestHub-compliant submission.

Table 3: Essential Research Reagent Solutions & Tools

| Item | Function / Purpose | Example Vendor/Resource |

|---|---|---|

| Anhydrous Solvents | Ensure reproducibility by controlling water content in sensitive organometallic catalysis. | Sigma-Aldrich (Sure/Seal bottles), Acros Organics. |

| Certified Reference Standards | For accurate quantification in chromatographic analysis (UPLC/HPLC). | RESTEK, Agilent Technologies. |

| High-Throughput Reaction Platform | Automated liquid handling and parallel reaction execution for screening. | Unchained Labs Big Kahuna, Chemspeed Technologies SWING. |

| Electronic Lab Notebook (ELN) | Structured digital recording of protocols and parameters for metadata extraction. | LabArchives, RSpace, Benchling. |

| SMILES Generator / Validator | Generate canonical chemical identifiers for metadata fields. | RDKit (Open Source), ChemDraw. |

| Metadata Schema Validator | Validate JSON-LD metadata against CatTestHub's schema before submission. | CatTestHub provided JSON Schema tool. |

| Persistent Identifier (PID) Service | Mint DOIs for datasets and link to other PIDs (RRID, ChEBI). | DataCite, SciCrunch Registry. |

Validation and Quality Control Checklist

Prior to final upload, run through this automated checklist:

- All required metadata fields populated.

- Quantitative data in structured table format (CSV, TSV).

- All chemical structures represented as canonical SMILES.

- Units clearly defined for all numerical values.

- Experimental protocol includes critical parameters (atmosphere, temperature, time, agitation).

- A human-readable

README.txtfile describes file contents and relationships. - Dataset is assigned a unique, citable DOI.

- Links to related resources (publications, code, reagent IDs) are provided in metadata.

Within the CatTestHub FAIR data framework, "Accessible" data (the "A" in FAIR) requires that data be retrievable by their identifiers using a standardized communications protocol. This step goes beyond technical access to address the legal and operational frameworks—licensing and usage rights—that enable both human and machine actionable reuse of catalysis data. For researchers, scientists, and drug development professionals, clear protocols are essential to foster collaboration, ensure reproducibility, and accelerate innovation while respecting intellectual property.

Foundational Licensing Models for Catalysis Data

Selecting an appropriate license is critical for defining how shared catalysis data can be used, modified, and redistributed. The choice balances openness with protection of rights.

Core License Types and Their FAIR Alignment

| License Type | Key Provisions | Best Suited for CatTestHub Data Type | FAIR Principle Alignment |

|---|---|---|---|

| Creative Commons Zero (CC0) | Waives all rights; places work in public domain. | High-throughput screening data, benchmark datasets. | Maximizes Reusability; unambiguous access. |

| Creative Commons Attribution (CC-BY) | Allows any use with mandatory citation. | Published experimental datasets, mechanistic studies. | Supports Findability via citation; promotes Reuse. |

| Creative Commons Non-Commercial (CC-BY-NC) | Allows remix, adapt, build upon non-commercially. | Pre-competitive research data, academic collaborations. | May limit Reusability in industrial contexts. |

| Open Data Commons Open Database License (ODbL) | Allows share, adapt, create; requires "share-alike". | Curated catalysis databases, community resources. | Ensures derivative databases remain Accessible. |

| Custom Institutional License | Tailored terms (e.g., non-redistribution, field-of-use). | Proprietary catalyst performance data, pending patents. | Must be carefully crafted to maintain Accessibility. |

Quantitative Analysis of License Adoption in Scientific Repositories

A survey of major data repositories (2020-2023) reveals trends in license selection for chemistry-related data.

| Repository | Total Chemistry Datasets Sampled | CC0 (%) | CC-BY (%) | Custom/Restrictive (%) | No Explicit License (%) |

|---|---|---|---|---|---|

| Zenodo | 45,200 | 58 | 32 | 5 | 5 |

| figshare | 28,500 | 52 | 35 | 8 | 5 |

| ICSD (FIZ Karlsruhe) | 18,000 | 0 | 0 | 100 (Subscription) | 0 |

| Chemotion Repository | 7,150 | 25 | 60 | 10 | 5 |

| NOMAD Repository | 5,800 | 70 | 20 | 5 | 5 |

Data sourced from repository public metadata aggregations and annual reports.

Experimental Protocol: Implementing a License Selection and Attachment Workflow

This protocol details a method for consistently assigning licenses to experimental catalysis data within a research group prior to deposition in CatTestHub.

Materials and Reagents

- Digital Data Management Plan (DMP) Template: A pre-project document outlining intended data types and sharing goals.

- License Decision Matrix: A flowchart or checklist aligning project factors (funding source, IP landscape) with license options.

- Metadata Standard Schema (e.g., CML, ISA-TAB): Structured format to embed license information.

- Repository Submission API Keys: For automated deposition to chosen repositories (e.g., Zenodo, institutional CatTestHub node).

Procedure

Pre-Experiment Assignment:

- Prior to data generation, consult the project DMP and the License Decision Matrix (see Diagram 1).

- Obtain consensus from all project PIs on the provisional license based on project aims and collaboration agreements.

- Document this provisional license in the project's electronic lab notebook (ELN) header.

Data Packaging with License Metadata:

- Upon completion of a dataset (e.g., catalyst activity data for a specific reaction), finalize the data package.

- Create a

license.txtorLICENSE.mdfile in the dataset's root directory. Paste the full plain text of the chosen license (e.g., CC-BY 4.0) into this file. - Within the master metadata file (e.g.,

metadata.xml), insert the license URI (e.g.,https://creativecommons.org/licenses/by/4.0/) in the designated<license>field.

Pre-Deposit Verification:

- Run an automated check using a script to validate that all required files are present and the license URI is resolvable.

- Example validation command (Python pseudo-code):

Repository Deposition:

- Use the repository's API or web interface to upload the data package.

- In the repository's submission form, select the license from the provided menu that corresponds to your

license.txtfile. This creates a dual-layer assertion of rights. - The repository will mint a persistent identifier (e.g., DOI), which now permanently associates the dataset with its usage rights.

The Scientist's Toolkit: Research Reagent Solutions for Data Licensing

| Item | Function in Licensing & Access Protocol |

|---|---|

| SPDX License Identifier | A standardized short-form string (e.g., CC-BY-4.0) for machine-readable license identification in software and data packages. |

| RO-Crate Metadata Suite | A structured method to package research data with their metadata, including clear licensing information, enhancing FAIRness. |

| Choose a License (choosealicense.com) | A straightforward web resource that explains licenses in plain language, aiding non-legal researchers in selection. |

| OASIS License Compatibility Tool | For complex projects combining multiple licensed datasets, this tool helps assess if licenses are compatible for derivative works. |

| Institutional Technology Transfer Office (TTO) Contract Template | Provides a pre-vetted template for crafting custom data use agreements for sensitive or proprietary catalyst data. |

Visualization of Licensing Decision Pathways and Workflows

Title: Decision Workflow for Selecting a Catalysis Data License

Title: Technical Protocol for Attaching a License to a Dataset

Overcoming Common FAIR Data Hurdles in Catalysis: Troubleshooting and Pro Tips

Within the CatTestHub FAIR data principles for catalysis research, metadata serves as the critical linchpin ensuring data are Findable, Accessible, Interoperable, and Reusable. Incomplete or inconsistent metadata directly undermines these principles, leading to irreproducible results, failed data integration, and significant scientific resource waste. This technical guide details systematic solutions for identifying, rectifying, and preventing metadata challenges, providing actionable checklists for researchers and data stewards.

The Impact of Poor Metadata: Quantitative Evidence

A review of recent literature and data repository audits highlights the prevalence and cost of metadata issues in chemical and catalysis research.

Table 1: Prevalence and Impact of Metadata Issues in Scientific Data Repositories

| Repository / Study Focus | Data Audit Period | % of Records with Incomplete Metadata | % of Records with Inconsistent Terminology | Estimated Time Loss per Project Due to Remediation |

|---|---|---|---|---|

| Generalist Repository (e.g., Zenodo) Sample | 2020-2023 | 45% | 30% | 40-60 person-hours |

| Domain-Specific (Catalysis) Database | 2018-2022 | 60% | 50% | 80-120 person-hours |

| Pharmaceutical R&D Internal Audit | 2021-2023 | 35% | 25% | 100-150 person-hours |

Solutions Framework: A Tiered Approach

Prevention: Implementing Metadata Standards at Point of Creation

The most effective solution is to prevent issues at the data generation stage by enforcing standardized templates and controlled vocabularies.

Experimental Protocol: Implementing an Electronic Lab Notebook (ELN) Template for Catalytic Reaction Data

- Objective: To ensure consistent, machine-actionable metadata capture for every catalytic experiment.

- Materials: An ELN system (e.g., LabArchives, RSpace, Benchling) configured with a custom template.

- Procedure:

- Template Design: Create a required-field template within the ELN. Mandatory sections include:

- Project Identifier: Linked to internal grant/project code.

- Experiment ID: Auto-generated unique identifier.

- Researcher: Name and ORCID.

- Date & Time: Auto-captured.

- Objective: Free-text hypothesis.

- Catalyst: Structured fields for chemical name (linking to internal inventory ID), SMILES string, amount (mg, mmol), and role (e.g., homogeneous, heterogeneous).

- Reactants/Solvents: Structured table with name, CAS number, purity, supplier, lot number, amount.

- Reaction Conditions: Pressure (bar), temperature (°C), time (h), atmosphere (e.g., N2, O2).

- Analytical Method Metadata: For each technique (e.g., GC-MS, NMR), document instrument ID, method file name, and key parameters (e.g., column type, acquisition time).

- Raw Data Files: Direct upload and linking of instrument output files.