Community Benchmarking Standards for Catalytic Performance: Best Practices for Reproducible Research and Accelerated Discovery

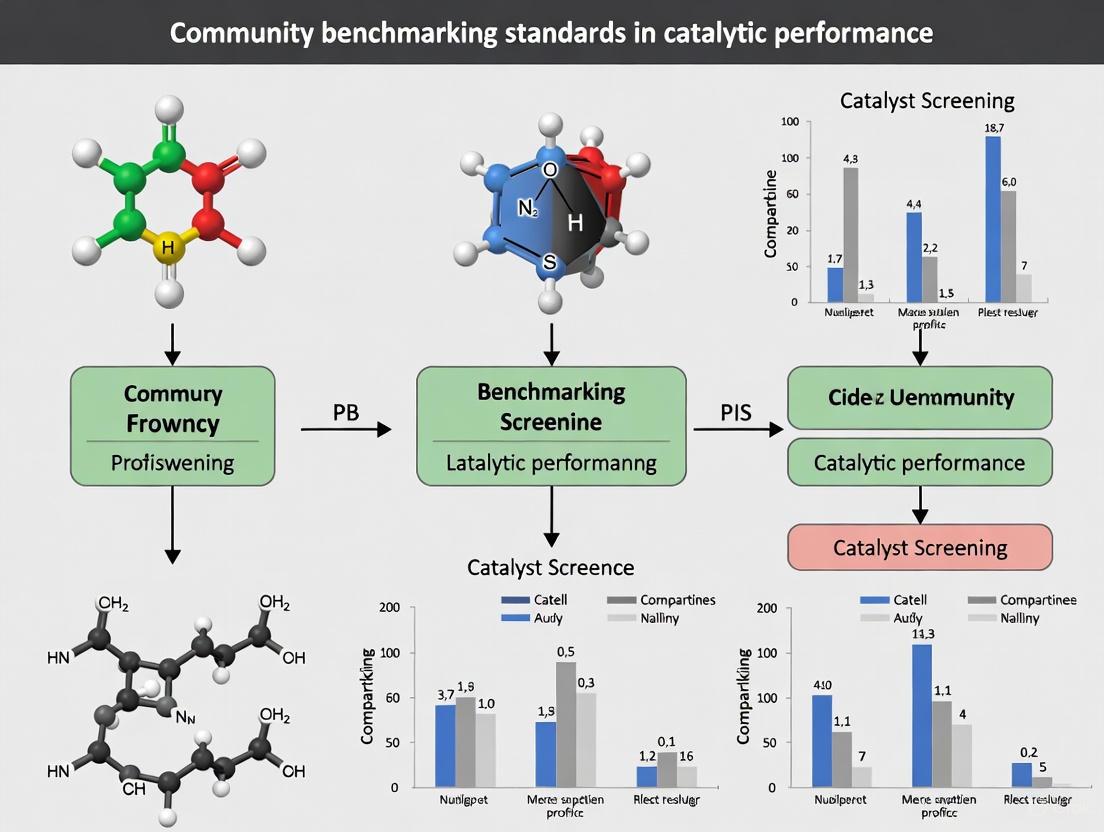


Abstract

This article provides a comprehensive framework for implementing community benchmarking standards in catalytic performance evaluation, addressing critical needs across biomedical and chemical research. It explores the fundamental importance of standardized metrics and protocols for ensuring reproducible, comparable results in catalyst development. The content covers practical methodologies for cross-study data integration, advanced computational approaches including AI-driven platforms, and robust statistical validation techniques. By addressing common challenges in data inconsistency and establishing best practices for performance comparison, this guide empowers researchers to accelerate catalyst discovery and optimization through reliable, community-verified benchmarking standards.

The Critical Foundation: Understanding Catalytic Benchmarking Principles and Community Standards

In catalysis research, defining state-of-the-art performance remains challenging due to variability in reported data across studies. Benchmarking provides a solution by creating external standards for evaluating catalytic performance, enabling meaningful comparisons between new catalytic materials and established references. As catalysis science evolves with advanced materials and novel energetic stimuli, the community requires consistent frameworks to verify that newly reported catalytic activities genuinely outperform existing systems [1]. This guide examines how community consensus drives the development of standardized assessment protocols that ensure fair, reproducible, and relevant evaluation of catalyst performance metrics including activity, selectivity, and deactivation profiles [2].

The fundamental challenge stems from differences in how catalytic activity is assessed across laboratories worldwide. Without standardized reference materials, reaction conditions, and reporting formats, comparing catalytic rates becomes problematic. As contemporary catalysis research embraces data-centric approaches, well-curated experimental datasets become as important as computational data for understanding catalytic trends [1]. This article explores the transition from isolated catalyst evaluation to community-driven benchmarking initiatives that provide foundational standards for the field.

Theoretical Foundations: Principles of Community Benchmarking

Benchmarking in catalysis science represents a community-based and preferably community-driven activity involving consensus-based decisions on reproducible, fair, and relevant assessments [2]. This approach extends beyond simple performance comparisons to encompass careful documentation, archiving, and sharing of methods and measurements. The theoretical framework for catalytic benchmarking incorporates several foundational principles that ensure its effectiveness and adoption across the research community.

The concept of benchmarking dates back centuries and has evolved with specifics varying by field, but consistently represents the evaluation of a quantifiable observable against an external standard [1]. In heterogeneous catalysis, benchmarking comparisons can take multiple forms: determining if newly synthesized catalysts outperform predecessors, verifying that reported turnover rates lack corrupting influences like diffusional limitations, or validating that applied energy sources genuinely accelerate catalytic cycles. Unlike fields with natural benchmarks, catalysis benchmarks are best established through open-access community-based measurements that generate consensus around reference materials and protocols [1].

Effective benchmarking requires balancing multiple performance criteria against practical considerations. Optimal catalysts must balance activity, selectivity, and stability with sustainability factors including abundance, affordability, recoverability, and safety [3]. The complexity of catalyst evaluation lies not only in meeting these diverse requirements but in identifying combinations of catalyst properties and reaction conditions that yield desirable performance. This necessitates multidimensional screening where composition, structure, loading, temperature, solvent, and other variables must be simultaneously explored [3].

Practical Implementation: Catalytic Benchmarking Databases & Platforms

CatTestHub: A FAIR Database Architecture

The CatTestHub database represents an implementation of benchmarking principles specifically designed for heterogeneous catalysis. This open-access platform addresses previous limitations in catalytic data comparison by housing systematically reported activity data for selected probe chemistries alongside material characterization and reactor configuration information [1] [4]. The database architecture was informed by the FAIR principles (Findability, Accessibility, Interoperability, and Reusability), ensuring relevance to the heterogeneous catalysis community [1].

CatTestHub employs a spreadsheet-based format that balances fundamental information needs with practical accessibility. This structure curates key reaction condition information required for reproducing experimental measurements while providing details of reactor configurations. To contextualize macroscopic catalytic activity measurements at the nanoscopic scale of active sites, structural characterization accompanies each catalyst material [1]. The database incorporates metadata to provide context and uses unique identifiers including digital object identifiers (DOI), ORCID, and funding acknowledgements to ensure accountability and traceability [1].

In its current iteration, CatTestHub spans over 250 unique experimental data points collected across 24 solid catalysts facilitating the turnover of 3 distinct catalytic chemistries [4]. The platform currently hosts metal and solid acid catalysts, using decomposition of methanol and formic acid as benchmarking chemistries for metals, and Hofmann elimination of alkylamines over aluminosilicate zeolites for solid acids [1]. This curated approach provides a collection of catalytic benchmarks for distinct classes of active site functionality, enabling more meaningful comparisons between catalyst categories.

High-Throughput Scoring Models

Complementing database approaches, automated scoring models represent another practical implementation of catalytic benchmarking. Recent research demonstrates high-throughput experimentation (HTE) combined with catalyst informatics as a powerful strategy for multidimensional catalyst evaluation [3]. One developed system utilizes real-time optical scanning to assess catalyst performance in nitro-to-amine reduction, monitoring reaction progress via well-plate readers that track fluorescence changes as non-fluorescent nitro-moieties reduce to amine forms [3].

This approach screened 114 different catalysts, comparing them across multiple parameters including reaction completion times, material abundance, price, recoverability, and safety [3]. Using a simple scoring system, researchers plotted catalysts according to cumulative scores while incorporating intentional biases such as a preference for environmentally sustainable catalysts. This methodology highlights how benchmarking can extend beyond simple activity measurements to encompass broader sustainability considerations that reflect real-world application requirements.
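A cumulative scoring scheme of this kind can be sketched in a few lines. The example below is a minimal illustration only: the 0-5 scale, the catalyst names, the individual scores, and the weights are all hypothetical and are not taken from the cited study [3]. It shows how an intentional bias (here, doubling the weight of sustainability-related criteria) can change a ranking.

```python
from dataclasses import dataclass

# Hypothetical criteria, each scored from 0 (worst) to 5 (best).
CRITERIA = ("completion_time", "abundance", "price", "recoverability", "safety")


@dataclass
class CatalystScores:
    name: str
    completion_time: int
    abundance: int
    price: int
    recoverability: int
    safety: int


def cumulative_score(catalyst, weights=None):
    """Sum the criterion scores; criteria missing from `weights` get weight 1."""
    weights = weights or {}
    return sum(weights.get(c, 1.0) * getattr(catalyst, c) for c in CRITERIA)


# Illustrative entries only (not real catalysts or measured values).
catalysts = [
    CatalystScores("cat_A", completion_time=5, abundance=2, price=4, recoverability=2, safety=3),
    CatalystScores("cat_B", completion_time=3, abundance=4, price=2, recoverability=4, safety=2),
]

# Unbiased ranking: every criterion counts equally.
unbiased = sorted(catalysts, key=cumulative_score, reverse=True)

# Intentional bias toward sustainability-related criteria (assumed weights).
green_bias = {"abundance": 2.0, "recoverability": 2.0, "safety": 2.0}
biased = sorted(catalysts, key=lambda c: cumulative_score(c, green_bias), reverse=True)

print([c.name for c in unbiased])
print([c.name for c in biased])
```

Keeping the weights in a separate dictionary makes the bias explicit and easy to report alongside the ranking, which matters if others are to reproduce the comparison.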

The fluorogenic system enables optical reaction monitoring in 24-well plate formats, facilitating simultaneous tracking of multiple reactions [3]. This platform collects time-resolved kinetic data using standard well-plate readers, allowing efficient screening, optimization, and kinetic analysis. By integrating environmental considerations like cost, abundance, and recoverability into the evaluation process, such platforms promote selection of sustainable catalytic materials while maintaining rigorous performance standards [3].

Standardized Assessment Protocols

Experimental Methodologies for Reliable Benchmarking

Standardized experimental protocols form the foundation of reliable catalytic benchmarking. For database-driven approaches like CatTestHub, this involves carefully controlled probe reactions using well-characterized reference catalysts. The methanol decomposition and formic acid decomposition reactions employed for metal catalysts provide representative examples of standardized assessment methodologies [1]. These specific reactions were selected because they enable clear differentiation of catalytic performance while minimizing complications from side reactions or transport limitations.

For high-throughput screening approaches, standardized protocols involve detailed preparation and data collection procedures. The fluorogenic assay system for nitro-to-amine reduction follows a meticulous workflow [3]:

- Well Plate Setup: 24-well polystyrene plates are populated with 12 reaction wells and 12 corresponding reference wells, each containing precise mixtures of catalyst, nitronaphthalimide probe, aqueous N₂H₄, acetic acid, and H₂O totaling 1.0 mL volume [3].

- Real-time Monitoring: Once reactions initiate, plates undergo orbital shaking followed by fluorescence intensity scanning at 485 nm excitation/590 nm emission, with absorption spectra scanned from 300 to 650 nm [3].

- Data Collection Intervals: The shaking-fluorescence-absorption cycle repeats every 5 minutes for 80 minutes total, generating comprehensive kinetic profiles [3].

This systematic approach generates 32 data points per sample including fluorescence and UV absorption measurements, totaling over 7,000 data points across full catalyst libraries. The large data volume provides sufficient resolution for meaningful comparisons while enabling detection of reaction complexities through monitoring isosbestic point consistency [3].
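The data volume quoted above can be checked with simple arithmetic. The sketch below shows one reading that is consistent with the stated figures; the assumption that each catalyst contributes both a reaction well and a reference well to the total is ours and is not stated explicitly in the text.

```python
# Values taken from the protocol description above.
cycle_minutes = 5          # one shaking-fluorescence-absorption cycle
total_minutes = 80         # total monitoring time
measurement_types = 2      # fluorescence + absorption per cycle
n_catalysts = 114          # size of the screened library

# Assumption (not stated in the source): both the reaction well and its
# reference well are counted toward the library-wide total.
wells_per_catalyst = 2

cycles = total_minutes // cycle_minutes
points_per_sample = cycles * measurement_types
total_points = n_catalysts * wells_per_catalyst * points_per_sample

print(cycles, points_per_sample, total_points)
```

Under these assumptions the per-sample count matches the 32 points quoted, and the library-wide total exceeds 7,000.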

Data Processing and Quality Validation

Standardized assessment requires rigorous data processing and validation protocols. In high-throughput screening, original microplate reader data undergoes conversion to CSV files followed by transfer to structured databases like MySQL [3]. This facilitates systematic analysis while maintaining data integrity. For each catalyst, performance profiles incorporate multiple visualization formats:

- Absorption evolution spectra showing decaying reactant and growing product peaks

- Absorbance values over time for key wavelengths

- Isosbestic point stability monitoring

- Intermediate formation tracking [3]

These comprehensive profiles enable quality validation through consistency checks. Catalysts exhibiting unstable isosbestic points during reactions receive lower reliability scores, as this indicates complications like pH changes or complex mechanisms that undermine straightforward performance comparisons [3]. Similarly, samples showing significant intermediate accumulation receive lower selectivity scores, reflecting practical application requirements where long-lived reactive intermediates complicate product isolation [3].
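An isosbestic-point consistency check could look like the following minimal sketch. The metric (coefficient of variation of the absorbance at the isosbestic wavelength), the 5% tolerance, and the sample traces are illustrative assumptions, not the criteria used in the cited work [3].

```python
from statistics import mean, pstdev


def isosbestic_drift(absorbance_series):
    """Relative spread (std / |mean|) of absorbance at the isosbestic wavelength."""
    m = mean(absorbance_series)
    if m == 0:
        raise ValueError("mean absorbance is zero; relative drift is undefined")
    return pstdev(absorbance_series) / abs(m)


def is_stable(absorbance_series, tolerance=0.05):
    """Flag a run as stable if the relative drift stays within `tolerance` (assumed 5%)."""
    return isosbestic_drift(absorbance_series) <= tolerance


# Hypothetical absorbance readings at the isosbestic wavelength over time.
stable_run = [0.50, 0.51, 0.50, 0.49, 0.50]
drifting_run = [0.50, 0.55, 0.61, 0.68, 0.74]

print(is_stable(stable_run), is_stable(drifting_run))
```

A run that fails such a check would be a candidate for a lower reliability score rather than outright exclusion, in keeping with the scoring approach described above.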

The following diagram illustrates the complete experimental workflow for high-throughput catalytic benchmarking:

Database Architecture and Community Integration

The CatTestHub database implements a structured architecture designed for community-wide adoption and data integration. The following diagram illustrates how this platform connects diverse data types within a unified benchmarking framework:

Comparative Analysis of Benchmarking Approaches

The field employs distinct catalytic benchmarking methodologies, each with specific applications and advantages. The table below systematically compares database and screening approaches:

Table 1: Comparative Analysis of Catalytic Benchmarking Approaches

| Evaluation Criteria | Database Approach (CatTestHub) | High-Throughput Screening |
|---|---|---|
| Primary Focus | Community-standard reference data for performance validation [1] [4] | Accelerated catalyst discovery through multidimensional screening [3] |
| Data Generation | Curated collection of reproducible measurements across laboratories [1] | Automated parallel experimentation with real-time kinetic monitoring [3] |
| Catalyst Scope | Well-characterized reference materials (commercial/synthesized) [1] | Extensive libraries (100+ catalysts) with diverse compositions [3] |
| Key Metrics | Turnover rates free from transport limitations, standardized conditions [1] | Reaction completion time, selectivity, abundance, price, recoverability [3] |
| Implementation | Open-access spreadsheet format adhering to FAIR principles [1] | Fluorogenic assay system with plate readers and automated analysis [3] |
| Community Role | Centralized platform for data sharing and comparative analysis [1] | Methodology standardization enabling cross-study comparisons [3] |

Essential Research Reagent Solutions

Catalytic benchmarking relies on specialized materials and instrumentation to ensure reproducible results. The following table details key research reagents and their functions in standardized assessments:

Table 2: Essential Research Reagents for Catalytic Benchmarking

| Reagent/Instrument | Function in Benchmarking | Application Examples |
|---|---|---|
| Standard Reference Catalysts | Provide baseline performance measurements for cross-study comparisons [1] | EuroPt-1, EUROCAT materials, World Gold Council standards [1] |
| Probe Molecules | Enable standardized activity measurements through well-defined reactions [1] [3] | Methanol, formic acid for metal catalysts; alkylamines for solid acids [1] |
| Fluorogenic Assay Systems | Facilitate high-throughput screening through optical reaction monitoring [3] | Nitronaphthalimide reduction for catalyst performance ranking [3] |
| Well Plate Readers | Allow parallelized kinetic data collection across multiple reactions [3] | BioTek Synergy HTX for simultaneous fluorescence/absorption monitoring [3] |
| Characterization Standards | Ensure consistent material properties assessment across laboratories [1] | BET surface area, TEM particle size, acid site quantification [1] |

Catalytic benchmarking has evolved from isolated comparisons to systematic community-driven initiatives that establish reproducible standards across the research ecosystem. Platforms like CatTestHub demonstrate how open-access databases incorporating FAIR principles can provide reference points for evaluating new catalytic materials and technologies [1] [4]. Simultaneously, high-throughput screening methodologies enable multidimensional catalyst assessment that balances performance metrics with sustainability considerations [3].

The future of catalytic benchmarking lies in expanded community participation, with researchers contributing standardized kinetic information across diverse catalytic systems. This requires ongoing consensus-building around reference materials, probe reactions, and reporting formats. As these frameworks mature, they will accelerate catalyst discovery and validation while ensuring that performance claims are based on rigorous, comparable measurements. Ultimately, standardized assessment protocols strengthen the entire catalysis research ecosystem, enabling more efficient knowledge transfer from laboratory innovation to practical application.

Reproducible catalyst testing is the cornerstone of progress in catalysis science, enabling accurate comparison of new materials, reliable structure-function relationships, and validated mechanistic insights. However, the field faces a significant reproducibility crisis, where findings from one laboratory often cannot be replicated in another. This crisis primarily stems from a lack of standardized methodologies for evaluating catalytic performance. Inconsistent reporting of metrics, uncharacterized reactor hydrodynamics, and unaccounted transport phenomena introduce substantial variability, obscuring true catalytic behavior and impeding scientific and industrial progress [5]. This guide objectively compares standardized and non-standardized experimental approaches, providing a framework of community benchmarking standards to overcome these challenges and advance catalytic research.

Standardization Guidelines for Catalyst Testing

The move toward standardization addresses key procedural aspects of catalyst testing where inconsistencies most frequently occur. The core principles involve selecting appropriate reactors, confirming ideal operating conditions, and rigorously reporting data to enable direct comparisons.

Core Principles for Rigorous Testing

- Reactor Selection and Hydrodynamics: The choice of reactor and its hydrodynamic properties is foundational. Testing must be conducted in a reactor system with well-defined flow characteristics (e.g., perfectly mixed or plug flow) to ensure that observed rates are intrinsic to the catalyst and not artifacts of the reactor itself. The reactor must adhere to the behavior described by its design equations [5].

- Transport Phenomena Evaluation: Before attributing performance to catalytic kinetics, investigators must rule out the influence of transport limitations. This includes intraparticle diffusion (within catalyst pores) and interphase transport (between the fluid and the catalyst particle) [5]. Experiments should demonstrate that reaction rates are not limited by these physical transport processes.

- Reporting at Differential Conversion: Catalyst performance data, specifically rates and selectivities, should be measured and reported at low, differential conversion (typically below 20%) of the limiting reagent. This practice ensures that the reported data reflects the intrinsic kinetics of the catalyst, free from confounding issues such as reactant depletion, product inhibition, or approach to equilibrium [5].

Comparative Analysis: Standardized vs. Non-Standardized Practices

The impact of standardization becomes clear when comparing data quality and reproducibility across different methodologies. The table below summarizes the critical differences in approach and outcome.

Table 1: Comparison of Catalyst Testing Practices and Outcomes

| Aspect of Testing | Standardized & Rigorous Practice | Non-Standardized & Common Practice | Impact on Reproducibility |
|---|---|---|---|
| Reactor Hydrodynamics | Uses reactors with well-defined flow and mixing; confirms ideal behavior [5] | Uses reactors with complex or uncharacterized hydrodynamics | High: Fundamental rate data cannot be separated from reactor-specific fluid dynamics. |
| Transport Limitations | Systematically evaluates and rules out mass and heat transport limitations [5] | Does not test for or report on potential transport effects | High: Reported "activity" may reflect diffusion speeds, not intrinsic catalytic activity. |
| Reporting Conversion | Reports initial rates at differential conversion (<20%) [5] | Reports data at high or complete conversion | High: Data is conflated with reactor flow patterns and equilibrium effects. |
| Performance Metrics | Reports turnover frequencies (TOF) based on quantified active sites | Reports bulk conversion or yield without site normalization | Medium: Precludes direct comparison of different catalyst materials. |
| Synthesis Protocols | Uses machine-readable, step-by-step action sequences with defined parameters [6] | Describes synthesis in unstructured, prose-like natural language [6] | High: Minor, unreported variations in procedure lead to different catalyst structures. |

Quantitative Impact of Standardization

The implementation of standardized, machine-readable synthesis protocols demonstrates a quantifiable benefit. A proof-of-concept study using a transformer model to extract synthesis protocols for single-atom catalysts (SACs) estimated that manual literature analysis of 1,000 publications would require a minimum of 500 researcher-hours. In contrast, automated text mining of the same corpus using standardized protocols achieved the same goal in 6-8 hours, representing a more than 50-fold reduction in time investment and dramatically accelerating the research cycle [6].
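The "more than 50-fold" figure follows directly from the numbers quoted. The short check below uses only those values (500 researcher-hours versus 6-8 hours); the variable names are ours.

```python
manual_hours = 500            # minimum manual effort quoted for 1,000 publications
automated_hours = (6, 8)      # quoted range for automated text mining

# Speed-up at each end of the automated range.
speedups = [manual_hours / h for h in automated_hours]

# The conservative (slowest automated run) estimate sets the lower bound.
lower_bound = min(speedups)
upper_bound = max(speedups)

print(f"{lower_bound:.1f}x to {upper_bound:.1f}x")
```

Even at the slow end of the automated range, the reduction stays above the 50-fold threshold stated in the text.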

Experimental Protocols for Community Benchmarking

To establish community-wide standards, specific experimental protocols must be adopted. These methodologies ensure that data generated in different laboratories is directly comparable.

Protocol for Measuring Intrinsic Kinetics

Objective: To obtain a reaction rate that is free from transport limitations and reflective of the catalyst's intrinsic activity.

- Catalyst Pretreatment: Activate the catalyst in a controlled atmosphere (e.g., flowing H₂ for reduction) at a specified temperature and duration. Report the gas, space velocity, temperature ramp, and final hold time.

- Transport Limitation Testing:

  - Interphase Diffusion: Measure the reaction rate at constant temperature and varying gas flow rates (or agitation speeds for slurry reactors) while maintaining constant catalyst mass. The observed rate should be independent of the external flow/agitation regime.

  - Intraparticle Diffusion: Measure the reaction rate using catalyst samples of different particle sizes. The observed rate should be independent of particle size once intra-particle diffusion is eliminated.

- Kinetic Measurement: Conduct testing at differential conversion (<20%). Monitor conversion as a function of time-on-stream to account for deactivation. Report the initial, steady-state rate.

- Active Site Quantification: Use chemisorption (e.g., H₂, CO, NH₃), titration, or spectroscopic techniques to count the number of active sites. Report the turnover frequency (TOF), defined as the number of molecules converted per active site per unit time [5].
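The checks in this protocol can be expressed as small helper functions. The sketch below is illustrative: the function names, the 5% tolerance for "independent of flow rate or particle size", and the example numbers are assumptions, while the 20% differential-conversion limit comes from the guideline above.

```python
def turnover_frequency(rate_mol_per_s, active_sites_mol):
    """TOF in s^-1: moles converted per mole of active sites per second."""
    if active_sites_mol <= 0:
        raise ValueError("active site count must be positive")
    return rate_mol_per_s / active_sites_mol


def is_differential(conversion, limit=0.20):
    """True if fractional conversion of the limiting reagent is below `limit`."""
    return 0.0 <= conversion < limit


def rate_is_independent(rates, rel_tol=0.05):
    """True if every rate lies within `rel_tol` of the mean (assumed 5% criterion).

    Applied to rates measured at different flow rates (interphase test)
    or different particle sizes (intraparticle test).
    """
    avg = sum(rates) / len(rates)
    return all(abs(r - avg) <= rel_tol * avg for r in rates)


# Hypothetical measurements, for illustration only.
tof = turnover_frequency(rate_mol_per_s=2.0e-7, active_sites_mol=1.0e-6)
flow_test_ok = rate_is_independent([1.00, 1.02, 0.99])      # rates at three flow rates
particle_test_ok = rate_is_independent([1.0, 1.4, 1.8])     # rates at three particle sizes

print(tof, is_differential(0.08), flow_test_ok, particle_test_ok)
```

In this made-up example the flow-rate test passes but the particle-size test fails, which would indicate that intraparticle diffusion still influences the measured rate and that the TOF should not yet be reported as intrinsic.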

Protocol for Standardized Synthesis Reporting

Objective: To create a machine-readable and reproducible synthesis procedure.

- Action Sequence Definition: Break down the synthesis into discrete, defined action terms (e.g., dissolve, mix, impregnate, dry, calcine, reduce) [6].

- Parameter Association: For each action, systematically report all associated parameters.

  - Temperature: Ramp rate, hold temperature, and hold time.

  - Atmosphere: Gas composition and flow rate.

  - Concentrations: Precursor types and concentrations.

  - Volumes and Masses: Precise quantities of all reagents and solvents.

- Structured Reporting: Report the procedure as a structured sequence of actions and parameters instead of a paragraph of prose. This format is both human-readable and easily parsed by language models for automated analysis [6].
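A structured action sequence of this kind might be represented as follows. This is a sketch under stated assumptions: the action vocabulary is taken from the list above, but the class name, parameter keys, and every numeric value are hypothetical and do not reproduce the schema used in the cited study [6].

```python
import json
from dataclasses import dataclass, field, asdict

# Action terms named in the protocol above.
ALLOWED_ACTIONS = {"dissolve", "mix", "impregnate", "dry", "calcine", "reduce"}


@dataclass
class SynthesisStep:
    action: str
    parameters: dict = field(default_factory=dict)

    def __post_init__(self):
        # Reject free-text actions so the sequence stays machine-readable.
        if self.action not in ALLOWED_ACTIONS:
            raise ValueError(f"unknown action: {self.action!r}")


# Illustrative protocol; all values are placeholders, not a recommended recipe.
protocol = [
    SynthesisStep("dissolve", {"precursor": "Ni(NO3)2", "concentration_mol_per_L": 0.1,
                               "solvent": "H2O", "volume_mL": 10.0}),
    SynthesisStep("impregnate", {"support": "Al2O3", "support_mass_g": 1.0}),
    SynthesisStep("dry", {"temperature_C": 110, "hold_time_h": 12, "atmosphere": "air"}),
    SynthesisStep("calcine", {"ramp_C_per_min": 5, "temperature_C": 500, "hold_time_h": 4,
                              "atmosphere": "air", "flow_mL_per_min": 50}),
]

# Serialize to JSON so the same record is readable by people and by parsers.
serialized = json.dumps([asdict(step) for step in protocol], indent=2)
print(serialized)
```

Encoding units in the parameter keys (for example `temperature_C`) is one simple way to avoid the ambiguity that prose descriptions often leave open.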

Table 2: Essential Research Reagent Solutions and Materials

| Reagent/Material | Function in Catalyst Testing & Synthesis | Standardization Consideration |
|---|---|---|
| Metal Precursors | Source of the active catalytic metal (e.g., Ni(NO₃)₂, H₂PtCl₆) | Report exact salt, purity, and supplier. Standardize precursor solutions for incipient wetness impregnation. |
| Catalyst Support | High-surface-area material to disperse active metal (e.g., Al₂O₃, SiO₂, TiO₂, C) | Characterize and report key properties: surface area, pore volume, pore size distribution, and impurity profile. |
| Probe Molecules | Used to quantify active sites and characterize surface properties (e.g., CO, H₂, NH₃, N₂O) | Standardize purity, adsorption conditions (temperature, pressure), and calibration procedures for chemisorption. |
| Reactant Feed Gases/Liquids | Source of reactants for activity testing (e.g., H₂, O₂, CO, alkanes) | Report purity and the presence of any additives or internal standards. Use mass flow controllers for precise dosing. |

Visualizing the Path to Rigorous Catalyst Testing

The following workflow diagrams outline the critical pathways for achieving standardized catalyst synthesis and performance evaluation.

Standardized Synthesis Protocol Workflow

Catalyst Testing and Validation Workflow

The adoption of community-wide benchmarking standards is not a constraint on creativity but a necessary foundation for reliable and cumulative progress in catalyst research. By standardizing protocols for synthesis, testing, and reporting—from using ideal reactors and reporting at differential conversion to structuring synthesis data for machine readability—the field can overcome its reproducibility crisis. This commitment to rigor will enable true comparisons between catalytic materials, accelerate the discovery cycle, and build a more robust and trustworthy body of scientific knowledge for developing the sustainable chemical processes of the future.

Evaluation frameworks are essential for quantifying progress, ensuring reproducibility, and maintaining data integrity in scientific research. For researchers in catalysis and drug development, these frameworks provide the standardized metrics and experimental protocols necessary to benchmark performance reliably. This guide examines the core components of modern evaluation frameworks, with a specific focus on community benchmarking standards for catalytic performance research.

Foundational Metrics for Quantitative Assessment

The core of any evaluation framework is a robust set of metrics that provide quantitative measures of performance. These metrics enable objective comparison across different systems, materials, or models.

Traditional Information Retrieval Metrics

In fields like catalysis research and data management, where literature and data retrieval are fundamental, traditional metrics offer proven assessment methods [7]:

- Precision @ K: Measures the fraction of retrieved items that are relevant, calculated as (Relevant items in top K) / K [7].

- Recall @ K: Measures the fraction of all relevant items that were successfully retrieved, calculated as (Relevant items in top K) / (Total relevant items) [7].

- Mean Reciprocal Rank (MRR): Evaluates the ranking quality of relevant results, calculated as MRR = (1/|Q|) × Σ(1/rank_i), where rank_i is the position of the first relevant document for query i [7].

- Normalized Discounted Cumulative Gain (nDCG): Accounts for both relevance and ranking position with logarithmic discounting, providing a more nuanced view of retrieval quality [7].
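The four metrics above can be written out directly from their definitions. The sketch below is a plain-Python illustration with made-up document identifiers; the nDCG function uses one common variant (linear gain with a log2 position discount), which is an assumption since the text does not specify the exact form.

```python
import math


def precision_at_k(ranked, relevant, k):
    """(Relevant items in top K) / K."""
    return sum(1 for item in ranked[:k] if item in relevant) / k


def recall_at_k(ranked, relevant, k):
    """(Relevant items in top K) / (Total relevant items)."""
    return sum(1 for item in ranked[:k] if item in relevant) / len(relevant)


def mean_reciprocal_rank(rankings, relevants):
    """Average over queries of 1 / rank of the first relevant item (0 if none)."""
    total = 0.0
    for ranked, relevant in zip(rankings, relevants):
        for position, item in enumerate(ranked, start=1):
            if item in relevant:
                total += 1.0 / position
                break
    return total / len(rankings)


def ndcg_at_k(gains, k):
    """nDCG for graded relevance `gains` listed in ranked order."""
    def dcg(values):
        return sum(g / math.log2(i + 1) for i, g in enumerate(values[:k], start=1))

    ideal = dcg(sorted(gains, reverse=True))
    return dcg(gains) / ideal if ideal > 0 else 0.0


# Hypothetical retrieval results for two queries.
ranked_q1 = ["d3", "d1", "d7", "d2"]
relevant_q1 = {"d1", "d2", "d9"}
ranked_q2 = ["d5", "d6"]
relevant_q2 = {"d5"}

print(precision_at_k(ranked_q1, relevant_q1, 4))
print(recall_at_k(ranked_q1, relevant_q1, 4))
print(mean_reciprocal_rank([ranked_q1, ranked_q2], [relevant_q1, relevant_q2]))
print(ndcg_at_k([1, 2, 3], 3))
```

For the first query, the first relevant document appears at rank 2, and for the second at rank 1, so the MRR is the mean of 1/2 and 1.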

Specialized Framework Metrics

Modern evaluation frameworks have developed specialized metrics for complex systems. The RAGAS (Retrieval-Augmented Generation Assessment) framework, for instance, employs a composite scoring approach [7]:

RAGAS Score = α×Faithfulness + β×Answer_Relevancy + γ×Context_Precision + δ×Context_Recall
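As a worked instance of the weighted sum above, the sketch below combines four component scores with equal weights. It does not call the RAGAS library; the function name, the component values, and the choice α = β = γ = δ = 0.25 are illustrative assumptions.

```python
def composite_score(metrics, weights):
    """Weighted sum of component scores; both dicts must share the same keys."""
    if set(metrics) != set(weights):
        raise ValueError("metrics and weights must have identical keys")
    return sum(weights[name] * metrics[name] for name in metrics)


# Hypothetical component scores in [0, 1].
metrics = {
    "faithfulness": 0.9,
    "answer_relevancy": 0.8,
    "context_precision": 0.7,
    "context_recall": 0.6,
}

# Assumed equal weights (alpha = beta = gamma = delta = 0.25).
weights = {name: 0.25 for name in metrics}

score = composite_score(metrics, weights)
print(round(score, 3))
```

With equal weights the composite reduces to the arithmetic mean of the four components; unequal weights would need to be reported alongside the score for it to be comparable across studies.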

Table: Comparative Analysis of Evaluation Framework Metrics

| Framework | Primary Metrics | Application Scope | Technical Approach | Data Requirements |
|---|---|---|---|---|
| RAGAS | Faithfulness, Answer Relevancy, Context Precision/Recall [7] | Retrieval-Augmented Generation systems [8] | LLM-as-judge with traditional metrics [7] | Input queries, retrieved contexts, generated answers [8] |
| OpenAI Evals | Match, Includes, Choice, Model-graded [7] | General LLM capabilities [7] | Modular, composable evaluation functions [7] | Standardized datasets, expected outputs [7] |
| Anthropic Constitutional AI | Helpfulness, Harmlessness, Honesty [7] | AI safety and alignment [7] | Principle-based assessment [7] | Constitutional principles, human oversight data [7] |
| Traditional Catalysis Benchmarking | Turnover frequency, selectivity, conversion rate [1] | Experimental catalysis [1] | Experimental measurement under standardized conditions [1] | Well-characterized catalyst materials, controlled reaction data [1] |

Experimental Protocols and Methodologies

Robust experimental protocols ensure that evaluations are reproducible, comparable, and scientifically valid. Community-wide benchmarking initiatives depend on standardized methodologies.

Community Benchmarking for Catalysis Research

The CatTestHub database exemplifies a structured approach to experimental catalysis benchmarking [1]. Its protocol emphasizes:

- Standardized Materials: Use of well-characterized, abundantly available catalysts sourced from commercial vendors (e.g., Zeolyst, Sigma-Aldrich) or reliably synthesized materials [1].

- Controlled Reaction Conditions: Measurement of catalytic turnover rates at agreed-upon reaction conditions, free from influences such as catalyst deactivation, heat/mass transfer limitations, and thermodynamic constraints [1].

- Data Documentation: Comprehensive curation of reaction conditions, reactor configurations, and catalyst characterization data to enable reproduction [1].

- FAIR Principles Implementation: Ensuring data is Findable, Accessible, Interoperable, and Reusable through standardized formats and rich metadata [1].

AI System Evaluation Protocols

For AI and machine learning systems, evaluation protocols have evolved to address complex cognitive architectures:

- LLM-as-Judge Methodology: Leveraging AI models themselves as evaluators through structured prompting and criteria-based assessment, achieving high correlation (r = 0.89) with human judgment [7].

- Multi-turn Conversation Evaluation: Frameworks like MT-Bench assess performance across 8 categories including writing, reasoning, math, and coding through iterative interactions [7].

- Red Team Exercises: Adversarial testing to identify failure modes, safety vulnerabilities, and potential harmful outputs before deployment [7].

The following diagram illustrates the integrated workflow of a modern evaluation framework, from experimental design to data integrity assurance:

Evaluation Framework Workflow

Data Integrity and Governance Foundations

Data integrity forms the bedrock of reliable evaluation frameworks, requiring systematic approaches to data quality, security, and management.

Data Governance Components

Effective data governance frameworks incorporate several critical components that directly support evaluation integrity [9]:

- Data Quality Management: Ensures data accuracy, completeness, and reliability through automated monitoring, validation, and improvement processes with target data quality scores >95% [9].

- Data Catalog and Metadata Management: Creates centralized repositories for data asset discovery, documentation, and relationship mapping, enabling traceability and reproducibility [9].

- Data Security and Privacy: Implements comprehensive protection measures ensuring data confidentiality, integrity, and regulatory compliance across all systems [9].

- Performance Measurement: Tracks governance effectiveness through business-impact metrics enabling data-driven optimization and maturity advancement [9].

Implementation in Research Databases

The CatTestHub catalysis database demonstrates practical implementation of data integrity principles through [1]:

- Unique Identifiers: Use of digital object identifiers (DOI), ORCID, and funding acknowledgements for accountability and traceability [1].

- Structural Characterization: Providing nanoscopic context for macroscopic catalytic measurements through detailed material characterization [1].

- Spreadsheet-based Architecture: Ensuring longevity, accessibility, and ease of use through common formats and structures [1].

- Metadata Standards: Employing rich metadata to provide context for reported data, supporting proper interpretation and reuse [1].
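A record-level completeness check built on these principles could be sketched as below. The field names and the example record are hypothetical and do not reproduce the actual CatTestHub spreadsheet schema; only the general identifier shapes (a DOI beginning with "10." and a 16-character ORCID iD in four hyphenated groups) are relied on.

```python
import re

# Hypothetical required fields for one benchmark entry.
REQUIRED_FIELDS = ("doi", "orcid", "funding", "catalyst", "reaction",
                   "reactor", "characterization")

# ORCID iDs have four groups of four characters; the last may be a digit or 'X'.
ORCID_PATTERN = re.compile(r"^\d{4}-\d{4}-\d{4}-\d{3}[\dX]$")


def validate_record(record):
    """Return a list of problems; an empty list means the record passes."""
    problems = [f"missing: {name}" for name in REQUIRED_FIELDS if not record.get(name)]
    doi = record.get("doi", "")
    if doi and not doi.startswith("10."):
        problems.append("doi does not start with '10.'")
    orcid = record.get("orcid", "")
    if orcid and not ORCID_PATTERN.match(orcid):
        problems.append("orcid is not in 0000-0000-0000-0000 form")
    return problems


# Placeholder record; every value here is illustrative.
complete = {
    "doi": "10.1234/example",
    "orcid": "0000-0002-1825-0097",
    "funding": "Example grant 000",
    "catalyst": "Pt/SiO2 (commercial)",
    "reaction": "methanol decomposition",
    "reactor": "packed bed",
    "characterization": {"BET_surface_area_m2_per_g": 200},
}
incomplete = {"doi": "example", "orcid": "1234", "catalyst": "Pt/SiO2"}

print(validate_record(complete))
print(validate_record(incomplete))
```

Running such a check before submission is one way a contributor could catch missing identifiers or characterization data early, though the real database may enforce different or additional requirements.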

Essential Research Reagents and Materials

Standardized materials and reagents are fundamental to reproducible experimental evaluation across scientific domains.

Table: Essential Research Reagent Solutions for Catalysis Benchmarking

| Reagent/Material | Function | Source Examples | Critical Specifications |
|---|---|---|---|
| Reference Catalysts | Standardized materials for activity comparison [1] | Johnson-Matthey EuroPt-1, World Gold Council standards [1] | Well-characterized structure, composition, and particle size [1] |
| Zeolite Frameworks | Acid-catalyst benchmarks for specific reaction types [1] | International Zeolite Association (MFI, FAU frameworks) [1] | Defined pore structure, acidity, and Si/Al ratio [1] |
| Methanol (>99.9%) | Benchmark reactant for decomposition studies [1] | Sigma-Aldrich (34860-1L-R) [1] | High purity, minimal water content [1] |
| Evaluation Datasets | Standardized inputs and expected outputs for validation [10] | Confident AI, Hugging Face Hub [10] [7] | Comprehensive coverage, expert-validated, version-controlled [10] |

Integrated Framework Architecture

Modern evaluation requires combining specialized frameworks rather than relying on single-solution approaches. The most effective systems employ layered architectures that address different aspects of the evaluation lifecycle.

Multi-Layer Framework Integration

This integrated approach enables comprehensive evaluation across multiple dimensions:

- Modular Framework Composition: Combining specialized tools like RAGAS for retrieval assessment, DeepEval for unit-testing LLM outputs, and Hugging Face Evaluate for standardized metric calculation [8] [7].

- Cross-Framework Verification: Using multiple evaluation methodologies to validate results and minimize biases inherent in any single approach [7].

- Continuous Evaluation Pipelines: Implementing automated testing in CI/CD workflows to catch regressions and enable rapid iteration while maintaining quality standards [8].

Community-Driven Benchmarking Standards

The evolution of evaluation frameworks increasingly emphasizes community-driven standards that align research with public priorities and scientific needs.

Publicly-Commissioned Benchmarks

Initiatives like the proposed TELOS (Targeted Evaluations for Long-term Objectives in Science) program highlight the strategic importance of coordinated benchmarking [11]. This approach addresses critical gaps in the evaluation ecosystem by:

- Aligning with Public Incentives: Directing research toward high-impact scientific challenges rather than solely commercial applications [11].

- Leveraging Public Expertise: Incorporating government and academic expertise in problems of national importance, such as energy resilience and healthcare innovation [11].

- Establishing Public Credibility: Providing authoritative endorsement and visibility through public leaderboards that attract talent and resources to priority areas [11].

Characteristics of Effective Community Benchmarks

Successful community benchmarking initiatives share several key characteristics:

- Clear Objective Functions: Well-defined success metrics that enable unambiguous performance assessment, as demonstrated in protein folding (CASP) and ancient text recovery (Vesuvius Challenge) [11].

- Standardized Datasets: High-quality, anonymized datasets that enable reproducible evaluation across research groups and institutions [11].

- Public Leaderboards: Transparent performance tracking that drives competition and accelerates progress through visible recognition [11].

- Iterative Refinement: Continuous improvement of evaluation methodologies based on community feedback and evolving research needs [11].

For catalysis researchers and drug development professionals, engaging with these evolving evaluation standards ensures their work contributes to and benefits from community-wide progress in measurement science. The integration of robust metrics, standardized protocols, and rigorous data integrity practices provides the foundation for breakthrough discoveries and reliable benchmarking across the scientific ecosystem.

From Qualitative Comparisons to Quantitative Science

Benchmarking, once a qualitative management tool for comparing business practices, has undergone a profound transformation into a rigorous scientific methodology. Its origins lie in the corporate sector, where it was defined as a continuous, systematic process for evaluating the products, services, and work processes of organizations that are recognized as representing best practices for the purpose of organizational improvement [12]. Fortune 500 companies like Xerox Corporation and AT&T embraced this approach to duplicate the success of top performers [12]. In marketing, this initially involved comparing performance against competitors and industry leaders to set targets and guide strategic decisions [13].

The critical shift from a qualitative exercise to a quantitative science began with the introduction of robust analytical frameworks, most notably Data Envelopment Analysis (DEA). Originally proposed by Charnes, Cooper, and Rhodes in 1978, DEA provided a methodology to compute the relative productivity (or efficiency) of various decision-making units using multiple inputs and outputs simultaneously [12]. This allowed for the identification of role models and the setting of specific, data-driven goals for improvement, addressing a major gap in early benchmarking efforts [12]. The application of DEA to marketing productivity, for instance in benchmarking retail stores, marked a significant step toward a more formal and scientific process [12].
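For readers unfamiliar with DEA, the ratio form of the Charnes-Cooper-Rhodes model can be written as follows. The notation is an assumption chosen for this illustration: there are n decision-making units, unit j consumes inputs x_{ij} (i = 1, ..., m) and produces outputs y_{rj} (r = 1, ..., s), and the unit under evaluation carries the index 0.

```latex
\max_{u,\,v}\;\; \theta_0 = \frac{\sum_{r=1}^{s} u_r\, y_{r0}}{\sum_{i=1}^{m} v_i\, x_{i0}}
\quad \text{subject to} \quad
\frac{\sum_{r=1}^{s} u_r\, y_{rj}}{\sum_{i=1}^{m} v_i\, x_{ij}} \le 1 \;\; (j = 1, \dots, n),
\qquad u_r \ge 0,\;\; v_i \ge 0 .
```

Each unit is scored with the weights most favorable to it, so a unit with an efficiency score of 1 lies on the best-practice frontier and can serve as a role model for the others.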

Today, in fields like catalysis science, benchmarking is recognized as a community-driven activity involving consensus-based decisions on making reproducible, fair, and relevant assessments [2]. This evolution positions benchmarking not just as a tool for comparison, but as a rigorous framework for scientific validation and progress.

The Catalysis Science Paradigm: A Community-Driven Standard

The field of catalysis science exemplifies the modern, scientific application of benchmarking. Here, benchmarking has been formalized to accelerate understanding of complex reaction systems by integrating experimental and theoretical data [2]. The core objective is to make reproducible, fair, and relevant assessments of catalytic performance.

Core Principles and Performance Metrics

In catalysis, benchmarking establishes consensus on the key metrics and methods required for meaningful comparison. The foundational principles include careful documentation, archiving, and sharing of methods and measurements to maximize the value of research data [2]. This ensures that comparisons between new catalysts and standard reference catalysts are valid and reliable.

Table 1: Essential Catalyst Performance Metrics for Benchmarking

| Metric | Description | Role in Benchmarking |
|---|---|---|
| Activity | The rate of catalytic reaction. | Measures the catalyst's efficiency in accelerating the desired chemical transformation [2]. |
| Selectivity | The catalyst's ability to direct the reaction toward the desired product. | Crucial for evaluating process efficiency and minimizing byproducts [2]. |
| Deactivation Profile | The stability of the catalyst over time under operating conditions. | Determines the catalyst's operational lifetime and economic viability [2]. |

Experimental Protocols for Catalytic Performance

A rigorous benchmarking study in catalysis requires a standardized experimental protocol to ensure data comparability. The following workflow outlines the key stages in generating benchmark-quality data for a catalytic reaction.

Figure: Catalysis Benchmarking Workflow

The methodology involves several critical stages:

- Catalyst Synthesis and Characterization: The catalyst is prepared and thoroughly characterized using techniques like X-ray diffraction (XRD) for structure, surface area analysis (BET), and transmission electron microscopy (TEM) to determine morphology and particle size [2].

- Reactor Setup and Calibration: The catalytic testing apparatus is meticulously calibrated to ensure accurate control and measurement of reaction conditions.

- Standard Reaction Conditions: Tests are performed under a set of community-agreed standard conditions, including temperature (T), pressure (P), and feed gas composition, to allow for direct comparison with other catalysts [2].

- Performance Evaluation and Stability Testing: The catalyst's activity and selectivity are measured, followed by long-term testing to assess its stability and deactivation profile [2].

- Data Analysis, Validation, and Reporting: Results are analyzed and compared against a reference catalyst. All data, along with detailed methodologies, are documented and shared according to community standards to ensure full reproducibility [2].

Essential Guidelines for Rigorous Benchmarking Design

The transition to scientific benchmarking requires adherence to strict design principles to ensure accuracy and avoid bias. Comprehensive guidelines have been developed, particularly in computational biology, but are applicable across scientific domains [14].

Table 2: Essential Guidelines for Rigorous Method Benchmarking

| Guideline Principle | Description & Best Practices | Common Pitfalls to Avoid |
|---|---|---|
| Defining Purpose & Scope [14] | Clearly state the benchmark's goal (e.g., neutral comparison vs. new method demonstration). A neutral benchmark should be as comprehensive as possible. | A scope that is too narrow yields unrepresentative and misleading results. |
| Selection of Methods [14] | Include all relevant methods or a justified, representative subset. For neutral studies, inclusion criteria (e.g., software availability) must be unbiased. | Excluding key state-of-the-art methods, which skews the comparison. |
| Selection of Datasets [14] | Use a variety of datasets (simulated with known ground truth and real experimental data) to evaluate performance under diverse conditions. | Using too few datasets or simulation scenarios that are overly simplistic and do not reflect real-world complexity. |
| Evaluation Criteria [14] | Select key quantitative performance metrics that translate to real-world performance. Use multiple metrics to reveal different strengths and trade-offs. | Relying on a single metric or metrics that give over-optimistic estimates of performance. |

A critical design choice is the use of simulated versus real data. Simulated data provide a known "ground truth," enabling precise quantitative evaluation, but the simulations must accurately reflect the properties of real experimental data to be meaningful [14]. Conversely, real data reflect actual experimental conditions but may lack a perfectly known ground truth, making absolute performance assessment more challenging.

The Scientist's Toolkit: Research Reagent Solutions for Catalysis Benchmarking

Conducting a high-quality benchmarking study in catalysis requires access to well-characterized materials and tools. The following table details key research reagent solutions essential for experimental work in this field.

Table 3: Essential Research Reagents and Materials for Catalysis Benchmarking

| Reagent/Material | Function in Benchmarking |
|---|---|
| Reference Catalyst | A standard, well-characterized catalyst (e.g., certain types of supported platinum or zeolites) used as a benchmark to compare the performance of newly developed catalysts under identical conditions [2]. |
| High-Purity Gases/Feedstocks | Gases and chemical feedstocks of certified high purity are essential to ensure that performance metrics (activity, selectivity) are not skewed by impurities or side reactions. |
| Standardized Reactor Systems | Commercially available or custom-built reactor systems (e.g., plug-flow, continuous-stirred tank reactors) that allow for precise control and measurement of temperature, pressure, and flow rates. |
| Characterization Standards | Certified reference materials (e.g., specific powder samples for calibrating surface area analyzers) used to validate the accuracy of catalyst characterization instruments [2]. |

Experimental Benchmarking: Validating Observational Methods

A powerful demonstration of benchmarking's scientific rigor is the concept of experimental benchmarking, where results from observational (non-experimental) studies are compared against findings from randomized controlled trials (RCTs) to calibrate bias [15]. This approach, attributed to Robert LaLonde's 1986 work on evaluating employment programs, tests whether non-experimental methods can recover the unbiased causal estimates provided by experiments [15].

This methodology is applied in medical and social science research. For example, studies have compared non-experimental methods like propensity score matching to RCT data when evaluating the impact of inhaled corticosteroids in asthma or welfare-to-work programs [15]. The findings often reveal that while non-experimental methods can sometimes approximate experimental results, the potential for significant bias remains, which can critically impact policy and clinical decisions [15]. This practice underscores the role of rigorous benchmarking as the ultimate validator for scientific methods, separating robust findings from those that may be merely correlational or biased.

In the field of catalytic research and development, the rigorous evaluation of catalyst performance is fundamental to progress. For researchers, scientists, and drug development professionals, the triad of Activity, Selectivity, and Stability forms the cornerstone of a universal language for comparing and benchmarking catalytic materials. These metrics provide the quantitative foundation necessary to objectively assess a catalyst's efficiency, precision, and operational lifespan, enabling meaningful comparisons across different laboratories and research initiatives. As the chemical industry increasingly focuses on sustainability—driving demand for catalysts that enable cleaner energy production and reduce emissions—the importance of standardized performance assessment has never been greater [16].

The global refining industry itself generates large volumes of equilibrium fluid catalytic cracking catalysts (ECAT) as waste material, which highlights the need for standardized assessment to identify promising materials for secondary applications, such as plastic cracking catalysts [17]. This guide is structured to provide a practical framework for the experimental determination of these three metrics as key performance indicators (KPIs), complete with protocols, data presentation templates, and visualization tools designed to align with emerging community benchmarking standards.

Defining the Fundamental Metrics

Activity

Activity quantifies the rate at which a catalyst accelerates a chemical reaction toward equilibrium. It is a direct measure of a catalyst's efficiency in converting reactants into products. In industrial contexts, higher activity directly translates to improved process efficiency and lower operational costs, as it can reduce the required reactor size, lower energy input, or increase throughput [16]. For researchers, accurately measuring activity is the first step in evaluating a catalyst's potential.

Common measures of activity include:

- Conversion (X): The fraction of a key reactant consumed during the reaction.

- Turnover Frequency (TOF): The number of reactant molecules converted per active site per unit time, which provides a fundamental measure of intrinsic catalytic activity.

- Reaction Rate (r): The rate of formation of a specified product or consumption of a reactant, typically normalized to the mass or surface area of the catalyst.
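The activity measures above differ mainly in what the rate is normalized by. The sketch below shows one way the two normalizations could be computed; the function names and the example quantities are illustrative assumptions, and the active-site count is taken as given (in practice it comes from a separate measurement such as chemisorption).

```python
def turnover_frequency(moles_converted: float, time_s: float,
                       moles_active_sites: float) -> float:
    """TOF in s^-1: moles converted per mole of active sites per second."""
    if time_s <= 0 or moles_active_sites <= 0:
        raise ValueError("time and active-site count must be positive")
    return moles_converted / (moles_active_sites * time_s)


def mass_normalized_rate(moles_converted: float, time_s: float,
                         catalyst_mass_g: float) -> float:
    """Reaction rate in mol g^-1 s^-1, normalized to catalyst mass."""
    if time_s <= 0 or catalyst_mass_g <= 0:
        raise ValueError("time and catalyst mass must be positive")
    return moles_converted / (catalyst_mass_g * time_s)


# Illustrative numbers only: 1.8 mmol converted in one hour over
# 1.0 micromol of active sites on 0.10 g of catalyst.
tof = turnover_frequency(1.8e-3, 3600.0, 1.0e-6)
rate = mass_normalized_rate(1.8e-3, 3600.0, 0.10)
print(f"TOF = {tof:.2f} s^-1, rate = {rate:.2e} mol g^-1 s^-1")
```

Because TOF depends on the site count, two laboratories can only compare TOF values meaningfully if they also report how active sites were counted.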

Selectivity

Selectivity defines a catalyst's ability to direct the reaction pathway toward a desired product, minimizing the formation of by-products. This KPI is paramount for process economics and environmental impact, particularly in complex reactions like those in pharmaceuticals manufacturing, where it influences product yield and purity, simplifies downstream separation, and reduces waste [16]. In refining and petrochemicals, which account for nearly 40% of catalyst demand, selectivity directly influences product value and process sustainability [16].

Selectivity is typically expressed as:

- Product Selectivity (S): The fraction of the converted reactant that forms a specific desired product.

- Yield (Y): The combined measure of activity and selectivity, calculated as Conversion × Selectivity.

Stability

Stability measures a catalyst's ability to maintain its activity and selectivity over time under operational conditions. It reflects the catalyst's resistance to deactivation mechanisms such as sintering, coking, poisoning, or leaching. Catalyst stability is a critical determinant of operational continuity and total process cost, as it dictates the frequency of catalyst regeneration or replacement, directly impacting the viability of industrial processes [16]. The industry's focus on improving catalyst durability and longevity underscores its commercial importance [16].

Stability is often assessed through:

- Lifespan/Time-on-Stream: The total operational time before activity or selectivity falls below a critical threshold.

- Deactivation Rate Constant (k_d): A quantitative measure of the rate of activity loss over time.

- Cycle Life: For batch processes, the number of reaction-regeneration cycles a catalyst can undergo while maintaining performance.

Experimental Protocols for KPI Determination

To ensure data comparability for community benchmarking, the following standardized experimental protocols are recommended.

Protocol for Measuring Activity and Selectivity

Objective: To determine the conversion, selectivity, and yield of catalysts under controlled conditions.

Materials and Equipment:

- Fixed-Bed Flow Reactor System or equivalent batch reactor

- Mass Flow Controllers for gaseous feeds / HPLC Pump for liquid feeds

- On-line Gas Chromatograph (GC) equipped with appropriate detectors (e.g., FID, TCD), or an HPLC system for liquid-phase analysis

- Catalyst pelletizing press and sieve set (e.g., 60-80 mesh)

- Temperature-controlled furnace

Procedure:

- Catalyst Preparation: Pelletize the catalyst and sieve to obtain a specific particle size range (e.g., 180-250 µm, which corresponds to a 60-80 mesh fraction). Load a known mass (W) into the reactor tube.

- Reactor Conditioning: Prior to reaction, condition the catalyst in-situ under a specified gas stream (e.g., H₂ for reduction, He for drying) at a set temperature for a defined period.

- Establish Reaction Conditions: Bring the reactor to the target temperature (T) and pressure (P). Introduce the reactant feed at a precise flow rate (F).

- Data Collection: After achieving steady-state (typically 1 hour on stream), analyze the reactor effluent using the GC/HPLC at regular intervals (e.g., every 30 minutes). Collect data for at least three separate time points to confirm stability.

- Data Calculation:

- Conversion (X): \( X(\%) = \frac{\text{Moles}_{\text{Reactant,in}} - \text{Moles}_{\text{Reactant,out}}}{\text{Moles}_{\text{Reactant,in}}} \times 100 \)

- Selectivity to Product i (S_i): \( S_i(\%) = \frac{\text{Moles}_{\text{Product } i\text{,out}}}{\text{Total Moles of Reactant Converted}} \times \text{Stoichiometric Factor} \times 100 \)

- Yield of Product i (Y_i): \( Y_i(\%) = \frac{X \times S_i}{100} \)
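The three calculations can be chained as in the sketch below. It assumes that the stoichiometric factor means the moles of reactant consumed per mole of product i formed, which is one common reading; the function names and mole values are illustrative and not part of the protocol.

```python
def conversion_pct(moles_in: float, moles_out: float) -> float:
    """Conversion X (%) of the key reactant."""
    if moles_in <= 0:
        raise ValueError("moles_in must be positive")
    return 100.0 * (moles_in - moles_out) / moles_in


def selectivity_pct(moles_product: float, moles_in: float, moles_out: float,
                    stoich_factor: float = 1.0) -> float:
    """Selectivity S_i (%) toward one product.

    stoich_factor is assumed to be the moles of reactant consumed per mole
    of product formed (1.0 for a 1:1 reaction).
    """
    converted = moles_in - moles_out
    if converted <= 0:
        raise ValueError("no reactant was converted")
    return 100.0 * stoich_factor * moles_product / converted


def yield_pct(conversion: float, selectivity: float) -> float:
    """Yield Y_i (%) as conversion times selectivity, both in percent."""
    return conversion * selectivity / 100.0


# Illustrative values: 1.00 mol fed, 0.15 mol unreacted, 0.782 mol product.
X = conversion_pct(1.00, 0.15)
S = selectivity_pct(0.782, 1.00, 0.15)
Y = yield_pct(X, S)
print(f"X = {X:.1f}%, S = {S:.1f}%, Y = {Y:.1f}%")
```

Whatever convention is used for the stoichiometric factor, reporting it alongside the results lets others recompute selectivity from the raw mole balances.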

Protocol for Assessing Stability

Objective: To evaluate the change in catalyst performance over an extended time-on-stream.

Materials and Equipment:

- Same as the Protocol for Measuring Activity and Selectivity, with capacity for long-duration operation.

- Thermogravimetric Analyzer (TGA) for post-run coke analysis.

Procedure:

- Initial Performance Benchmark: Following the Protocol for Measuring Activity and Selectivity, measure the initial conversion (X₀) and selectivity (S₀) at standard conditions.

- Long-Term Operation: Continue the reaction under the same fixed conditions, periodically measuring conversion and selectivity at predefined intervals (e.g., every 4-8 hours for the first 24 hours, then daily).

- Post-Run Analysis: After a predetermined time (t) or when conversion drops below a set threshold (e.g., 50% of X₀), stop the reaction.

- Cool the reactor under an inert atmosphere.

- Recover the spent catalyst for characterization.

- Quantify coke deposition via TGA by burning off the carbon in air and measuring weight loss.

- Data Calculation:

- Relative Activity Retention: \( \text{Activity Retention at time } t\ (\%) = \frac{X_t}{X_0} \times 100 \)

- Deactivation Rate: Can be modeled from the activity decay profile.
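One simple way to model the decay profile is a first-order deactivation law, in which relative activity follows exp(-k_d t). The sketch below fits k_d by least squares on the logarithm of the retained conversion, with the line forced through the origin. Both the first-order form and the use of conversion as a proxy for activity are assumptions of this example (the proxy is most defensible at low conversion), and the time series is synthetic rather than measured.

```python
import math


def fit_first_order_kd(times_h, conversions_pct):
    """Estimate k_d (h^-1) assuming X(t) = X0 * exp(-k_d * t).

    The first entry must be the initial measurement at t = 0. The fit is a
    least-squares line through the origin for ln(X/X0) versus t.
    """
    x0 = conversions_pct[0]
    num = 0.0
    den = 0.0
    for t, x in zip(times_h, conversions_pct):
        num += t * math.log(x / x0)
        den += t * t
    if den == 0.0:
        raise ValueError("need at least one measurement at t > 0")
    return -num / den


# Synthetic series generated from a known k_d so the fit can be checked.
true_kd = 0.002  # h^-1, illustrative
times_h = [0, 24, 48, 72, 100]
conversions_pct = [85.0 * math.exp(-true_kd * t) for t in times_h]

k_d = fit_first_order_kd(times_h, conversions_pct)
half_life_h = math.log(2) / k_d  # time for conversion to fall to 50% of X0
print(f"k_d = {k_d:.4f} h^-1, time to 50% of X0 = {half_life_h:.0f} h")
```

If the log-transformed data are visibly curved, a first-order law is a poor description and another deactivation model should be considered.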

The logical sequence and data interdependence of these core experiments are visualized below.

Comparative Performance Data

Applying the above protocols generates quantitative data for direct catalyst comparison. The following tables present illustrative data for different catalyst formulations (Cat-A, Cat-B, Cat-C) in a model reaction.

Table 1: Comparative Activity and Selectivity Performance at Standard Conditions (T=350°C, P=1 atm)

| Catalyst ID | Conversion (%) | Selectivity to Target (%) | Yield of Target (%) | TOF (s⁻¹) |
|---|---|---|---|---|
| Cat-A | 85 | 92 | 78.2 | 0.45 |
| Cat-B | 78 | 95 | 74.1 | 0.51 |
| Cat-C | 92 | 85 | 78.2 | 0.38 |

Table 2: Long-Term Stability Performance Over 100 Hours Time-on-Stream

| Catalyst ID | Initial Conversion, X₀ (%) | Conversion at t=100h, X₁₀₀ (%) | Activity Retention (%) | Coke Deposited (wt%) |
|---|---|---|---|---|
| Cat-A | 85 | 82 | 96.5 | 3.2 |
| Cat-B | 78 | 70 | 89.7 | 7.8 |
| Cat-C | 92 | 75 | 81.5 | 12.5 |

Analysis of Comparative Data:

- Cat-A demonstrates an optimal balance of high activity, excellent selectivity, and superior stability, as evidenced by its minimal deactivation and low coke formation. This profile is ideal for continuous industrial processes.

- Cat-B shows the highest intrinsic activity (TOF) and best selectivity but exhibits moderate deactivation, suggesting potential susceptibility to poisoning or coking.

- Cat-C, while achieving the highest initial conversion, suffers from lower selectivity and the poorest stability, indicating rapid deactivation likely linked to its high coke formation.
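The derived columns in the two tables follow directly from the measured ones, which makes them easy to cross-check. The sketch below recomputes yield and activity retention from the illustrative conversion and selectivity values; the dictionary layout and function name are assumptions made for this example.

```python
# Measured values copied from the illustrative tables above.
catalysts = {
    "Cat-A": {"X0": 85, "S": 92, "X100": 82},
    "Cat-B": {"X0": 78, "S": 95, "X100": 70},
    "Cat-C": {"X0": 92, "S": 85, "X100": 75},
}


def derived_metrics(entry: dict) -> dict:
    """Recompute yield and 100 h activity retention, rounded to one decimal."""
    return {
        "yield_pct": round(entry["X0"] * entry["S"] / 100, 1),
        "retention_pct": round(100 * entry["X100"] / entry["X0"], 1),
    }


results = {name: derived_metrics(entry) for name, entry in catalysts.items()}
for name, metrics in results.items():
    print(name, metrics)
```

Note that Cat-A and Cat-C reach the same yield by different routes (higher selectivity versus higher conversion), which is why yield alone does not distinguish them and the stability data are needed.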

The Scientist's Toolkit: Essential Research Reagents & Materials

The following table details key materials and reagents essential for conducting the standardized experiments described in this guide.

Table 3: Essential Research Reagents and Materials for Catalytic Testing

| Item Name | Function/Application | Key Characteristics |
|---|---|---|
| Reference Catalyst (e.g., ECAT Sample) | Serves as a benchmark material for cross-laboratory performance comparison and method validation [17]. | Well-characterized composition and known performance profile. |
| High-Purity Gaseous Feeds (H₂, N₂, He, Air) | Used as reactant, carrier gas, purge gas, or for catalyst conditioning. | Ultra-high purity (≥99.999%) to prevent catalyst poisoning. |
| Certified Calibration Gases | Quantitative calibration of Gas Chromatographs (GC) for accurate product identification and quantification. | Certified mixture composition with known uncertainty. |
| Silica/Alumina Support Materials | Common high-surface-area supports for dispersing active catalytic phases. | Controlled pore size distribution and high thermal stability. |
| Active Metal Precursors (e.g., H₂PtCl₆, Ni(NO₃)₂) | Salts used in the preparation of supported metal catalysts via impregnation. | High solubility and purity to ensure reproducible catalyst synthesis. |
| Thermogravimetric Analysis (TGA) Instrument | Quantifies coke deposition on spent catalysts and determines thermal stability. | High-temperature capability with controlled atmosphere. |

The rigorous application of Activity, Selectivity, and Stability as fundamental KPIs provides an objective framework for catalyst evaluation, crucial for advancing catalytic science. The experimental protocols and data standardization presented here offer a pathway toward community-wide benchmarking standards, enabling more direct comparison of research outcomes and accelerating the development of next-generation catalysts. This is particularly vital for emerging applications such as green hydrogen production, carbon capture, and chemical recycling, where catalyst performance is a key enabling factor [16]. As the field evolves with trends like AI-enabled optimization and nanostructured materials, a consistent approach to measuring these foundational metrics will ensure that research efforts are quantifiable, comparable, and effectively translated into industrial innovation.

Implementing Benchmarking Standards: Practical Frameworks and AI-Driven Approaches

Standardized Experimental Protocols for Consistent Performance Evaluation

The pursuit of reproducible catalysis research relies fundamentally on standardized experimental protocols that enable accurate performance evaluation and cross-comparison of catalyst materials. Inconsistent testing methodologies have historically hampered the development of catalytic technologies, as data generated under different conditions and measurement approaches cannot be meaningfully compared or validated. The establishment of community benchmarking standards addresses this critical gap by providing unified frameworks for catalyst assessment, creating a common language for researchers worldwide to evaluate and communicate catalytic performance.

Benchmarking represents a community-based activity involving consensus-based decisions on how to make reproducible, fair, and relevant assessments of catalyst performance metrics including activity, selectivity, and deactivation profiles [2]. This approach requires careful documentation, archiving, and sharing of methods and measurements to ensure that the full value of research data can be realized. Beyond these fundamental goals, benchmarking presents unique opportunities to advance and accelerate understanding of complex reaction systems by combining and comparing experimental information from multiple techniques with theoretical insights [2].

The development of standardized protocols has been driven by collaborative efforts across academia, industry, and government institutions. For instance, the Advanced Combustion and Emission Control Technical Team in support of the U.S. DRIVE Partnership has developed a set of standardized aftertreatment protocols specifically designed to accelerate the pace of aftertreatment catalyst innovation by enabling accurate evaluation and comparison of performance data from various testing facilities [18]. Such initiatives recognize that consistent metrics for catalyst evaluation are essential for maximizing the impact of discovery-phase research occurring across the nation.

Standardized Testing Protocols for Catalysts

Protocol Development and Structure

Standardized catalyst test protocols consist of a set of uniform requirements and test procedures that sufficiently capture the performance capability of a catalyst technology in a manner adaptable across various laboratories. These protocols provide detailed descriptions of the necessary reactor systems, steps for achieving desired aged states of catalysts, sample pretreatments required prior to testing, and realistic test conditions for evaluating performance [18]. The structural framework typically includes general guidelines applicable to all catalyst types, supplemented by specific testing procedures tailored to particular catalyst classes and their operating mechanisms.

The development of these protocols addresses a clearly identified need from industry partners for consistent metrics that enable reliable comparison of catalyst technologies. Without such standardization, research facilities generate data under different conditions using varying measurement techniques, creating significant challenges in determining true performance advantages of newly developed catalysts. Standardized protocols establish minimum documentation requirements, specify necessary reactor configurations, define accurate measurement techniques, and outline procedures for catalyst aging and pretreatment—all essential components for generating comparable performance data [18].

Catalyst-Specific Testing Methodologies

Comprehensive testing protocols have been established for major catalyst categories, each with specialized methodologies tailored to their specific operating mechanisms and performance metrics:

Oxidation Catalysts: Protocols focus on conversion efficiency under standardized temperature conditions, assessing light-off behavior and species-resolved conversion efficiencies during degradation testing [18].

Passive Storage Catalysts: Testing methodologies evaluate storage capacity and release characteristics under controlled conditions, with particular attention to hydrocarbon storage modeling and cold-start emission performance [18].

Three-Way Catalysts: Standardized tests measure simultaneous conversion of multiple pollutants across varying air-fuel ratios, with protocols for evaluating oxygen storage capacity and redox functionality [18].

NH₃-SCR Catalysts: Protocols assess selective catalytic reduction performance using ammonia as reductant, including evaluation of low-temperature hydrothermal stability and resistance to chemical poisoning [18].

For specialized catalyst systems like nanozymes (nanomaterials with enzyme-like properties), standardized assays have been developed to determine catalytic activity and kinetics based on Michaelis-Menten enzyme kinetics, updated to account for unique physicochemical properties of nanomaterials [19]. These protocols incorporate determinations of active sites alongside other physicochemical properties such as surface area, shape, and size to better characterize catalytic kinetics across different nanomaterial structures [19].
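For reference, the classical Michaelis-Menten rate law on which such assays are based is shown below, together with the usual definition of the catalytic constant. Writing the normalization in terms of an active-site concentration [E]_0 is an assumption made here to connect with the active-site determinations mentioned above; the cited protocol may define its normalization differently.

```latex
v_0 = \frac{V_{\max}\,[S]}{K_M + [S]},
\qquad
k_{\mathrm{cat}} = \frac{V_{\max}}{[E]_0}
```

Here v_0 is the initial rate, [S] the substrate concentration, V_max the limiting rate at saturating substrate, and K_M the substrate concentration at which v_0 equals half of V_max.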

Community Benchmarking Initiatives

CatTestHub Database Framework

The catalysis research community has developed CatTestHub, an experimental catalysis database that standardizes data reporting across heterogeneous catalysis and provides an open-access community platform for benchmarking [1]. Designed according to FAIR principles (Findability, Accessibility, Interoperability, and Reusability), this database employs a spreadsheet-based format that curates key reaction condition information required for reproducing reported experimental measures of catalytic activity, along with details of reactor configurations used during testing [1].

CatTestHub currently hosts two primary classes of catalysts—metal catalysts and solid acid catalysts—with specific benchmarking reactions established for each category. For metal catalysts, methanol and formic acid decomposition serve as benchmarking chemistries, while for solid acid catalysts, Hofmann elimination of alkylamines over aluminosilicate zeolites provides the benchmark reaction [1]. This structured approach enables researchers to contextualize their newly developed catalysts against established reference materials under identical testing conditions.

Reference Materials and Procedures

Community benchmarking relies on well-characterized catalysts that are abundantly available to the research community. These reference materials typically originate from commercial vendors, research consortia, or standardized synthesis procedures that can be reliably reproduced by individual researchers [1]. Historical examples include Johnson Matthey's EuroPt-1, EUROCAT's EuroNi-1, World Gold Council's standard gold catalysts, and International Zeolite Association's standard zeolite materials with MFI and FAU frameworks [1].

The benchmarking process requires that turnover rates for catalytic reactions over these standard catalyst surfaces be measured under agreed reaction conditions that are free from confounding influences such as catalyst deactivation, heat/mass transfer limitations, and thermodynamic constraints [1]. When these standardized measurements are repeated by multiple independent researchers and housed in open-access databases, the community establishes validated benchmark values against which new catalytic materials can be fairly evaluated.

Experimental Testing Methodologies

Laboratory Testing Systems

Standardized catalyst testing employs controlled laboratory systems designed to replicate real-world operating conditions while ensuring precise measurement capabilities. A basic testing setup typically consists of a tube reactor with temperature-controlled furnace and mass flow controllers to maintain specific reaction conditions [20]. The reactor output connects directly to analytical instruments including gas chromatographs, FID hydrocarbon detectors, CO detectors, and FTIR systems for comprehensive product analysis [20].

These testing systems must be capable of replicating established testing protocols such as EPA Test Method 25A for emissions testing while providing the flexibility to adapt to specific catalyst requirements [20]. Proper testing environment preparation requires ensuring that temperature, pressure, and gas mixture conditions accurately mirror actual industrial operating environments, with component concentrations matching those found in real plant conditions [20].

Performance Evaluation Metrics

Catalyst performance assessment focuses on three primary metrics that collectively describe functional efficiency:

Activity: Commonly reported as conversion, the percentage of reactant transformed under standardized conditions, and typically measured as a function of temperature to determine light-off characteristics [20].

Selectivity: The ratio of desired to unwanted reaction products, indicating the catalyst's ability to direct reaction pathways toward specific outcomes while minimizing byproduct formation.

Stability: The maintenance of catalytic activity over extended time periods, measuring degradation rates and resistance to poisoning under accelerated aging conditions [20].
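The first two metrics, and the light-off temperature derived from a conversion curve, can be sketched in a few lines. This is an illustrative example under stated assumptions: selectivity is expressed here as the share of desired product among all products (a common convention that differs slightly from a desired-to-unwanted ratio), the light-off temperature is taken as the temperature at 50% conversion, and it is found by linear interpolation between measured points. All function names and data are hypothetical.

```python
def conversion_percent(conc_in, conc_out):
    """Percentage of reactant converted, from inlet and outlet concentrations."""
    return 100.0 * (conc_in - conc_out) / conc_in


def selectivity_percent(desired_product, total_products):
    """Share of the desired product among all products, in percent."""
    return 100.0 * desired_product / total_products


def light_off_temperature(temperatures, conversions, target=50.0):
    """Interpolate the temperature at which conversion first rises to `target`.

    Expects temperatures in ascending order. Returns None if the curve
    never crosses the target from below.
    """
    points = list(zip(temperatures, conversions))
    for (t0, x0), (t1, x1) in zip(points, points[1:]):
        if x0 < target <= x1:
            return t0 + (target - x0) * (t1 - t0) / (x1 - x0)
    return None


# Illustrative light-off curve (temperature in degrees C, conversion in %).
temps = [150, 200, 250, 300]
conv = [5.0, 20.0, 80.0, 98.0]
print(light_off_temperature(temps, conv))
```

Stability can be tracked with the same functions by repeating the conversion measurement at a fixed temperature over time on stream.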

For nanozyme catalysts, additional characterization includes determining the number of active sites and calculating hydroxyl adsorption energy from crystal structure using density functional theory methods [19]. These measurements, combined with physicochemical properties such as surface area, shape, and size, provide comprehensive kinetic characterization that enables precise comparison across different nanomaterial structures [19].

Table 1: Standardized Testing Methods for Different Catalyst Categories

| Catalyst Type | Primary Testing Method | Key Performance Indicators | Standard References |
|---|---|---|---|
| Oxidation Catalysts | Temperature-programmed oxidation | Light-off temperature, conversion efficiency | EPA Method 25A [20] |
| Three-Way Catalysts | Dynamometer testing | Simultaneous CO, NOx, HC conversion | U.S. DRIVE Protocols [18] |
| NH₃-SCR Catalysts | Flow reactor testing | NOx conversion, N₂ selectivity, hydrothermal stability | ISO Standardized Methods [18] |
| Nanozymes | Peroxidase-like activity assays | Catalytic kinetics, active site quantification | Nature Protocols [19] |

Data Quality Assurance and Analysis

Quantitative Data Management

Robust catalyst performance evaluation requires systematic quality assurance procedures to ensure data accuracy, consistency, and reliability throughout the research process [21]. Effective quality assurance helps identify and correct errors, reduce biases, and ensure data meets established standards for analysis and reporting. The data management process follows a rigorous step-by-step approach that requires researchers to interact with datasets iteratively to extract relevant information in a transparent manner [21].

Critical steps in data quality assurance include:

Checking for duplications: Identifying and removing identical copies of data, particularly important for online data collection systems where respondents might complete questionnaires multiple times [21].

Managing missing data: Establishing percentage thresholds for completion and distinguishing truly missing data from not-applicable responses, using statistical analysis such as Little's Missing Completely at Random (MCAR) test [21].

Identifying anomalies: Detecting data points that deviate from expected patterns through descriptive statistics analysis, ensuring all responses align with anticipated measurement ranges [21].

Data summation: Aggregating individual instrument measurements to the construct level, following the established scoring protocols of standardized assessment tools [21].
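The four steps above can be sketched as a small pipeline over catalyst test records. This is a minimal illustration using only the Python standard library. The record fields, the 25% missing-value threshold, and the 0-100% valid range for conversion are assumptions chosen for the example, and Little's MCAR test is not implemented here.

```python
from statistics import mean


def deduplicate(records):
    """Drop records that are exact copies of an earlier record."""
    seen, unique = set(), []
    for rec in records:
        key = tuple(sorted(rec.items()))
        if key not in seen:
            seen.add(key)
            unique.append(rec)
    return unique


def missing_fraction(rec, fields):
    """Fraction of the expected fields that are absent or None."""
    return sum(rec.get(f) is None for f in fields) / len(fields)


def quality_filter(records, fields, max_missing=0.25, valid_range=(0.0, 100.0)):
    """Return (clean, flagged) after duplicate, missing-data, and anomaly checks."""
    clean, flagged = [], []
    low, high = valid_range
    for rec in deduplicate(records):
        if missing_fraction(rec, fields) > max_missing:
            flagged.append((rec, "too many missing values"))
            continue
        value = rec.get("conversion")
        if value is None or not low <= value <= high:
            flagged.append((rec, "conversion missing or out of range"))
            continue
        clean.append(rec)
    return clean, flagged


def summarize(clean):
    """Aggregate clean records to a mean conversion per catalyst."""
    grouped = {}
    for rec in clean:
        grouped.setdefault(rec["catalyst"], []).append(rec["conversion"])
    return {name: mean(values) for name, values in grouped.items()}


# Hypothetical test records.
runs = [
    {"catalyst": "A", "temperature": 250, "conversion": 40.0},
    {"catalyst": "A", "temperature": 250, "conversion": 40.0},   # duplicate
    {"catalyst": "A", "temperature": 250, "conversion": 44.0},
    {"catalyst": "B", "temperature": 250, "conversion": 130.0},  # anomaly
    {"catalyst": "B", "temperature": None, "conversion": None},  # mostly missing
    {"catalyst": "B", "temperature": 250, "conversion": 60.0},
]
clean, flagged = quality_filter(runs, ["catalyst", "temperature", "conversion"])
print(summarize(clean), len(flagged))
```

Flagged records are returned with a reason rather than silently dropped, so that exclusions remain transparent and reviewable.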

Statistical Analysis Framework

Quantitative data analysis employs statistical methods to describe, summarize, and compare catalyst performance data through structured analytical cycles:

Descriptive Analysis: Summarizes dataset characteristics using frequencies, means, medians, and modes to identify trends and response patterns [21].

Inferential Analysis: Compares data relationships and makes predictions through parametric or non-parametric tests, depending on data distribution characteristics [21].

Assessing normality is a critical step in choosing appropriate statistical tests. Relevant measures include kurtosis (the peakedness or flatness of the distribution) and skewness (the asymmetry of the data around the mean), with values within ±2 taken to indicate an approximately normal distribution [21]. The Kolmogorov-Smirnov and Shapiro-Wilk tests provide a further check on normality, which is particularly important for larger sample sizes, where the normality assumption is more likely to be flagged as violated [21].
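The ±2 rule of thumb can be checked directly from the sample moments. The sketch below is illustrative only: it uses simple population (biased) moment estimators and reports excess kurtosis (zero for a normal distribution), whereas statistical packages often apply small-sample corrections and may return slightly different values. The function names are hypothetical.

```python
from statistics import fmean


def skewness_and_excess_kurtosis(values):
    """Return (skewness, excess kurtosis) from population central moments."""
    n = len(values)
    if n < 3:
        raise ValueError("need at least three observations")
    m = fmean(values)
    m2 = sum((v - m) ** 2 for v in values) / n
    if m2 == 0:
        raise ValueError("all values are identical; moments are undefined")
    m3 = sum((v - m) ** 3 for v in values) / n
    m4 = sum((v - m) ** 4 for v in values) / n
    return m3 / m2 ** 1.5, m4 / m2 ** 2 - 3.0


def within_normal_limits(values, limit=2.0):
    """Apply the rule of thumb: both statistics must lie within +/- limit."""
    skew, kurt = skewness_and_excess_kurtosis(values)
    return abs(skew) <= limit and abs(kurt) <= limit


# Hypothetical replicate conversion measurements (%).
replicates = [48.2, 49.1, 50.3, 50.9, 51.6, 49.8, 50.1]
print(skewness_and_excess_kurtosis(replicates), within_normal_limits(replicates))
```

A passing result only supports the use of parametric tests; it does not replace a formal test such as Shapiro-Wilk where one is available.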

Table 2: Essential Analytical Methods for Catalyst Performance Evaluation

| Analysis Type | Primary Methods | Application in Catalyst Testing | Data Output |
|---|---|---|---|
| Descriptive Statistics | Mean, median, mode, standard deviation | Baseline performance characterization | Central tendency measures, data variability |
| Normality Testing | Kurtosis, skewness, Kolmogorov-Smirnov, Shapiro-Wilk | Validation of statistical test assumptions | Distribution characteristics, significance values |
| Reliability Analysis | Cronbach's alpha, test-retest correlation | Instrument validation and measurement consistency | Internal consistency scores (>0.7 acceptable) |
| Comparative Analysis | ANOVA, t-tests, chi-squared | Performance comparison across catalyst formulations | Significant differences, effect sizes |
| Relationship Analysis | Correlation, regression | Process parameter influence on catalyst performance | Relationship strength and direction |
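As an illustration of the comparative analysis row, the sketch below computes a two-sample t statistic for replicate measurements on two catalyst formulations. It assumes Welch's unequal-variance variant, which is one choice among the t-tests the table mentions, and it returns only the statistic and its approximate degrees of freedom. Converting these to a p-value requires a t-distribution table or a statistics library. The names and data are hypothetical.

```python
from statistics import mean, variance


def welch_t(sample_a, sample_b):
    """Return (t statistic, Welch-Satterthwaite degrees of freedom)."""
    na, nb = len(sample_a), len(sample_b)
    if na < 2 or nb < 2:
        raise ValueError("each sample needs at least two observations")
    # Squared standard error of each sample mean.
    se2_a = variance(sample_a) / na
    se2_b = variance(sample_b) / nb
    t = (mean(sample_a) - mean(sample_b)) / (se2_a + se2_b) ** 0.5
    df = (se2_a + se2_b) ** 2 / (se2_a ** 2 / (na - 1) + se2_b ** 2 / (nb - 1))
    return t, df


# Hypothetical replicate conversions (%) for two formulations.
formulation_a = [50.0, 52.0, 54.0]
formulation_b = [40.0, 42.0, 44.0]
t_stat, dof = welch_t(formulation_a, formulation_b)
print(f"t = {t_stat:.3f}, df = {dof:.1f}")
```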

Research Reagent Solutions

The experimental evaluation of catalytic performance requires specific reagent systems and analytical tools tailored to different catalyst categories:

Enzyme Mimetics: Nanozyme testing employs peroxidase substrates like 3,3',5,5'-Tetramethylbenzidine (TMB) or 2,2'-Azinobis(3-ethylbenzothiazoline-6-sulfonic acid) (ABTS) for colorimetric activity quantification [19].

Zeolite Catalysts: Standardized materials with MFI and FAU frameworks available through the International Zeolite Association provide reference surfaces for acid-catalyzed reactions [1].

Metal Nanoparticles: Precious metal catalysts including Pt/SiO₂, Pt/C, Pd/C, Ru/C, Rh/C, and Ir/C available from commercial sources (Sigma Aldrich, Strem Chemicals) enable controlled metal-catalyzed reactions [1].

Spectroscopy Standards: Reference materials for instrument calibration including certified gas mixtures for FTIR and GC analysis, ensuring accurate concentration measurements during catalytic testing [20].

Accelerated Aging Materials: Poisoning compounds for durability testing, including sulfur compounds and phosphorus-containing substances that simulate real-world deactivation mechanisms [18].

Visualization of Standardized Testing Workflows

Catalyst Testing Protocol Implementation

Catalyst Testing Workflow: This diagram illustrates the sequential implementation of standardized testing protocols from objective definition through final benchmarking.

Data Quality Assurance Process

Data Validation Process: This workflow outlines the systematic quality assurance procedures applied to experimental data before performance analysis.

Standardized experimental protocols provide the essential foundation for consistent performance evaluation and meaningful comparison of catalytic materials across different research facilities and testing environments. The development of community-wide benchmarking initiatives represents a transformative approach to catalysis research, enabling accurate contextualization of new catalyst technologies against established reference materials and standardized testing methodologies. Through continued refinement of these protocols and expanded participation in benchmarking databases, the catalysis research community can accelerate innovation while ensuring the reproducibility and reliability of performance claims.

The implementation of standardized protocols requires meticulous attention to experimental design, data quality assurance, and statistical validation to generate comparable performance metrics. By adhering to these established frameworks and contributing to community benchmarking efforts, researchers and drug development professionals can effectively evaluate catalytic performance while advancing the broader goal of standardized assessment methodologies across the scientific community.

In the field of catalysis research, inconsistent metrics and reporting standards present significant obstacles to progress and reproducibility. Researchers, scientists, and drug development professionals face considerable challenges when comparing catalytic performance across studies due to varying experimental conditions, measurement techniques, and data reporting formats. These inconsistencies undermine the development of reliable community benchmarking standards, ultimately slowing innovation in catalyst development for critical applications including pharmaceutical synthesis and energy conversion.

The core issue extends beyond simple data collection to the fundamental processes of data curation—the systematic organization, annotation, and preservation of data to ensure long-term accuracy and accessibility [22]. Without robust curation practices, catalytic data remains siloed, incomparable, and of limited value for cross-study analysis or machine learning applications. This article examines current approaches to catalytic data management, provides structured comparisons of catalytic systems and data methodologies, and outlines experimental frameworks for establishing consistent benchmarking standards.

Quantitative Comparison of Catalytic Systems and Data Standards

Performance Metrics Across Catalyst Types

Understanding the performance landscape across different catalyst categories requires standardized metrics. The table below compares key performance indicators and data characteristics for major catalyst types relevant to pharmaceutical and industrial applications.

Table 1: Comparative Performance Metrics for High-Performance Catalysts

| Catalyst Type | Key Applications | Performance Metrics | Data Challenges | Market Trends |
|---|---|---|---|---|
| Heterogeneous | Petrochemicals, Refining, Environmental Protection | Enhanced reaction efficiency, process stability under harsh conditions [16] | Composition-process-performance relationships, material characterization data | Dominant segment (CAGR 4.8%), digitalization for optimization [23] [16] |
| Homogeneous | Pharmaceuticals, Specialty Chemicals, Polymer Synthesis | Precise chemical conversions, high selectivity, low waste production [16] | Reaction mechanism data, solvent effects, catalyst recovery | Growing demand in high-purity applications, bio-based catalysts [16] |
| Automotive Catalytic | Vehicle Emissions Control | Conversion efficiency for CO, NOx, hydrocarbons; durability [24] [25] | Real-world vs. lab performance correlation, poisoning data | Market growth to $73.08B in 2025 (10.6% CAGR), nanoparticle innovations [25] |
| FeCoCuZr HAS Catalysts | Higher Alcohol Synthesis | STYHA: 1.1 gHA h⁻¹ gcat⁻¹; Selectivity: <30% [26] | Multicomponent optimization, reaction condition effects | Active learning reducing experiments from billions to 86 [26] |

Catalytic Converter Market and Material Considerations

The broader catalyst market reveals material constraints and regional trends that impact data standardization efforts across the research community.

Table 2: Automotive Catalytic Converter Market and Material Analysis

| Parameter | Regional Leadership | Material Considerations | Growth Projections |
|---|---|---|---|
| Market Size | Europe: $59.33B (2024), 35% global share [27] | Palladium: 53% market share, effective for petroleum engines [27] | Global market: $387.84B by 2034 (8.63% CAGR) [27] |
| Growth Region | Asia-Pacific: Fastest growth (12.72% CAGR) [27] | Platinum: Good oxidation catalyst, high resistance to poisoning [27] | Three-way oxidation-reduction: >49% market share [27] |
| Key Drivers | Stringent emission regulations (Euro 7, EPA Tier 4) [24] [25] | Rhodium: Critical for NOx reduction | Digitalization, AI-driven design, lightweight designs [25] |

Experimental Protocols for Consistent Catalytic Metrics

Active Learning Framework for Catalyst Optimization

The development of high-performance catalysts for complex reactions like higher alcohol synthesis (HAS) demonstrates how structured experimental frameworks can generate consistent, high-quality data. A recent study on FeCoCuZr catalysts employed an active learning approach integrating data-driven algorithms with experimental workflows to navigate an extensive chemical space of approximately five billion potential combinations [26].

Methodology Overview:

- Initialization: Begin with seed data from related catalyst systems (e.g., 31 FeCoZr, FeCuZr, and CuCoZr catalysts) [26]

- Model Training: Train Gaussian Process (GP) with Bayesian Optimization (BO) algorithms using elemental compositions (Fe, Co, Cu, Zr molar content) and corresponding performance metrics (e.g., STYHA) [26]