Decoding Catalyst Design: A Comprehensive Guide to SHAP Analysis for Interpretable Descriptor Identification

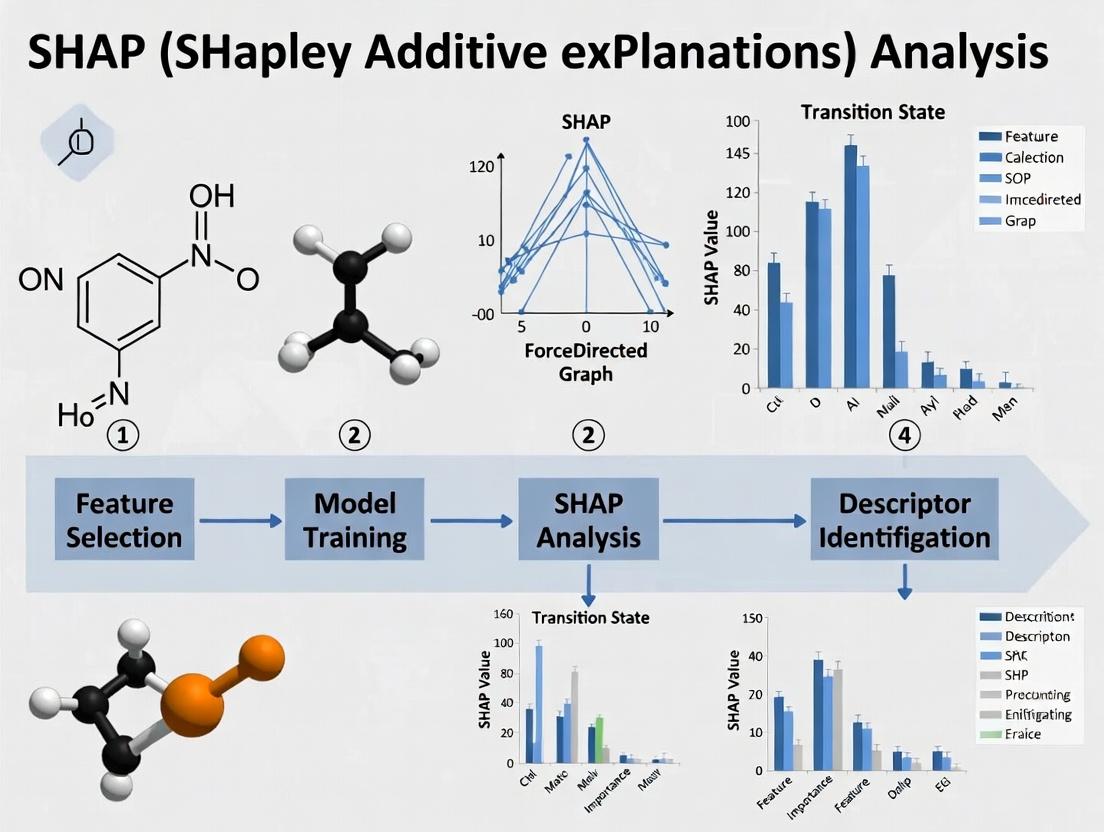


Abstract

This article provides a complete framework for applying SHAP (SHapley Additive exPlanations) analysis to identify and interpret key molecular and material descriptors in catalyst design. Aimed at researchers and development professionals in chemistry and drug discovery, we bridge the gap between complex machine learning models and actionable chemical insights. We cover foundational concepts, step-by-step methodologies for application, strategies for troubleshooting common pitfalls, and comparative validation against other interpretability techniques. The goal is to empower scientists to build more transparent, reliable, and efficient workflows for catalyst discovery and optimization.

What is SHAP Analysis? Demystifying Interpretable AI for Catalyst Discovery

The Black Box Problem in Catalyst Machine Learning Models

1. Introduction and Context within SHAP Thesis Research

The application of machine learning (ML) to catalyst discovery has accelerated the identification of high-performance materials. However, these models are often "black boxes": the relationship between input descriptors (e.g., electronic structure, geometric parameters) and catalytic output (e.g., activity, selectivity) is opaque. This hinders scientific trust and, crucially, the extraction of fundamental chemical insight. This document frames the black-box challenge within a broader thesis on using SHapley Additive exPlanations (SHAP) analysis to identify interpretable, physically meaningful catalyst descriptors. The goal is to move from purely correlative ML models toward tools that generate physically grounded, experimentally testable hypotheses for catalyst design.

2. Quantitative Data Summary: Model Performance vs. Interpretability Trade-offs

Table 1: Comparison of Common ML Models in Catalyst Informatics

| Model Type | Typical R² (Activity Prediction) | Interpretability Level | Key Black-Box Challenge | SHAP Compatibility |
|---|---|---|---|---|
| Linear Regression | 0.3 - 0.6 | High | Limited by linear assumption | High; direct feature weights |
| Random Forest (RF) | 0.7 - 0.85 | Medium | Complex ensemble of trees | High; native TreeSHAP support |
| Gradient Boosting (XGBoost) | 0.75 - 0.9 | Medium | Dense ensemble of sequential trees | High; native TreeSHAP support |
| Deep Neural Network (DNN) | 0.8 - 0.95 | Very Low | High-dimensional non-linear transformations | Medium; requires KernelSHAP or DeepSHAP |
| Support Vector Machine (SVM) | 0.65 - 0.8 | Low | Kernel-induced high-dimensional space | Low; KernelSHAP computationally expensive |

Table 2: SHAP Value Analysis for a Hypothetical CO2 Reduction Catalyst Dataset

| Catalyst Descriptor | Mean Value | Mean absolute SHAP value (impact on model output) | Descriptor Range in Dataset | Physical Interpretation |
|---|---|---|---|---|
| d-band center (eV) | -2.1 | 0.85 | [-3.5, -1.0] | Strongly influences adsorbate binding energy |
| Oxidation State | +2.3 | 0.52 | [+1, +4] | Linked to metal reactivity and stability |
| Coordination Number | 5.8 | 0.41 | [4, 8] | Affects site availability and geometry |
| Electronegativity | 1.8 | 0.15 | [1.3, 2.4] | Moderate impact on electron transfer |

3. Experimental Protocols for SHAP-Driven Descriptor Identification

Protocol 3.1: Building and Interpreting a Catalyst ML Model

- Data Curation: Assemble a consistent dataset. Example: Catalytic turnover frequency (TOF) for methanation on transition metal alloys.

- Descriptor Calculation: Compute atomic and structural features (e.g., using DFT: d-band center, formation energy, Bader charges).

- Model Training: Split the data 80/20 into training and test sets. Train a tree-based model (e.g., XGBoost), tuning its hyperparameters with 5-fold cross-validation on the training set.

- SHAP Analysis: Calculate SHAP values using the `shap` Python library (`TreeExplainer`).

- Insight Extraction: Identify the top 5 descriptors by mean absolute SHAP value. Plot SHAP summary and dependence plots to reveal linear or non-linear relationships with the target property.

Protocol 3.2: Validating SHAP-Identified Descriptors Experimentally

- Hypothesis Formulation: Based on the SHAP output, hypothesize that the d-band width is a key descriptor for selectivity.

- Catalyst Series Synthesis: Prepare a controlled series of M-Cu bimetallic nanoparticles (M = Fe, Co, Ni) via incipient wetness impregnation.

- Descriptor Measurement: Use X-ray photoelectron spectroscopy (XPS) valence band spectra to estimate d-band width.

- Performance Testing: Evaluate catalysts in a fixed-bed reactor under standard conditions (e.g., CO2 hydrogenation, 250°C, 20 bar).

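- Correlation Analysis: Plot measured selectivity against the experimental descriptor. A strong correlation across the series supports the SHAP-derived hypothesis. This step matters because SHAP explains the model's behavior, not the underlying chemistry.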
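For the correlation step, a short numpy sketch can report both Pearson's r (linear association) and a rank-based Spearman coefficient (monotonic association) between the measured descriptor and selectivity. The function name is hypothetical, and the rank helper assumes no tied values. With a series of only three catalysts (Fe-, Co-, and Ni-Cu), any coefficient is weak evidence on its own, so additional compositions strengthen the validation.

```python
import numpy as np


def _ranks(values: np.ndarray) -> np.ndarray:
    # Positions 0..n-1 in sorted order; assumes no tied values.
    return np.argsort(np.argsort(values)).astype(float)


def validation_correlations(descriptor, selectivity) -> dict:
    """Pearson (linear) and Spearman-style (monotonic) correlation coefficients."""
    x = np.asarray(descriptor, dtype=float)
    y = np.asarray(selectivity, dtype=float)
    if x.shape != y.shape or x.size < 3:
        raise ValueError("need two equal-length series with at least 3 catalysts")
    return {
        "pearson_r": float(np.corrcoef(x, y)[0, 1]),
        "spearman_rho": float(np.corrcoef(_ranks(x), _ranks(y))[0, 1]),
    }
```

A Spearman value near ±1 with a noticeably lower Pearson r suggests a monotonic but non-linear descriptor-selectivity relationship.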

4. Visualizations

Title: SHAP Analysis Workflow for Catalyst ML Interpretability

Title: The Role of SHAP in Bridging Black Box Models to Insight

5. The Scientist's Toolkit: Essential Research Reagents & Solutions

Table 3: Key Tools for Interpretable Catalyst ML Research

| Item | Function/Benefit | Example Tools/Software |
|---|---|---|
| Density Functional Theory (DFT) Code | Calculates electronic structure descriptors as model inputs. | VASP, Quantum ESPRESSO, Gaussian |
| Machine Learning Library | Provides algorithms for building predictive models. | scikit-learn, XGBoost, PyTorch |
| SHAP Library | Computes Shapley values for model interpretation. | shap Python package (Kernel, Tree, Deep explainers) |
| High-Throughput Experimentation (HTE) Rig | Generates consistent, large-scale catalyst performance data. | Automated synthesis and testing reactors |
| Spectroscopic Characterization Suite | Measures experimental descriptors for validation. | XPS, XAFS, FTIR, STEM-EDS |
| Catalyst Database | Source of curated historical data for initial training. | CatApp, NOMAD, ICSD |

Core Theoretical Framework

SHAP (SHapley Additive exPlanations) is an interpretability method rooted in cooperative game theory and adapted for machine learning models. For a single prediction, it distributes the difference between the model output and a baseline (expected) output among the input features, crediting each feature with its marginal contribution averaged over all possible feature coalitions.

Key Equations:

- Shapley Value (Original Game Theory): For a feature \(i\), its Shapley value is \(\phi_i = \sum_{S \subseteq N \setminus \{i\}} \frac{|S|!\,(|N|-|S|-1)!}{|N|!}\,[v(S \cup \{i\}) - v(S)]\), where \(N\) is the set of all features, \(S\) is a subset of features that excludes \(i\), and \(v(S)\) is the payoff (model output) for the subset \(S\).

- SHAP Value (ML Adaptation): In SHAP, the payoff is defined as the expected model prediction conditioned on the feature subset \(S\): \(v(S) = \mathbb{E}[f(x) \mid x_S]\). The resulting attributions are additive, \(f(x) = \phi_0 + \sum_{i \in N} \phi_i\), where \(\phi_0 = \mathbb{E}[f(x)]\) is the baseline prediction.
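To make the Shapley formula concrete, the sketch below evaluates \(\phi_i\) exactly by enumerating every coalition \(S \subseteq N \setminus \{i\}\). It uses a hypothetical toy payoff `toy_payoff` in place of \(\mathbb{E}[f(x) \mid x_S]\). The cost grows as \(2^{|N|}\), which is why practical explainers such as TreeSHAP and KernelSHAP exist.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Dict, FrozenSet, Hashable, List


def exact_shapley(players: List[Hashable],
                  v: Callable[[FrozenSet[Hashable]], float]) -> Dict[Hashable, float]:
    """Exact Shapley values by brute-force enumeration of coalitions."""
    n = len(players)
    phi = {}
    for i in players:
        others = [p for p in players if p != i]
        total = 0.0
        for size in range(len(others) + 1):
            # Shapley weight |S|! (|N| - |S| - 1)! / |N|!
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                # Marginal contribution of i when it joins coalition S.
                total += weight * (v(s | {i}) - v(s))
        phi[i] = total
    return phi


def toy_payoff(s: FrozenSet[Hashable]) -> float:
    """Hypothetical payoff: 'a' adds 2, 'b' adds 1, and 'a'+'b' together add 3 more."""
    value = 0.0
    if "a" in s:
        value += 2.0
    if "b" in s:
        value += 1.0
    if "a" in s and "b" in s:
        value += 3.0
    return value


phi = exact_shapley(["a", "b", "c"], toy_payoff)
```

For this toy payoff the joint bonus of 3 is split evenly, giving \(\phi_a = 3.5\), \(\phi_b = 2.5\), and \(\phi_c = 0\); the values sum to \(v(N) - v(\emptyset) = 6\) (the efficiency property).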

Summary of SHAP Variants for Catalyst Research:

| SHAP Variant | Model Agnostic? | Best Suited For | Computational Cost | Key Consideration for Catalyst Data |
|---|---|---|---|---|
| KernelSHAP | Yes | Any model; small to medium feature sets (<100) | High | Intractable for exhaustive catalyst descriptor sets. Good for final interpretation of top descriptors. |
| TreeSHAP | No (tree-based only) | Random Forest, XGBoost, LightGBM | Low | Highly recommended for high-dimensional catalyst screening. Enables fast analysis of 1000s of descriptors. |
| DeepSHAP | No (deep learning) | Neural networks | Medium | Applicable to descriptor-reactivity models built with deep neural networks. |

SHAP Analysis Protocol for Catalyst Descriptor Identification

This protocol outlines the process for identifying interpretable physical descriptors from a high-throughput catalyst screening dataset.

A. Experimental Workflow

Diagram Title: SHAP Analysis Workflow for Catalyst Descriptors

B. Detailed Methodology

Protocol 1: Model Training and TreeSHAP Computation

Objective: Train a robust predictive model and compute exact SHAP values for interpretability.

- Data Preparation: Split the complete dataset (catalyst samples × descriptors) into 80% training and 20% hold-out test sets, using a stratified split if the target property is categorical.

- Model Training: Train an XGBoost regressor or classifier through its scikit-learn API (`XGBRegressor` / `XGBClassifier`), using 5-fold cross-validation on the training set to optimize hyperparameters (`max_depth`, `n_estimators`, `learning_rate`).

- SHAP Computation: Instantiate a `shap.TreeExplainer` with the trained best model. Calculate SHAP values for the entire hold-out test set using `explainer.shap_values(X_test)`.

Protocol 2: Identification and Interpretation of Key Descriptors

Objective: Rank and physically interpret the most influential descriptors.

- Descriptor Ranking: Calculate the mean absolute SHAP value (mean(|SHAP value|)) for each descriptor across the test set. Sort descriptors in descending order to create an importance ranking.

- Global Interpretation (Beeswarm Plot): Use `shap.plots.beeswarm(shap_values, max_display=20)` to visualize the distribution of SHAP values for the top 20 descriptors. This function expects a `shap.Explanation` object, such as the one returned by `explainer(X_test)`, and shows only 10 descriptors unless `max_display` is raised. High-density regions indicate the descriptor's typical impact on the prediction.

- Local & Dependence Analysis:

  - Individual Prediction: Use `shap.force_plot(...)` to explain a single catalyst's predicted activity.

  - Dependence Plot: For a top descriptor (e.g., d-band center), use `shap.dependence_plot(...)` to plot its SHAP value against its feature value, colored by a potentially interacting descriptor (e.g., coordination number).
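The ranking and single-catalyst steps reduce to simple array operations on a SHAP matrix of shape (n_samples, n_descriptors). The numpy sketch below is illustrative: the helper names are hypothetical, and `explain_one` is a text stand-in for a force plot that relies on SHAP's additivity (baseline plus attributions equals the prediction).

```python
import numpy as np


def rank_descriptors(shap_values, names):
    """Return (name, mean |SHAP|) pairs sorted from most to least important."""
    mean_abs = np.abs(np.asarray(shap_values, dtype=float)).mean(axis=0)
    order = np.argsort(mean_abs)[::-1]  # descending importance
    return [(names[j], float(mean_abs[j])) for j in order]


def explain_one(base_value, shap_row, names, top=3):
    """Text version of a force plot: baseline + largest contributions = prediction."""
    row = np.asarray(shap_row, dtype=float)
    order = np.argsort(np.abs(row))[::-1][:top]  # largest magnitudes first
    contributions = [(names[j], float(row[j])) for j in order]
    prediction = float(base_value + row.sum())  # local accuracy (additivity)
    return prediction, contributions
```

Positive contributions push that catalyst's predicted activity above the baseline and negative ones pull it below, which is what the force plot draws as opposing arrows.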

The Scientist's Toolkit: Essential Research Reagents & Software

| Item Name/Software | Category | Function in SHAP Catalyst Research |
|---|---|---|
| XGBoost / LightGBM | Software Library | Provides high-performance, tree-based models compatible with the exact and fast TreeSHAP algorithm. Essential for handling 1000s of catalyst descriptors. |
| SHAP (shap) Python Library | Software Library | Core toolkit for computing SHAP values and generating all standard interpretability plots (beeswarm, dependence, force plots). |
| pymatgen / ASE | Software Library | Used for generating atomic-scale descriptors (e.g., coordination numbers, elemental properties, structural motifs) from catalyst structures. |
| Catalyst Database (e.g., CatHub, NOMAD) | Data Source | Provides curated experimental or computational datasets for training and validating descriptor-activity models. |
| DFT Software (VASP, Quantum ESPRESSO) | Computational Tool | Generates high-fidelity electronic structure descriptors (d-band center, Bader charges, density of states) and target properties for the training set. |

Application in Interpretable Catalyst Design: A Case Study

Context: Identifying descriptors for the oxygen evolution reaction (OER) activity of perovskite oxides.

Quantitative SHAP Output Example: Table: Top 5 SHAP-Identified Descriptors for OER Overpotential on Perovskites (ABO₃)

| Descriptor Rank | Descriptor Name (Feature) | Physical Meaning | Mean absolute SHAP value | Impact Direction (SHAP vs. Feature Value) |
|---|---|---|---|---|
| 1 | O 2p-band center (ε_O-2p) | Average energy of oxygen 2p states relative to the Fermi level. | 0.142 eV | Lower (more negative) ε_O-2p → lower (better) overpotential. |
| 2 | B-site metal electronegativity (χ_B) | Pauling electronegativity of the B-site transition metal. | 0.098 eV | Higher χ_B → lower overpotential. |
| 3 | Tolerance factor (t) | Measure of structural distortion from ideal cubic perovskite. | 0.076 eV | Optimal ~0.96 minimizes overpotential (U-shaped dependence). |
| 4 | B-O bond length | Average bond distance between B-site metal and oxygen. | 0.064 eV | Shorter bond → lower overpotential. |
| 5 | A-site ionic radius | Radius of the A-site cation. | 0.051 eV | Larger radius → higher overpotential (in this dataset). |

Interpretation Workflow & Logical Relationship:

Diagram Title: From SHAP Output to Catalyst Design Rule

Key Advantages of SHAP for Chemical and Material Science

Application Notes

Within the thesis on SHAP analysis for interpretable catalyst descriptor identification, SHAP (SHapley Additive exPlanations) provides a unified framework for interpreting complex machine learning model predictions in chemical and material science. Its key advantages stem from its rigorous mathematical foundation in cooperative game theory, ensuring consistent and locally accurate attribution of a prediction to each input feature (descriptor).

Primary Advantages:

- Global Interpretability: Identifies the most impactful material descriptors (e.g., adsorption energies, d-band centers, atomic radii) across an entire dataset, guiding fundamental understanding.

- Local Interpretability: Explains individual predictions (e.g., why a specific alloy shows high activity) by quantifying each descriptor's contribution, crucial for diagnosing outliers.

- Model Agnosticism: Applicable to any ML model, from random forests to deep neural networks, used for property prediction (catalytic activity, band gap, yield strength). Model-specific explainers (TreeSHAP, DeepSHAP) are much faster than the model-agnostic KernelSHAP.

- Directionality: Reveals whether increasing a descriptor value (e.g., electronegativity) raises or lowers the model's prediction of the target property.

- Descriptor Interaction Detection: Quantifies and visualizes non-linear interactions between descriptors (e.g., between coordination number and valence electron count), uncovering complex design rules.
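Directionality is usually read from the color pattern of a summary plot. One simple way to condense it into a single number, offered here as a hypothetical helper rather than a shap library feature, is the rank correlation between a descriptor's values and its SHAP values across the dataset; the sketch assumes no tied values.

```python
import numpy as np


def shap_direction(feature_values, shap_values) -> float:
    """Rank correlation between a descriptor and its SHAP values (no tie handling).

    +1: higher descriptor values consistently raise the prediction.
    -1: higher descriptor values consistently lower the prediction.
    """
    x_rank = np.argsort(np.argsort(np.asarray(feature_values, dtype=float)))
    s_rank = np.argsort(np.argsort(np.asarray(shap_values, dtype=float)))
    return float(np.corrcoef(x_rank, s_rank)[0, 1])
```

A value near zero does not imply "no effect": a descriptor with an optimum (a U-shaped dependence) can also score near zero, so check the dependence plot before reporting a direction.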

Table 1: Comparative Analysis of Interpretability Methods in Catalyst Design

| Method | Mathematical Foundation | Local Fidelity | Global Summary | Handles Feature Interaction | Output Type |
|---|---|---|---|---|---|
| SHAP | Cooperative Game Theory (Shapley values) | Yes | Yes (SHAP summary plots) | Yes | Additive feature attributions |
| Permutation Importance | Feature Randomization | No | Yes | No | Importance scores |
| PDP (Partial Dependence Plot) | Marginalization | No | Yes | Limited (typically 2D) | Marginal effect plot |
| LIME | Local Linear Surrogates | Yes | No | Limited | Local surrogate model coefficients |
| Saliency Maps | Gradient-based (for NN) | Yes | No | Limited | Gradient magnitude |

Table 2: Example SHAP Values from a Hypothetical ORR Catalyst Study

| Material Descriptor | Mean Value | Mean absolute SHAP value (impact) | High-Value Effect | Typical Range in Dataset |
|---|---|---|---|---|
| d-band center (eV) | -2.5 | 0.85 | Negative (lower is better) | -3.5 to -1.5 |
| O* adsorption energy (eV) | -1.2 | 0.65 | Volcano relationship | -2.0 to -0.5 |
| Surface strain (%) | 3.0 | 0.45 | Positive (higher is better) | 0.5 to 5.5 |
| Pauling electronegativity | 2.1 | 0.30 | Negative (lower is better) | 1.6 to 2.4 |

Experimental Protocols

Protocol 1: SHAP Analysis for Catalytic Activity Model Interpretation

Objective: To identify and rank the most critical physicochemical descriptors governing the predicted overpotential for the oxygen evolution reaction (OER) from a random forest model.

Materials & Software: Python 3.8+, scikit-learn, shap library, pandas, numpy, matplotlib. Dataset of catalyst compositions with calculated/elemental descriptors and target OER overpotential.

Procedure:

- Model Training: Train a validated random forest regressor (`sklearn.ensemble.RandomForestRegressor`) to predict the target property (e.g., overpotential) from the descriptor matrix.

- SHAP Explainer Initialization: Instantiate a `shap.TreeExplainer` for the trained random forest model. For non-tree-based models, use `KernelExplainer` (model-agnostic but slower) or `DeepExplainer` for neural networks.

- SHAP Value Calculation: Compute SHAP values for the entire training or test set using `explainer.shap_values(X)`, where `X` is the (n_samples, n_descriptors) matrix.

- Global Analysis (Summary Plot): Generate `shap.summary_plot(shap_values, X, plot_type="dot")`. This plot ranks descriptors by global importance (mean absolute SHAP value) and shows the distribution of each descriptor's impact, with color encoding the descriptor value to reveal directionality.

- Interaction Analysis: To probe specific interactions, use `shap.dependence_plot("primary_descriptor", shap_values, X, interaction_index="secondary_descriptor")`.

- Local Explanation: For a specific catalyst of interest, visualize a force plot using `shap.force_plot(explainer.expected_value, shap_values[index], X.iloc[index])` to deconstruct its prediction.

Protocol 2: SHAP-Guided Descriptor Selection for Inverse Design

Objective: To reduce descriptor dimensionality and inform a genetic algorithm for the inverse design of novel polymer dielectrics.

Procedure:

- Initial Screening: Perform SHAP analysis on a high-dimensional descriptor set (e.g., 200+ features including compositional, topological, and electronic descriptors) to obtain mean absolute SHAP values.

- Feature Reduction: Select the smallest set of top-ranked descriptors (k) whose cumulative mean absolute SHAP value reaches 95% of the total. This creates a physically informed, lower-dimensional design space.

- Design Space Definition: Use the ranges of the top k descriptors from the existing dataset to define the boundaries of a search space for inverse design.

- Inverse Design Loop: Integrate the SHAP-informed search space into a genetic algorithm. Use the trained model as the fitness function (predicting dielectric constant) and add a penalty for candidates whose descriptor values fall in regions where SHAP dependence plots show strongly unfavorable contributions.

- Validation: Synthesize or simulate top-predicted candidates and validate their properties.
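The feature-reduction and design-space steps can be sketched as below. This assumes mean absolute SHAP values have already been computed; the helper names are hypothetical, and using the min/max of the existing data as bounds is one simple choice among several (percentile bounds are a more conservative alternative).

```python
import numpy as np


def select_by_cumulative_importance(mean_abs_shap, names, threshold=0.95):
    """Smallest set of top descriptors whose cumulative importance share reaches threshold."""
    imp = np.asarray(mean_abs_shap, dtype=float)
    order = np.argsort(imp)[::-1]                     # most important first
    cumulative = np.cumsum(imp[order]) / imp.sum()    # running share of total importance
    # First position where the cumulative share reaches the threshold.
    k = min(int(np.searchsorted(cumulative, threshold)) + 1, len(order))
    return [names[j] for j in order[:k]]


def design_bounds(data, selected):
    """Per-descriptor (min, max) box spanned by the existing dataset."""
    return {c: (float(np.min(data[c])), float(np.max(data[c]))) for c in selected}
```

`design_bounds` accepts a pandas DataFrame or a plain dict of arrays; the resulting box can seed the genetic algorithm's gene ranges.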

Visualizations

Title: SHAP Analysis Workflow for Catalyst Design

Title: SHAP Additive Feature Attribution Principle

The Scientist's Toolkit

Table 3: Key Research Reagent Solutions for SHAP-Driven Catalyst Research

| Item / Tool | Function & Relevance to SHAP Analysis |
|---|---|
| High-Throughput DFT/DFTB Codes (VASP, Quantum ESPRESSO, DFTB+) | Generate consistent, accurate quantum-mechanical descriptor data (adsorption energies, electronic structure) as primary inputs for the predictive model. |
| Machine Learning Libraries (scikit-learn, XGBoost, TensorFlow/PyTorch) | Provide algorithms to build the complex, high-performance predictive models (target property = f(descriptors)) that SHAP will interpret. |
| SHAP Python Library (shap) | Core computational engine for calculating Shapley values. Offers optimized explainers (Tree, Kernel, Deep) for different model classes. |
| Descriptor Calculation Tools (pymatgen, RDKit, custom scripts) | Compute physicochemical and structural descriptors (compositional, topological, electronic) from atomic structures to build the feature matrix X. |
| Data Management & Visualization (pandas, numpy, matplotlib, seaborn) | Handle, clean, and organize descriptor/target dataframes; create publication-quality plots of SHAP summary, dependence, and force plots. |
| Inverse Design Platforms (ASE, GAucsd, custom genetic algorithms) | Utilize SHAP-derived insights (key descriptors, directionality) to define search spaces and fitness functions for computational discovery of new materials. |

Within the broader thesis on SHAP (SHapley Additive exPlanations) analysis for interpretable catalyst descriptor identification, this article details the primary descriptor spaces—electronic, structural, and compositional—used to represent heterogeneous and homogeneous catalysts. These feature spaces provide the foundational data on which interpretable machine learning (ML) models are built, allowing researchers to decode the "black box" and identify the physicochemical drivers of catalytic performance.

Descriptor Spaces: Definitions and Data

Catalytic descriptors are numerical representations of a catalyst's properties. The following table categorizes and defines key descriptors within the three primary spaces, which are commonly used as input features (X) in ML models predicting catalytic activity, selectivity, or stability (y).

Table 1: Common Catalyst Descriptor Spaces and Key Features

| Descriptor Space | Key Features | Typical Measurement/Calculation | Relevance to Catalytic Function |
|---|---|---|---|
| Electronic | d-band center, work function, ionization potential, electron affinity, Bader charge, density of states (DOS) features. | DFT calculations, XPS, UPS, cyclic voltammetry. | Governs adsorbate binding strength, charge transfer, and activation barriers. |
| Structural | Coordination number, lattice parameters, particle size, surface area (BET), facet exposure, bond lengths/angles, disorder index. | XRD, EXAFS, TEM, STEM, adsorption isotherms. | Determines availability and geometry of active sites, influencing ensemble and ligand effects. |
| Compositional | Elemental identity & ratio, dopant concentration, alloying degree (e.g., Pauling electronegativity, atomic radius). | EDS, ICP-MS, XRF, bulk chemical analysis. | Defines the fundamental chemical nature and synergy in multi-component systems. |

Application Notes & Protocols

Protocol: Calculating d-band Center from Density Functional Theory (DFT)

Purpose: To obtain a fundamental electronic descriptor for transition metal and alloy catalysts, strongly correlated with adsorption energies.

Materials & Reagent Solutions:

- Software: DFT code (e.g., VASP, Quantum ESPRESSO), visualization/analysis tool (e.g., pymatgen, VESTA).

- Computational Resource: High-Performance Computing (HPC) cluster.

- Input File: Catalyst slab or cluster model with optimized geometry.

Methodology:

- Geometry Optimization: Fully relax the catalytic model (slab/cluster) until forces on all atoms are below 0.01 eV/Å.

- Self-Consistent Field (SCF) Calculation: Perform a static calculation on the optimized structure to obtain the converged charge density and wavefunctions.

- Density of States (DOS) Calculation: Run a non-self-consistent calculation with a dense k-point mesh (e.g., > 30 points per Å⁻¹) to obtain a high-resolution projected density of states (PDOS).

- Projection: Project the DOS onto the d-orbitals of the catalytic metal atom(s) of interest.

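- d-band Center Calculation: Calculate the first moment (weighted average energy) of the d-projected DOS, with energies referenced to the Fermi energy \(E_F\):

\[ \varepsilon_d = \frac{\int_{-\infty}^{E_F} E\,\rho_d(E)\,dE}{\int_{-\infty}^{E_F} \rho_d(E)\,dE} \]

where \(\rho_d(E)\) is the d-projected DOS. Some studies integrate over the full d band (occupied and unoccupied states) instead; use one convention consistently across the dataset.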
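A minimal numpy sketch of the d-band center from a d-projected DOS sampled on an energy grid (for example, exported by a PDOS post-processing tool). It assumes a single spin-summed PDOS array, uses the trapezoidal rule, and by default integrates only up to \(E_F\) to match the equation above; `occupied_only=False` gives the full-band convention. The function names are hypothetical.

```python
import numpy as np


def _trapezoid(y: np.ndarray, x: np.ndarray) -> float:
    # Trapezoidal rule written out to avoid NumPy trapz/trapezoid naming differences.
    return float(np.sum(0.5 * (y[1:] + y[:-1]) * np.diff(x)))


def d_band_center(energies, pdos_d, e_fermi=0.0, occupied_only=True) -> float:
    """First moment of the d-projected DOS, in eV relative to E_F."""
    e = np.asarray(energies, dtype=float) - e_fermi  # reference energies to E_F
    rho = np.asarray(pdos_d, dtype=float)
    if occupied_only:  # integrate from the bottom of the grid up to E_F only
        mask = e <= 0.0
        e, rho = e[mask], rho[mask]
    return _trapezoid(e * rho, e) / _trapezoid(rho, e)
```

For spin-polarized calculations, sum the spin-up and spin-down d-PDOS (or treat them separately) before calling the function, and use a fine energy grid so the integrals converge.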

Protocol: Extracting Structural Descriptors from X-ray Absorption Fine Structure (EXAFS)

Purpose: To determine local atomic structure descriptors (coordination number, bond distance, disorder) for supported nanoparticles or amorphous catalysts.

Materials & Reagent Solutions:

- Synchrotron Facility: Beamline capable of X-ray absorption spectroscopy (XAS).

- Sample: Powder catalyst pellet or solid in a suitable holder.

- Software: Data processing suites (e.g., Athena, Demeter, Larch).

Methodology:

- Data Collection: Collect X-ray absorption spectra (XANES and EXAFS regions) at the absorption edge of the active metal.

- Pre-processing: Use Athena to align, normalize, and background-subtract the raw data.

- k-space Conversion: Convert absorption vs. energy to \(\chi(k)\) vs. photoelectron wavevector \(k\).

- Fourier Transform: Transform the \(k^n\)-weighted \(\chi(k)\) (commonly \(n = 2\) or 3) to \(R\)-space to obtain a radial-distribution-like function. Peak positions are shifted from the true bond distances by the scattering phase shift, which the subsequent fit accounts for.

- Shell Fitting: In Artemis, fit the EXAFS equation to the Fourier-transformed data using a theoretical model generated by FEFF. Key fitted parameters include:

  - Coordination Number (N): Number of neighbors in a shell.

  - Bond Distance (R): Distance to the neighbor shell.

  - Disorder Factor (\(\sigma^2\)): Debye-Waller factor representing mean-square disorder.
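The k-space conversion that Athena performs can be sketched directly: for a photoelectron with kinetic energy \(E - E_0\), \(k = \sqrt{2 m_e (E - E_0)}/\hbar\), which is about \(0.512\sqrt{E - E_0}\) Å⁻¹ with energies in eV. The numpy code below assumes energies in eV and a user-chosen edge energy `e0_ev`; the function names are hypothetical.

```python
import numpy as np

# CODATA constants in SI units.
HBAR = 1.054571817e-34      # reduced Planck constant, J s
M_E = 9.1093837015e-31      # electron mass, kg
EV = 1.602176634e-19        # joules per electronvolt


def energy_to_k(energy_ev, e0_ev):
    """Photoelectron wavenumber k in 1/Angstrom; points below the edge map to k = 0."""
    delta = np.clip(np.asarray(energy_ev, dtype=float) - e0_ev, 0.0, None)
    k_per_metre = np.sqrt(2.0 * M_E * delta * EV) / HBAR
    return k_per_metre * 1e-10  # 1/m -> 1/Angstrom


def k_weight(k, chi, n=2):
    """k^n-weighted chi(k), the quantity that is Fourier transformed to R-space."""
    return np.asarray(k, dtype=float) ** n * np.asarray(chi, dtype=float)
```

Higher \(k\)-weights emphasize the high-\(k\) region, where scattering from heavier neighbors dominates, so the weight used should be reported alongside the fitted N, R, and \(\sigma^2\).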

Protocol: Generating Compositional Feature Vectors for High-Throughput Screening

Purpose: To encode multi-component catalyst compositions into a fixed-length numerical vector for ML model input.

Materials & Reagent Solutions:

- Data Source: Composition list (e.g., Pt80Ni20, Fe2O3).

- Software: Python with libraries (pymatgen, matminer, pandas).

- Reference Data: Periodic table properties (atomic number, mass, radius, electronegativity).

Methodology:

- Define Formula: Input catalyst composition in standard chemical formula notation.

- Elemental Property Aggregation: For each element in the formula, retrieve a set of foundational properties (e.g., atomic number, group, period, electronegativity, atomic radius).

- Feature Engineering: Use `matminer` featurizers to create composition-based descriptors:

  - Stoichiometric Attributes: Atomic fraction, weight fraction.

  - Elemental Property Statistics: For the mixture, compute statistics (mean, range, variance, mode) of the chosen atomic properties across all constituent elements.

  - Weighting: Statistics can be weighted by atomic or fractional composition.

- Vector Output: The output is a 1D array (one row of the feature matrix) where each column is a distinct compositional feature (e.g., `mean_electronegativity`, `range_atomic_radius`), ready for concatenation with electronic and structural descriptors.
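The aggregation and weighting steps can be illustrated without matminer. The sketch below parses a simple formula (no parentheses or hydrates), converts it to atomic fractions, and computes fraction-weighted statistics of one elemental property. The four-element Pauling electronegativity table is an illustrative stand-in for a full reference dataset such as the one pymatgen/matminer provides, and the function and feature names are hypothetical.

```python
import re
from typing import Dict

# Illustrative Pauling electronegativities for a few elements only; a real
# workflow would use a complete reference table (e.g., from pymatgen/matminer).
PAULING_EN: Dict[str, float] = {"Pt": 2.28, "Ni": 1.91, "Fe": 1.83, "O": 3.44}


def atomic_fractions(formula: str) -> Dict[str, float]:
    """'Pt80Ni20' -> {'Pt': 0.8, 'Ni': 0.2}; simple formulas only (no parentheses)."""
    amounts: Dict[str, float] = {}
    for element, count in re.findall(r"([A-Z][a-z]?)(\d*\.?\d*)", formula):
        amounts[element] = amounts.get(element, 0.0) + (float(count) if count else 1.0)
    total = sum(amounts.values())
    return {el: n / total for el, n in amounts.items()}


def composition_features(formula: str, prop: Dict[str, float] = PAULING_EN,
                         name: str = "electronegativity") -> Dict[str, float]:
    """Fraction-weighted statistics of one elemental property."""
    frac = atomic_fractions(formula)
    mean = sum(f * prop[el] for el, f in frac.items())
    values = [prop[el] for el in frac]
    return {
        f"mean_{name}": mean,
        f"range_{name}": max(values) - min(values),
        f"var_{name}": sum(f * (prop[el] - mean) ** 2 for el, f in frac.items()),
        "n_elements": float(len(frac)),
    }
```

For Pt80Ni20 this gives a mean electronegativity of 0.8 × 2.28 + 0.2 × 1.91 = 2.206 and a range of 0.37; repeating the call for other properties (atomic radius, group, period) fills out the compositional feature vector.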

The Scientist's Toolkit

Table 2: Essential Research Reagents & Tools for Descriptor Acquisition

| Item | Function/Description |
|---|---|
| VASP/Quantum ESPRESSO | First-principles DFT software for calculating electronic and geometric descriptors (d-band center, adsorption energy). |
| pymatgen/matminer | Python libraries for materials analysis and automated featurization of compositional/structural data. |
| Synchrotron Beamtime | Enables collection of XAS data for local structural descriptors (EXAFS) and oxidation states (XANES). |
| High-Resolution TEM/STEM | Provides direct imaging for structural descriptors like particle size distribution, shape, and lattice fringes. |
| SHAP Library (Python) | Post-hoc explanation tool for interpreting ML model predictions and ranking descriptor importance. |
| BET Surface Area Analyzer | Measures specific surface area (a key structural descriptor) via gas physisorption isotherms. |
| Inductively Coupled Plasma Mass Spectrometry (ICP-MS) | Provides precise quantitative compositional analysis of bulk and trace elements. |

Visualization of Workflows

Title: Descriptor Acquisition to SHAP Analysis Workflow

Title: SHAP Value Calculation Logic

This document details the essential prerequisites for conducting SHapley Additive exPlanations (SHAP) analysis within a broader research thesis focused on interpretable catalyst descriptor identification. The accurate application of SHAP for identifying key physical, electronic, or compositional descriptors in catalytic materials hinges on rigorous data preparation and model compatibility. This protocol ensures the foundational integrity required for deriving chemically meaningful interpretations from complex machine learning models.

Core Prerequisites for SHAP Analysis

Data Format Specifications

SHAP requires data to be structured in a consistent, numerical format. The following table summarizes the mandatory data characteristics.

Table 1: Data Format Requirements for SHAP Analysis

| Data Attribute | Requirement | Rationale & Catalyst Research Example |
|---|---|---|
| Data Type | Must be numeric (float, integer). Categorical data must be encoded. | Catalytic descriptors (e.g., d-band center, formation energy, coordination number) are inherently numerical. |
| Missing Values | Impute or remove before model training and SHAP calculation, unless the model handles missing values natively (e.g., XGBoost, LightGBM). | Incomplete characterization data (e.g., missing BET surface area) can cause computation failures in many explainers. |
| Data Shape | Feature matrix X: (n_samples, n_features). Target vector y: (n_samples,). | Aligns with standard ML libraries (scikit-learn). For catalyst screening, n_samples is the number of catalyst compositions/structures. |
| Normalization/Scaling | Recommended for distance-based, kernel, linear, and neural-network models; not required for tree-based models. | Descriptors like adsorption energy (eV) and particle size (nm) exist on different scales; scaling prevents feature dominance in scale-sensitive models. |
| Data Structure | Pandas DataFrame (with column names) or NumPy array. | DataFrames preserve descriptor names, which are critical for interpreting SHAP output plots. |
| Train/Test Split | SHAP values are typically computed on a held-out test set. | Evaluates interpretability on unseen catalyst data, ensuring robustness of identified key descriptors. |
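A small validation helper can enforce the data-format requirements above before any explainer is run. The sketch assumes pandas input, and `check_shap_ready` is a hypothetical name, not part of the shap library.

```python
import numpy as np
import pandas as pd


def check_shap_ready(X: pd.DataFrame, y) -> None:
    """Raise ValueError if X or y violates the data-format requirements."""
    # Every descriptor column must be numeric (categoricals encoded beforehand).
    non_numeric = [c for c in X.columns if not pd.api.types.is_numeric_dtype(X[c])]
    if non_numeric:
        raise ValueError(f"encode categorical columns first: {non_numeric}")
    # No missing values may remain after imputation.
    missing = X.columns[X.isna().any()].tolist()
    if missing:
        raise ValueError(f"impute or drop missing values in: {missing}")
    # Target must be 1D with one entry per catalyst sample.
    y_arr = np.asarray(y)
    if y_arr.ndim != 1 or len(y_arr) != len(X):
        raise ValueError(f"expected y with shape ({len(X)},), got {y_arr.shape}")
```

Calling it once right before model training catches most format problems early, with an error message that names the offending descriptors.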

Model Compatibility Matrix

Not all machine learning models are directly compatible with all SHAP explainers. The choice of explainer is dictated by model type and the desired explanation (global vs. local).

Table 2: SHAP Explainer Compatibility with Common Model Types

| Model Type | Recommended SHAP Explainer | Thesis Application Notes | Computation Speed |
|---|---|---|---|
| Tree-based Models (Random Forest, XGBoost, LightGBM, CatBoost) | TreeExplainer | Preferred for catalyst property prediction. Exact, fast, and supports interaction effects. | Very Fast |
| Linear Models (Lasso, Ridge, Logistic Regression) | LinearExplainer | Provides exact SHAP values. Useful as baseline models against which to compare more complex non-linear approaches. | Fast |
| Deep Learning Models (Neural Networks) | DeepExplainer (TensorFlow/PyTorch) or GradientExplainer | For complex descriptor-property relationships learned by neural networks. | Medium-Slow |
| Any Model (Model-Agnostic) | KernelExplainer (Shapley-kernel weighted linear regression, related to LIME) | Fallback for unsupported models. Can be applied to SVMs or custom catalyst models. Warning: computationally expensive. | Very Slow |
| Any Model (Model-Agnostic) | PermutationExplainer | A newer model-agnostic alternative to KernelExplainer, usually faster. | Medium |

Experimental Protocols for SHAP Workflow in Catalyst Research

Protocol 3.1: Data Preparation Pipeline

Objective: To preprocess catalyst descriptor and target property data into a format suitable for model training and SHAP analysis.

- Descriptor Consolidation: Compile all calculated/experimental descriptors (e.g., elemental features, structural motifs, computed electronic properties) into a single Pandas DataFrame. Each row is a unique catalyst sample.

- Categorical Encoding: Encode categorical descriptors (e.g., crystal system, primary metal) using one-hot encoding.

- Missing Value Imputation: For numerical descriptors, impute missing values using median imputation (robust to outliers) or a domain-informed value (e.g., DFT calculation failure set to a high-value flag). Document all imputations.

- Train-Test Split: Perform a stratified or random 80/20 split on the data to create `X_train`, `X_test`, `y_train`, `y_test`. The test set is reserved for final SHAP evaluation.

- Feature Scaling: Standardize `X_train` using `StandardScaler` (z-score normalization). Fit the scaler, and any median imputer, on `X_train` only, then transform both `X_train` and `X_test`, so that no test-set statistics leak into training.

Protocol 3.2: Model Training for SHAP Compatibility

Objective: To train a model optimized for both predictive performance and interpretability via SHAP.

- Model Selection: Based on data size and non-linearity, select a compatible model (e.g., XGBoost Regressor for small-to-medium datasets).

- Hyperparameter Tuning: Use `GridSearchCV` or `RandomizedSearchCV` on the training set only to optimize model parameters; cross-validation guards against overfitting to a single split.

- Model Training: Train the final model with the optimal parameters on the entire `X_train`, `y_train`.

- Performance Validation: Evaluate the model on the held-out `X_test`, `y_test` using relevant metrics (RMSE, MAE, R²). A reliable model is a prerequisite for reliable explanations.
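The tuning, final training, and validation steps can be sketched with scikit-learn as follows. A `RandomForestRegressor` and a deliberately small grid are assumptions made to keep the example fast; an XGBoost regressor with its own grid slots into the same `GridSearchCV` call. The function name is hypothetical.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.metrics import mean_absolute_error, mean_squared_error, r2_score
from sklearn.model_selection import GridSearchCV


def tune_and_evaluate(X_train, y_train, X_test, y_test, seed=0):
    """5-fold grid search on the training set, then held-out evaluation."""
    grid = {"n_estimators": [50, 150], "max_depth": [None, 4]}
    search = GridSearchCV(
        RandomForestRegressor(random_state=seed), grid, cv=5,
        scoring="neg_root_mean_squared_error",
    )
    # With the default refit=True, the best configuration is retrained on all of X_train.
    search.fit(X_train, y_train)
    model = search.best_estimator_
    pred = model.predict(X_test)
    metrics = {
        "rmse": float(np.sqrt(mean_squared_error(y_test, pred))),
        "mae": float(mean_absolute_error(y_test, pred)),
        "r2": float(r2_score(y_test, pred)),
    }
    return model, search.best_params_, metrics
```

Only after the held-out metrics are acceptable should `model` be passed to a SHAP explainer.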

Protocol 3.3: SHAP Value Calculation and Visualization

Objective: To compute and visualize SHAP values to identify key catalyst descriptors.

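- Explainer Initialization: Instantiate the appropriate SHAP explainer. For an XGBoost model `model`, use `explainer = shap.TreeExplainer(model)`.

- SHAP Value Calculation: Calculate SHAP values for the test set with `shap_values = explainer.shap_values(X_test)`.

- Global Interpretation (Feature Importance): Generate a summary plot of the mean absolute SHAP values with `shap.summary_plot(shap_values, X_test, plot_type="bar")`.

- Local Interpretation (Force Plot): Analyze an individual catalyst prediction `i` to understand its descriptor contributions with `shap.force_plot(explainer.expected_value, shap_values[i], X_test.iloc[i])`.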

Visual Workflows and Pathways

Diagram 1: SHAP Analysis Workflow for Catalyst Descriptor Identification

Diagram 2: SHAP Explainer Selection Logic

The Scientist's Toolkit: Essential Research Reagents & Software

Table 3: Key Research Reagent Solutions for SHAP-Driven Catalyst Research

| Item / Reagent / Tool | Function & Role in SHAP Analysis | Example / Specification |

|---|---|---|

| Python SHAP Library | Core computational engine for calculating SHAP values and generating interpretability plots. | shap package (version >=0.44.0). Provides all explainers (Tree, Kernel, Deep, etc.). |

| Compatible ML Library | Trains the predictive model that SHAP will explain. Must be compatible with a SHAP explainer. | scikit-learn, XGBoost, LightGBM, CatBoost, TensorFlow, PyTorch. |

| Jupyter Notebook / IDE | Environment for interactive data exploration, model development, and SHAP visualization. | JupyterLab, Google Colab, or VS Code with Python kernel. |

| Catalyst Descriptor Dataset | The structured numerical input (features) for the model. The subject of interpretation. | DataFrame containing columns for d-band center, oxidation state, atomic radii, etc. |

| Model Persistence Tool | Saves trained models for later SHAP analysis without retraining. | pickle or joblib for serialization. |

| High-Performance Computing (HPC) | Resource for computationally intensive steps (DFT descriptor calculation, neural network training, KernelSHAP). | Access to GPU clusters or cloud computing (AWS, GCP) may be required for large datasets. |

Step-by-Step SHAP Workflow: From Model Training to Descriptor Visualization

Building and Training Your Catalyst Property Prediction Model

This protocol details the construction of a machine learning (ML) model for predicting catalytic properties, a core experimental component within a broader thesis focused on SHAP (SHapley Additive exPlanations) analysis for interpretable catalyst descriptor identification. The workflow generates predictive models that serve as the essential substrates for subsequent SHAP interrogation, enabling the extraction of physically meaningful and actionable chemical insights from complex feature spaces.

Key Research Reagent Solutions & Materials

Table 1: Essential Computational Toolkit for Catalyst ML Model Development

| Item | Function/Brief Explanation |

|---|---|

| Catalyst Dataset (Structured) | A curated, tabular dataset containing featurized catalyst representations (e.g., composition, structural descriptors, reaction conditions) and associated target property labels (e.g., yield, turnover frequency, selectivity). |

| Python 3.8+ Environment | Core programming environment with essential packages: scikit-learn, pandas, numpy, matplotlib, seaborn. |

| Machine Learning Libraries | XGBoost or LightGBM for gradient boosting; CatBoost for categorical feature handling; TensorFlow/PyTorch for deep neural networks. |

| Interpretability Library | SHAP (shap) library for post-model analysis and descriptor importance quantification. |

| Descriptor Generation Software | pymatgen, RDKit, or custom scripts for generating atomic, electronic, and geometric features from catalyst structures. |

| Hyperparameter Optimization Tool | Optuna, Hyperopt, or scikit-optimize for efficient, automated model tuning. |

| Validation Framework | Custom scripts for robust k-fold cross-validation and temporal/holdout set validation to prevent data leakage and overfitting. |

Data Acquisition & Preprocessing Protocol

Protocol: Data Collection and Curation

- Source: Assemble data from heterogeneous sources: internal high-throughput experimentation (HTE), published literature (using text-mining APIs), and public databases (e.g., CatApp, NOMAD).

- Curation: Standardize catalyst representations (e.g., canonical SMILES or CIF format), convert target values to consistent units, and normalize reaction conditions.

- Annotate: Log all metadata (e.g., measurement technique, uncertainty, citation).

Protocol: Feature Engineering & Selection

- Generate Descriptors: From standardized catalyst input, compute:

- Compositional: Stoichiometric attributes, elemental properties (electronegativity, valence, radii).

- Structural: Coordination numbers, bond lengths/diameters, symmetry indices.

- Electronic: (DFT-calculated) d-band centers, Bader charges, density of states features.

- Conditional: Temperature, pressure, concentration.

- Feature Reduction: Apply variance threshold filtering, followed by recursive feature elimination (RFE) or correlation analysis to reduce multicollinearity.
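One way to implement the reduction step is variance-threshold filtering followed by a greedy correlation filter, sketched below (RFE would be an alternative second stage). The function name, thresholds, and toy descriptors are assumptions. Note that the variance threshold is scale-dependent, so apply it before standardization or choose it per descriptor family.

```python
import numpy as np
import pandas as pd
from sklearn.feature_selection import VarianceThreshold


def reduce_descriptors(X, var_threshold=1e-3, corr_threshold=0.9):
    """Variance-threshold filter, then a greedy pairwise-correlation filter."""
    # Stage 1: drop (near-)constant descriptors.
    selector = VarianceThreshold(threshold=var_threshold).fit(X)
    X_var = X.loc[:, selector.get_support()]

    # Stage 2: keep a column only if its |r| with every already-kept column is <= corr_threshold.
    corr = X_var.corr().abs()
    kept = []
    for col in X_var.columns:
        if all(corr.loc[col, k] <= corr_threshold for k in kept):
            kept.append(col)
    return X_var[kept]


# Hypothetical example: 'lattice_param_copy' nearly duplicates 'lattice_param',
# and 'constant_flag' carries no information.
rng = np.random.default_rng(0)
a = rng.normal(3.89, 0.15, 100)
X = pd.DataFrame({
    "lattice_param": a,
    "lattice_param_copy": a + rng.normal(0, 0.001, 100),
    "electroneg_diff": rng.normal(0.34, 0.21, 100),
    "constant_flag": np.ones(100),
})
X_reduced = reduce_descriptors(X)
print(list(X_reduced.columns))
```

The greedy pass keeps the first column of each correlated pair in column order; ordering columns by prior domain relevance makes that choice deliberate rather than arbitrary.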

Table 2: Exemplar Feature Set for a Bimetallic Catalyst Dataset (Quantitative Summary)

| Feature Category | Specific Descriptor | Mean Value (± Std Dev) | Correlation with Target (r) |

|---|---|---|---|

| Compositional | Atomic % of Metal A | 50.2% (± 28.5) | 0.15 |

| Compositional | Pauling Electronegativity Difference | 0.34 (± 0.21) | 0.72 |

| Structural | Average Coordination Number | 10.5 (± 1.8) | -0.41 |

| Structural | Lattice Parameter (Å) | 3.89 (± 0.15) | 0.33 |

| Electronic (DFT) | d-band Center (eV) | -2.10 (± 0.45) | -0.68 |

| Electronic (DFT) | Surface Energy (J/m²) | 1.85 (± 0.30) | 0.22 |

| Conditional | Reaction Temperature (K) | 450.0 (± 75.0) | 0.55 |

Data Preprocessing and Feature Engineering Pipeline

Model Building & Training Protocol

Protocol: Model Selection and Training

- Baseline: Train a simple linear model (Ridge/Lasso) as a performance baseline.

- Ensemble Methods: Train tree-based models: Random Forest (RF) and Gradient Boosting Machines (XGBoost/LightGBM). Use 5-fold cross-validation, stratified on binned target values since the target is continuous.

- Advanced Models: If data size permits, train a graph neural network (GNN) using atomic graphs as input.

- Hyperparameter Tuning: For the best-performing algorithm, use Bayesian optimization (via Optuna) over 100 trials to tune key parameters (e.g., learning rate, tree depth, regularization).
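Scikit-learn's `StratifiedKFold` expects class labels, so a common workaround for a continuous target is to stratify on quantile bins of `y`. The sketch below uses that workaround to compare a Ridge baseline with a Random Forest; the synthetic Friedman #1 data, the five bins, and the model settings are assumptions, and the Optuna tuning step is not shown.

```python
import numpy as np
from sklearn.datasets import make_friedman1
from sklearn.ensemble import RandomForestRegressor
from sklearn.linear_model import Ridge
from sklearn.model_selection import StratifiedKFold, cross_val_score

# Synthetic nonlinear target standing in for a catalytic property (assumption).
X, y = make_friedman1(n_samples=300, n_features=8, noise=0.5, random_state=0)

# Stratify a continuous target by its quintile bins (labels 0..4).
y_bins = np.digitize(y, np.quantile(y, [0.2, 0.4, 0.6, 0.8]))
folds = list(StratifiedKFold(n_splits=5, shuffle=True, random_state=0).split(X, y_bins))

models = {
    "Ridge (baseline)": Ridge(alpha=1.0),
    "Random Forest": RandomForestRegressor(n_estimators=200, random_state=0),
}
cv_r2 = {}
for name, estimator in models.items():
    # The same precomputed folds are reused so both models see identical splits.
    scores = cross_val_score(estimator, X, y, cv=folds, scoring="r2")
    cv_r2[name] = scores.mean()
    print(f"{name}: mean CV R2 = {scores.mean():.3f} (std {scores.std():.3f})")
```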

Protocol: Validation and Evaluation

- Split: Perform an 80/10/10 temporal or cluster-based split to create training, validation, and holdout test sets.

- Metrics: Evaluate using Mean Absolute Error (MAE), Root Mean Squared Error (RMSE), and Coefficient of Determination (R²) on the holdout set only.

- Analysis: Generate parity plots and residual error distributions for diagnostic checking.
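A cluster-based 80/10/10 split can be approximated by clustering catalysts in descriptor space and splitting by cluster, as sketched below. The k-means clustering, the number of clusters, and the random data are assumptions. Because `GroupShuffleSplit` allocates whole clusters, the sample-level fractions are only approximately 80/10/10. For a temporal split, sort by measurement date instead and cut at fixed dates.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.model_selection import GroupShuffleSplit

rng = np.random.default_rng(0)
X = rng.normal(size=(500, 6))  # hypothetical descriptor matrix

# Group similar catalysts so that near-duplicates never straddle train and test.
groups = KMeans(n_clusters=20, n_init=10, random_state=0).fit_predict(X)

# First hold out ~10% of the clusters as the test set...
outer = GroupShuffleSplit(n_splits=1, test_size=0.1, random_state=0)
trainval_idx, test_idx = next(outer.split(X, groups=groups))

# ...then ~1/9 of the remaining clusters as validation (about 10% of the total).
inner = GroupShuffleSplit(n_splits=1, test_size=1 / 9, random_state=0)
tr, va = next(inner.split(X[trainval_idx], groups=groups[trainval_idx]))
train_idx, val_idx = trainval_idx[tr], trainval_idx[va]
print(len(train_idx), len(val_idx), len(test_idx))
```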

Table 3: Model Performance Comparison on Holdout Test Set

| Model Type | Key Hyperparameters | MAE (Target Units) | RMSE (Target Units) | R² |

|---|---|---|---|---|

| Ridge Regression | Alpha = 1.0 | 12.45 | 16.89 | 0.61 |

| Random Forest | n_estimators=200, max_depth=15 | 8.23 | 11.56 | 0.81 |

| XGBoost | learning_rate=0.05, max_depth=7, n_estimators=500 | 6.78 | 9.87 | 0.86 |

| GNN (Attentive FP) | hidden_dim=64, layers=3, epochs=300 | 7.95 | 10.89 | 0.83 |

Model Training and Validation Strategy

Integration with SHAP Analysis for Descriptor Identification

Protocol: SHAP Analysis on Trained Model

- Calculation: Using the trained model (e.g., XGBoost) and the entire training dataset, compute SHAP values via the TreeSHAP algorithm.

- Global Importance: Generate a bar plot of mean absolute SHAP values to rank descriptors by global importance.

- Local Effects: Create SHAP summary plots (beeswarm plots) and dependence plots to reveal feature-target relationships and interaction effects.
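The ranking and the effect-direction column of Table 4 can be derived from any SHAP matrix: rank by mean |SHAP| and read the direction from the sign of the correlation between a descriptor's values and its SHAP values. The sketch below uses a linear model only because its interventional SHAP values have a closed form, φ_ij = w_j (x_ij − x̄_j); with XGBoost you would take `shap_values` from TreeSHAP instead. The function name, descriptor names, and coefficients are hypothetical. A near-zero correlation (as for a non-monotonic descriptor such as the "Complex (Interaction)" entry) signals that a dependence plot is needed rather than a single direction label.

```python
import numpy as np
import pandas as pd
from sklearn.linear_model import LinearRegression

# Hypothetical descriptors and a noiseless linear target.
rng = np.random.default_rng(0)
X = pd.DataFrame({
    "d_band_center": rng.normal(-2.10, 0.45, 400),
    "electroneg_diff": rng.normal(0.34, 0.21, 400),
    "coord_number": rng.normal(10.5, 1.8, 400),
})
y = -3.0 * X["d_band_center"] + 4.0 * X["electroneg_diff"] - 0.2 * X["coord_number"]
model = LinearRegression().fit(X, y)

# Closed-form interventional SHAP values for a linear model: w_j * (x_ij - mean_j).
shap_values = (X - X.mean()).to_numpy() * model.coef_


def rank_descriptors(shap_values, X):
    """Mean |SHAP| ranking plus effect direction from the sign of corr(feature, SHAP)."""
    rows = []
    for j, col in enumerate(X.columns):
        r = np.corrcoef(X[col], shap_values[:, j])[0, 1]
        rows.append({
            "descriptor": col,
            "mean_abs_shap": np.abs(shap_values[:, j]).mean(),
            "direction": "positive" if r > 0 else "negative",
        })
    table = pd.DataFrame(rows).sort_values("mean_abs_shap", ascending=False)
    return table.reset_index(drop=True)


print(rank_descriptors(shap_values, X))
```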

Table 4: Top Catalyst Descriptors Identified by SHAP Analysis

| Rank | Descriptor Name | Category | Mean Value | Mean Absolute SHAP Value | Primary Effect Direction |

|---|---|---|---|---|---|

| 1 | d-band Center | Electronic | -2.10 eV | 2.45 | Negative Correlation |

| 2 | Electronegativity Diff. | Compositional | 0.34 | 1.89 | Positive Correlation |

| 3 | Reaction Temperature | Conditional | 450 K | 1.56 | Positive Correlation |

| 4 | Avg. Coordination Number | Structural | 10.5 | 1.23 | Negative Correlation |

| 5 | Surface Energy | Electronic | 1.85 J/m² | 0.78 | Complex (Interaction) |

SHAP Analysis for Model Interpretation and Insight Generation

This document details the application of SHapley Additive exPlanations (SHAP) for interpretable machine learning in catalyst descriptor identification, a core component of our broader thesis. SHAP values provide a unified measure of feature importance, attributing a model's prediction to each input feature. The selection of the appropriate SHAP algorithm—TreeSHAP, KernelSHAP, or DeepSHAP—is critical for accurate and efficient interpretation in catalyst discovery and drug development pipelines.

Table 1: Quantitative Comparison of SHAP Algorithms

| Algorithm | Model Compatibility | Computational Complexity | Exact/Approximate | Key Assumption/Constraint | Primary Use Case in Catalyst Research |

|---|---|---|---|---|---|

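| TreeSHAP | Tree-based models (RF, GBT, XGBoost) | O(T L D²) per explained sample | Exact for trees | Interventional mode assumes feature independence; path-dependent mode uses tree cover statistics | High-speed analysis of descriptor importance from ensemble models. |

| KernelSHAP | Model-agnostic (any black-box) | O(K · N) model evaluations per explained sample; exact enumeration requires 2^M coalitions | Approximate (weighted linear regression over sampled coalitions) | Feature independence (absent features are filled from background data) | Interpreting any custom catalyst property predictor. |

| DeepSHAP | Deep Neural Networks | O(B) backpropagation passes per explained sample | Approximate (backpropagation-based) | DeepLIFT compositional rule; feature independence | Interpreting deep learning models for complex structure-property relationships. |

Key: T = number of trees in the ensemble, L = maximum number of leaves per tree, D = maximum tree depth, M = number of input features, K = number of sampled coalitions (`nsamples`), N = number of background samples for KernelSHAP, B = number of background samples for DeepSHAP.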
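To make the complexity entries concrete, the sketch below computes exact Shapley values by brute force. It uses the interventional value function v(S), the average model output when the descriptors in S take the explained sample's values and the rest come from background rows, and the Shapley weighting φ_i = Σ over S ⊆ F∖{i} of |S|!(M−|S|−1)!/M! · [v(S ∪ {i}) − v(S)]. The toy model and data are assumptions. The loop visits all 2^(M−1) coalitions per feature, which is exactly the cost KernelSHAP avoids by sampling and TreeSHAP avoids by exploiting tree structure.

```python
from itertools import combinations
from math import factorial

import numpy as np


def exact_shapley(f, x, background):
    """Exact interventional Shapley values for one sample by full coalition enumeration.

    v(S) = mean over background rows of f(z), where z copies the background row and
    overwrites the features in S with the explained sample's values.
    """
    M = x.shape[0]

    def v(S):
        z = background.copy()
        cols = list(S)
        z[:, cols] = x[cols]
        return f(z).mean()

    phi = np.zeros(M)
    for i in range(M):
        others = [j for j in range(M) if j != i]
        for size in range(M):  # coalition sizes 0 .. M-1 drawn from the other features
            weight = factorial(size) * factorial(M - size - 1) / factorial(M)
            for S in combinations(others, size):
                phi[i] += weight * (v(S + (i,)) - v(S))
    return phi


def toy_model(Z):
    """Hypothetical model: linear terms plus one pairwise interaction."""
    return Z @ np.array([2.0, -1.0, 0.5]) + 0.3 * Z[:, 0] * Z[:, 1]


rng = np.random.default_rng(0)
background = rng.normal(size=(50, 3))
x = np.array([1.0, 2.0, -1.0])
phi = exact_shapley(toy_model, x, background)
print("Shapley values:", phi)
print("sum:", phi.sum(), "| f(x) - E[f]:", toy_model(x[None, :])[0] - toy_model(background).mean())
```

The two printed totals agree, which is the efficiency (additivity) property that all three SHAP algorithms preserve.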

Experimental Protocols

Protocol 1: TreeSHAP for Random Forest Catalyst Models

Objective: To compute exact SHAP values for a trained Random Forest model predicting catalyst activity.

- Model Training: Train a Random Forest regressor using a dataset of catalyst descriptors (e.g., elemental properties, coordination numbers, surface energies) and the target property (e.g., turnover frequency).

- Background Data Preparation: Select a representative sample (typically 100-500 instances) from the training dataset to serve as the background distribution.

- SHAP Value Calculation: Instantiate `shap.TreeExplainer` (Python SHAP library) with the trained model and the background dataset.

- Explanation Generation: Call the `explainer.shap_values(X)` method on the feature matrix `X` of interest (e.g., test set or novel catalyst candidates).

- Aggregation & Analysis: Aggregate absolute SHAP values across the dataset to rank global descriptor importance. Analyze individual predictions for local interpretability.

Protocol 2: KernelSHAP for Black-Box Catalyst Screening Functions

Objective: To approximate SHAP values for a proprietary or complex catalyst scoring function.

- Model Wrapping: Define a wrapper function `f(x)` that takes an array of catalyst descriptors and returns the model's predicted score.

- Background & Feature Selection: Select a background dataset and, if it is large, summarize it (e.g., with `shap.kmeans`). Because the number of feature coalitions grows as 2^M, use a reduced feature set chosen from prior domain knowledge.

- Kernel Weighting Configuration: `shap.KernelExplainer` weights sampled feature coalitions with the Shapley kernel to approximate Shapley values. The default settings are typically sufficient.

- Approximation Computation: Call `explainer.shap_values(X, nsamples="auto")`, where `nsamples` controls the number of feature-coalition evaluations. A higher number increases accuracy but also computational cost.

- Variance Evaluation: Check the `shap_values` output for stability across multiple runs to ensure approximation quality.

Protocol 3: DeepSHAP for Neural Network-Based Descriptor Models

Objective: To efficiently approximate SHAP values for a deep neural network predicting catalyst performance.

- Model Definition: Construct a Deep Neural Network (e.g., Multi-Layer Perceptron or Graph Neural Network) using a framework like PyTorch or TensorFlow.

- Model Training: Train the network to convergence on the catalyst dataset.

- Explainer Initialization: Instantiate `shap.DeepExplainer(model, background_data_tensor)`. The background data should be a representative subset.

- SHAP Value Computation: Pass a tensor of catalyst samples to `explainer.shap_values(input_tensor)`. DeepSHAP uses a compositional propagation rule based on DeepLIFT.

- Pathway Visualization: Use the computed SHAP values to identify which input neurons (and thus, original descriptors) most influenced the final-layer prediction.

Visualization of SHAP Analysis Workflow

Title: SHAP Analysis Workflow for Catalyst Models

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Tools for SHAP-Based Catalyst Research

| Item / Software | Function / Purpose | Typical Specification / Version |

|---|---|---|

| SHAP Python Library | Core library for computing TreeSHAP, KernelSHAP, and DeepSHAP values. | v0.44+ |

| scikit-learn | Provides standard tree-based models (Random Forest, GBDT) for use with TreeSHAP. | v1.3+ |

| XGBoost / LightGBM | High-performance gradient boosting frameworks compatible with TreeSHAP. | Latest stable |

| PyTorch / TensorFlow | Deep learning frameworks required for building models interpretable by DeepSHAP. | v2.0+ |

| Matplotlib / Seaborn | Visualization libraries for creating summary plots (beeswarm, bar) of SHAP values. | v3.7+ |

| RDKit | Cheminformatics toolkit for generating molecular descriptors for catalyst candidates. | 2023.09+ |

| pandas & NumPy | Data manipulation and numerical computation for handling descriptor matrices. | v1.5+ / v1.24+ |

| Jupyter Notebook / Lab | Interactive environment for exploratory SHAP analysis and visualization. | - |

Application Notes: SHAP for Catalyst Descriptor Identification

Conceptual Framework

Within the broader thesis on interpretable machine learning for heterogeneous catalysis, SHapley Additive exPlanations (SHAP) provides a game-theoretic approach to quantify the contribution of each molecular or structural descriptor to a catalyst's predicted performance (e.g., turnover frequency, selectivity). Global feature importance is derived by aggregating absolute SHAP values (typically their mean per descriptor) across an entire dataset, moving beyond single-instance explanations to identify consistently influential physicochemical descriptors.

Key Advantages in Catalyst Discovery

- Model Agnosticism: Applicable to complex models (e.g., gradient boosting, neural networks) that capture non-linear descriptor-property relationships.

- Directionality: Distinguishes descriptors that promote or inhibit catalytic activity.

- Ranking Consistency: Provides a mathematically grounded, consistent ranking compared to permutation-based methods.

Table 1: Global SHAP Value Rankings for Catalytic Descriptors (Representative Data)

| Rank | Descriptor Category | Specific Descriptor | Mean Absolute SHAP Value | Direction of Influence | Interpretation |

|---|---|---|---|---|---|

| 1 | Electronic Structure | d-band center (eV) | 0.452 | + | Promotes activity up to optimal value |

| 2 | Geometric | Coordination number | 0.387 | - | Lower coordination generally favorable |

| 3 | Adsorption Energy | *OH adsorption energy (eV) | 0.321 | - | Weaker binding favorable |

| 4 | Atomic Property | Pauling electronegativity | 0.265 | +/- | Complex, non-monotonic |

| 5 | Bulk Property | Molar volume (cm³/mol) | 0.187 | - | Smaller volume favorable |

Table 2: Comparison of Feature Importance Metrics

| Method | Descriptor Rank 1 | Descriptor Rank 2 | Computation Time (Relative) | Notes |

|---|---|---|---|---|

| SHAP (Kernel) | d-band center | Coordination number | High | Gold standard, but computationally expensive |

| SHAP (Tree) | d-band center | *OH energy | Low | Fast, accurate for tree-based models |

| Permutation Importance | Coordination number | d-band center | Medium | Can be unreliable with correlated features |

| Gini Importance | Pauling electronegativity | Molar volume | Very Low | Tree-specific; biased toward high-cardinality (many-valued) features |

Experimental Protocols

Protocol: SHAP-Based Descriptor Ranking Workflow

Aim: To compute and interpret global feature importance for a trained catalyst performance prediction model.

Prerequisites:

- A trained machine learning model (`model`) on catalyst data.

- Feature matrix `X` (n_samples × n_descriptors) and target vector `y`.

- Test or validation set (`X_test`).

Procedure:

- SHAP Explainer Initialization: Select the explainer based on model type; for tree-based models (e.g., Random Forest, XGBoost), use `shap.TreeExplainer`.

- SHAP Value Calculation: Compute SHAP values for the evaluation set.

- Global Importance Calculation: Calculate the mean absolute SHAP value per feature (`global_importance`).

- Ranking and Visualization: Create a bar plot of the sorted `global_importance`, and generate summary plots (`shap.summary_plot(shap_values, X_test)`) to show value distributions and effects.

Protocol: Validation via Targeted Descriptor Screening

Aim: To experimentally validate the SHAP-derived descriptor ranking by synthesizing catalysts that systematically vary the top-ranked descriptor.

Materials: See The Scientist's Toolkit (Table 3).

Procedure:

- Based on SHAP ranking (e.g., Table 1), select the top 2-3 descriptors.

- Design a catalyst library (e.g., 10-15 compositions/alloys) where the primary descriptor (e.g., d-band center) is varied, while secondary descriptors are controlled as tightly as possible.

- Synthesize catalysts using a controlled method (e.g., incipient wetness co-impregnation on a fixed support, followed by standardized calcination/reduction).

- Characterize the electronic/geometric descriptor using XPS (d-band center proxy) or EXAFS (coordination number).

- Evaluate catalytic performance (e.g., rate, selectivity) under identical, standardized reactor conditions.

- Correlation Analysis: Plot the measured descriptor value against the measured activity. A strong correlation in the direction predicted by the model supports the SHAP importance ranking.
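The correlation analysis can be scripted as below: compute Pearson and Spearman coefficients between the measured descriptor and the measured activity, and check that the sign matches the direction suggested by SHAP. The function name, the 12-member library, and the activities are synthetic assumptions, not measurements. Spearman's ρ is included because it assumes only monotonicity; for a descriptor with a Sabatier-type optimum, neither coefficient is meaningful across the optimum, so consider analyzing each side separately.

```python
import numpy as np
from scipy import stats


def check_descriptor_trend(descriptor, activity, expected_sign):
    """Correlate a measured descriptor with measured activity and compare the sign
    of the monotonic trend with the SHAP-suggested direction (+1 or -1)."""
    r, p_r = stats.pearsonr(descriptor, activity)
    rho, p_rho = stats.spearmanr(descriptor, activity)
    return {
        "pearson_r": float(r),
        "pearson_p": float(p_r),
        "spearman_rho": float(rho),
        "spearman_p": float(p_rho),
        "sign_matches": bool(np.sign(rho) == expected_sign),
    }


# Hypothetical 12-member library: synthetic numbers, not measurements.
rng = np.random.default_rng(5)
d_band_center = np.linspace(-2.6, -1.6, 12)
rate = 5.0 - 2.0 * d_band_center + rng.normal(0.0, 0.1, 12)
result = check_descriptor_trend(d_band_center, rate, expected_sign=-1)
print(result)
```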

Visualizations

Title: SHAP Global Feature Ranking Workflow

Title: Experimental Validation Protocol Flow

The Scientist's Toolkit

Table 3: Essential Research Reagents & Materials

| Item | Function/Brief Explanation |

|---|---|

| SHAP Python Library (v0.44+) | Core computational toolkit for calculating SHAP values with various explainers. |

| scikit-learn / XGBoost | Libraries for building the underlying predictive regression models for catalyst properties. |

| Catalyst Precursor Salts (e.g., H₂PtCl₆, Ni(NO₃)₂, Co(acac)₃) | High-purity metal sources for the controlled synthesis of catalyst libraries. |

| High-Surface-Area Support (e.g., γ-Al₂O₃, TiO₂, Carbon) | Standardized support material to ensure consistent catalyst dispersion and comparison. |

| Tube Furnace with Gas Flow System | For precise calcination and reduction pre-treatments under controlled atmospheres. |

| X-ray Photoelectron Spectroscopy (XPS) | Surface-sensitive technique to characterize electronic descriptors (e.g., oxidation state, approximate d-band shift). |

| X-ray Absorption Fine Structure (EXAFS) | Provides local geometric structure information (e.g., coordination number, bond distance). |

| Bench-Scale Continuous Flow Reactor | Standardized setup for evaluating catalyst performance (conversion, selectivity, rate) under relevant conditions. |

| Standard Analysis Gases (e.g., 5% H₂/Ar, 10% O₂/He, reaction feedstock) | For catalyst pre-treatment and activity/selectivity testing. |

Analyzing Local Explanations for Single Catalyst Candidates

This protocol is framed within a doctoral thesis investigating SHAP (SHapley Additive exPlanations) analysis for interpretable catalyst descriptor identification. The core objective is to move beyond global, population-level model interpretations to local explanations for individual catalyst candidates. This enables the precise attribution of a candidate's predicted activity or selectivity to specific physicochemical, structural, or electronic descriptors, offering actionable insights for iterative design. These methodologies bridge advanced machine learning with fundamental catalyst science, providing a rigorous framework for explainable AI (XAI) in materials and molecular discovery.

Core Experimental Protocol: SHAP-Based Local Explanation Analysis

Prerequisite: Model Training and Global Descriptor Importance

- Objective: Train a predictive model and establish a baseline global feature importance.

- Protocol:

- Dataset Curation: Assemble a dataset of catalyst candidates with validated experimental outcomes (e.g., turnover frequency, yield, selectivity). Each candidate is represented by a vector of n numerical descriptors (e.g., d-band center, coordination number, solvent-accessible surface area, etc.).

- Model Training: Employ a tree-based ensemble model (e.g., Gradient Boosting, Random Forest) or a neural network. Use an 80/20 train/test split with stratified sampling to maintain outcome distribution. Optimize hyperparameters via Bayesian optimization or randomized search with 5-fold cross-validation.

- Global SHAP Analysis: Compute SHAP values for the entire training set using the TreeSHAP (for tree models) or KernelSHAP (for any model) algorithm. Summarize by taking the mean absolute SHAP value for each descriptor across the dataset to produce a global ranking.

Protocol for Generating Local Explanations

- Objective: Explain the prediction for a single, specific catalyst candidate.

- Step-by-Step Methodology:

- Candidate Selection: Identify the catalyst candidate of interest (e.g., a high-performing outlier, a synthesis failure, a newly proposed candidate).

- Local SHAP Value Calculation: Using the pre-trained model from the prerequisite step, compute the SHAP values specifically for the chosen candidate's descriptor vector. This yields a set of n SHAP values, one per descriptor.

- Explanation Interpretation:

- The sum of the SHAP values plus the model's expected value (base value) equals the candidate's predicted output.

- A positive SHAP value for a descriptor indicates that descriptor's specific value for this candidate pushes the model prediction higher than the average prediction.

- A negative SHAP value indicates the descriptor's value lowers the prediction relative to the average.

- Visualization & Analysis: Generate a force plot or waterfall plot to visualize the local explanation. Analyze which 3-5 descriptors contribute most significantly (positively or negatively) to this specific prediction.
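The interpretation rules above (base value plus SHAP values equals the prediction; positive values push the prediction up, negative values push it down) can be checked and summarized with a small helper. `explain_candidate` is a hypothetical function, and the candidate's descriptor values and SHAP contributions are illustrative only; in practice `shap_row` would be one row of `explainer.shap_values(X_candidate)`.

```python
import numpy as np
import pandas as pd


def explain_candidate(shap_row, feature_values, base_value, prediction, k=3):
    """Check local accuracy and return the k largest positive and negative contributors."""
    shap_row = pd.Series(shap_row, index=feature_values.index, dtype=float)
    # Local accuracy: base value + sum of SHAP values must reproduce the prediction.
    if not np.isclose(base_value + shap_row.sum(), prediction, atol=1e-6):
        raise ValueError("SHAP values do not add up to the prediction")
    table = pd.DataFrame({"value": feature_values, "shap": shap_row})
    top_pos = table[table["shap"] > 0].sort_values("shap", ascending=False).head(k)
    top_neg = table[table["shap"] < 0].sort_values("shap").head(k)
    return top_pos, top_neg


# Hypothetical candidate: descriptor values and SHAP contributions are illustrative.
features = pd.Series({"d_band_center": -2.0, "particle_size_nm": 6.0,
                      "coord_number": 9.0, "work_function_eV": 5.1})
shap_row = [6.0, -1.5, 2.5, -0.5]
top_pos, top_neg = explain_candidate(shap_row, features, base_value=10.0, prediction=16.5)
print(top_pos, top_neg, sep="\n\n")
```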

Protocol for Experimental Validation of Local Insights

- Objective: Design experiments to test hypotheses generated from the local explanation.

- Methodology:

- Hypothesis Formulation: From the local explanation, formulate a chemical hypothesis (e.g., "Candidate A is predicted to be highly selective because its local explanation shows a strong positive contribution from a low electrophilicity index").

- Descriptor Perturbation Design: Design a small set (3-5) of related catalyst candidates where the key positively contributing descriptor is systematically varied while attempting to hold others constant (e.g., synthesize analogs with gradually increasing electrophilicity).

- Synthesis & Testing: Synthesize and experimentally test the candidate series under standard catalytic conditions.

- Correlation Analysis: Plot the experimental outcome against the perturbed descriptor value. A strong correlation supports the local explanation's insight; because the other descriptors are held as constant as possible, it is also evidence, though not proof, of the descriptor's causal role for this catalyst class.

Data Presentation: Comparative Analysis of Local Explanations

Table 1: Local SHAP Explanations for Three Distinct Catalyst Candidates. The model predicts turnover frequency (TOF) for a hydrogenation reaction; base value (average prediction) = 12.5 s⁻¹.

| Catalyst ID | Predicted TOF (s⁻¹) | Top Positive Contributor Descriptor (Value) | SHAP Value | Top Negative Contributor Descriptor (Value) | SHAP Value | Experimental TOF (s⁻¹) |

|---|---|---|---|---|---|---|

| Cat-A-103 | 45.2 | d-band Center (-2.1 eV) | +18.7 | Particle Size (8.2 nm) | -4.1 | 41.7 ± 3.1 |

| Cat-B-77 | 5.1 | Metal-O Coordination (4.2) | +1.2 | Work Function (5.4 eV) | -9.8 | 6.0 ± 1.5 |

| Cat-C-12 | 11.8 | Surface Charge Density (0.12 e/Ų) | +0.5 | Lattice Strain (%) | -1.4 | 13.2 ± 2.2 |

Table 2: Key Research Reagent Solutions & Materials

| Item Name | Function/Description | Example Vendor/Product |

|---|---|---|

| SHAP Python Library | Core computational tool for calculating SHAP values and generating local explanation plots. | GitHub: shap/shap |

| Catalyst Dataset | Curated database of catalyst structures, descriptors, and activity data. Essential for model training. | Custom SQL/CSV; possible public sources like CatApp or NOMAD. |

| Descriptor Calculation Software | Computes physicochemical and electronic descriptors from catalyst structures (e.g., DFT outputs, SMILES). | RDKit, pymatgen, ASE, custom DFT scripts. |

| Tree-Based ML Library | Used to train high-performance, SHAP-compatible predictive models. | scikit-learn, XGBoost, LightGBM |

| Jupyter Notebook Environment | Interactive platform for running analysis, visualization, and documentation. | Project Jupyter |

| Standard Catalytic Test Rig | Bench-scale reactor system for experimental validation of predicted catalyst performance. | Custom setup or commercial (e.g., PID Eng & Tech). |

Visualizations

SHAP Analysis Workflow for Catalyst Design

Local Force Plot Explanation for Cat-A-103

Within the thesis "SHAP Analysis for Interpretable Catalyst Descriptor Identification," advanced SHAP visualizations are critical for translating model outputs into chemically intuitive insights. While global feature importance rankings identify key descriptors, summary, dependence, and force plots are essential for probing the nature and context of feature influence on predicted catalytic activity or selectivity, enabling rational catalyst design.

Core Visualization Types: Application Notes

Summary Plot (Beeswarm)

- Purpose: Provides a global overview of feature importance and impact direction across the entire dataset.

- Application Note: In catalyst research, it rapidly prioritizes descriptors (e.g., d-band center, coordination number, adsorption energy) for further investigation. Dense clusters of points within a descriptor's row can reveal subgroups in the data, hinting at different catalytic regimes.

Table 1: Interpretation of Summary Plot Patterns in Catalyst Data

| Visual Pattern | Potential Chemical Interpretation |

|---|---|

| Colors cleanly separated along the SHAP axis (high values on one side, low on the other) | Descriptor has a monotonic, uniform effect (e.g., stronger binding always increases activity within studied range). |

| Mixed colors at high SHAP values | Descriptor's optimal effect is context-dependent, requiring synergy with another feature. |

| Distinct horizontal bands | Clustering of catalysts (e.g., metals vs. oxides) with different baseline activities. |

Dependence Plot

- Purpose: Isolates the relationship between a single descriptor's value and its SHAP value, revealing nonlinearities and interactions.

- Application Note: Essential for identifying optimal descriptor ranges (e.g., an optimal d-band center for a Sabatier peak) and probing feature interactions via coloring.

Table 2: Dependence Plot Analysis for Catalyst Descriptor X

| Plot Characteristic | Observation | Inference for Catalyst Design |

|---|---|---|

| Shape | Inverted-U (parabolic) | Confirms Sabatier-type behavior; identifies optimal value. |

| Colored by Y | Clear separation of colors along trend | Descriptor Y strongly interacts with X; design must consider both. |

| Scatter | High variance at mid-range | Effect of X is less deterministic in this region; other descriptors dominate. |

Force Plot (Local Explanation)

- Purpose: Explains a single prediction by deconstructing the model output, showing how each feature pushes the prediction from the baseline (average) value.

- Application Note: For a specific high-performance catalyst prediction, it quantifies the contribution of each descriptor (e.g., "High electronegativity contributed +0.8 log(TOF), while low oxidation state contributed -0.3 log(TOF)").

Table 3: Force Plot Decomposition for a High-Activity Catalyst Prediction

| Feature | Value | SHAP Value (Impact) | Interpretation |

|---|---|---|---|

| Metal-O Bond Strength | 2.1 eV | +1.5 | Primary driver for high activity in this catalyst. |

| Surface Charge | +0.3 | -0.4 | Moderately detrimental, but outweighed by other factors. |

| Coordination # | 4 | +0.6 | Low coordination favors active site formation. |

| Baseline Value | -- | 2.1 (Avg. log(TOF)) | -- |

| Model Output | -- | 3.8 (Predicted log(TOF)) | -- |
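The decomposition in Table 3 is an instance of SHAP's additivity (local accuracy) property: the baseline plus the per-feature SHAP values reproduces the model output. With the table's numbers, the three listed features account for the full difference between baseline and prediction:

$$
f(x) = \phi_0 + \sum_{i=1}^{3} \phi_i = 2.1 + 1.5 + (-0.4) + 0.6 = 3.8 \quad \text{(predicted } \log \mathrm{TOF)}
$$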

Experimental Protocols

Protocol 1: Generating and Interpreting a SHAP Dependence Plot with Interaction Detection

Objective: To elucidate the relationship and key interactions for a top-ranked catalyst descriptor.

- Compute SHAP Values: Using a trained model (e.g., Gradient Boosting, NN) and test set, calculate SHAP values (`shap.Explainer`).

- Isolate Feature: Select the top-ranked descriptor from the summary plot (e.g., `Descriptor_A`).

- Plot Basic Dependence: Execute `shap.dependence_plot('Descriptor_A', shap_values, X_test)`, where `X_test` is the feature matrix.

- Identify Interaction: Re-plot, coloring by the feature identified as a potential interactor in the summary plot or via `explainer.shap_interaction_values(X_test)` (available for tree models). Use `interaction_index='Descriptor_B'`.

- Chemical Interpretation: Correlate trends with known catalytic principles (e.g., Brønsted-Evans-Polanyi relations, scaling relationships).

Protocol 2: Creating and Comparing Local Force Plots for Catalyst Classification

Objective: To compare the mechanistic drivers for catalysts classified as "Active" vs. "Inactive."

- Select Exemplars: From the test set, identify one catalyst predicted with high probability as "Active" and one as "Inactive."

- Generate Individual Plots: For each catalyst, generate a force plot using `shap.force_plot(explainer.expected_value, shap_values[instance], X_test.iloc[instance])`.

- Comparative Analysis: Tabulate the top 3 positive and top 3 negative contributing features for each exemplar.

- Hypothesis Generation: Formulate design rules (e.g., "Active catalysts consistently show moderate `Descriptor_X` with high `Descriptor_Y`").

Diagrams

Title: SHAP Analysis Workflow for Catalyst Descriptors

Title: Force Plot Logic: From Baseline to Prediction

The Scientist's Toolkit: Research Reagent Solutions

Table 4: Essential Tools for Advanced SHAP Visualization in Computational Catalysis

| Item / Solution | Function / Purpose | Example (Package/Library) |

|---|---|---|

| SHAP Computation Library | Calculates consistent additive feature attributions for any model. | shap Python library (KernelSHAP, TreeSHAP, DeepSHAP). |

| Model-Specific Explainer | Provides efficient SHAP value calculation (exact for tree models). | TreeExplainer for tree-based models (GBR, RF); DeepExplainer for neural networks. |

| Visualization Backend | Renders interactive plots for detailed inspection. | matplotlib, plotly (for interactive dependence plots). |

| Feature Processing Toolkit | Standardizes and scales descriptors for meaningful comparison. | scikit-learn StandardScaler, MinMaxScaler. |

| Chemical Descriptor Database | Provides the raw input features for the model. | Computational outputs (DFT energies, structural descriptors), materials databases (Citrination, OQMD). |

| Jupyter Notebook Environment | Integrates computation, visualization, and documentation. | Jupyter Lab/Notebook for reproducible analysis workflows. |

Overcoming Challenges: Optimizing SHAP for Robust Catalyst Insights

Handling High-Dimensional and Correlated Descriptor Sets

Application Notes

In the pursuit of interpretable catalyst descriptor identification using SHAP (SHapley Additive exPlanations) analysis, a primary challenge is the preprocessing and analysis of high-dimensional descriptor sets rife with multicollinearity. These sets, often derived from Density Functional Theory (DFT) calculations or complex molecular fingerprints, can contain hundreds to thousands of intercorrelated features, which obscure model interpretability and destabilize regression coefficients.

Key strategies include:

- Dimensionality Reduction: Principal Component Analysis (PCA) is used not to eliminate features but to build an orthogonal latent space; SHAP values computed for the principal components can then be back-projected onto the original descriptors through the PCA loadings. Uniform Manifold Approximation and Projection (UMAP) is non-linear and yields neither orthogonal axes nor loadings, so it is better suited to visualizing descriptor space than to SHAP back-projection.

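- Regularized Regression: LASSO (L1) performs automatic feature selection by driving coefficients to exactly zero, but within a group of correlated descriptors it tends to keep one member somewhat arbitrarily. Elastic Net adds an L2 penalty that spreads weight across correlated descriptors, providing a more stable basis for subsequent SHAP analysis.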

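- Tree-Based Methods: Gradient Boosting Machines (e.g., XGBoost, LightGBM) remain predictive in the presence of multicollinearity because each split uses whichever correlated descriptor reduces impurity most. The flip side is that importance, and hence SHAP credit, can be divided among correlated descriptors in ways that vary between training runs. These models pair well with TreeSHAP, which computes exact Shapley values for tree ensembles in polynomial time.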

- Descriptor Clustering & Aggregation: Highly correlated descriptors (Pearson |r| > 0.85) are clustered using hierarchical clustering. A single representative descriptor (e.g., the one with the highest mutual information with the target property) is selected from each cluster, drastically reducing dimensionality while preserving information.
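The effect of correlation on attributions is easy to see with a linear model, whose interventional SHAP value for descriptor j is w_j (x_j - E[x_j]). In the sketch below (synthetic data; the duplicated descriptor is hypothetical), two weightings predict the target equally well, yet one assigns all credit to the first copy and the other splits it evenly. This is the ambiguity that clustering, regularization, or PCA is meant to remove before SHAP values are read as chemical insight.

```python
import numpy as np


def linear_shap(w: np.ndarray, X: np.ndarray) -> np.ndarray:
    """Interventional SHAP values of a linear model: phi_ij = w_j * (x_ij - mean_j)."""
    return (X - X.mean(axis=0)) * w


rng = np.random.default_rng(3)
d_band = rng.normal(size=200)              # hypothetical descriptor values
X = np.column_stack([d_band, d_band])      # the same quantity recorded twice (perfect correlation)
y = 2.0 * d_band                           # synthetic target

mean_abs_shap = {}
for name, w in {"model_a": np.array([2.0, 0.0]), "model_b": np.array([1.0, 1.0])}.items():
    assert np.allclose(X @ w, y)           # both weightings reproduce y exactly
    mean_abs_shap[name] = np.abs(linear_shap(w, X)).mean(axis=0)
    print(name, "mean |SHAP| per descriptor:", np.round(mean_abs_shap[name], 3))
```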

Table 1: Impact of Preprocessing on Model Performance and Interpretability for a Catalytic Turnover Frequency (TOF) Dataset (n=150 samples)

| Preprocessing Method | Initial Descriptors | Final Descriptors | Model Type | Test R² | Top 5 SHAP Descriptor Stability* |
|---|---|---|---|---|---|
| None | 320 | 320 | Linear | 0.45 | 35% |
| Elastic Net (α=0.01) | 320 | 48 | Linear | 0.78 | 92% |
| PCA (95% variance) | 320 | 18 PCs | Linear | 0.82 | 100% (on PCs) |
| Correlation Filtering (\|r\| < 0.9) | 320 | 110 | XGBoost | 0.91 | 88% |
| Clustering & Selection | 320 | 65 | XGBoost | 0.93 | 96% |

*Stability measured as the Jaccard index overlap of the top 5 features across 50 bootstrap iterations.
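The stability column can be reproduced with a short helper. This sketch assumes that "stability" is the mean pairwise Jaccard index between the top-5 descriptor sets from the bootstrap refits (the footnote does not say whether runs are compared pairwise or against a reference set), and it uses a synthetic importance matrix in place of real bootstrap SHAP results.

```python
from itertools import combinations

import numpy as np


def top_k_sets(importance: np.ndarray, k: int = 5) -> list[frozenset[int]]:
    """Indices of the k largest mean |SHAP| values for each bootstrap row."""
    order = np.argsort(-importance, axis=1)[:, :k]
    return [frozenset(row.tolist()) for row in order]


def jaccard(a: frozenset[int], b: frozenset[int]) -> float:
    return len(a & b) / len(a | b)


def top_k_stability(importance: np.ndarray, k: int = 5) -> float:
    """Mean pairwise Jaccard index of top-k descriptor sets across bootstrap iterations."""
    sets = top_k_sets(importance, k)
    return float(np.mean([jaccard(a, b) for a, b in combinations(sets, 2)]))


# Hypothetical input: 50 bootstrap iterations x 20 descriptors of mean |SHAP| values,
# where descriptors 0-4 are consistently important and the rest are noise.
rng = np.random.default_rng(0)
importance = rng.uniform(0.0, 0.1, size=(50, 20))
importance[:, :5] += 1.0
print(f"Top-5 stability: {top_k_stability(importance):.0%}")
```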

Experimental Protocols

Protocol 1: Hierarchical Descriptor Clustering and Selection for SHAP-Ready Datasets

Objective: To reduce multicollinearity in a descriptor matrix by grouping correlated features and selecting a robust representative for each group.

Materials:

- High-dimensional descriptor data (CSV format).

- Computational environment (Python with SciPy, scikit-learn, pandas).

Procedure:

- Data Standardization: Standardize all descriptor columns to zero mean and unit variance using StandardScaler.

- Correlation Matrix Calculation: Compute the full Pearson correlation matrix (R) for all descriptors.

- Distance Matrix Conversion: Convert the correlation matrix to a distance matrix: D = 1 - |R|.

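- Hierarchical Clustering: Apply agglomerative hierarchical clustering to the distance matrix D. With complete linkage, cutting the dendrogram at height 1 - 0.85 = 0.15 guarantees that every pair of descriptors within a cluster has |r| ≥ 0.85 (or use a domain-informed threshold). Ward's linkage assumes Euclidean distances, so its merge heights do not translate directly into a correlation threshold.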

- Representative Descriptor Selection: For each cluster: a. Calculate the mutual information between each cluster member descriptor and the target catalytic property. b. Select the descriptor with the highest mutual information score as the cluster representative.

- Dataset Compilation: Create a new dataset comprising only the selected representative descriptors. This dataset is now suitable for downstream ML modeling and SHAP analysis.
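Protocol 1 can be sketched in NumPy alone so that each step is visible. Assumptions: complete linkage (so that cutting at 1 - 0.85 guarantees |r| ≥ 0.85 for every within-cluster pair), a simple histogram estimate of mutual information, and synthetic data. For real descriptor sets, `scipy.cluster.hierarchy.linkage(..., method="complete")` with `fcluster(..., criterion="distance")` and scikit-learn's `mutual_info_regression` are the faster, more robust choices.

```python
import numpy as np


def complete_linkage_clusters(dist: np.ndarray, threshold: float) -> list[list[int]]:
    """Naive agglomerative clustering: merge while the complete-linkage distance <= threshold."""
    clusters = [[j] for j in range(dist.shape[0])]
    while len(clusters) > 1:
        best, pair = np.inf, None
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                # Complete linkage: distance between clusters is that of their farthest pair.
                d = dist[np.ix_(clusters[a], clusters[b])].max()
                if d < best:
                    best, pair = d, (a, b)
        if best > threshold:
            break
        a, b = pair
        clusters[a] = clusters[a] + clusters[b]
        del clusters[b]
    return clusters


def binned_mutual_information(x: np.ndarray, y: np.ndarray, bins: int = 10) -> float:
    """Histogram-based mutual information estimate (in nats) between two 1-D arrays."""
    joint, _, _ = np.histogram2d(x, y, bins=bins)
    pxy = joint / joint.sum()
    px = pxy.sum(axis=1, keepdims=True)
    py = pxy.sum(axis=0, keepdims=True)
    nz = pxy > 0
    return float(np.sum(pxy[nz] * np.log(pxy[nz] / (px @ py)[nz])))


def select_representatives(X: np.ndarray, y: np.ndarray, min_abs_r: float = 0.85) -> list[int]:
    # Standardize descriptors (assumes no constant columns).
    Xs = (X - X.mean(axis=0)) / X.std(axis=0)
    # Pearson correlation matrix converted to the distance D = 1 - |R|.
    dist = 1.0 - np.abs(np.corrcoef(Xs, rowvar=False))
    # Cut at 1 - min_abs_r so every within-cluster pair has |r| >= min_abs_r.
    clusters = complete_linkage_clusters(dist, threshold=1.0 - min_abs_r)
    # Keep the member with the highest mutual information with the target.
    reps = [max(c, key=lambda j: binned_mutual_information(Xs[:, j], y)) for c in clusters]
    return sorted(reps)


# Synthetic example: descriptors 0/1 and 2/3 are near-duplicates; descriptor 4 is independent.
rng = np.random.default_rng(42)
n = 150
base = rng.normal(size=(n, 3))
X = np.column_stack([base[:, 0], base[:, 0] + 0.05 * rng.normal(size=n),
                     base[:, 1], base[:, 1] + 0.05 * rng.normal(size=n),
                     base[:, 2]])
y = 2.0 * base[:, 0] - base[:, 1] + 0.1 * rng.normal(size=n)
reps = select_representatives(X, y)
print("representative descriptors:", reps)
```

The sketch returns one representative from each near-duplicate pair plus descriptor 4, reducing five columns to three before any model is trained.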

Protocol 2: Integrated PCA-SHAP Analysis Workflow

Objective: To obtain stable, interpretable feature importance scores from a model trained on orthogonal principal components.

Procedure:

- PCA Transformation: Perform PCA on the standardized descriptor matrix. Retain enough principal components (PCs) to explain >95% of the cumulative variance.

- Model Training: Train a predictive model (e.g., Ridge Regression, Support Vector Regressor) on the PC scores matrix. Record performance via cross-validation.

- SHAP Analysis on PC Space: Compute SHAP values for the model's predictions with the PC scores as the input features. This identifies which PCs are most influential.

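- Back-Projection to Original Features: For each influential PC $i$ (e.g., the top 3 by mean |SHAP|), calculate the absolute contribution of each original descriptor $j$ as $\mathrm{Contribution}_{ij} = \overline{|\mathrm{SHAP}_{\mathrm{PC}_i}|}\,|\mathrm{Loading}_{ij}|$, where $\overline{|\mathrm{SHAP}_{\mathrm{PC}_i}|}$ is the mean absolute SHAP value of PC $i$ and $\mathrm{Loading}_{ij}$ is the PCA loading of descriptor $j$ on PC $i$. Sum these contributions over the top PCs to rank the original descriptors.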
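The following NumPy sketch walks through Protocol 2 on synthetic data. Assumptions: PCA via SVD of the standardized matrix, with "loading" taken as the unit-norm component vector (the convention of scikit-learn's `PCA.components_`); a closed-form ridge model on the PC scores standing in for the Ridge/SVR models named above; and interventional SHAP values, which for a linear model reduce exactly to w_i (z_i - mean z_i), so no SHAP library call is needed.

```python
import numpy as np


def pca_shap_backprojection(X: np.ndarray, y: np.ndarray, var_target: float = 0.95,
                            top_pcs: int = 3, ridge_alpha: float = 1.0
                            ) -> tuple[int, np.ndarray, np.ndarray]:
    # Standardize descriptors, then PCA via SVD; rows of Vt are unit-norm loading vectors.
    Xs = (X - X.mean(axis=0)) / X.std(axis=0)
    _, S, Vt = np.linalg.svd(Xs, full_matrices=False)
    cum_var = np.cumsum(S**2) / np.sum(S**2)
    n_pc = int(np.searchsorted(cum_var, var_target)) + 1   # smallest count reaching the target
    Z = Xs @ Vt[:n_pc].T                                     # PC scores (already centered)

    # Ridge regression on the PC scores (closed form; intercept handled by centering y).
    yc = y - y.mean()
    w = np.linalg.solve(Z.T @ Z + ridge_alpha * np.eye(n_pc), Z.T @ yc)

    # Interventional SHAP for a linear model: phi_i = w_i * (z_i - mean z_i).
    shap_pc = (Z - Z.mean(axis=0)) * w
    mean_abs_shap = np.abs(shap_pc).mean(axis=0)

    # Back-projection: Contribution_j = sum over top PCs of mean|SHAP_i| * |Loading_ij|.
    top = np.argsort(-mean_abs_shap)[:top_pcs]
    contrib = (mean_abs_shap[top, None] * np.abs(Vt[top])).sum(axis=0)
    return n_pc, mean_abs_shap, contrib


# Synthetic data: descriptor 1 nearly duplicates descriptor 0, which drives the target.
rng = np.random.default_rng(1)
n = 150
latent = rng.normal(size=n)
X = np.column_stack([latent,
                     latent + 0.1 * rng.normal(size=n),
                     rng.normal(size=(n, 4))])              # four unrelated descriptors
y = 3.0 * latent + 0.1 * rng.normal(size=n)

n_pc, mean_abs_shap, contrib = pca_shap_backprojection(X, y)
print("PCs kept:", n_pc)
print("descriptor ranking:", np.argsort(-contrib))
```

Because descriptors 0 and 1 load together on the dominant PC, both rise to the top of the ranking, which is the behavior the back-projection is meant to provide for correlated descriptor groups.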

The Scientist's Toolkit

Table 2: Essential Research Reagent Solutions for Descriptor Handling & SHAP Analysis

| Item/Software | Function in Workflow |
|---|---|
| RDKit | Open-source cheminformatics library for generating molecular descriptors (Morgan fingerprints, topological indices) and handling chemical data. |
| Dragon | Commercial software for calculating a comprehensive suite of >5,000 molecular descriptors for small molecules or catalyst ligands. |
| scikit-learn | Primary Python library for data preprocessing (StandardScaler), dimensionality reduction (PCA), clustering, and implementing core ML models. |
| SHAP Library (Lundberg & Lee) | Unified framework for calculating SHAP values across model types (KernelSHAP, TreeSHAP, DeepSHAP). Critical for interpretability. |
| XGBoost/LightGBM | Gradient boosting frameworks that provide high-performance tree-based models, remain predictive when descriptors are correlated (although SHAP credit may be split among them), and integrate with TreeSHAP for rapid explanation. |
| UMAP | Non-linear dimensionality reduction technique useful for visualizing high-dimensional descriptor spaces and identifying latent clusters before modeling. |
| Graphviz | Tool for rendering pathway and workflow diagrams from DOT scripts, useful for visualizing relationships in descriptor-property models. |

Visualizations

Title: Workflow for Handling Correlated Descriptor Sets

Title: SHAP Back-Projection from Principal Components

Addressing Computational Cost and Scalability for Large Catalyst Libraries

Application Notes: Integrating SHAP Analysis with High-Throughput Virtual Screening

Within the broader thesis on SHAP analysis for interpretable catalyst descriptor identification, a primary challenge is the computational expense of generating the requisite quantum mechanical (QM) data for large, diverse catalyst libraries. This protocol details a multi-fidelity screening approach that combines fast, approximate methods with high-accuracy calculations, guided by SHAP analysis, to enable scalable exploration.

Core Strategy: Implement a tiered computational workflow. An initial library of 50,000 candidate catalysts is screened using semi-empirical methods (e.g., GFN2-xTB) or machine-learned force fields (MACE, CHGNet). The top 5-10% of performers are promoted to Density Functional Theory (DFT) for accurate property calculation (e.g., adsorption energies, activation barriers). SHAP analysis is then applied to the combined dataset (features from both low- and high-fidelity levels) to identify universal, interpretable descriptors that govern performance, thereby informing the design of the next library generation.

Table 1: Performance and Cost Benchmark of Computational Methods for Catalyst Pre-Screening

| Method | Avg. Time per Catalyst (CPU-hr) | Typical Error vs. DFT (eV) | Suitable Library Size | Primary Use Case |
|---|---|---|---|---|
| GFN2-xTB | 0.05 - 0.2 | 0.3 - 0.8 | >100,000 | Geometry optimization, rough energy ranking |
| Machine Learning Force Fields (MACE) | 0.01 - 0.1 | 0.05 - 0.2 | >1,000,000 | High-throughput MD & energy evaluation |
| DFT (GGA/PBE) | 10 - 50 | Reference | <1,000 | Final evaluation, descriptor calculation |
| DFT (Hybrid) | 100 - 500 | Reference | <100 | High-accuracy benchmarking |
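The case for the tiered design follows from simple arithmetic on Table 1. The sketch below assumes the upper end of each per-catalyst cost range and a hypothetical funnel of 40,000 → 5,000 → 500 candidates (the tier sizes used in Protocol 1 below, after roughly 20% of a 50,000-member library is filtered out); real costs depend on system size and convergence settings.

```python
# Per-catalyst cost in CPU-hours: upper ends of the Table 1 ranges (a conservative assumption).
COST_CPU_HR = {"MACE": 0.1, "GFN2-xTB": 0.2, "DFT (GGA/PBE)": 50.0}

# Hypothetical funnel: candidates evaluated at each tier after ~20% of 50,000 are filtered out.
FUNNEL = [("MACE", 40_000), ("GFN2-xTB", 5_000), ("DFT (GGA/PBE)", 500)]


def tier_costs(funnel: list[tuple[str, int]], costs: dict[str, float]) -> dict[str, float]:
    """CPU-hours spent per tier when each tier evaluates only the candidates promoted to it."""
    return {method: n * costs[method] for method, n in funnel}


per_tier = tier_costs(FUNNEL, COST_CPU_HR)
tiered_total = sum(per_tier.values())
dft_only = 40_000 * COST_CPU_HR["DFT (GGA/PBE)"]   # running GGA-DFT on every filtered candidate

for method, hours in per_tier.items():
    print(f"{method:>14}: {hours:>9,.0f} CPU-hr")
print(f"tiered total: {tiered_total:,.0f} CPU-hr vs DFT-only: {dft_only:,.0f} CPU-hr "
      f"(~{dft_only / tiered_total:.0f}x saving)")
```

Under these assumptions the DFT tier still accounts for about 25,000 of the ~30,000 CPU-hours, which is why reducing the number of DFT calculations (as in the active-learning protocol below) is the next lever.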

Table 2: SHAP Analysis Output for a Model Trained on Multi-Fidelity Data (Example: CO2 Reduction Catalysts)

| Descriptor | Mean Absolute SHAP Value | Impact Direction | Interpretable Chemical Meaning |
|---|---|---|---|
| d-band center (εd) | 0.45 | Higher value lowers barrier | Metal surface reactivity |
| Bader charge on active site* | 0.38 | More positive charge correlates with higher activity | Electrophilicity of the metal center |
| Nearest-neighbor distance | 0.31 | Optimal mid-range value | Strain and ligand effects |
| LUMO energy (from low-fidelity) | 0.28 | Lower energy improves activity | Proxy for electron affinity |

*Note: Descriptors such as the Bader charge require high-fidelity DFT but can be predicted for the full library with a model trained on the high-fidelity subset.
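The note above can be made concrete with a minimal surrogate. This sketch assumes a linear ridge model and synthetic data: the cheap descriptors, coefficients, and "DFT" Bader charges are all hypothetical, and in practice any regressor (e.g., a GP or gradient-boosted trees) could fill this role. One caveat the sketch sidesteps: in a real funnel the DFT subset is the top of the ranking rather than a random sample, so predictions for the rest of the library are extrapolations and should be validated.

```python
import numpy as np


def fit_ridge(X: np.ndarray, y: np.ndarray, alpha: float = 1e-2):
    """Closed-form ridge regression with an unpenalized intercept; returns a predict function."""
    mu_x, mu_y = X.mean(axis=0), y.mean()
    Xc = X - mu_x
    w = np.linalg.solve(Xc.T @ Xc + alpha * np.eye(X.shape[1]), Xc.T @ (y - mu_y))
    return lambda X_new: (X_new - mu_x) @ w + mu_y


rng = np.random.default_rng(7)
n_library, n_dft = 50_000, 500
X_cheap = rng.normal(size=(n_library, 4))                 # hypothetical low-fidelity descriptors
true_coef = np.array([0.6, -0.3, 0.1, 0.0])
bader_true = X_cheap @ true_coef + 0.05 * rng.normal(size=n_library)  # synthetic "DFT" charges

dft_idx = rng.choice(n_library, size=n_dft, replace=False)  # catalysts with DFT results
predict_bader = fit_ridge(X_cheap[dft_idx], bader_true[dft_idx])
bader_pred = predict_bader(X_cheap)                          # imputed for the whole library

# Check on catalysts never sent to DFT (possible here only because the data are synthetic).
rest = np.setdiff1d(np.arange(n_library), dft_idx)
ss_res = np.sum((bader_true[rest] - bader_pred[rest]) ** 2)
ss_tot = np.sum((bader_true[rest] - bader_true[rest].mean()) ** 2)
r2 = 1.0 - ss_res / ss_tot
print(f"R^2 on catalysts without DFT: {r2:.3f}")
```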

Experimental Protocols

Protocol 1: Tiered High-Throughput Virtual Screening (HTVS) Workflow

Objective: To efficiently screen a catalyst library of >50,000 materials for a target reaction (e.g., the oxygen evolution reaction, OER).

Materials & Software:

- Library Database: Materials Project, QM9, or in-house enumerated organocatalyst library.

- Software: ASE (Atomic Simulation Environment), SCALE-MS for workflow orchestration, xTB for semi-empirical calculations, VASP/Quantum ESPRESSO for DFT.

- Computing Resources: High-performance computing (HPC) cluster with CPU nodes and GPU acceleration for MLFF inference.

Methodology:

- Library Pre-processing: Generate initial 3D structures. Apply convex-hull or heuristic stability filters to reduce library size by ~20%.

- Tier 1 - Ultra-Fast Screening:

- Perform single-point energy and force calculations using a pre-trained MACE model.

- Calculate cheap electronic descriptors (e.g., orbital eigenvalues from extended Hückel theory).

- Train a quick surrogate model (Random Forest) on these descriptors against a simple activity proxy (e.g., binding energy of a key intermediate from a minimal cluster model).

- Rank the full library and select the top 5,000 candidates for Tier 2.

- Tier 2 - Refined Evaluation:

- Perform full geometry optimization using GFN2-xTB.

- Extract more accurate descriptors: HOMO/LUMO energies, partial charges, vibrational frequencies.

- Re-score the candidates with the surrogate model updated to use the refined descriptors. Select the top 500 candidates for Tier 3.

- Tier 3 - High-Fidelity DFT Validation:

- Execute DFT calculations (PBE functional, medium basis set/pseudopotential) for key reaction intermediates.

- Compute accurate reaction energies and activation barriers.

- Calculate high-quality descriptors (d-band center for metals, Fukui indices, Bader charges).

- Data Integration & SHAP Analysis:

- Create a unified dataset containing all descriptors (low- and high-fidelity) and target properties for the 500 DFT-validated catalysts.

- Train a final ML model (e.g., XGBoost) on this dataset.

- Perform SHAP analysis on this model to identify the most impactful, interpretable descriptors across the entire fidelity spectrum.
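The promotion logic of Protocol 1 reduces to "score the survivors, keep the top k" at each tier. The sketch below uses hypothetical scoring functions: each tier sees a synthetic true activity plus noise whose size shrinks with fidelity (the noise levels are arbitrary, not taken from Table 1), and higher scores are treated as better.

```python
from typing import Callable, Sequence

import numpy as np

Tier = tuple[str, Callable[[np.ndarray], np.ndarray], int]


def tiered_screen(candidates: np.ndarray, tiers: Sequence[Tier]) -> tuple[np.ndarray, dict[str, int]]:
    """Score survivors tier by tier and promote the top-k of each (higher score = better)."""
    survivors = candidates
    n_evaluated: dict[str, int] = {}
    for name, score_fn, keep in tiers:
        scores = score_fn(survivors)            # only survivors pay this tier's cost
        n_evaluated[name] = len(survivors)
        survivors = survivors[np.argsort(-scores)[:keep]]
    return survivors, n_evaluated


rng = np.random.default_rng(0)
true_activity = rng.normal(size=40_000)         # synthetic ground truth for the filtered library


def noisy_scorer(sigma: float) -> Callable[[np.ndarray], np.ndarray]:
    # Hypothetical stand-in for a method: true activity plus Gaussian error of size sigma.
    return lambda idx: true_activity[idx] + rng.normal(scale=sigma, size=len(idx))


tiers = [("Tier 1 (MACE)", noisy_scorer(0.8), 5_000),
         ("Tier 2 (GFN2-xTB)", noisy_scorer(0.3), 500),
         ("Tier 3 (DFT)", noisy_scorer(0.0), 500)]
final, n_evaluated = tiered_screen(np.arange(40_000), tiers)

true_top = np.argsort(-true_activity)[:500]
print(n_evaluated)
print("fraction of the true top 500 recovered:", len(np.intersect1d(final, true_top)) / 500)
```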

Protocol 2: Active Learning for Iterative Library Expansion

Objective: To minimize the number of costly DFT calculations by iteratively selecting the most informative catalysts for computation.

Methodology:

- Start with an initial small set (n=50) of catalysts with DFT-calculated target properties.

- Train an initial Gaussian Process (GP) model on their low-fidelity descriptors and DFT targets.

- For all remaining candidates in the low-fidelity library, use the GP model to predict the target property and its associated uncertainty (standard deviation).

- Apply an acquisition function such as the Upper Confidence Bound (UCB): UCB = μ + κσ, where μ is the predicted property, σ is its uncertainty, and κ is a tunable weight that trades exploration against exploitation. For a property to be minimized, rank by μ - κσ instead.

- Select the 20 candidates with the highest UCB scores for DFT calculation.

- Add these new data points to the training set and retrain the GP model.

- Repeat the prediction, acquisition, selection, and retraining steps for 5-10 cycles. Use the final dataset for robust SHAP analysis, ensuring descriptors are identified from a strategically sampled, information-rich dataset.
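A minimal NumPy sketch of this loop follows. Assumptions: a zero-mean GP with a fixed RBF kernel whose length scale and noise are set by hand (a library such as scikit-learn's `GaussianProcessRegressor` would instead fit them by maximizing the marginal likelihood), κ = 2, eight cycles, and a synthetic `oracle` standing in for DFT on a pool of hypothetical two-descriptor candidates. Each batch simply takes the top-20 UCB scores; batch-diversity refinements are omitted.

```python
import numpy as np


def rbf_kernel(A: np.ndarray, B: np.ndarray, length_scale: float) -> np.ndarray:
    sq_dist = np.sum(A**2, axis=1)[:, None] + np.sum(B**2, axis=1)[None, :] - 2.0 * A @ B.T
    return np.exp(-0.5 * np.maximum(sq_dist, 0.0) / length_scale**2)


def gp_posterior(X_train, y_train, X_test, length_scale=1.0, noise=1e-3):
    """Mean and std of a zero-mean GP with a fixed RBF kernel (targets standardized internally)."""
    y_mu, y_sd = y_train.mean(), y_train.std()
    K = rbf_kernel(X_train, X_train, length_scale) + noise * np.eye(len(X_train))
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, (y_train - y_mu) / y_sd))
    K_star = rbf_kernel(X_train, X_test, length_scale)
    v = np.linalg.solve(L, K_star)
    var = np.clip(1.0 - np.sum(v**2, axis=0), 1e-12, None)   # prior variance k(x, x) = 1
    return K_star.T @ alpha * y_sd + y_mu, np.sqrt(var) * y_sd


def active_learning(X_pool, oracle, n_init=50, batch=20, cycles=8, kappa=2.0, seed=0):
    rng = np.random.default_rng(seed)
    labeled = [int(i) for i in rng.choice(len(X_pool), size=n_init, replace=False)]
    y = {i: oracle(i) for i in labeled}                  # initial "DFT" results
    for _ in range(cycles):
        unlabeled = np.setdiff1d(np.arange(len(X_pool)), labeled)
        mu, sigma = gp_posterior(X_pool[labeled], np.array([y[i] for i in labeled]),
                                 X_pool[unlabeled])
        ucb = mu + kappa * sigma                         # maximize; use mu - kappa * sigma to minimize
        for i in unlabeled[np.argsort(-ucb)[:batch]]:
            labeled.append(int(i))
            y[int(i)] = oracle(int(i))                   # one expensive calculation per pick
    return labeled, y


# Synthetic pool: 2,000 candidates with two hypothetical low-fidelity descriptors.
rng = np.random.default_rng(1)
X_pool = rng.uniform(-2.0, 2.0, size=(2000, 2))
true_prop = np.exp(-np.sum((X_pool - np.array([0.5, -0.5]))**2, axis=1))  # stand-in for DFT
labeled, y = active_learning(X_pool, oracle=lambda i: float(true_prop[i]))
print(f"{len(labeled)} DFT calls; best found {max(y.values()):.3f} vs pool max {true_prop.max():.3f}")
```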

Workflow and Relationship Diagrams

Diagram 1: Tiered computational screening workflow for large libraries.

Diagram 2: Active learning loop for cost-efficient DFT data generation.

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Computational Tools for Scalable Catalyst Screening & SHAP Analysis

| Tool / Solution | Primary Function | Role in Addressing Cost/Scalability |
|---|---|---|
| MACE (MPNN) | Machine Learning Force Fields | Enables energy/force evaluation ~1,000x faster than DFT for initial library pruning and MD simulations. |
| xTB (GFN2) | Semi-empirical Quantum Chemistry | Provides reasonably accurate geometries and energies at roughly 0.1-2% of the per-catalyst cost of GGA-level DFT (Table 1) for intermediate screening tiers. |
| DScribe/Matminer | Descriptor Generation | Automates calculation of hundreds of compositional, structural, and electronic features from atomic structures. |