Overcoming Reproducibility Challenges in Catalysis Research: A Comprehensive Guide to Rigor and Best Practices

This article addresses the critical issue of reproducibility in catalysis research, a challenge that spans computational, homogeneous, and heterogeneous systems.

Abstract

This article addresses the critical issue of reproducibility in catalysis research, a challenge that spans computational, homogeneous, and heterogeneous systems. Aimed at researchers, scientists, and drug development professionals, it provides a structured framework to enhance research rigor. The content moves from foundational concepts—exploring the root causes of irreproducibility—to actionable methodologies, including the adoption of managed workflows and FAIR data principles. It further offers troubleshooting strategies for common experimental pitfalls and establishes a framework for validation through community-driven benchmarks and comparative analysis. By synthesizing insights from recent community reports and case studies, this guide serves as an essential resource for improving the reliability, transparency, and impact of catalytic science, with direct implications for accelerating catalyst discovery and development in biomedical and industrial applications.

Understanding the Reproducibility Crisis in Catalytic Science

Defining Reproducibility and Rigor in Catalysis Contexts

Frequently Asked Questions (FAQs)

Q1: What are the most common sources of irreproducibility in experimental catalysis research? Irreproducibility often stems from undescribed critical process parameters in synthetic protocols and insufficient characterization of the catalytic system [1]. Key factors include:

- Unidentified Impurities: Trace metal contaminants in reagents or solvents can be the actual catalytic species, leading to mechanistic misinterpretations and severe reproducibility problems [2].

- Insufficiently Reported Experimental Conditions: Factors like electrode surface area and geometry in electrochemistry, or photon flux and reactor type in photochemistry, are frequently under-reported yet drastically impact outcomes [3] [4].

- Data and Provenance Issues: Publications sometimes include only intermediary data rather than raw data, use different labels for data in papers versus associated files, or lack all input parameters needed to rerun computational or data analysis workflows [5].

Q2: How can I improve the reproducibility of my catalyst testing and evaluation? Rigorous catalyst testing requires careful attention to reactor design and operation to ensure reported metrics are meaningful [6].

- Eliminate Transport Limitations: Ensure your reactor operates in a regime where chemical kinetics, not fluid flow or diffusive transport, dictate the measured rate.

- Report Intrinsic Kinetics: Avoid reporting data collected at near-complete conversion or near equilibrium. Use differential reactor conditions and report rates (e.g., turnover frequencies) and selectivities, not just conversion [6].

- Standardize Reporting: Clearly detail all reactor parameters, catalyst mass, fluid flow rates, and the method of rate calculation to enable direct comparison.
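The metrics named above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed method: the function names, the example numbers, and the 10% conversion cutoff used to flag "differential" operation are assumptions, since the text does not give a specific threshold.

```python
def turnover_frequency(rate_mol_per_s: float, active_sites_mol: float) -> float:
    """Return the turnover frequency in s^-1 (moles converted per mole of sites per second)."""
    if active_sites_mol <= 0:
        raise ValueError("active_sites_mol must be positive")
    return rate_mol_per_s / active_sites_mol


def is_differential(conversion_fraction: float, cutoff: float = 0.10) -> bool:
    """Flag whether a run is plausibly in the differential regime.

    The default cutoff of 0.10 is an assumed rule of thumb, not a value from the text.
    """
    if not 0.0 <= conversion_fraction <= 1.0:
        raise ValueError("conversion_fraction must lie between 0 and 1")
    return conversion_fraction <= cutoff


# Hypothetical run: 2.0e-7 mol/s converted over 4.0e-6 mol of active sites.
tof = turnover_frequency(2.0e-7, 4.0e-6)
print(f"TOF = {tof:.3f} s^-1, differential: {is_differential(0.06)}")
```

Reporting the site count and the rate separately, as the function signature forces, makes it easier for others to recompute the TOF with a different site-counting method.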

Q3: What tools are available to systematically assess a reaction's sensitivity to parameter changes? The sensitivity screen is a dedicated tool for this purpose. It involves varying single reaction parameters (e.g., concentration, temperature, catalyst loading) in positive and negative directions while keeping others constant [3]. The impact on a target value like yield or selectivity is measured and plotted on a radar diagram. This visually identifies parameters most crucial for reproducibility and aids in efficient troubleshooting [3].

Q4: What are the best practices for ensuring reproducibility in computational catalysis studies? Reproducibility is a cornerstone for computational data-driven science [7].

- Provide All Relevant Data: This includes input files for calculations, Cartesian coordinates of all intermediate species, and output files.

- Share Custom Code: Any specially developed computer code central to the study's claims should be available in the Supplementary Information or an external repository [7].

- Use Accessible Formats: Data and code should be provided in formats that facilitate extraction and subsequent manipulation by other researchers [7].

Troubleshooting Guides

Guide 1: Troubleshooting Failed Reproducibility of a Synthetic Protocol

Problem: You are following a published synthetic procedure for a catalyst but cannot reproduce the reported performance (e.g., yield, selectivity, activity).

| Step | Action | Details & Reference |
|---|---|---|
| 1 | Verify Critical Parameters | Systematically check and adjust parameters identified as highly sensitive to variation. The sensitivity screen methodology is designed for this [3]. |
| 2 | Check for Impurities | Analyze reagents, solvents, and your product for trace metal contaminants. Follow a dedicated guideline to elucidate the real catalyst and exclude the role of impurities [2]. |
| 3 | Assess Equipment Differences | For specialized methods (electro-, photo-chemistry), compare your equipment (e.g., electrode material/surface area, photoreactor type/light source) with the original study. Tiny variations can cause major outcome changes [3]. |
| 4 | Re-examine Reported Data | Check if the original publication provides all necessary raw data and input parameters. Inconsistent data labeling or missing information are common hurdles [5]. |

Guide 2: Troubleshooting Rigor in Catalyst Testing

Problem: Your catalytic performance data (e.g., rate, turnover frequency) varies significantly between experiments or differs from literature values for similar materials.

| Step | Action | Details & Reference |
|---|---|---|
| 1 | Diagnose Transport Effects | Perform experiments to rule out interphase or intraparticle mass/heat transport limitations. The observed rate should be dependent only on the catalyst's intrinsic kinetics [6]. |
| 2 | Calibrate Analytical Systems | Ensure your analytical equipment (e.g., GC, HPLC) is properly calibrated. Use internal standards where appropriate to verify quantitative accuracy. |
| 3 | Confirm Reactor Hydrodynamics | Validate that your reactor operates as assumed (e.g., well-mixed for a batch reactor, plug flow for a fixed-bed reactor). Incorrect hydrodynamics lead to incorrect rate measurements [6]. |
| 4 | Report Comprehensive Metrics | Move beyond just conversion. Report rigorous metrics like turnover frequency (TOF) and selectivity under differential conversion to allow for meaningful comparison [6]. |

Key Research Reagent Solutions & Materials

The following table details essential items and methodologies for enhancing reproducibility in catalysis research.

| Item / Methodology | Function & Importance |
|---|---|
| Sensitivity Screen [3] | An experimental tool to identify critical parameters affecting a reaction's outcome. It systematically tests variations in conditions (e.g., concentration, temperature) to create a robustness profile, guiding troubleshooting and protocol refinement. |
| High-Purity Reagents & Substrates [3] [2] | To prevent trace metal impurities or contaminants from acting as unintended catalytic species, which can lead to erroneous mechanistic conclusions and severe reproducibility issues. |
| Standardized Electrodes [3] | In electrochemistry, using electrodes with specified material, defined surface area, and known geometry is crucial, as these factors significantly impact yield and reproducibility. |
| Characterized Photoreactors [3] | For photochemistry, the reactor type, light source, and photon flux are critical parameters. Using well-characterized systems and reporting these details is essential for reproducibility. |
| RO-Crate (Research Object Crate) [5] | A packaging standard to create a single digital object that includes all inputs, outputs, parameters, and the links between them for a computational workflow. This ensures full provenance and eases reproduction. |
| Process Characterization Tools [1] | Methodologies adapted from other industries (e.g., pharmaceuticals) to identify undescribed but critical process parameters in catalyst synthesis that are the root cause of reproducibility challenges. |

Experimental Protocol: Conducting a Sensitivity Screen

This protocol provides a detailed methodology for assessing the robustness and reproducibility of a catalytic reaction, based on the sensitivity screen described in [3].

1. Principle

The sensitivity screen evaluates how a reaction's target value (e.g., yield, conversion, selectivity) responds to deliberate variations of single parameters away from their standard conditions. This identifies parameters requiring careful control for reproducibility.

2. Preparation

- Define Standard Conditions (SC): Establish the optimal set of reaction parameters.

- Prepare a Master Stock Solution: To minimize experimental error, prepare a single stock solution containing all reactants for the number of tests you will run.

- Select Parameters & Variations: Choose key parameters to test. Common choices include concentration, temperature, catalyst loading, reaction time, and solvent/atmosphere purity. Define a realistic variation for each (e.g., `SC + 10 °C` and `SC - 10 °C`).

3. Procedure

- For each parameter to be tested, set up parallel reactions where only that parameter is varied according to your plan, while all others are kept constant at SC.

- Run the reactions and work them up in an identical manner.

- Analyze the products using a consistent quantitative method (e.g., GC, HPLC with internal standard).

4. Data Analysis & Visualization

- Calculate the target value (e.g., yield) for each variation.

- For each parameter, calculate the absolute deviation from the target value obtained under SC.

- Plot the results on a radar (spider) diagram. Each axis represents one parameter, and the deviation in the target value is plotted. This provides an intuitive visual summary of the reaction's sensitivity.
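The data-analysis step can be sketched as follows. This is a minimal illustration under stated assumptions: the yields are hypothetical placeholders, the parameter names and the `low`/`high` keys are invented for the example, and the deviation is taken as the signed difference in percentage points from the SC result, with ranking based on its magnitude. The resulting values are what would be placed on the axes of the radar diagram; plotting itself is left to whichever library the lab already uses.

```python
# Yield (%) under standard conditions (SC) and for each single-parameter
# variation. All numbers are hypothetical placeholders.
YIELD_SC = 82.0
SCREEN = {
    "temperature": {"low": 74.0, "high": 80.0},
    "concentration": {"low": 81.0, "high": 79.5},
    "catalyst loading": {"low": 60.0, "high": 83.0},
    "water content": {"low": 82.5, "high": 55.0},
}


def deviations(sc_value, results):
    """Return {parameter: {direction: signed deviation from the SC value}}."""
    return {
        name: {direction: value - sc_value for direction, value in runs.items()}
        for name, runs in results.items()
    }


def rank_by_sensitivity(devs):
    """Sort parameters by their largest deviation magnitude, most sensitive first."""
    return sorted(
        devs,
        key=lambda name: max(abs(d) for d in devs[name].values()),
        reverse=True,
    )


devs = deviations(YIELD_SC, SCREEN)
for name in rank_by_sensitivity(devs):
    low, high = devs[name]["low"], devs[name]["high"]
    print(f"{name:>18}: low {low:+.1f}, high {high:+.1f} percentage points")
```

Keeping the sign of each deviation preserves the direction of the effect, which helps when deciding whether a parameter needs an upper limit, a lower limit, or both.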

Sensitivity Screen Experimental Workflow

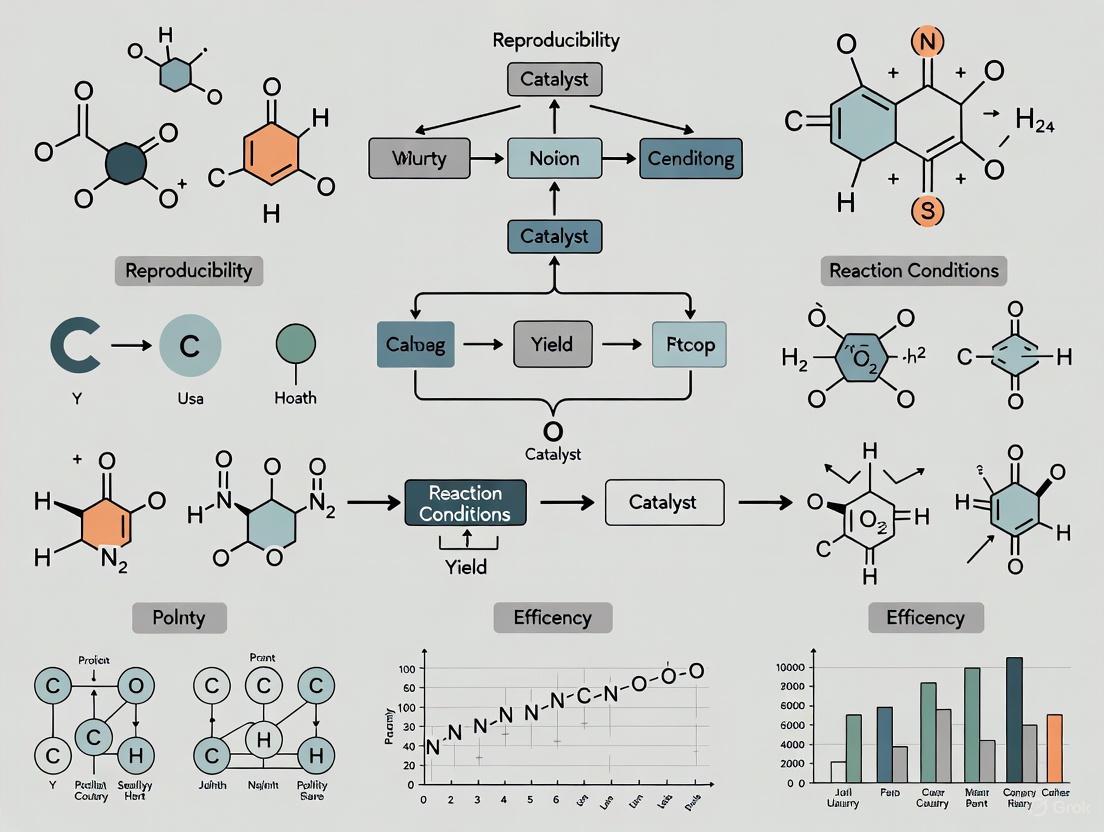

Conceptual Diagram: A Multi-Faceted Strategy for Reproducibility

Figure: A Multi-Faceted Strategy for Reproducibility. The diagram illustrates the interconnected strategies, derived from recent literature, for overcoming reproducibility challenges in catalysis research.

This technical support resource synthesizes current best practices and emerging tools to help researchers diagnose, troubleshoot, and prevent reproducibility issues, thereby strengthening the foundation of catalytic science.

Reproducibility is a fundamental requirement for scientific reliability, yet research across multiple disciplines faces a significant reproducibility crisis. In catalysis research, ensuring that experimental protocols can be consistently replicated is particularly challenging due to inherent experimental complexity, specialized workflows, and the sensitivity of catalytic systems [1]. A 2016 survey revealed that in biology alone, over 70% of researchers were unable to reproduce others' findings, and approximately 60% could not reproduce their own results [8]. The financial impact is substantial: an estimated $28 billion per year is spent on non-reproducible preclinical research in the United States alone [8].

This technical support center addresses the key sources of irreproducibility, with particular focus on data provenance and metadata reporting issues that commonly affect catalysis research and related fields.

Quantitative Impact of Irreproducibility

Table 1: Survey Data on Research Reproducibility Challenges

| Field or Journal | Researchers Unable to Reproduce Others' Work | Researchers Unable to Reproduce Their Own Work | Key Contributing Factors |
|---|---|---|---|
| Biology | >70% | ~60% | Lack of methodological details, raw data access, biological material issues [8] |
| Psychology | ~40-65% (varies by study) | N/A | Selective reporting, analytical flexibility, cognitive biases [8] [9] |
| Journal of Memory and Language | 34-56% (after open data policy) | N/A | Insufficient data/code sharing despite policies [10] |
| Psychological Science | 60% (non-reproducible despite open data badges) | N/A | Incomplete data sharing, methodological ambiguity [10] |
| Science (Computational) | 74% (non-reproducible) | N/A | Changing computational environments, specialized infrastructure [10] |

Frequently Asked Questions: Troubleshooting Reproducibility

Data Provenance & Metadata Issues

Q: What is data provenance and why is it critical for reproducible catalysis research?

Data provenance is the documentation of why, how, where, when, and by whom data was produced. It captures the historical record of data as it moves through various processes and transformations [11] [12]. In catalysis research, this is particularly important because:

- It enables interpretation and reuse of complex experimental data

- It builds trust, credibility and reproducibility by documenting origins and transformations [11]

- It allows researchers to trace the origins of data discrepancies and identify where data quality issues arise [12]

Q: Our team is struggling to reproduce XAS catalysis experiments from publications. What key metadata should we document?

Based on analysis of reproducibility challenges in X-ray Absorption Spectroscopy (XAS) for catalysis research [5], ensure you capture:

- Raw experimental data rather than only intermediary processed forms

- All input parameters needed for each step of the analysis process

- Consistent labeling between data objects and final publication

- Processing workflows with all parameter settings and software versions

Table 2: Essential Research Reagent Solutions for Reproducible Catalysis Research

| Reagent/Material | Function | Reproducibility Considerations |
|---|---|---|
| Nickel-iron-based oxygen evolution electrocatalysts | Electrocatalysis studies | Batch-to-batch variability; synthesis protocol parameters [1] |
| Authenticated, low-passage reference materials | Biomaterial-based research | Verification of phenotypic and genotypic traits; lack of contaminants [8] |
| Cell lines and microorganisms | Biological catalysis studies | Authentication to avoid misidentified or cross-contaminated materials [8] |
| Specialized solvents and precursors | Chemical synthesis | Source documentation, purity verification, lot number tracking |

Experimental Protocol & Workflow Issues

Q: How can we improve the reproducibility of our electrocatalysis experimental protocols?

A global interlaboratory study of nickel-iron-based oxygen evolution electrocatalysts revealed substantial reproducibility challenges originating from undescribed but critical process parameters [1]. Implement these practices:

- Use managed computational workflows (like Galaxy) that generate Research Object Crates (RO-Crates) containing all inputs, outputs, parameters and links between them [5]

- Apply process characterization tools from pharmaceutical industry to identify critical parameters [1]

- Document key assumptions, parameters, and algorithmic choices thoroughly so others can test robustness [10]

Q: What are the most common sources of error in experimental design that affect reproducibility?

- Inadequate controls: Failure to include appropriate positive and negative controls

- Sample quality issues: Using misidentified, cross-contaminated, or over-passaged biological materials [8]

- Unmanaged complexity: Inability to handle complex datasets without proper tools and knowledge [8]

- Insufficient documentation: Lack of thorough methods description including blinding, replicates, standards, and statistical analysis [8]

Technical Troubleshooting Guides

Q: We cannot reproduce published computational results for our catalysis data analysis. What should we check?

Follow this systematic troubleshooting approach adapted from molecular biology protocols [13]:

- Identify the problem precisely: Which specific results cannot be reproduced?

- List all possible explanations: Software versions, operating system differences, parameter defaults, random seed variations, missing dependencies [10]

- Collect data: Document your environment details, software versions, and compare with original publication

- Eliminate explanations: Systematically test each potential cause

- Check with experimentation: Run simplified test cases, verify with different parameters

- Identify the cause: Pinpoint the specific factor preventing reproduction [13]
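For the "collect data" step, an environment snapshot can be recorded alongside every analysis run and compared with whatever the original publication reports. The sketch below uses only the Python standard library; the function name, the package list, and the seed value are illustrative assumptions.

```python
import json
import platform
import sys
from importlib import metadata


def environment_snapshot(packages, random_seed=None):
    """Collect interpreter, operating system, and package versions into a plain dict."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None  # package is not installed in this environment
    return {
        "python_version": sys.version.split()[0],
        "platform": platform.platform(),
        "packages": versions,
        "random_seed": random_seed,
    }


# Hypothetical package list and seed; replace with those used in the analysis.
snapshot = environment_snapshot(["numpy", "scipy"], random_seed=42)
print(json.dumps(snapshot, indent=2))
```

Storing this JSON next to the results gives a concrete starting point for the "eliminate explanations" step, because version or seed differences become visible in a simple diff.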

Q: Our catalysis experiments show high variance between operators. How can we standardize our procedures?

Troubleshooting Steps:

- Protocol Documentation: Create step-by-step protocols with explicit instructions, avoiding ambiguous terms [14]

- Operator Training: Implement formal training sessions using the "Pipettes and Problem Solving" approach where experienced researchers present scenarios with unexpected outcomes [14]

- Control Experiments: Include standardized control experiments in each run to monitor technique consistency

- Blinded Assessment: Where possible, implement blinding to reduce operator bias [8]

Best Practices for Enhancing Reproducibility

Data Provenance Implementation

For computational catalysis research:

- Use workflow management systems (like Kepler, Galaxy, or Taverna) that automatically capture provenance [11] [12]

- Generate Research Object Crates (RO-Crates) that package all inputs, outputs, parameters and their relationships [5]

- Apply standard provenance models (W3C PROV-DM, PROV-O) for machine-readable provenance [11]
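To make the RO-Crate idea concrete, the following sketch builds the skeleton of an `ro-crate-metadata.json` document by hand. It is a simplified illustration, assuming the RO-Crate 1.1 layout (a metadata descriptor, a root dataset, and one entity per file). The file names and descriptions are hypothetical, the result is not validated against the specification, and a crate intended for publication would normally carry further properties (for example a license and publication date) and be generated by a workflow system rather than written manually.

```python
import json


def minimal_ro_crate(name, description, files):
    """Build a minimal RO-Crate 1.1 style metadata document as a dict.

    `files` maps a relative file path to a short human-readable description.
    """
    file_entities = [
        {"@id": path, "@type": "File", "description": text}
        for path, text in files.items()
    ]
    return {
        "@context": "https://w3id.org/ro/crate/1.1/context",
        "@graph": [
            {   # descriptor that identifies this JSON file as the crate metadata
                "@id": "ro-crate-metadata.json",
                "@type": "CreativeWork",
                "conformsTo": {"@id": "https://w3id.org/ro/crate/1.1"},
                "about": {"@id": "./"},
            },
            {   # root dataset that lists every packaged file
                "@id": "./",
                "@type": "Dataset",
                "name": name,
                "description": description,
                "hasPart": [{"@id": path} for path in files],
            },
            *file_entities,
        ],
    }


# Hypothetical contents of an analysis crate.
crate = minimal_ro_crate(
    name="Example XAS analysis",
    description="Raw spectra, workflow parameters, and fitted results.",
    files={
        "raw/spectrum_001.dat": "Raw spectrum as collected",
        "params/fit_settings.json": "Input parameters for the fitting step",
        "results/fit_summary.csv": "Output of the fitting step",
    },
)
print(json.dumps(crate, indent=2))
```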

For experimental catalysis research:

- Implement data collection manifests that track data from origin through all transformations [15]

- Capture critical metadata: date created, creator, instrument parameters, processing methods [11]

- Use both human-readable (README files) and structured, machine-readable provenance formats [11]
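A data collection manifest of the kind described above can be as simple as a list of files with checksums, a creator, and a timestamp. The sketch below is one possible layout, not a standard: the field names, the file name, and the `operator-A` creator string are assumptions made for illustration.

```python
import hashlib
import json
import tempfile
from datetime import datetime, timezone
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(data_dir: Path, creator: str) -> dict:
    """List every file under data_dir with its size and checksum."""
    entries = [
        {
            "file": path.relative_to(data_dir).as_posix(),
            "bytes": path.stat().st_size,
            "sha256": sha256_of(path),
        }
        for path in sorted(data_dir.rglob("*"))
        if path.is_file()
    ]
    return {
        "creator": creator,
        "created": datetime.now(timezone.utc).isoformat(),
        "files": entries,
    }


# Demonstration on a throwaway directory holding one hypothetical raw-data file.
with tempfile.TemporaryDirectory() as tmp:
    raw = Path(tmp) / "run_001_raw.csv"
    raw.write_bytes(b"time_s,signal\n0,0.12\n1,0.15\n")
    manifest = build_manifest(Path(tmp), creator="operator-A")
    print(json.dumps(manifest, indent=2))
```

Recomputing the checksums after each processing step shows whether a file that is supposed to be raw data has been modified along the way.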

Metadata Reporting Standards

Essential metadata for reproducible catalysis experiments:

Table 3: Critical Metadata Categories for Reproducible Research

| Metadata Category | Specific Elements to Document | Tools/Standards |
|---|---|---|
| Experimental Conditions | Temperature, pressure, solvent composition, catalyst loading, time | Domain-specific standards |
| Instrument Parameters | Equipment model, calibration dates, software versions, settings | Instrument metadata schemas |
| Data Processing | Software versions, parameters, algorithms, random seeds | Workflow systems, RO-Crate [5] |
| Sample Provenance | Source, preparation method, characterization data, storage conditions | Electronic lab notebooks |
| Personnel & Protocol | Operator, date, protocol version, modifications | Version control systems |
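A checklist like Table 3 is easier to enforce when it is machine-checkable. The sketch below flags missing entries in an experiment record before it is archived. The category and field names are a hypothetical encoding of a subset of the table, not an established schema, and the example record is invented.

```python
# Required fields per metadata category (an assumed subset of Table 3).
REQUIRED_FIELDS = {
    "experimental_conditions": ["temperature", "pressure", "solvent", "catalyst_loading", "time"],
    "instrument_parameters": ["model", "calibration_date", "software_version"],
    "data_processing": ["software_version", "parameters", "random_seed"],
    "sample_provenance": ["source", "preparation_method", "storage_conditions"],
    "personnel_protocol": ["operator", "date", "protocol_version"],
}


def missing_metadata(record):
    """Return 'category.field' strings for every required entry that is absent or empty."""
    missing = []
    for category, fields in REQUIRED_FIELDS.items():
        section = record.get(category, {})
        for field in fields:
            value = section.get(field)
            if value is None or value == "":
                missing.append(f"{category}.{field}")
    return missing


# Hypothetical, deliberately incomplete record.
record = {
    "experimental_conditions": {
        "temperature": "353 K", "pressure": "1 bar", "solvent": "MeCN",
        "catalyst_loading": "2 mol%", "time": "16 h",
    },
    "instrument_parameters": {"model": "GC-FID", "calibration_date": "", "software_version": "2.1"},
    "data_processing": {"software_version": "1.4.0", "parameters": {"baseline": "linear"}, "random_seed": 0},
    "personnel_protocol": {"operator": "A.B.", "date": "2024-01-15", "protocol_version": "v3"},
}

for item in missing_metadata(record):
    print("missing:", item)
```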

Cultural & Procedural Improvements

- Publish negative results: Create avenues for sharing non-confirmatory results to avoid publication bias [8]

- Pre-register studies: Register proposed studies including approaches before initiation to reduce analytical flexibility [8]

- Implement data governance: Establish frameworks with rules and standards for data management and provenance tracking [12]

- Foster collaboration: Encourage team problem-solving approaches like "Pipettes and Problem Solving" where groups work together to identify sources of experimental problems [14]

Addressing irreproducibility requires both technical solutions and cultural shifts. By implementing robust data provenance systems, comprehensive metadata reporting, and systematic troubleshooting approaches, catalysis researchers can significantly enhance the reliability and reproducibility of their findings. Universities and research institutes play a critical role in providing tools, training, and incentives that support these practices [10].

The reproducibility journey begins with recognizing that even seemingly mundane factors—such as aspiration technique in cell washing [14] or undescribed critical process parameters in electrocatalysis [1]—can substantially impact experimental outcomes. Through diligent attention to provenance, metadata, and systematic troubleshooting, the catalysis research community can build a more solid foundation for scientific advancement.

Troubleshooting Guide: Common Experimental Failures

This guide helps researchers identify and resolve common issues that undermine reproducibility in catalysis research.

Symptom: Inconsistent Catalyst Performance Between Batches

Potential Cause 1: Undescribed Critical Process Parameters

- Diagnosis: The synthesis protocol lacks sufficient detail on parameters that significantly impact the final catalyst's properties, such as exact temperature ramping rates, mixing speeds, or aging times. A global study found these undescribed parameters are a primary source of reproducibility challenges [1].

- Solution: Implement a Process Characterization Tool (common in the pharmaceutical industry) to systematically identify and control these critical parameters. Document every variable meticulously in the experimental section [1].

Potential Cause 2: Improper Sample Preparation and Selection

- Diagnosis: Catalyst samples are not representative of the entire batch, or the preparation environment does not mirror real-world operating conditions [16].

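- Solution: Select catalyst samples that are representative of the whole batch, and prepare the testing environment so that it mirrors real operating conditions [16].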

Symptom: Poor Replication of Published Kinetic Data

- Potential Cause: Incorrect Assumptions About Experimental Errors

- Diagnosis: Assuming experimental errors are constant, follow a normal distribution, and are independent of reaction conditions. Research shows errors in catalytic tests can vary strongly with factors like temperature, and measurements can be significantly correlated [17].

- Solution: Properly characterize the covariance matrix of experimental errors instead of relying on simplifying assumptions. This is crucial for meaningful parameter estimation and statistical interpretation of kinetic models [17].

Symptom: Catalyst Deactivation or Unexpected Selectivity

- Potential Cause: Presence of Catalyst Poisons or Feed Impurities

- Diagnosis: The reactant feed contains trace impurities (e.g., sulfur compounds) that poison the active sites of the catalyst, or the catalyst undergoes thermal sintering.

- Solution:

  - Implement rigorous feed purification steps.

  - Perform thorough catalyst characterization (e.g., BET surface area, chemisorption) before and after reaction to identify changes in the catalyst's physical and chemical properties.

Frequently Asked Questions (FAQs)

Q1: What are the most critical factors leading to the "reproducibility crisis" in catalysis? The primary factors include insufficiently detailed experimental protocols that omit critical process parameters, a lack of proper characterization of experimental errors, and the inherent sensitivity and complexity of heterogeneous catalytic systems [1] [17] [18].

Q2: How can I improve the reproducibility of my catalyst synthesis? Define clear objectives, choose representative catalyst samples, and prepare the testing environment to precisely mirror real operating conditions. Most importantly, use a systematic approach to identify, control, and document all critical process parameters [1] [16].

Q3: Why do my model's parameter estimates and predictions have high uncertainty?

This often stems from an improper characterization of experimental errors. If the covariance matrix of errors ($\bar{\bar{V}}_{\chi}$) is not known correctly, the subsequent calculation of the parameter covariance matrix ($\bar{\bar{V}}_{\beta}$) and prediction errors ($\hat{\bar{\bar{V}}}_{\chi}$) will be statistically meaningless [17].

Q4: What is the role of error analysis in model building for catalysis? Proper error analysis is paramount. It specifies data quality and ensures the significance of your kinetic models and parameter estimates. Without it, statistical interpretations and conclusions about catalyst performance may be unreliable [17].

Data Presentation: Experimental Error Variations

The table below summarizes how experimental errors can depend on reaction conditions, based on a study of the combined carbon dioxide reforming and partial oxidation of methane over a Pt/γ-Al2O3 catalyst [17].

Table 1: Dependence of Concentration Standard Deviations on Reaction Temperature

| Reaction Component | Standard Deviation at ~600°C | Standard Deviation at ~900°C | Trend & Implications |
|---|---|---|---|
| CH₄ | 0.0300 | 0.0005 | Sharp decrease. Errors are not constant; assuming so leads to oversimplification. |
| CO | 0.0200 | 0.0010 | Sharp decrease. The amount of information from each data point varies with temperature. |
| H₂ | 0.0400 | 0.0015 | Sharp decrease. Error structure can contain information about the reaction mechanism. |
| CO₂ | 0.0100 | 0.0010 | Decrease. Highlights the need for proper error characterization in kinetic analysis. |

Experimental Protocols

Protocol 1: Standardized Catalyst Performance Test

This protocol outlines a general method for evaluating catalyst activity and selectivity in a laboratory reactor [16].

- Reactor Setup: Use a tube reactor with a temperature-controlled furnace and mass flow controllers for gases.

- System Connection: Connect the reactor output directly to analytical instruments like a Gas Chromatograph (GC) equipped with a Flame Ionization Detector (FID) or a CO detector.

- Condition Establishment: Set the temperature, pressure, and feed composition to match the desired reaction conditions.

- Data Collection: Once steady-state is achieved, record the concentrations of reactants and products at the reactor outlet.

- Performance Calculation:

  - Conversion (%) = `[(moles of reactant in - moles of reactant out) / (moles of reactant in)] * 100`

  - Selectivity to Product A (%) = `[(moles of product A formed) / (total moles of reactant converted)] * 100`
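The two performance formulas translate directly into code. The sketch below follows them as written, so stoichiometric coefficients are not applied; the function names and the example mole quantities are assumptions for illustration.

```python
def conversion_percent(moles_in: float, moles_out: float) -> float:
    """Conversion (%) = (moles in - moles out) / moles in * 100."""
    if moles_in <= 0:
        raise ValueError("moles_in must be positive")
    return (moles_in - moles_out) / moles_in * 100.0


def selectivity_percent(moles_product: float, moles_in: float, moles_out: float) -> float:
    """Selectivity (%) = moles of product formed / moles of reactant converted * 100."""
    converted = moles_in - moles_out
    if converted <= 0:
        raise ValueError("no reactant was converted; selectivity is undefined")
    return moles_product / converted * 100.0


# Hypothetical steady-state measurement: 1.00 mol fed, 0.80 mol leaving,
# 0.15 mol of product A formed.
x = conversion_percent(1.00, 0.80)
s = selectivity_percent(0.15, 1.00, 0.80)
print(f"conversion = {x:.1f} %, selectivity to A = {s:.1f} %")
```

Raising an error when nothing is converted avoids silently reporting a selectivity computed from a zero or negative denominator, which can happen when analytical noise exceeds the true conversion.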

Protocol 2: Characterizing Experimental Errors for Kinetic Modeling

This methodology is essential for obtaining reliable kinetic parameters [17].

- Repeated Measurements: Conduct multiple experimental runs at identical operating conditions to capture the inherent variability of the measurements.

- Covariance Matrix Calculation: For each set of conditions, calculate the covariance matrix ($\bar{\bar{V}}_{\chi}$) of the experimental measurements (e.g., concentrations, conversions). This matrix captures the variances and the correlations between different measured variables.

- Error Pattern Analysis: Analyze how the standard deviations and covariances change with reaction conditions (e.g., temperature). Do not assume they are constant.

- Model Fitting: Use the calculated covariance matrix in a weighted least-squares estimation procedure to determine kinetic parameters. The objective function $F$ should be defined as $F = (\bar{\chi} - \bar{\chi}_e)^{T} (\bar{\bar{V}}_{\chi})^{-1} (\bar{\chi} - \bar{\chi}_e)$, where $\bar{\chi}$ is the model prediction and $\bar{\chi}_e$ is the experimental measurement vector.
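The protocol can be sketched in plain Python as follows. This is an illustration under stated assumptions, not the procedure of [17]: the replicate values and the model prediction are hypothetical, the sample covariance uses the usual n - 1 denominator, and the product of the inverse covariance matrix with the residual is obtained by solving a linear system rather than by forming the inverse explicitly.

```python
def covariance_matrix(replicates):
    """Sample covariance (n - 1 denominator) of replicate measurement vectors."""
    n = len(replicates)
    if n < 2:
        raise ValueError("at least two replicates are required")
    m = len(replicates[0])
    means = [sum(run[j] for run in replicates) / n for j in range(m)]
    return [
        [
            sum((run[i] - means[i]) * (run[j] - means[j]) for run in replicates) / (n - 1)
            for j in range(m)
        ]
        for i in range(m)
    ]


def solve(matrix, rhs):
    """Solve matrix @ x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if aug[pivot][col] == 0.0:
            raise ValueError("covariance matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        known = sum(aug[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (aug[i][n] - known) / aug[i][i]
    return x


def objective(predicted, measured, cov):
    """F = r^T V^-1 r with r = predicted - measured."""
    residual = [p - m for p, m in zip(predicted, measured)]
    weighted = solve(cov, residual)  # equals V^-1 r
    return sum(r * w for r, w in zip(residual, weighted))


# Hypothetical replicate runs at one condition: two measured mole fractions each.
replicates = [[0.30, 0.10], [0.32, 0.12], [0.29, 0.11], [0.31, 0.09]]
v_chi = covariance_matrix(replicates)
mean_measurement = [sum(col) / len(replicates) for col in zip(*replicates)]
print("F =", objective([0.31, 0.10], mean_measurement, v_chi))
```

Repeating the covariance calculation at each temperature, instead of pooling all runs, is what allows the error structure to vary with reaction conditions as the protocol requires.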

Workflow and Relationship Diagrams

Troubleshooting Workflow for Catalysis Research

Robust Experimental Protocol Flowchart

The Scientist's Toolkit: Essential Research Reagents & Materials

Table 2: Key Materials and Their Functions in Catalysis Testing

| Item | Function & Purpose |
|---|---|
| Tube Reactor | The core vessel where the catalytic reaction takes place under controlled conditions [16]. |
| Temperature-Controlled Furnace | Heats the reactor to precisely maintain the desired reaction temperature [16]. |
| Mass Flow Controllers | Precisely regulate the flow rates of gaseous reactants entering the reactor [16]. |
| Gas Chromatograph (GC) | An analytical instrument used to separate and quantify the composition of the reactor effluent stream (products and unreacted feed) [16]. |
| Pt/γ-Al₂O₃ Catalyst | A common heterogeneous catalyst used in reforming reactions; platinum is the active metal, and gamma-alumina is the high-surface-area support [17]. |
| Process Characterization Tool | A methodology (adapted from pharmaceuticals) to identify and control critical parameters that affect catalyst synthesis reproducibility [1]. |

The Impact of Irreproducibility on Scientific Progress and Drug Development Timelines

Technical Support Center: Troubleshooting Guides

Guide 1: Troubleshooting Experimental Irreproducibility

Problem: An experiment, which previously yielded consistent results, now produces inconsistent and highly variable data, making interpretation difficult.

Detailed Steps:

- Verify Biological Materials: Cross-contaminated or misidentified cell lines are a major source of irreproducibility [8]. Check the authentication status of cell lines using STR profiling. Test for mycoplasma or bacterial contamination, which can alter experimental outcomes [8].

- Audit Reagents & Kits: Review the storage conditions and expiration dates of all critical reagents, especially enzymes and antibodies [19]. If possible, test a new aliquot from a different lot number to rule out reagent degradation.

- Review Data Management: Ensure an auditable record exists from raw data to the analysis file. Check data management programs for errors and confirm the correct version of analysis scripts is used [20].

- Scrutinize Experimental Protocol: Evaluate if the experiment was sufficiently blinded to prevent unconscious bias [20]. Confirm that samples were properly randomized and that the number of replicates is statistically adequate to detect an effect [8] [19].

- Identify Root Cause & Implement Solution: Based on the audit above, pinpoint the most likely cause. Implement a corrective action, such as acquiring new authenticated cell lines, revising the protocol to include more replicates, or standardizing reagent aliquoting.
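Randomization and blinding, mentioned in the protocol-scrutiny step, can be prepared before the experiment starts. The sketch below shuffles the run order with a recorded seed and assigns neutral codes to the samples. The code format, sample names, and seed are hypothetical, and in practice the key linking codes to samples would be held by someone other than the operator.

```python
import random


def randomized_blinded_plan(sample_ids, seed):
    """Return {blinded code: sample id}, listed in randomized run order."""
    rng = random.Random(seed)  # a recorded seed makes the plan reproducible
    order = list(sample_ids)
    rng.shuffle(order)
    return {f"S{position:03d}": sample for position, sample in enumerate(order, start=1)}


# Hypothetical samples: three catalyst batches plus a blank control.
samples = ["batch-A", "batch-B", "batch-C", "blank"]
key = randomized_blinded_plan(samples, seed=2024)
for code in key:
    print(code)  # the operator sees only the codes, in run order
```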

Guide 2: Troubleshooting a Failed Catalysis Experiment Reproduction

Problem: Inability to reproduce the results of a published catalysis study using the provided methodology.

Detailed Steps:

- Check Data Provenance: A common hurdle is that publications often include only processed or intermediary data, not the raw data collected from the instrument [5]. Contact the corresponding author to request the raw data files.

- Verify Input Parameters: The published methods may omit minor but critical input parameters for the data analysis workflow [5]. Scrutinize the methods section and supplementary information for any missing details on software settings, data filtering thresholds, or normalization procedures.

- Clarify Data Labeling: Inconsistent labeling between the figures in the paper and the associated data files can lead to confusion and incorrect data processing [5]. Carefully cross-reference all labels and descriptors.

- Adopt Managed Workflows: To overcome these issues, use computational platforms like Galaxy that support managed workflows and the creation of Research Object Crates (RO-Crates) [5]. These tools automatically bundle all inputs, outputs, parameters, and the links between them into a single, reproducible digital object.

- Achieve Reproduction: Using a fully documented workflow ensures that every step of the analysis is traceable, allowing for the exact reproduction of the original results and providing a sustainable model for future work [5].

Frequently Asked Questions (FAQs)

FAQ 1: What is the difference between reproducibility and replicability in science?

There is no universal agreement on these terms, but a common framework distinguishes them as follows [9]:

- Reproducibility refers to the ability to obtain consistent results using the same input data and computational methods as the original study. It focuses on the transparency of the analytical process [21] [9].

- Replicability (or replication) refers to the ability to confirm a study's findings by collecting new data, often using an independent study design but testing the same underlying hypothesis [21] [9]. The American Society for Cell Biology further breaks this down into direct, analytic, systemic, and conceptual replication [8].

FAQ 2: How widespread is the irreproducibility problem?

Evidence suggests the issue is significant. A 2016 survey of scientists found that over 70% of researchers have been unable to reproduce another scientist's experiments, and approximately 60% have been unable to reproduce their own findings [8] [19]. In drug development, the problem is stark: one study attempted to confirm findings from 53 "landmark" preclinical cancer studies and succeeded in only 6 cases (approximately 11%) [20].

FAQ 3: What are the primary factors contributing to irreproducible research?

The causes are multifaceted and often interconnected. Key factors include [8] [19]:

- Inadequate experimental design and statistics: Poorly designed studies, low statistical power, and inappropriate analysis.

- Lack of access to raw data, code, and methodologies.

- Use of unauthenticated or contaminated biological materials.

- Cognitive and selection biases, such as confirmation bias.

- A competitive culture that rewards novel, positive results over robust, negative ones.

FAQ 4: What are the financial and temporal costs of irreproducibility in drug development?

Irreproducibility has a profound impact, wasting significant resources and time. A meta-analysis estimated that $28 billion per year is spent on non-reproducible preclinical research in the U.S. alone [8]. The overall drug development process is already extraordinarily long, typically taking 12-13 years from discovery to market, with a failure rate of over 90% for drugs entering clinical trials [22] [23]. Irreproducibility in early, preclinical stages exacerbates this timeline by advancing flawed candidates that later fail in costly human trials [22].

FAQ 5: What practical steps can my lab take today to improve reproducibility?

- Implement robust sharing: Share all raw data, protocols, and code via public repositories [8].

- Use authenticated reagents: Use validated, low-passage cell lines and characterized antibodies, and routinely check for contaminants [8].

- Pre-register studies: Publicly pre-register your study design and analysis plan to reduce selective reporting [8].

- Publish negative results: Support journals and platforms that publish well-conducted studies with null or negative results [8] [19].

- Formalize training: Provide formal training for all lab members in experimental design, statistical analysis, and troubleshooting methodologies [19] [14].

Quantitative Data on Reproducibility and Drug Development

Survey Data on the Reproducibility Crisis

Table: Evidence of the Reproducibility Challenge

| Field of Research | Nature of the Evidence | Key Finding | Source |

|---|---|---|---|

| General Biology | Survey of 1,500 scientists | >70% of researchers have failed to reproduce another's experiment; ~60% have failed to reproduce their own. | [8] |

| Psychology | Replication of 100 representative studies | Only 36% of replications had statistically significant results; <50% were subjectively successful. | [20] |

| Oncology (Preclinical) | Attempt to confirm 53 "landmark" studies | Findings from only 6 studies (11%) were confirmed. | [20] |

| Drug Development (Preclinical) | Review of validation studies | Only 20-25% of studies were "completely in line" with original reports. | [20] |

The Drug Development Pipeline and Attrition

Table: Typical Timeline and Attrition in Drug Development

| Development Stage | Typical Duration | Number of Compounds | Key Reasons for Failure / Challenges |

|---|---|---|---|

| Discovery & Preclinical | 3-6 years | 5,000 - 10,000 down to ~100-200 leads | Lack of efficacy in models, toxicity, poor drug-like properties [23]. |

| Phase I Clinical Trials | Several months - 1 year | ~100-200 down to ~60-140 | Unexpected human toxicity, intolerable side effects, poor pharmacokinetics [23]. |

| Phase II Clinical Trials | 1-2 years | ~60-140 down to ~18-49 | Inadequate efficacy in patients, emerging safety issues [23]. |

| Phase III Clinical Trials | 2-4 years | ~18-49 down to 1 | Insufficient efficacy in large trials, long-term safety problems, commercial decisions [23]. |

| Regulatory Review | 0.5 - 1 year | 1 approved drug | Incomplete data, manufacturing issues, risk-benefit assessment [23]. |

| TOTAL | 12-13 years | ~10,000 → 1 | High failure rates at each stage, often linked to translational gaps from preclinical models [22] [23]. |

The Scientist's Toolkit: Essential Research Reagent Solutions

Table: Key Reagents for Ensuring Reproducible Research

| Reagent / Material | Critical Function | Best Practices for Reproducibility |

|---|---|---|

| Cell Lines | Fundamental model systems for in vitro biology. | Regularly authenticate using STR profiling or other methods. Test frequently for mycoplasma contamination. Avoid long-term serial passaging to prevent genetic drift. Use early-passage, frozen stocks [8]. |

| Antibodies | Key reagents for detecting specific proteins (e.g., in Western blot, IHC). | Use validated antibodies from reputable sources. Report clone/catalog numbers. Include relevant controls (e.g., knockout cell lines) to confirm specificity [19]. |

| Chemical Inhibitors/Compounds | Tools to modulate biological pathways. | Verify purity and stability. Use appropriate vehicle controls. Confirm target engagement in your specific assay system. |

| Reference Materials | Authenticated, traceable biomaterials (e.g., NIST standards). | Use as positive controls and for calibrating assays. They provide a baseline for comparing results across experiments and laboratories [8]. |

| Competent Cells | Essential for molecular cloning and plasmid propagation. | Check transformation efficiency with control plasmid upon receipt. Properly store at -80°C to maintain efficiency over time [24]. |

Community Initiatives and the Growing Push for Standardization

Frequently Asked Questions: Troubleshooting Reproducibility

Q1: What are the most common sources of reproducibility issues in catalysis experiments? Reproducibility problems often stem from undescribed critical process parameters in synthesis protocols, variations in catalyst activation (like pyrolysis or annealing temperatures), and inconsistencies in sample preparation or characterization equipment [1] [4]. Non-standardized reporting of experimental methods makes these issues difficult to detect and correct.

Q2: My catalytic reaction yields inconsistent results. What should I check first? First, repeat the experiment to rule out simple human error [25]. Then, systematically verify your equipment and materials: check calibration of instruments like mass spectrometers or GC inlets, confirm reagent storage conditions and integrity, and ensure consistent sample preparation techniques [26] [14].

Q3: How can I improve the reproducibility of my catalyst synthesis procedures? Implement detailed documentation of all critical parameters including temperature ramps, atmosphere, duration, and solvent sources [4] [14]. Use high-throughput screening systems where possible to conduct parametric studies that identify sensitive variables [27] [28]. Follow emerging guidelines for machine-readable protocol reporting to enhance standardization [4].

Q4: What controls should I include to validate my catalysis experiments? Always include appropriate positive and negative controls [25]. For catalyst testing, this may include materials with known activity, blanks to detect contamination, and replicates to measure experimental variance. Proper controls help determine if unexpected results indicate protocol problems or legitimate scientific findings [25] [14].

Q5: How can our research group systematically improve troubleshooting skills? Consider implementing formal troubleshooting training like "Pipettes and Problem Solving" sessions, where researchers work through hypothetical experimental failures to develop diagnostic skills [14]. These structured exercises teach methodical approaches to identifying error sources while fostering collaborative problem-solving.

Troubleshooting Guides

Guide 1: Addressing Poor Reproducibility in Catalyst Performance Testing

Problem: Measured catalyst activity or selectivity shows high variability between identical experiments.

Troubleshooting Steps:

Verify analytical system function

Assess sample preparation consistency

- Document and standardize all weighing, mixing, and purification steps

- Verify solvent purity and degassing procedures

- Confirm catalyst loading consistency through multiple preparation batches [25]

Evaluate reactor system integrity

- Check for leaks in pressurized systems

- Verify temperature uniformity in reactor zones

- Confirm gas flow rates and mixing efficiency

- Monitor for catalyst bed channeling or settling variations [26]

Implement statistical process control

- Run standard catalyst materials regularly to track system performance over time

- Use control charts to detect trends or shifts in measured activities

- Establish quality control limits for key performance metrics [28]
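The control-chart idea above can be illustrated with a minimal sketch. It assumes a Shewhart-style chart with limits at the baseline mean plus or minus three sample standard deviations; the function names and the conversion values are hypothetical and are not taken from the cited sources.

```python
from statistics import mean, stdev


def control_limits(baseline, k=3.0):
    """Return (lower, center, upper) limits computed from baseline runs."""
    center = mean(baseline)
    spread = stdev(baseline)  # sample standard deviation of the baseline
    return center - k * spread, center, center + k * spread


def out_of_control(values, limits):
    """Return the indices of measurements that fall outside the limits."""
    lower, _, upper = limits
    return [i for i, v in enumerate(values) if v < lower or v > upper]


# Hypothetical conversions (%) measured on a standard reference catalyst
baseline_runs = [50.0, 51.0, 49.0, 50.5, 49.5]
limits = control_limits(baseline_runs)

# Later runs of the same reference material; the last one drifts upward
new_runs = [50.2, 49.1, 55.0]
flagged = out_of_control(new_runs, limits)
print(flagged)
```

A flagged run of the reference material points to the test system (reactor, analytics, sample preparation) rather than to the catalyst under study, which is the reason for tracking a standard over time.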

Guide 2: Troubleshooting Inconsistent Catalyst Synthesis Outcomes

Problem: Reproducibility challenges in preparing nickel-iron-based oxygen evolution electrocatalysts and other materials, as identified in global interlaboratory studies [1].

Systematic Approach:

Troubleshooting Methodology:

Establish reproducibility - Confirm you can consistently replicate the problem by identifying precise steps that trigger the issue [30].

Document everything - Maintain detailed notes on all synthesis parameters including:

Change one variable at a time when testing potential solutions:

- Start with easiest-to-adjust parameters (e.g., microscope settings, dilution factors)

- Progress to more fundamental variables (e.g., precursor concentrations, thermal treatment conditions)

- Use parallel experiments where possible to efficiently test multiple conditions [25]

Apply risk-based approach to variable selection:

Experimental Protocol Standardization

Standardized Reporting Framework for Catalyst Synthesis

Based on analysis of single-atom catalyst literature and reproducibility studies, the following framework improves protocol replication:

Essential Parameters to Document:

| Category | Specific Parameters | Reporting Standard |

|---|---|---|

| Precursor Information | Chemical identity, source, purity, lot number, preparation date/storage conditions | Full chemical name, supplier, catalog number, % purity |

| Mixing Steps | Order of addition, stirring rate/time, temperature, container type | Precise sequence, RPM, duration (min/sec), vessel material |

| Thermal Treatments | Temperature profile, atmosphere, container, ramp rates, hold times | Exact values with tolerances, gas composition/flow rates |

| Post-treatment | Washing procedures, drying conditions, activation methods | Solvent volumes/concentrations, temperature, atmosphere, duration |

| Characterization | Instrument settings, calibration standards, measurement conditions | Complete instrument description with model numbers |
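One way to make such a framework machine-readable is to store each synthesis as a structured record. The sketch below is only an illustration under assumptions: the class and field names are hypothetical, it covers just two of the categories in the table, and the supplier and catalog number are placeholders.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Precursor:
    name: str
    supplier: str
    catalog_number: str
    purity_percent: float
    lot_number: str = ""  # empty string marks a value that was not recorded


@dataclass
class ThermalTreatment:
    atmosphere: str
    ramp_rate_k_per_min: float
    hold_temperature_k: float
    hold_time_min: float


@dataclass
class SynthesisRecord:
    precursors: list = field(default_factory=list)
    thermal_treatments: list = field(default_factory=list)

    def missing_fields(self):
        """List precursor fields left empty so gaps are caught before publication."""
        gaps = []
        for i, precursor in enumerate(self.precursors):
            for key, value in asdict(precursor).items():
                if value == "":
                    gaps.append(f"precursors[{i}].{key}")
        return gaps


record = SynthesisRecord(
    precursors=[Precursor("nickel(II) nitrate hexahydrate", "ExampleChem", "EX-0001", 99.9)],
    thermal_treatments=[ThermalTreatment("N2", 5.0, 1173.0, 120.0)],
)
record_json = json.dumps(asdict(record), indent=2)  # serializable protocol record
print(record.missing_fields())
```

Here the lot number was never entered, so the check reports it; the same record can be stored alongside the data as JSON.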

High-Throughput Protocol Optimization

Methodology for Parameter Identification:

Utilize high-throughput screening platforms to rapidly test multiple parameter combinations [27] [28]

Apply Design of Experiments (DoE) approaches to efficiently explore parameter space and identify interactions

Implement statistical analysis to determine critical parameters significantly affecting catalyst performance

Establish operating ranges for each critical parameter to define robust synthesis conditions
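As a simple illustration of the DoE step, the sketch below enumerates a full factorial design, the most basic design type. The factor names and levels are hypothetical; fractional or response-surface designs would usually be preferred when the number of factors grows.

```python
from itertools import product


def full_factorial(factors):
    """Return one dict per run, covering every combination of factor levels."""
    names = list(factors)
    level_sets = [factors[name] for name in names]
    return [dict(zip(names, combo)) for combo in product(*level_sets)]


# Hypothetical synthesis factors and levels for a parametric study
factors = {
    "pyrolysis_temperature_K": [873, 1023, 1173],
    "ramp_rate_K_per_min": [2, 5],
    "atmosphere": ["N2", "Ar"],
}
runs = full_factorial(factors)
print(len(runs))  # 3 * 2 * 2 combinations
```

Each entry of `runs` can be assigned to one reactor or well of a high-throughput platform, and the measured performance is then analyzed per factor to find the sensitive variables.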

The Scientist's Toolkit: Essential Research Reagent Solutions

| Tool/Technique | Function in Catalysis Research | Application Example |

|---|---|---|

| High-Throughput Screening Systems | Parallel evaluation of multiple catalyst formulations under identical conditions | Rapid screening of Ni-Fe OER catalyst compositions [27] [28] |

| In Situ/Operando Characterization | Observation of catalysts under actual reaction conditions | X-ray techniques at Advanced Photon Source to study working catalysts [27] |

| Process Characterization Tools | Identification of critical process parameters affecting reproducibility | Pharmaceutical industry tools applied to electrocatalyst synthesis [1] |

| Atomic Layer Deposition | Precise deposition of thin films with controlled thickness | Creating well-defined catalyst structures with improved stability [27] |

| Transformer Language Models | Automated extraction and standardization of synthesis protocols from literature | ACE model for converting unstructured protocols into machine-readable formats [4] |

| Electron Microscopy Center | Nanoscale imaging and analysis of catalyst structures | Analytical TEM and SEM for catalyst morphology characterization [27] |

Data Analysis and Reproducibility Assessment

Quantifying Reproducibility Challenges:

| Material System | Reproducibility Issue Identified | Impact on Research |

|---|---|---|

| Ni-Fe based OER catalysts | Substantial reproducibility challenges across laboratories | Global interlaboratory study revealed undescribed critical parameters [1] |

| Single-Atom Catalysts (SACs) | Extreme diversity in synthesis approaches and reporting standards | Rapid growth (1200+ papers since 2010) with non-standardized protocols [4] |

| Thermal treatment steps | Broad temperature ranges for similar processes (e.g., pyrolysis: 573-1173 K) | Distinct performance peaks around 1173 K, but widespread practice variation [4] |

Implementation Workflow for Protocol Standardization:

Implementing Reproducible Workflows and Data Management Frameworks

This technical support center is designed within the context of a broader thesis on overcoming reproducibility challenges in catalysis research. Managed workflow systems like Galaxy directly address key reproducibility issues identified in catalysis studies, including lack of provenance between inputs and outputs, missing metadata, and incomplete data reporting [5]. This resource provides catalysis researchers with practical troubleshooting guidance to ensure their computational experiments are reproducible, well-documented, and compliant with data preservation standards.

Frequently Asked Questions (FAQs)

Getting Started

How do I create an account on a Galaxy server? To create an account at any public Galaxy instance, choose your server from the available list of Galaxy Platforms (such as UseGalaxy.org, UseGalaxy.eu, or UseGalaxy.org.au). Click on "Login or Register" in the masthead, then find the "Register here" link on the login page. Fill in the registration form and click "Create." Your account will remain inactive until you verify your email address using the confirmation email sent to you [31].

Can I create multiple accounts on the same Galaxy server? No, you are not allowed to create more than one account per Galaxy server. This is a violation of the terms of service and may result in account deletion. However, you are permitted to have separate accounts on different Galaxy servers (e.g., one on Galaxy US and another on Galaxy EU) [31].

How do I update my account preferences and information? After logging in, navigate to "User" → "Preferences" in the top menu bar. Here you can update various settings including your registered email address, public name, password, dataset permissions for new histories, API key, and interface preferences [31].

Analysis & Tools

What should I do if I can't find a tool needed for a tutorial? First, check that you are using a compatible Galaxy server by reviewing the "Available on these Galaxies" section in the tutorial's overview. Use the Tutorial mode feature by clicking the curriculum icon on the top menu to open the GTN inside Galaxy, where tool names will appear as blue buttons that open the correct tool. If you still can't find the tool, ask for help in the Galaxy communication channels [32].

How can I add a custom database or reference genome? Navigate to the history containing your FASTA file for the reference genome. Ensure the FASTA format is standardized. Then go to "User" → "Preferences" → "Manage Custom Builds." Create a unique name and database key (dbkey) for your reference build, select "FASTA-file from history" under Definition, and choose your FASTA file. Click "Save" to complete the process [31].

What are the common requirements for differential expression analysis tools? Ensure your reference genome, reference transcriptome, and reference annotation are all based on the same genome assembly. Differential expression tools require sample count replicates with at least two factor levels/groups/conditions with two samples each. Factor names should contain only alphanumeric characters and underscores, without spaces. If using DEXSeq, the first condition must be labeled as "condition" [31].

Data Management

How can I reduce my storage quota usage while retaining prior work? Several options are available: download datasets as individual files or entire histories as archives and then purge them from the server; transfer datasets or histories to another Galaxy server; copy your most important datasets into a new history and then purge the original; or extract workflows from histories before purging them. Whichever option you choose, regularly back up your work by downloading archives of your full histories [31].

How do I share my history with collaborators? Open the History Options dropdown and click "Share or Publish." You can share via link or publish it publicly. Sharing your history allows others to import and access the datasets, parameters, and steps of your analysis, which is particularly useful when seeking help or collaborating [33].

Troubleshooting Guides

Common Error Resolution

When you encounter a red dataset in your history, follow these systematic troubleshooting steps [34]:

Examine the Error Message: Expand the red history dataset by clicking on it. Sometimes the error message is visible immediately.

Check Detailed Logs: Expand the history item and click on the details icon. Scroll down to the Job Information section to view both "Tool Standard Output" and "Tool Standard Error" logs, which provide technical details about what went wrong.

Submit a Bug Report: If the problem remains unclear, click the bug icon and provide comprehensive information about the issue, then click "Report."

Seek Community Help: Ask for assistance in the GTN Matrix Channel, Galaxy Matrix Channel, or Galaxy Help Forum. When asking for help, share a link to your history for more effective troubleshooting.

Common Tool Errors and Solutions

Table: Common Galaxy Analysis Issues and Resolution Strategies

| Error Category | Common Causes | Resolution Steps |

|---|---|---|

| Red Dataset (Tool Failure) | Incorrect parameters, problematic input data, tool bugs [34] | Follow systematic troubleshooting: check error messages, review logs, submit bug reports if needed [34]. |

| Differential Expression Analysis Failures | Identifier mismatches, insufficient replicates, incorrect factor labels, header issues [31] | Standardize identifiers, ensure proper replicates, use alphanumeric factor names without spaces, verify header settings [31]. |

| Reference Genome Issues | Custom databases not properly formatted, identifier mismatches [31] | Standardize FASTA format, use "Manage Custom Builds" in user preferences, ensure consistent identifiers across inputs [31]. |

| Reproducibility Challenges | Missing provenance, incomplete parameters, inconsistent data labeling [5] | Use Galaxy's workflow management and RO-Crate generation to capture all inputs, outputs, and parameters in a single digital object [5]. |

Advanced Troubleshooting for Catalysis Research

For catalysis researchers working with X-ray Absorption Spectroscopy (XAS) data, specific reproducibility challenges require targeted approaches [5]:

- Challenge: Publications including only intermediary data rather than raw values.

- Solution: Use Galaxy to preserve complete data provenance from raw data through all processing stages.

- Challenge: Missing input parameters for analysis steps.

- Solution: Leverage Galaxy's workflow system that automatically captures all parameters used in each analysis.

- Challenge: Different labeling between final paper and associated data objects.

- Solution: Implement consistent naming conventions within Galaxy workflows and utilize RO-Crates to maintain clear connections between all data elements.

Experimental Protocols for Catalysis Research

Managed Workflow for XAS Data Analysis

This protocol outlines a reproducible methodology for analyzing X-ray Absorption Spectroscopy (XAS) data in catalysis research, based on the Galaxy case study addressing reproducibility challenges [5].

Principle: Implement managed workflows with complete provenance tracking to overcome common reproducibility limitations in catalysis research, including insufficient metadata and disconnected data relationships.

Materials:

- Raw XAS data from catalysis experiments (e.g., Diamond Light Source via UK Catalysis Hub)

- Galaxy platform with workflow tools

- RO-Crate export capability

Procedure:

Workflow Design Phase:

- Map all analysis steps from raw data processing to final results

- Identify all input parameters required for each processing step

- Establish consistent labeling conventions that will be maintained through publication

Data Import Phase:

- Upload raw experimental data (not just intermediary forms)

- Apply standardized metadata tagging

- Verify data integrity after transfer

Workflow Execution Phase:

- Execute processing steps within the Galaxy platform

- Document all parameter selections systematically

- Generate intermediate results with maintained provenance

Reproducibility Packaging Phase:

- Use Galaxy to generate RO-Crates for each workflow invocation

- Verify the RO-Crate contains all inputs, outputs, parameters, and their relationships

- Export the complete digital object for publication or sharing
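The "verify data integrity after transfer" step in the Data Import Phase can be sketched with file checksums. This is a minimal illustration using SHA-256 from the Python standard library; the function names are hypothetical and the approach is not prescribed by the cited case study.

```python
import hashlib
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20):
    """Hash a file in chunks so large raw data files do not fill memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(directory):
    """Map each file's path (relative to directory) to its SHA-256 digest."""
    root = Path(directory)
    return {
        p.relative_to(root).as_posix(): sha256_of(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def changed_files(before, after):
    """Return files whose digest differs, or which are missing, after transfer."""
    return sorted(name for name in before if after.get(name) != before[name])
```

Building one manifest at the instrument and another after upload, then comparing them with `changed_files`, gives a quick check that the raw data arrived unaltered.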

Troubleshooting Tips:

- If encountering missing parameter errors, verify all analysis steps have explicit parameter settings

- For data labeling inconsistencies, implement naming conventions early in the workflow

- When provenance gaps appear, utilize Galaxy's history tracking to identify missing connections

Workflow Visualization

Galaxy Workflow for Reproducible Catalysis Research

Research Reagent Solutions

Table: Essential Research Reagents and Computational Tools for Catalysis Research

| Reagent/Tool | Function in Catalysis Research | Implementation in Galaxy |

|---|---|---|

| XAS Data | Primary experimental data from catalysis experiments | Raw data import with standardized metadata tagging |

| Reference Spectra | Standard compounds for calibration and comparison | Managed as reference datasets within analysis workflows |

| RO-Crate | Reproducible research object containing all workflow components | Automated generation through Galaxy's export functionality |

| Processing Parameters | Specific values and settings for data analysis | Captured automatically during workflow execution |

| Galaxy Workflows | Managed analytical processes with provenance tracking | Designed, executed, and shared through Galaxy platform |

Utilizing RO-Crates for Packaging Complete Research Objects

A technical support guide for catalysis researchers tackling data reproducibility challenges

Catalysis research, particularly in fields like X-ray Absorption Spectroscopy (XAS), faces significant reproducibility challenges including incomplete data publication, missing provenance between inputs and outputs, and inconsistently labeled data objects between publications and their associated datasets [5]. Research Object Crates (RO-Crates) address these challenges by providing a standardized framework for packaging complete research objects with rich metadata, execution provenance, and clear relationships between all components [35]. This technical support center provides practical guidance for catalysis researchers implementing RO-Crates to enhance the reproducibility and reusability of their computational experiments.

RO-Crate Fundamentals: Core Concepts

What is an RO-Crate?

RO-Crate (Research Object Crate) is a method for aggregating and describing research data into distributable, reusable digital objects with structured metadata [36]. It serves as a packaging mechanism that brings together data files, scripts, workflows, and their contextual descriptions in a machine-actionable yet human-readable format.

Key Components:

- RO-Crate Metadata File (`ro-crate-metadata.json`): Machine-readable description of the crate's contents and relationships [36]

- RO-Crate Root: Directory containing the metadata file and payload data [37]

- Data Entities: Files and directories containing research data [36]

- Contextual Entities: Descriptions of people, organizations, instruments, and other contextual information [36]

Why RO-Crates for Catalysis Research?

In catalysis research, RO-Crates help overcome specific reproducibility challenges by [5]:

- Capturing complete experimental context including all input parameters

- Preserving provenance between raw data, processed results, and published findings

- Standardizing metadata across different instruments and research groups

- Enabling workflow re-execution with precise parameter documentation

Troubleshooting RO-Crate Implementation: Common Issues and Solutions

RO-Crate Metadata Creation Issues

Problem: Invalid or malformed JSON in metadata file

- Symptoms: Validation tools fail to parse the file, RO-Crate processors return syntax errors

- Solution: Use a JSON validator or editor with JSON syntax highlighting (e.g., VS Code) [38]

- Prevention: Always test your `ro-crate-metadata.json` in the RO-Crate Playground validator before distribution [38]
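A quick local syntax check can precede the online validator. The sketch below uses only Python's standard `json` module; the function name is hypothetical, and the check covers JSON syntax only, not JSON-LD or RO-Crate conformance.

```python
import json


def json_syntax_error(text):
    """Return None if text parses as JSON, otherwise the error location and message."""
    try:
        json.loads(text)
    except json.JSONDecodeError as err:
        return f"line {err.lineno}, column {err.colno}: {err.msg}"
    return None


good = '{"@context": "https://w3id.org/ro/crate/1.1/context", "@graph": []}'
bad = '{"@graph": [{"@id": "./",}]}'  # a trailing comma is not valid JSON

print(json_syntax_error(good))
print(json_syntax_error(bad))
```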

Problem: Using nested JSON instead of flat structure

- Symptoms: Metadata not properly recognized, linked entities not resolved

- Solution: Use RO-Crate's flat structure with cross-referencing via `@id` instead of nested objects [38]

- Example Correction:
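The original example is not preserved here, so the following is a hedged reconstruction of the idea rather than the source's own snippet. It shows a nested author description and a small hypothetical helper that hoists nested entities to the top level of the `@graph`, leaving only an `@id` cross-reference behind. It handles single nested objects only, not lists of them.

```python
def flatten(graph):
    """Hoist nested entity descriptions to the top level, leaving @id references."""
    flat = []
    queue = list(graph)
    while queue:
        entity = dict(queue.pop(0))  # shallow copy so the input is not modified
        for key, value in list(entity.items()):
            if isinstance(value, dict) and "@id" in value and len(value) > 1:
                queue.append(value)                  # describe the nested entity separately
                entity[key] = {"@id": value["@id"]}  # keep only the cross-reference
        flat.append(entity)
    return flat


# Incorrect: the Person is described inside the Dataset
nested = [
    {
        "@id": "./",
        "@type": "Dataset",
        "author": {"@id": "#researcher-1", "@type": "Person", "name": "Example Researcher"},
    }
]

# Corrected: two top-level entities linked through "@id"
flat = flatten(nested)
```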

Problem: Missing or duplicate @id values

- Symptoms: Entities not properly linked, references broken

- Solution: Ensure every `@id` is unique within the `@graph` and all referenced entities exist [38]

- Validation: Use the `rocrate-validator` Python package to check for missing entities [38]
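For a quick check without any external tool, both conditions can be tested directly on the parsed `@graph`. This is a minimal sketch with hypothetical function names; it is not how `rocrate-validator` works internally, and it also reports references to external URLs that have no describing entity, which may or may not be a problem in a given crate.

```python
from collections import Counter


def duplicate_ids(graph):
    """Return @id values that appear on more than one entity."""
    counts = Counter(entity.get("@id") for entity in graph)
    return sorted(i for i, n in counts.items() if i is not None and n > 1)


def dangling_references(graph):
    """Return referenced @id values that no entity in the graph describes."""
    known = {entity.get("@id") for entity in graph}
    missing = set()
    for entity in graph:
        for value in entity.values():
            for item in value if isinstance(value, list) else [value]:
                # A pure reference is a dict whose only key is "@id"
                if isinstance(item, dict) and set(item) == {"@id"} and item["@id"] not in known:
                    missing.add(item["@id"])
    return sorted(missing)


example_graph = [
    {"@id": "./", "@type": "Dataset", "hasPart": [{"@id": "raw.dat"}, {"@id": "processed.csv"}]},
    {"@id": "raw.dat", "@type": "File"},
    {"@id": "raw.dat", "@type": "File"},  # accidental duplicate
]
print(duplicate_ids(example_graph), dangling_references(example_graph))
```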

Data and Contextual Entity Problems

Problem: Files referenced in metadata but not present in crate

- Symptoms: Broken links when processing the crate, missing data entities

- Solution: Ensure all files referenced in `hasPart` or via `@id` exist in the RO-Crate root or subdirectories [36]

- Prevention: Use tools like `rocrate-validator` to verify file existence [38]
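The file-existence check can also be approximated in a few lines. The sketch below is an assumption-laden illustration: the function name is hypothetical, it treats any `@id` containing `://` as a remote entity to skip, and it does not decode URL-encoded paths.

```python
from pathlib import Path


def missing_payload_files(crate_dir, graph):
    """Return @id values of local File entities with no matching file on disk."""
    root = Path(crate_dir)
    missing = []
    for entity in graph:
        types = entity.get("@type", [])
        types = [types] if isinstance(types, str) else types
        identifier = entity.get("@id", "")
        is_local = "://" not in identifier  # remote entities are referenced by URL
        if "File" in types and is_local and not (root / identifier).is_file():
            missing.append(identifier)
    return missing
```

Calling `missing_payload_files("catalysis_experiment_2025", graph)` on a parsed metadata graph returns an empty list when every described local file is present.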

Problem: Insufficient metadata for reproducibility

- Symptoms: Other researchers cannot understand or reuse the data

- Solution: Include essential properties for all key entities [39]:

Table: Required and Recommended Metadata Properties

| Entity Type | Required Properties | Recommended Properties | Catalysis-Specific Extensions |

|---|---|---|---|

| Root Data Entity | @id, @type | name, description, datePublished, license, publisher | instrument, experimentalConditions |

| File | @id, @type | name, encodingFormat, license, author | measurementType, sampleID |

| Person | @id, @type | name, affiliation | ORCID, roleInExperiment |

| Organization | @id, @type | name, url | facility, beamline |

Licensing and Provenance Challenges

Problem: Ambiguous licensing terms

- Symptoms: Uncertainty about data reuse rights, legal barriers to reproduction

- Solution: Use SPDX license identifiers and apply licenses at appropriate granularity [37]

- Implementation:
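The original implementation snippet is not preserved, so the following is a sketch under assumptions rather than the source's code. It builds a license entity identified by its SPDX URL and references it from the root dataset; the helper names and the choice of CC-BY-4.0 are illustrative only.

```python
import json


def spdx_license_entity(spdx_id, name):
    """Describe a license as a contextual entity identified by its SPDX URL."""
    return {
        "@id": f"https://spdx.org/licenses/{spdx_id}",
        "@type": "CreativeWork",
        "identifier": spdx_id,
        "name": name,
    }


def apply_license(entity, license_entity):
    """Reference the license from a dataset or file entity via its @id."""
    entity["license"] = {"@id": license_entity["@id"]}
    return entity


cc_by = spdx_license_entity("CC-BY-4.0", "Creative Commons Attribution 4.0 International")
root_dataset = apply_license({"@id": "./", "@type": "Dataset"}, cc_by)
graph = [root_dataset, cc_by]
print(json.dumps(graph, indent=2))
```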

Problem: Incomplete provenance tracking

- Symptoms: Cannot trace how results were derived from raw data

- Solution: Use RO-Crate's provenance mechanisms to document data transformations [36]

- Catalysis Example: Link raw spectrometer output to processed data and published charts
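One way to express such a link is a `CreateAction` entity whose `object` lists the inputs, `result` the outputs, and `instrument` the software used. The sketch below assumes that modelling choice; the helper name, file names, and software identifier are hypothetical.

```python
def record_processing_step(graph, step_id, name, inputs, outputs, software_id):
    """Append a CreateAction that links input files to the files derived from them."""
    action = {
        "@id": step_id,
        "@type": "CreateAction",
        "name": name,
        "object": [{"@id": i} for i in inputs],    # what the step consumed
        "result": [{"@id": o} for o in outputs],   # what the step produced
        "instrument": {"@id": software_id},        # the software that did the work
    }
    graph.append(action)
    return action


graph = [
    {"@id": "raw/scan_001.dat", "@type": "File"},
    {"@id": "processed/normalised.csv", "@type": "File"},
    {"@id": "#analysis-software", "@type": "SoftwareApplication", "name": "Example XAS tool"},
]
step = record_processing_step(
    graph, "#normalise-1", "Normalise spectrum",
    inputs=["raw/scan_001.dat"],
    outputs=["processed/normalised.csv"],
    software_id="#analysis-software",
)
```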

Frequently Asked Questions (FAQs)

RO-Crate Creation and Structure

Q: How do I start creating an RO-Crate for my catalysis dataset? A: Begin by creating a directory for your data and adding the RO-Crate metadata file [37]:

- Create a folder for your dataset (e.g., `catalysis_experiment_2025`)

- Add your data files (raw spectra, processed data, analysis scripts)

- Create `ro-crate-metadata.json` with the basic structure [37]

- Validate using RO-Crate Playground or `rocrate-validator` [38]

Q: What is the minimum required content for a valid RO-Crate? A: At minimum, an RO-Crate must contain [39]:

- `ro-crate-metadata.json` file in the root directory

- Metadata descriptor entity with `@id: "ro-crate-metadata.json"`

- Root data entity with `@id: "./"` and `@type: "Dataset"`

- All entities must have unique `@id` values and appropriate `@type` declarations [39]
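The minimum requirements listed above can be checked mechanically. The following sketch tests only those listed points on an already parsed metadata document; the function name is hypothetical, and a passing result does not replace a full validator.

```python
def minimum_requirement_problems(metadata):
    """Return a list of violated minimum requirements (empty if none are found)."""
    graph = metadata.get("@graph", [])
    by_id = {entity.get("@id"): entity for entity in graph}
    problems = []
    if "ro-crate-metadata.json" not in by_id:
        problems.append("no metadata descriptor entity")
    root = by_id.get("./")
    root_types = root.get("@type", []) if root else []
    if isinstance(root_types, str):
        root_types = [root_types]
    if "Dataset" not in root_types:
        problems.append('no root data entity "./" of type Dataset')
    for entity in graph:
        if "@id" not in entity or "@type" not in entity:
            problems.append(f"entity lacks @id or @type: {entity}")
    if len(by_id) != len(graph):
        problems.append("duplicate @id values")
    return problems


minimal = {
    "@context": "https://w3id.org/ro/crate/1.1/context",
    "@graph": [
        {
            "@id": "ro-crate-metadata.json",
            "@type": "CreativeWork",
            "conformsTo": {"@id": "https://w3id.org/ro/crate/1.1"},
            "about": {"@id": "./"},
        },
        {"@id": "./", "@type": "Dataset"},
    ],
}
print(minimum_requirement_problems(minimal))
```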

Q: Can I include remote data files in my RO-Crate? A: Yes, RO-Crates support web-based data entities. You can reference files via URLs instead of local paths [40]:
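The original snippet is missing, so the sketch below is an illustrative reconstruction under assumptions: a web-based data entity is a `File` whose `@id` is the URL, listed in the root dataset's `hasPart`. The helper name and the example.org URL are hypothetical.

```python
def add_remote_file(graph, url, name, encoding_format):
    """Add a web-based File entity and list it in the root dataset's hasPart."""
    entity = {"@id": url, "@type": "File", "name": name, "encodingFormat": encoding_format}
    graph.append(entity)
    root = next(e for e in graph if e.get("@id") == "./")
    root.setdefault("hasPart", []).append({"@id": url})
    return entity


graph = [{"@id": "./", "@type": "Dataset"}]
remote = add_remote_file(
    graph,
    "https://example.org/data/scan_001.dat",  # placeholder URL, not a real dataset
    "Raw XAS scan hosted remotely",
    "text/plain",
)
```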

Metadata and Contextual Information

Q: How detailed should my contextual entities be? A: Include sufficient context for reproducibility without excessive elaboration [41]. Focus on:

- People: Researchers, operators with ORCIDs if available [41]

- Organizations: Research institutions, facility providers [36]

- Instruments: Spectrometers, reactors with relevant specifications

- Software: Analysis tools with versions [41]

Q: How do I handle licensing for different components of my dataset? A: RO-Crate allows different licenses for different files [37]. The root dataset should have an overall license, but individual files can specify their own licenses [41]:
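The original snippet is not preserved. As an illustration, the sketch below reports which license applies to a given file, assuming (as the answer above suggests) that a file's own `license` takes precedence and the root dataset's license applies otherwise. The function name and file names are hypothetical.

```python
def effective_license(graph, file_id):
    """Return the license @id that applies to a file: its own, else the root's."""
    by_id = {entity["@id"]: entity for entity in graph}
    own = by_id[file_id].get("license")
    chosen = own if own is not None else by_id["./"].get("license")
    return chosen["@id"] if isinstance(chosen, dict) else chosen


graph = [
    {"@id": "./", "@type": "Dataset",
     "license": {"@id": "https://spdx.org/licenses/CC-BY-4.0"}},
    {"@id": "raw/scan_001.dat", "@type": "File"},  # falls back to the root license
    {"@id": "scripts/normalise.py", "@type": "File",
     "license": {"@id": "https://spdx.org/licenses/MIT"}},  # code under its own license
]
print(effective_license(graph, "raw/scan_001.dat"))
print(effective_license(graph, "scripts/normalise.py"))
```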

Catalysis-Specific Implementation

Q: How can RO-Crates help with the specific reproducibility challenges in catalysis research? A: RO-Crates address key catalysis reproducibility issues through [5]:

- Complete data packaging: Including both raw and processed data

- Parameter preservation: Documenting all input parameters for analysis steps

- Consistent labeling: Establishing clear connections between data objects and publication labels

- Provenance tracking: Linking spectrometer outputs to final results

Q: What catalysis-specific metadata should I include? A: Beyond basic metadata, consider including:

- Experimental conditions (temperature, pressure, catalyst loading)

- Instrument calibration data

- Sample preparation details

- Reference to catalytic reaction scheme

- Analytical method parameters

Step-by-Step Implementation Guide

Creating a Basic RO-Crate for Catalysis Data

Step 1: Set up the directory structure

Step 2: Create the basic metadata structure

Step 3: Add data entities with catalysis-specific metadata

Step 4: Add contextual entities
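The code for these four steps is not preserved in this copy, so the following is a hedged end-to-end sketch rather than the original listing. File names, the researcher, and the example.org term URLs are placeholders; `measurementType` and `sampleID` are the catalysis-specific properties suggested earlier in this guide, mapped here through an extended `@context` because they are not standard terms.

```python
import json
from pathlib import Path


def build_example_crate(parent_dir):
    """Create a small crate on disk and return its root directory."""
    # Step 1: directory structure
    root = Path(parent_dir) / "catalysis_experiment_2025"
    (root / "raw").mkdir(parents=True)
    (root / "raw" / "scan_001.dat").write_text("# placeholder spectrum\n")

    # Step 2: basic metadata structure (descriptor plus root data entity)
    graph = [
        {"@id": "ro-crate-metadata.json", "@type": "CreativeWork",
         "conformsTo": {"@id": "https://w3id.org/ro/crate/1.1"},
         "about": {"@id": "./"}},
        {"@id": "./", "@type": "Dataset",
         "name": "Hypothetical XAS experiment",
         "description": "Placeholder description of the experiment.",
         "datePublished": "2025-01-01",
         "license": {"@id": "https://spdx.org/licenses/CC-BY-4.0"},
         "hasPart": []},
    ]

    # Step 3: data entity with catalysis-specific metadata
    graph.append({"@id": "raw/scan_001.dat", "@type": "File",
                  "name": "Raw XAS scan", "encodingFormat": "text/plain",
                  "measurementType": "XAS", "sampleID": "sample-001"})
    graph[1]["hasPart"].append({"@id": "raw/scan_001.dat"})

    # Step 4: contextual entity, referenced from the root dataset
    graph.append({"@id": "#researcher-1", "@type": "Person", "name": "Example Researcher"})
    graph[1]["author"] = {"@id": "#researcher-1"}

    metadata = {
        "@context": [
            "https://w3id.org/ro/crate/1.1/context",
            {"measurementType": "https://example.org/terms#measurementType",
             "sampleID": "https://example.org/terms#sampleID"},
        ],
        "@graph": graph,
    }
    (root / "ro-crate-metadata.json").write_text(json.dumps(metadata, indent=2))
    return root
```

Calling `build_example_crate(".")` writes the folder into the current directory; the result can then be checked with the validators listed below.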

Using Programming Tools for RO-Crate Creation

Python implementation with ro-crate-py:

Table: RO-Crate Research Reagent Solutions

| Tool/Resource | Type | Function | Implementation Example |

|---|---|---|---|

| ro-crate-py | Python library | Programmatic RO-Crate creation and manipulation | pip install rocrate [40] |

| RO-Crate Playground | Web validator | Online validation and visualization of RO-Crates | https://www.researchobject.org/ro-crate-playground |

| rocrate-validator | Command-line tool | Validation of RO-Crate structure and metadata | rocrate-validator validate <path> [38] |

| JSON-LD | Data format | Machine-readable linked data format for metadata | Use @context and @graph structure [36] |

| SPDX Licenses | License identifiers | Standardized license references | Use http://spdx.org/licenses/ URLs [37] |

| ORCID | Researcher identifiers | Unique identification of researchers | Use https://orcid.org/ URIs [41] |

| BagIt | Packaging format | Reliable storage and transfer format | Combine with RO-Crate for checksums [42] |

Advanced RO-Crate Applications in Catalysis Research

Workflow Provenance Capture

For complex catalysis data analysis pipelines, RO-Crates can capture detailed workflow provenance:
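The original example is not preserved. As an illustration of what captured provenance makes possible, the sketch below walks `CreateAction` entities backwards from a published result to the raw inputs it was derived from. It assumes each action lists its inputs under `object` and its outputs under `result`, both as lists of `@id` references; the function and file names are hypothetical.

```python
def trace_to_sources(graph, entity_id):
    """Follow CreateAction links backwards and return the inputs that nothing produced."""
    producers = {}
    for entity in graph:
        if entity.get("@type") == "CreateAction":
            for output in entity.get("result", []):
                producers[output["@id"]] = entity

    sources, pending, seen = [], [entity_id], set()
    while pending:
        current = pending.pop()
        if current in seen:
            continue
        seen.add(current)
        action = producers.get(current)
        if action is None:
            sources.append(current)  # no recorded step produced this: a raw input
        else:
            pending.extend(i["@id"] for i in action.get("object", []))
    return sorted(sources)


pipeline = [
    {"@id": "#normalise", "@type": "CreateAction",
     "object": [{"@id": "raw/scan_001.dat"}],
     "result": [{"@id": "processed/normalised.csv"}]},
    {"@id": "#plot", "@type": "CreateAction",
     "object": [{"@id": "processed/normalised.csv"}],
     "result": [{"@id": "figures/figure_2.png"}]},
]
print(trace_to_sources(pipeline, "figures/figure_2.png"))
```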

Integration with Computational Workflows

When using platforms like Galaxy for catalysis data analysis, RO-Crates can automatically capture [5]:

- All input parameters and data files

- Software versions and execution environment

- Output files and their derivations

- Computational provenance connecting inputs to outputs

This automated capture significantly enhances reproducibility by ensuring no parameter or data transformation is omitted from the documentation.

Validation and Quality Assurance Checklist

Before distributing your catalysis RO-Crate, verify:

- JSON Structure: ro-crate-metadata.json is valid JSON-LD [38]

- Required Entities: Metadata descriptor and root data entity are present [39]

- Unique Identifiers: All @id values are unique within the @graph [39]

- File References: All files referenced in hasPart exist in the crate [36]

- License Clarity: Clear licensing information for reuse [37]

- Provenance: Data transformations and processing steps are documented [5]

- Context: Sufficient experimental context for reproducibility [41]

- Accessibility: Human-readable preview available (ro-crate-preview.html) [36]

Use both the RO-Crate Playground and rocrate-validator to automatically check the structural integrity of your RO-Crate before publication or sharing [38].
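
Several of the structural checklist items can also be pre-checked with a short script before running a full validator. The sketch below is an assumption-laden illustration, not a substitute for rocrate-validator: it only confirms that the metadata file parses as JSON with an `@graph` (it does not validate JSON-LD semantics), and the function name `check_crate` and its messages are hypothetical.

```python
import json
from pathlib import Path


def check_crate(crate_dir: Path) -> list:
    """Return a list of problems found; an empty list means every check passed."""
    meta_path = crate_dir / "ro-crate-metadata.json"
    if not meta_path.is_file():
        return ["ro-crate-metadata.json is missing"]
    try:
        graph = json.loads(meta_path.read_text(encoding="utf-8"))["@graph"]
    except (json.JSONDecodeError, KeyError, TypeError) as exc:
        return [f"metadata file could not be read as JSON with an @graph: {exc!r}"]

    problems = []
    # Unique identifiers
    ids = [entity.get("@id") for entity in graph]
    duplicates = sorted({i for i in ids if ids.count(i) > 1}, key=str)
    if duplicates:
        problems.append(f"duplicate @id values: {duplicates}")

    # Required entities
    by_id = {entity.get("@id"): entity for entity in graph}
    if "ro-crate-metadata.json" not in by_id:
        problems.append("metadata descriptor is missing")
    root = by_id.get("./")
    if root is None:
        return problems + ["root data entity './' is missing"]
    if "license" not in root:
        problems.append("root data entity has no license")

    # File references listed in hasPart
    parts = root.get("hasPart", [])
    parts = parts if isinstance(parts, list) else [parts]
    for part in parts:
        part_id = part["@id"]
        # Web-based data entities (absolute URLs) are not expected on disk
        if "://" not in part_id and not (crate_dir / part_id).exists():
            problems.append(f"hasPart target not found: {part_id}")

    # Human-readable preview
    if not (crate_dir / "ro-crate-preview.html").is_file():
        problems.append("ro-crate-preview.html is missing")
    return problems
```

Calling `check_crate(Path("my-crate"))` on a crate directory returns the list of detected problems, which can be printed or used to fail a pre-submission check.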

Core FAIR Principles and Their Importance in Catalysis Research

The FAIR Data Principles are a set of guiding concepts to enhance the Findability, Accessibility, Interoperability, and Reuse of digital assets, with a specific emphasis on machine-actionability [43] [44]. These principles provide a framework for managing scientific data, which is crucial for overcoming reproducibility challenges in fields like catalysis research [1] [5].

The Four Principles Explained

- Findable: The first step in data reuse is discovery. Data and metadata must be easy to find for both humans and computers. This is achieved by assigning globally unique and persistent identifiers (e.g., DOIs), describing the data with rich, machine-readable metadata, and indexing both in a searchable resource [43] [44] [45].

- Accessible: Once found, users need to know how the data can be accessed. Data and metadata should be retrievable using standardized, open protocols. It is important to note that data can be accessible under restricted conditions and still be FAIR; the metadata should remain available even if the data itself is no longer accessible [43] [46].

- Interoperable: Data must integrate with other data and work with other applications and workflows. This requires formal, accessible, and shared languages for knowledge representation, such as standardized formats, shared vocabularies, and community ontologies [43] [44] [47].

- Reusable: The ultimate goal of FAIR is to optimize the reuse of data. This depends on the data being richly described with a plurality of accurate attributes, including clear usage licenses, detailed provenance, and adherence to domain-relevant community standards [43] [44].

The Critical Role of Machine-Actionability

A key differentiator of the FAIR principles is their emphasis on machine-actionability [43] [48]. As data volume and complexity grow, humans increasingly rely on computational agents for discovery and analysis. FAIR ensures that data is structured not just for human understanding, but also for automated processing by machines, which is essential for scaling AI and multi-modal analytics in drug development and materials science [49] [45].

FAIR Data Implementation Workflow

The following diagram illustrates the key stages and decision points for implementing the FAIR data principles in a research environment.

Troubleshooting Common FAIR Implementation Challenges

This section addresses specific, common problems researchers face when trying to make their data FAIR, with a focus on catalysis and related fields.

Findability & Accessibility Issues

Problem: "My dataset is in a repository, but other researchers cannot find it or access it correctly."

- Solution:

- Ensure your repository assigns a Persistent Identifier (PID) like a DOI. If not, consider using a general-purpose repository like Zenodo or Dataverse that provides one [46].

- Write a comprehensive README file. Describe each filename, column headings, measurement units, and data processing steps [46].

- Clarify access restrictions. Data can be FAIR without being fully open. If data is restricted, the metadata should be public and clearly state the procedure for gaining access [46].

Problem: "I cannot reproduce the data from a catalysis publication because the raw data or critical input parameters are missing" [5].

- Solution:

- Use managed workflows (e.g., in platforms like Galaxy) that automatically capture all inputs, parameters, and outputs in a single digital object like an RO-Crate [5].

- Deposit not just processed, but also raw data in a subject-specific or general repository and link it directly to the publication.

Interoperability & Reusability Issues

Problem: "Data from different labs in our consortium cannot be integrated due to inconsistent formats and terminology."

- Solution:

- Avoid proprietary formats. Store and share data in open, machine-readable formats (e.g., CSV, JSON) [50].

- Use controlled vocabularies and ontologies. Instead of free-text descriptions, use standardized terms from community-accepted resources (e.g., SNOMED CT for biomedical subjects, or domain-specific ontologies for catalysis) [49] [47]. This ensures all researchers describe the same concept in the same way.
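
As an illustration of the open-format advice, the sketch below writes measurement rows to CSV and produces a JSON sidecar that records the unit of each column. The column names, units, values, and the helper `to_csv_with_sidecar` are hypothetical; a consortium would normally agree on these names up front, ideally mapped to a shared vocabulary.

```python
import csv
import io
import json


def to_csv_with_sidecar(rows: list, units: dict) -> tuple:
    """Serialize rows to CSV text plus a JSON sidecar describing each column's unit."""
    columns = list(units)
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=columns, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    sidecar = json.dumps(
        {"columns": [{"name": c, "unit": units[c]} for c in columns]}, indent=2
    )
    return buffer.getvalue(), sidecar


# Placeholder measurements for illustration only
units = {"time_min": "min", "temperature_K": "K", "conversion_pct": "%"}
rows = [
    {"time_min": 0, "temperature_K": 523.0, "conversion_pct": 0.0},
    {"time_min": 10, "temperature_K": 523.0, "conversion_pct": 12.5},
]
csv_text, sidecar_json = to_csv_with_sidecar(rows, units)
print(csv_text)
print(sidecar_json)
```

Keeping units in a machine-readable sidecar, rather than only in a README, lets other labs check unit compatibility automatically before merging datasets.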

Problem: "I found a relevant dataset, but I don't know if I'm allowed to use it for my analysis or how to cite it properly."

FAIR Data Solutions and Tools for Catalysis Research

The table below summarizes key resources and methodologies to address FAIR implementation challenges.

Table 1: FAIR Solutions and Essential Tools for Researchers

| Challenge Area | Solution / Tool | Function & Benefit |

|---|---|---|

| Findability | Persistent Identifiers (DOIs) | Unambiguously identifies a dataset and facilitates reliable citation [46]. |

| Findability | General Repositories (e.g., Zenodo, Dataverse) | Provides a platform to deposit data, assigns a PID, and makes it discoverable [46]. |

| Findability | Subject-Specific Repositories (located via registries such as re3data.org) | Discipline-focused repositories that often offer enhanced metadata standards [46]. |

| Interoperability | Controlled Vocabularies & Ontologies | Uses shared, formal languages (e.g., MeSH, SNOMED) to ensure consistent meaning and enable data integration [49] [47]. |

| Interoperability | Open File Formats (e.g., CSV, JSON) | Ensures data is not locked into proprietary software and remains readable by different systems [50]. |

| Reusability | Workflow Management Systems (e.g., Galaxy) | Captures the entire analytical process, including all parameters, for full reproducibility and provenance [5]. |

| Reusability | Clear Data Licenses (e.g., CC-BY, CC-0) | Defines the terms of reuse, removing ambiguity and encouraging appropriate data sharing [46]. |

Frequently Asked Questions (FAQs)

Q1: Does making data FAIR mean I have to share all my data openly with everyone? A: No. FAIR is often confused with "Open Data," but they are distinct concepts. Data can be FAIR but not open. For example, sensitive clinical or proprietary catalysis data can have rich metadata that is publicly findable (F), with clear instructions for how to request access (A), while the actual data files are kept behind authentication barriers. The key is that the metadata is open and the path to access is clear, even if authorization is required [49] [46].

Q2: What is the typical cost of implementing a FAIR data management plan? A: Guides on implementing FAIR data practices suggest that the cost of a data management plan in compliance with FAIR should be approximately 5% of the total research budget [44]. While there are upfront investments in tooling and training, the long-term ROI is achieved through reduced assay duplication, faster submissions, and improved readiness for AI-driven analytics [49] [45].

Q3: How do FAIR principles support regulatory compliance in drug development? A: While FAIR is not a regulatory framework itself, it supports compliance with standards like GLP, GMP, and FDA data integrity guidelines. By improving data transparency, traceability, and structure, FAIR practices create an environment that is more audit-ready. The detailed provenance and continuous documentation that FAIR practices encourage align closely with regulatory expectations for data integrity and version control [49].

Q4: What are the CARE Principles and how do they relate to FAIR? A: The CARE Principles (Collective Benefit, Authority to Control, Responsibility, Ethics) were developed by the Global Indigenous Data Alliance as a complementary guide to FAIR. While FAIR focuses on the technical aspects of data sharing, CARE focuses on data ethics and governance, ensuring that data involving Indigenous peoples is used in ways that advance their self-determination and well-being. The two sets of principles are not mutually exclusive and can be implemented together for responsible and effective data stewardship [44] [45].

Troubleshooting Guide: Common Catalyst Issues and Solutions

This guide addresses frequent challenges in catalytic research, helping you diagnose and resolve issues affecting catalyst performance and reproducibility.

Table 1: Catalyst Deactivation and Performance Issues

| Observed Symptom | Potential Causes | Diagnostic Steps & Solutions |

|---|---|---|

| Rapid decline in conversion [51] | Catalyst poisoning, sintering, temperature runaway, feed contaminants [51] | Analyze feed for poisons (e.g., S, Na); check for hot spots and verify operating temperature is within design limits [52] [51]. |

| Gradual decline in conversion [51] | Normal catalyst aging, slow coking/carbon laydown, loss of surface area [51] | Rule out sampling and analytical errors first; then plan for periodic catalyst regeneration or replacement [51]. |

| Pressure Drop (DP) higher than design [51] | Catalyst bed channeling, sudden coking, internal reactor damage, catalyst fines [51] | Check for radial temperature variations >6-10°C indicating channeling; inspect for fouling or physical damage during loading [51]. |

| Pressure Drop (DP) lower than expected [51] | Catalyst bed channeling due to poor loading, voids in the bed [51] | Verify catalyst loading procedure; look for erratic radial temperature profiles and difficulty meeting product specifications [51]. |

| Temperature Runaway [51] | Loss of quench gas, uncontrolled heater firing, change in feed quality, hot spots [51] | Immediately verify safety systems; check flow distribution and cooling media; analyze feed composition changes [51]. |

| Poor Selectivity [51] | Bad catalyst batch, faulty preconditioning, incorrect temperature/pressure settings [51] | Re-calibrate instruments; verify catalyst activation/pretreatment protocol against supplier specifications [51]. |

| Low Conversion with Increasing DP [51] | Maldistribution of flow, feed precursors for polymerization/coking [51] | Inspect and clean inlet distributors; check for plugging with fine solids or sticky deposits [51]. |
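
The radial temperature check from the pressure-drop row can be sketched as a simple screening function. The table gives a range of 6-10 °C rather than a single limit, so the default threshold of 6.0 °C used here is an assumption, as are the bed-level names, the thermocouple readings, and the helper `flag_channeling`.

```python
def flag_channeling(bed_profiles: dict, threshold_c: float = 6.0) -> dict:
    """Return {bed level: radial spread in degC} for levels exceeding the threshold."""
    flagged = {}
    for level, temps in bed_profiles.items():
        spread = max(temps) - min(temps)  # hottest minus coldest thermocouple
        if spread > threshold_c:
            flagged[level] = round(spread, 2)
    return flagged


# Hypothetical thermocouple readings (degC) at three bed elevations
profiles = {
    "top": [351.0, 352.5, 350.8],
    "middle": [365.0, 374.2, 366.1],
    "bottom": [380.0, 381.0, 379.5],
}
print(flag_channeling(profiles))
print(flag_channeling(profiles, threshold_c=10.0))
```

A flagged level is only a prompt for further diagnosis; thermocouple error and normal operating gradients should be ruled out before concluding that the bed is channeling.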

Table 2: Synthesis and Reproducibility Issues

| Observed Symptom | Potential Causes | Diagnostic Steps & Solutions |

|---|---|---|

| Irreproducible catalyst activity between batches [52] | Uncontrolled variation in synthesis parameters (pH, mixing time, temperature); contaminated reagents or glassware [52] | Standardize and meticulously record all synthesis steps, durations, and reagent sources/lot numbers. Use high-purity reagents [52]. |

| Inconsistent nanoparticle sizes [52] | Variations in precursor concentration, mixing intensity, or contact time during deposition [52] | For methods like deposition-precipitation, ensure precise control and reporting of mixing speeds and reaction times [52]. |

| Loss of active species (e.g., in molecular catalysts) [53] | Ligand decomposition or metal center dissociation from the support [53] | Employ self-healing strategies: design systems with an excess of vacant ligand sites to recapture metal centers [53]. |

| Poor performance after storage [52] | Contamination from atmospheric impurities (e.g., carboxylic acids on TiO2, ppb-level H2S) [52] | Implement proper storage in inert atmospheres; clean catalyst surfaces in situ before reactivity measurements [52]. |