Revolutionizing Drug Discovery: How AI-Driven Platforms Enable Rapid Catalytic Performance Assessment

Abstract

This article explores the transformative role of Artificial Intelligence in accelerating the assessment of catalytic performance for drug development. Targeted at researchers, scientists, and industry professionals, it covers the foundational concepts of catalysis in pharmaceuticals, details the methodology of modern AI platforms, addresses common implementation challenges, and validates these tools against traditional methods. By synthesizing current research, the article provides a comprehensive guide to leveraging AI for faster, more accurate catalyst evaluation, ultimately aiming to streamline the entire drug discovery pipeline.

Catalysis in Drug Discovery: Understanding the Core Problem AI Aims to Solve

The Critical Role of Catalysts in Modern Pharmaceutical Synthesis

Catalysts are indispensable for the efficient, selective, and sustainable synthesis of active pharmaceutical ingredients (APIs), which makes their evaluation a natural target for AI-driven rapid performance assessment. This application note details protocols for key catalytic transformations and integrates quantitative performance data to guide research.

Application Notes & Protocols

Protocol: AI-Guided High-Throughput Screening for Asymmetric Hydrogenation Catalyst

Objective: Rapid identification of optimal chiral catalysts for enantioselective synthesis of a beta-amino acid precursor using an AI-driven screening platform.

Materials & Workflow:

- Substrate Preparation: Charge 96-well microreactor plates with 1.0 µmol of beta-(acetylamino) acrylate substrate in 200 µL of degassed THF per well.

- Catalyst Library: Dispense a diverse library of 96 chiral bisphosphine-Rh(I) and Ru(II) complexes (0.01 µmol, 1 mol%) using a liquid handling robot.

- Reaction Execution: Under inert atmosphere (N₂), introduce H₂ to 10 bar pressure. Agitate plates and heat to 40°C for 2 hours.

- AI-Integrated Analysis:

- Sampling: Quench aliquots with 10 µL of ethyl diisopropylamine.

- Analysis: Perform ultra-high-performance liquid chromatography (UHPLC) with chiral stationary phase to determine conversion and enantiomeric excess (ee).

- Data Pipeline: Stream conversion and ee results directly to the AI platform database. The AI model correlates catalyst structural fingerprints (e.g., steric/electronic descriptors) with performance.

- Prediction: The AI platform iteratively predicts and prioritizes the next set of promising catalyst candidates for validation.

Expected Outcome: Identification of a lead catalyst providing >99% conversion and >98% ee within 3 screening cycles.

Protocol: Continuous-Flow Photoredox Catalysis for C–H Functionalization

Objective: To safely and scalably perform a visible-light-mediated cross-dehydrogenative coupling for API fragment synthesis.

Materials & Workflow:

- System Setup: Assemble a continuous-flow photoreactor consisting of:

- Two HPLC pumps for substrate and catalyst streams.

- A PTFE tubing coil (10 mL volume) wrapped around a blue LED array (450 nm, 30 W).

- A back-pressure regulator (BPR) set to 50 psi.

- Preparation: Prepare solution A: 0.1 M tetrahydroisoquinoline in acetonitrile. Prepare solution B: 1.0 mol% [Ir(dF(CF₃)ppy)₂(dtbbpy)]PF₆ (photoredox catalyst) and 1.2 equiv. of diethyl bromomalonate in acetonitrile.

- Process Execution: Pump solutions A and B at a combined flow rate of 1.0 mL/min (residence time: 10 min). Pass the mixture through the irradiated coil maintained at 25°C.

- Monitoring & Collection: Monitor reaction completion by inline UV-Vis spectrophotometry. Collect the outflow directly into a quenching solution containing 5% aqueous NaHCO₃. Concentrate in vacuo and purify by flash chromatography.

Expected Outcome: >85% yield of the functionalized product under continuous (24/7) operation, with better safety and efficiency than the equivalent batch process.
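
The residence time quoted in the Process Execution step follows directly from the coil volume and the combined flow rate. The short sketch below reproduces that arithmetic; the function names are illustrative, and the 1:1 split between the two pumps is an assumption, since the protocol specifies only the combined rate.

```python
def residence_time_min(reactor_volume_ml: float, total_flow_ml_min: float) -> float:
    """Mean residence time (min) = reactor volume / combined volumetric flow rate."""
    if total_flow_ml_min <= 0:
        raise ValueError("flow rate must be positive")
    return reactor_volume_ml / total_flow_ml_min


def pump_flow_rates(reactor_volume_ml: float, target_residence_min: float,
                    fraction_a: float = 0.5) -> tuple:
    """Per-pump flow rates (mL/min) needed to hit a target residence time.

    fraction_a is the share of the total flow delivered by pump A.
    The 1:1 default is an assumption, not a value from the protocol.
    """
    total = reactor_volume_ml / target_residence_min
    return total * fraction_a, total * (1.0 - fraction_a)


# Values from the protocol: 10 mL coil, 1.0 mL/min combined flow.
tau = residence_time_min(reactor_volume_ml=10.0, total_flow_ml_min=1.0)
flow_a, flow_b = pump_flow_rates(reactor_volume_ml=10.0, target_residence_min=10.0)
print(f"residence time: {tau:.1f} min")
print(f"pump A: {flow_a:.2f} mL/min, pump B: {flow_b:.2f} mL/min")
```

Changing the target residence time while keeping the coil fixed only requires rescaling both pump rates by the same factor.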

Data Presentation

Table 1: Performance Metrics of Key Catalytic Transformations in API Synthesis

| Transformation Type | Typical Catalyst Class | Average Yield (%) | Typical Turnover Number (TON) | Key Benefit for Pharma |

|---|---|---|---|---|

| Asymmetric Hydrogenation | Chiral Ru/Bisphosphine | 95-99 | 1,000-10,000 | High enantiopurity of chiral centers |

| Suzuki-Miyaura Coupling | Pd/Phosphine (e.g., SPhos) | 85-98 | 10,000-100,000 | Robust biaryl synthesis for scaffolds |

| Photoredox C–H Activation | Iridium polypyridyl complexes | 75-90 | 50-200 | Direct functionalization, reduces steps |

| Organocatalysis (e.g., Aldol) | Proline derivatives | 80-95 | 50-500 | Metal-free, biodegradable catalysts |

Table 2: AI-Driven vs. Traditional Catalyst Screening Efficiency

| Screening Metric | Traditional HTS (96-well) | AI-Guided Iterative Screening | Efficiency Gain |

|---|---|---|---|

| Time to Lead Catalyst (hr) | 120-168 | 24-48 | ~3.5-5x faster |

| Number of Experiments Run | 96 (full plate) | 20-30 (per cycle) | ~70% reduction |

| Material Used (mg substrate) | ~1000 | ~200 | 80% less waste |

| Predictive Success Rate (%) | N/A (random) | >40 (after training) | Significant |

Visualizations

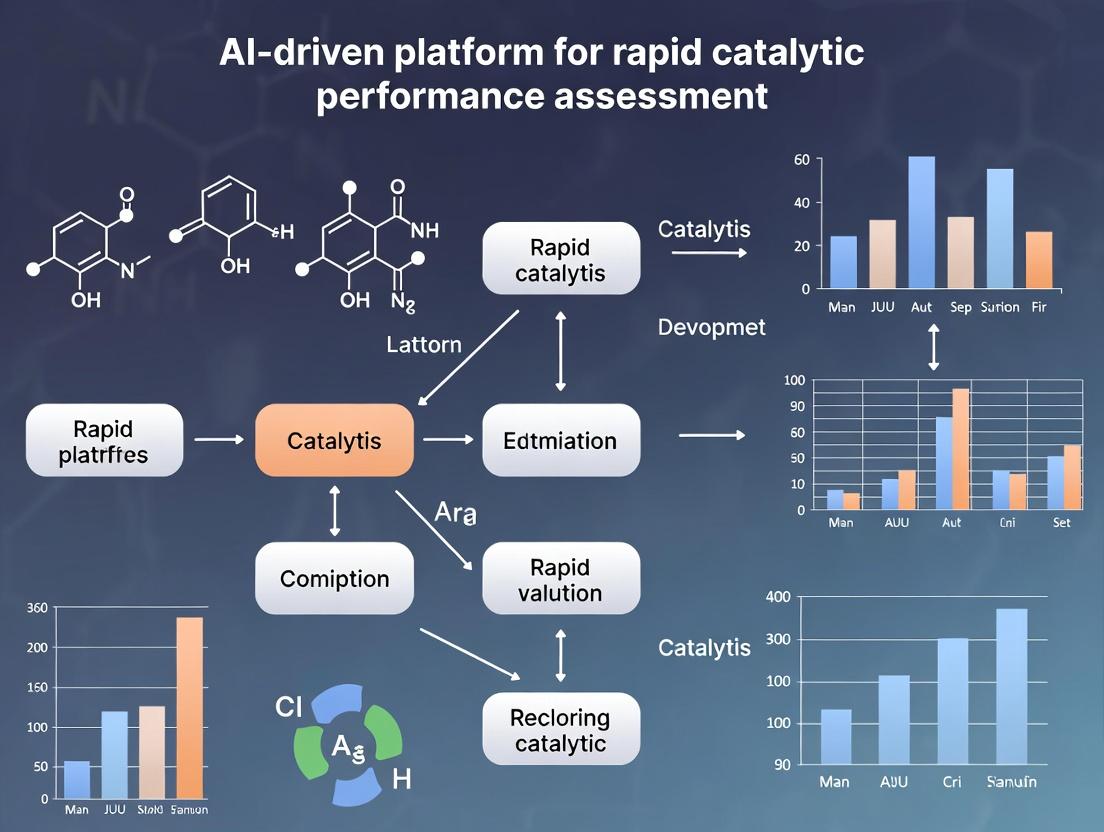

AI-Driven Catalyst Discovery Workflow

Photoredox Catalysis Mechanism

The Scientist's Toolkit: Research Reagent Solutions

| Item | Function in Catalytic Pharma Synthesis |

|---|---|

| Chiral Bisphosphine Ligands (e.g., (R)-BINAP, Josiphos) | Imparts stereochemical control in asymmetric metal catalysis (hydrogenation, cross-coupling). |

| Palladium Precursors (e.g., Pd₂(dba)₃, Pd(OAc)₂) | Core catalyst for cross-coupling reactions (Suzuki, Buchwald-Hartwig) to form C-C/C-N bonds. |

| Iridium & Ruthenium Photoredox Catalysts | Absorbs visible light to initiate single-electron transfer (SET) processes for radical-based synthesis. |

| Organocatalysts (e.g., MacMillan, proline derivatives) | Metal-free catalysts for enantioselective transformations like aldol or Diels-Alder reactions. |

| Solid-Supported Reagents (e.g., polymer-bound PS-Pd-NHC) | Enables heterogeneous catalysis, simplifying purification and catalyst recycling in flow chemistry. |

| Deuterated & ¹³C-Labeled Reagents | Essential for kinetic isotope effect (KIE) studies to elucidate catalytic mechanisms. |

Within the framework of AI-driven rapid catalytic performance assessment, traditional catalyst screening remains a critical bottleneck in drug development and chemical synthesis. This document details the quantitative inefficiencies and provides standardized protocols for key screening methods, highlighting the transition towards high-throughput and AI-enhanced approaches.

Quantitative Analysis of Traditional Screening Limitations

Table 1: Cost and Time Analysis of Manual Catalyst Screening

| Screening Parameter | Traditional Batch Method | High-Throughput Parallel Method | Relative Efficiency Gain |

|---|---|---|---|

| Catalysts Tested per Week | 10 - 50 | 1,000 - 10,000 | 100x - 200x |

| Material Cost per Test | $200 - $500 | $5 - $20 | ~40x reduction |

| Personnel Hours per Data Point | 4 - 8 | 0.1 - 0.5 | ~95% reduction |

| Time to SAR (Structure-Activity Relationship) | 6 - 12 months | 2 - 4 weeks | ~90% reduction |

| False Positive/Negative Rate | 15% - 25% | 5% - 10% | ~60% reduction |

Table 2: Resource Allocation in a Typical Medicinal Chemistry Campaign

| Resource Category | % of Total Project Time (Traditional) | % of Total Project Time (AI-Informed) |

|---|---|---|

| Catalyst Synthesis & Sourcing | 35% | 15% |

| Reaction Setup & Execution | 30% | 10% |

| Product Analysis & Characterization | 25% | 15% |

| Data Analysis & Decision Making | 10% | 60% (incl. AI model training/validation) |

Detailed Experimental Protocols

Protocol 1: Traditional Batch-Mode Cross-Coupling Catalyst Screening

Objective: To evaluate Pd-based catalyst libraries for a Suzuki-Miyaura coupling in a manual, one-reaction-at-a-time format.

Materials: See "The Scientist's Toolkit" below.

Procedure:

- Reaction Setup: In an inert atmosphere glovebox, charge 24 individual 5 mL microwave vials with aryl halide (0.1 mmol, 1.0 equiv).

- Catalyst/Additive Addition: To each vial, add a distinct catalyst (2 mol%) and ligand (4 mol%) from the library. Use a fresh micropipette tip for each addition.

- Base and Solvent Addition: Add base (2.0 equiv) and degassed solvent mixture (1.0 mL of dioxane/H₂O 4:1) to all vials.

- Initiation: Add boronic acid (1.2 equiv) via syringe to start the reaction. Seal vials.

- Heating: Remove vials from glovebox and heat in a pre-heated aluminum block at 80°C for 16 hours.

- Quenching & Analysis: Cool vials to RT. Quench each with saturated NH₄Cl (1 mL). Extract with EtOAc (3 x 2 mL). Combine organic layers, dry over MgSO₄, filter, and concentrate.

- Yield Determination: Analyze each crude residue by quantitative NMR using an internal standard (e.g., 1,3,5-trimethoxybenzene). Calculate conversion and yield.
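
The qNMR yield in the last step comes from comparing the per-proton integral of a product signal with that of the internal standard. The sketch below shows this calculation; the function name and the example integrals are hypothetical and serve only to illustrate the arithmetic.

```python
def qnmr_yield_pct(integral_product: float, n_h_product: int,
                   integral_standard: float, n_h_standard: int,
                   mmol_standard: float, mmol_limiting_reagent: float) -> float:
    """Yield (%) from 1H qNMR with an internal standard.

    mmol_product = mmol_standard * (I_product / N_H,product) / (I_standard / N_H,standard)
    """
    per_h_product = integral_product / n_h_product
    per_h_standard = integral_standard / n_h_standard
    mmol_product = mmol_standard * per_h_product / per_h_standard
    return 100.0 * mmol_product / mmol_limiting_reagent


# Hypothetical example: the three aromatic protons of 1,3,5-trimethoxybenzene
# (0.05 mmol added) are set to an integral of 3.00; a 1H product signal
# integrates to 1.70; the aryl halide was charged at 0.1 mmol.
example_yield = qnmr_yield_pct(
    integral_product=1.70, n_h_product=1,
    integral_standard=3.00, n_h_standard=3,
    mmol_standard=0.05, mmol_limiting_reagent=0.1,
)
print(f"qNMR yield: {example_yield:.1f}%")
```

The result is only as reliable as the choice of well-resolved, fully relaxed signals, so the relaxation delay should be long enough for both the standard and the product peak.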

Protocol 2: Parallel High-Throughput Screening Workflow

Objective: To screen 96 catalysts in parallel for asymmetric hydrogenation using automated liquid handling.

Procedure:

- Plate Preparation: Using an automated liquid handler, dispense stock solutions of substrate (10 µL, 0.1 M in toluene) into all wells of a 96-well glass-coated microtiter plate.

- Catalyst/Ligand Dispensing: Dispense unique catalyst/ligand combinations from stock solutions into individual wells (2 µL each, 2 mol% catalyst).

- Solvent Addition: Add degassed solvent (188 µL) to each well to bring total volume to 200 µL.

- Reaction Initiation: Seal plate with a gas-permeable membrane. Transfer plate to a parallel pressure reactor. Purge with H₂ (3x), then pressurize to 50 bar H₂.

- Parallel Agitation & Heating: Agitate and heat the entire plate at 30°C for 6 hours.

- Automated Sampling & Analysis: Depressurize. Using the liquid handler, dilute an aliquot from each well with methanol (1:10) and transfer to a 96-well analysis plate.

- High-Throughput Analysis: Analyze via UPLC-MS with a run time of < 3 minutes per sample. Use automated peak integration and enantiomeric excess (ee) calculation via chiral column separation.
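
The dispensing volumes in the first three steps are linked: the substrate amount fixes the catalyst amount at 2 mol%, which in turn fixes the catalyst stock concentration and the solvent top-up. A minimal sketch of that bookkeeping follows; the function and key names are illustrative, not part of any liquid-handler software.

```python
def well_plan(substrate_ul: float, substrate_molar: float,
              catalyst_ul: float, catalyst_mol_pct: float,
              total_ul: float) -> dict:
    """Per-well amounts for a screening plate.

    Volumes are in microliters and concentrations in mol/L,
    so volume x concentration gives micromoles.
    """
    substrate_umol = substrate_ul * substrate_molar
    catalyst_umol = substrate_umol * catalyst_mol_pct / 100.0
    catalyst_stock_mm = catalyst_umol / catalyst_ul * 1000.0  # mol/L -> mmol/L
    solvent_ul = total_ul - substrate_ul - catalyst_ul
    if solvent_ul < 0:
        raise ValueError("reagent volumes exceed the total well volume")
    return {
        "substrate_umol": substrate_umol,
        "catalyst_umol": catalyst_umol,
        "catalyst_stock_mM": catalyst_stock_mm,
        "solvent_ul": solvent_ul,
    }


# Values from the protocol: 10 uL of 0.1 M substrate, 2 uL catalyst at 2 mol%, 200 uL total.
plan = well_plan(substrate_ul=10.0, substrate_molar=0.1,
                 catalyst_ul=2.0, catalyst_mol_pct=2.0, total_ul=200.0)
for key, value in plan.items():
    print(f"{key}: {value:g}")
```

With the protocol's numbers this gives 1 µmol of substrate per well, a 10 mM catalyst stock, and the stated 188 µL of added solvent.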

Visualization of Workflows and Relationships

Title: Traditional Catalyst Screening Bottleneck Workflow

Title: AI-Enhanced High-Throughput Screening Workflow

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials for Catalytic Screening

| Item | Function & Rationale |

|---|---|

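| Pre-catalysts (e.g., Pd(PPh₃)₄, Pd₂(dba)₃, [Ir(COD)Cl]₂) | Well-defined metal sources that activate in situ to generate the active catalytic species. Some (e.g., Pd(PPh₃)₄) are air-sensitive and should be stored and handled under inert atmosphere. |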

| Ligand Libraries (e.g., phosphines (XPhos, SPhos), N-heterocyclic carbenes (IMes, SIPr), chiral ligands (BINAP, Josiphos)) | Modulate catalyst activity, selectivity, and stability. Diversity is key for exploration. |

| Internal Standards for qNMR (e.g., 1,3,5-Trimethoxybenzene, Dimethyl terephthalate) | Enables rapid, accurate yield determination without full purification. |

| Degassed Solvents in Sure/Seal Bottles | Removes O₂ and H₂O, crucial for air-sensitive catalysts, ensuring reproducibility. |

| Automated Liquid Handler Tips & Microtiter Plates | Enables precise, parallel delivery of reagents in sub-milliliter volumes for HTE. |

| Glass-Coated or Polymer-Based 96-Well Reaction Plates | Chemically resistant wells for parallel reactions at various temperatures and pressures. |

| Parallel Pressure Reactor Stations | Allows simultaneous execution of multiple gas-phase reactions (H₂, CO, etc.) under pressure. |

| UPLC-MS with Automated Sampler | Provides rapid analytical turnaround (minutes/sample) with mass confirmation for HTE. |

| Chiral UPLC/HPLC Columns (e.g., Chiralpak IA, IB, IC) | Essential for determining enantiomeric excess (ee) in asymmetric catalysis screens. |

Within the paradigm of AI-driven rapid catalytic performance assessment, precise and standardized experimental metrics are the fundamental data inputs for machine learning models. The accurate determination of yield, selectivity, turnover frequency (TOF), and stability is critical for generating high-fidelity datasets. These datasets train predictive algorithms to design catalysts de novo, optimize reaction conditions, and accelerate the development cycle in pharmaceutical and fine chemical synthesis. This Application Note details the protocols for measuring these core performance indicators.

Key Performance Metrics: Definitions and Quantitative Benchmarks

Table 1: Core Catalytic Performance Metrics

| Metric | Definition & Formula | Ideal Range (Pharma Context) | Primary Analytical Method |

|---|---|---|---|

| Yield (%) | (Moles of product formed / Moles of limiting reactant) x 100 | >90% (API steps) | NMR, GC, HPLC |

| Selectivity (%) | (Moles of desired product / Moles of total products) x 100 | >95% (minimize isomers/byproducts) | GC-MS, LC-MS |

| Turnover Number (TON) | Total moles of product per mole of catalyst. | 10⁴ - 10⁶ (for cost-effective processes) | Calculated from yield |

| Turnover Frequency (TOF, h⁻¹) | TON per unit time (initial rate period). TOF = (Moles of product) / (Moles of catalyst x Time). | 10 - 10⁵ (process-dependent) | Kinetic analysis (in situ IR, calorimetry) |

| Stability (Lifetime) | Operational time or total TON before activity/selectivity drops by 50%. | >1000 h (continuous flow) | Long-duration testing |

Table 2: Common Stability Test Outcomes

| Test Type | Protocol | Data Output for AI Training |

|---|---|---|

| Batch Reusability | Catalyst recovered, washed, and reused in identical cycles. | Yield vs. Cycle Number plot. |

| Continuous Flow | Fixed-bed reactor under constant feed; monitor outlet. | Conversion vs. Time-on-Stream (TOS) plot. |

| Leaching Test | Reaction mixture analyzed for catalyst metal post-reaction. | ppm-level metal detected by ICP-MS. |

| Hot Filtration | Reaction filtered hot to remove catalyst; filtrate monitored. | Confirms heterogeneous vs. homogeneous nature. |

Experimental Protocols

Protocol 3.1: Standardized Catalytic Test for Initial Performance Assessment

Objective: To obtain concurrent data for Yield, Selectivity, and initial TOF in a batch reactor.

Procedure:

- Reaction Setup: In an inert atmosphere glovebox, charge a stirred reactor with substrate (1.0 mmol), internal standard (for GC/NMR, e.g., 0.1 mmol), and catalyst (0.5-1.0 mol%). Seal the reactor.

- Initiation: Transfer reactor out, connect to pressure/thermo-control, and add solvent (2 mL) via syringe. Start stirring (800 rpm) and heat to target temperature (T1).

- Kinetic Sampling: At precise time intervals (t = 2, 5, 10, 15, 30, 60 min), withdraw a small aliquot (∼50 µL) using a gas-tight syringe. Quench immediately and dilute for analysis.

- Analysis: Quantify substrate consumption and product formation using calibrated GC-FID or HPLC. Use the internal standard for absolute quantification.

- Calculation:

- Yield (t) = (mol Product (t) / mol Substrate initial) x 100.

- Selectivity (t) = (mol Desired Product (t) / Σ mol all Products (t)) x 100.

- Initial TOF: Calculate from the slope of the product mol vs. time curve at <10% conversion, normalized to catalyst moles and time.
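
The calculation steps above can be sketched in a few lines of plain Python. All function names and the kinetic data are hypothetical; the initial TOF is taken from a least-squares slope over the early points, using product formed relative to initial substrate as a stand-in for conversion, which assumes selectivity is close to 100% in that window.

```python
def yield_pct(mmol_product: float, mmol_substrate_initial: float) -> float:
    return 100.0 * mmol_product / mmol_substrate_initial


def selectivity_pct(mmol_desired: float, mmol_all_products: float) -> float:
    return 100.0 * mmol_desired / mmol_all_products


def turnover_number(mmol_product: float, mmol_catalyst: float) -> float:
    return mmol_product / mmol_catalyst


def least_squares_slope(x, y) -> float:
    """Slope of the ordinary least-squares line through (x, y)."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    return sxy / sxx


def initial_tof_per_h(times_min, mmol_product, mmol_substrate_initial,
                      mmol_catalyst, max_conversion=0.10) -> float:
    """Initial TOF (1/h) from the product-vs-time slope below max_conversion."""
    early = [(t, p) for t, p in zip(times_min, mmol_product)
             if p / mmol_substrate_initial < max_conversion]
    if len(early) < 2:
        raise ValueError("need at least two points in the initial-rate window")
    t, p = zip(*early)
    slope_mmol_per_min = least_squares_slope(t, p)
    return slope_mmol_per_min * 60.0 / mmol_catalyst


# Hypothetical run: 1.0 mmol substrate, 1 mol% catalyst (0.01 mmol).
times_min = [2, 5, 10, 15, 30, 60]
mmol_product = [0.010, 0.025, 0.050, 0.075, 0.140, 0.250]

tof = initial_tof_per_h(times_min, mmol_product, 1.0, 0.01)
final_yield = yield_pct(mmol_product[-1], 1.0)
final_ton = turnover_number(mmol_product[-1], 0.01)
final_selectivity = selectivity_pct(0.250, 0.260)  # 0.010 mmol byproducts assumed
print(f"initial TOF: {tof:.1f} 1/h, yield: {final_yield:.1f}%, "
      f"TON: {final_ton:.0f}, selectivity: {final_selectivity:.1f}%")
```

Only the first four time points fall below 10% conversion here, so the later, slower points do not bias the initial rate.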

Protocol 3.2: Catalyst Stability and Reusability Test

Objective: To assess catalyst deactivation and robustness over multiple cycles.

Procedure:

- Initial Run: Perform reaction per Protocol 3.1 for a fixed duration (e.g., 2 h).

- Catalyst Recovery: Cool reaction mixture. For heterogeneous catalysts, centrifuge to recover solid. Wash with solvent (3 x 2 mL) and dry in vacuo. For homogeneous catalysts, attempt recovery via precipitation or extraction.

- Reuse Cycles: Charge the recovered catalyst with fresh substrate and solvent. Repeat the reaction under identical conditions.

- Leaching Check (ICP-MS): After Step 2, digest the supernatant or filtrate in concentrated HNO₃. Analyze metal content via Inductively Coupled Plasma Mass Spectrometry (ICP-MS).

- Data Reporting: Plot Yield and Selectivity versus Cycle Number (1-5+). Report cumulative TON.

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials for Catalytic Performance Screening

| Item | Function & Rationale |

|---|---|

| High-Throughput Parallel Reactor (e.g., 24-well) | Enables simultaneous testing of multiple catalysts/conditions, generating uniform data for AI/ML model training. |

| In Situ Reactor Probe (ATR-FTIR, Raman) | Provides real-time kinetic data for accurate TOF determination without sampling disturbances. |

| Automated Liquid Handling Robot | Ensures precision and reproducibility in catalyst/substrate dosing, eliminating human error. |

| Standardized Catalyst Libraries | Well-characterized, diverse sets of molecular or heterogeneous catalysts for model validation. |

| Integrated Analytics (GC/MS, HPLC/MS) | Coupled with auto-samplers for rapid, high-volume analysis of reaction mixtures. |

| Stable Isotope-Labeled Substrates | Used in mechanistic studies to trace atom pathways, informing selectivity models. |

Visualization: AI-Driven Workflow for Performance Assessment

Diagram 1: AI-driven catalytic performance assessment workflow.

Diagram 2: Data pipeline from reaction to key metrics.

The integration of Artificial Intelligence (AI) and Machine Learning (ML) into chemistry is changing research methodologies, particularly in rapid catalytic performance assessment on AI-driven platforms. This approach enables researchers to move beyond traditional trial-and-error experimentation, using data-driven models to predict catalyst efficacy, optimize reaction conditions, and accelerate the discovery of novel materials and pharmaceuticals. This primer introduces core AI/ML concepts and provides actionable protocols for chemists to implement these tools in catalytic and drug development research.

Core AI/ML Concepts for Chemical Research

Artificial Intelligence (AI) is a broad field focused on creating systems capable of performing tasks that typically require human intelligence. Machine Learning (ML), a subset of AI, involves algorithms that improve their performance at a task through experience (data). In chemistry, the most relevant branches are:

- Supervised Learning: Training models on labeled data (e.g., catalyst structures paired with their turnover frequency). Used for prediction and classification.

- Unsupervised Learning: Finding hidden patterns or groupings in unlabeled data (e.g., clustering similar reaction outcomes).

- Deep Learning: Utilizing multi-layered neural networks to model complex, non-linear relationships in high-dimensional data (e.g., from spectral analysis or molecular graphs).

Application Notes for Catalytic Performance Assessment

An AI-driven platform for rapid assessment typically follows a cyclical workflow: Data Curation -> Feature Engineering -> Model Training -> Prediction & Validation -> Experimental Feedback.

Current literature highlights the performance of various ML models in predicting catalytic properties. The following table summarizes key metrics from recent studies (2023-2024).

Table 1: Performance of ML Models in Catalytic Property Prediction

| Model Type | Application Example | Key Metric (e.g., R² Score) | Dataset Size | Reference Year |

|---|---|---|---|---|

| Graph Neural Network (GNN) | Predicting catalyst selectivity in C-H activation | R² = 0.89 | ~5,000 reactions | 2024 |

| Random Forest (RF) | Classifying high/low activity from descriptor set | Accuracy = 94% | ~2,000 catalysts | 2023 |

| Gradient Boosting (XGBoost) | Predicting turnover frequency (TOF) from elemental properties | MAE* = 12.3 h⁻¹ | ~3,500 data points | 2024 |

| Convolutional Neural Network (CNN) | Analyzing microscopy images for active site identification | F1-Score = 0.91 | ~10,000 images | 2023 |

*MAE: Mean Absolute Error

Detailed Experimental Protocols

Protocol 1: Building a Predictive Model for Catalyst Activity Using Supervised Learning

Objective: To train a model that predicts the yield of a catalytic reaction based on molecular descriptors of the catalyst and reaction conditions.

Materials & Software:

- Dataset: Curated CSV file containing catalyst SMILES strings, temperature, pressure, solvent polarity index, and corresponding reaction yield.

- Software: Python (v3.9+) with the libraries scikit-learn, pandas, numpy, and rdkit (for descriptor calculation).

Procedure:

- Feature Engineering:

- Use the rdkit.Chem module to parse SMILES strings and compute molecular descriptors (e.g., molecular weight, number of aromatic rings, topological polar surface area) for each catalyst.

- Combine these with the continuous reaction condition variables (temperature, pressure) into a single feature matrix (X).

- Data Preprocessing:

- Split the dataset into training (70%), validation (15%), and test (15%) sets using sklearn.model_selection.train_test_split.

- Scale all features to zero mean and unit variance using sklearn.preprocessing.StandardScaler, fitting the scaler on the training set only to avoid data leakage.

- Model Training & Validation:

- Initialize a Random Forest Regressor (sklearn.ensemble.RandomForestRegressor).

- Train the model on the training set using default parameters.

- Use the validation set to tune hyperparameters (e.g., n_estimators, max_depth) via grid search.

- Evaluation:

- Predict yields on the held-out test set.

- Calculate performance metrics: R² score and Mean Absolute Error (MAE).

- Deployment:

- Save the trained model as a .pkl file using the pickle module.

- Create a simple function that takes new catalyst SMILES and conditions as input and returns a predicted yield.
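
The procedure above can be sketched end to end with scikit-learn. This is a minimal illustration under stated assumptions, not a reference implementation: the feature matrix is synthetic (random numbers standing in for RDKit descriptors plus temperature and pressure), the yield function is invented, and the grid search is a small manual loop scored on the validation set. The model is pickled in memory rather than written to a .pkl file, and the hypothetical `predict_yield` helper takes a descriptor vector instead of a SMILES string because RDKit is not used here.

```python
import pickle

import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.metrics import mean_absolute_error, r2_score
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)

# Synthetic stand-in for the curated CSV: columns 0-2 mimic catalyst
# descriptors, column 3 temperature, column 4 pressure (all scaled to 0-1).
n_samples = 300
X = rng.uniform(size=(n_samples, 5))
y = 100 * (0.5 * X[:, 0] + 0.3 * X[:, 3] ** 2 + 0.2 * X[:, 4]) + rng.normal(0, 2, n_samples)

# 70 / 15 / 15 split: hold out 30%, then split that portion in half.
X_train, X_hold, y_train, y_hold = train_test_split(X, y, test_size=0.30, random_state=0)
X_val, X_test, y_val, y_test = train_test_split(X_hold, y_hold, test_size=0.50, random_state=0)

# Fit the scaler on the training set only, then apply it everywhere.
scaler = StandardScaler().fit(X_train)
X_train_s, X_val_s, X_test_s = (scaler.transform(a) for a in (X_train, X_val, X_test))

# Manual grid search: keep the model with the lowest validation MAE.
best_model, best_val_mae = None, float("inf")
for n_estimators in (50, 100):
    for max_depth in (3, None):
        model = RandomForestRegressor(n_estimators=n_estimators,
                                      max_depth=max_depth, random_state=0)
        model.fit(X_train_s, y_train)
        val_mae = mean_absolute_error(y_val, model.predict(X_val_s))
        if val_mae < best_val_mae:
            best_model, best_val_mae = model, val_mae

# Final, one-time evaluation on the untouched test set.
y_pred = best_model.predict(X_test_s)
test_r2 = r2_score(y_test, y_pred)
test_mae = mean_absolute_error(y_test, y_pred)
print(f"test R2 = {test_r2:.2f}, test MAE = {test_mae:.2f} (% yield)")

# Serialize scaler and model together so deployment uses identical preprocessing.
blob = pickle.dumps({"scaler": scaler, "model": best_model})


def predict_yield(features, serialized=blob) -> float:
    """Predict yield for one feature vector using the pickled scaler and model."""
    bundle = pickle.loads(serialized)
    scaled = bundle["scaler"].transform(np.asarray(features, dtype=float).reshape(1, -1))
    return float(bundle["model"].predict(scaled)[0])


example_prediction = predict_yield([0.8, 0.2, 0.5, 0.9, 0.4])
```

Storing the scaler alongside the model matters in practice: a model served with a differently fitted scaler will silently return wrong predictions.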

Protocol 2: Active Learning for High-Throughput Catalyst Screening

Objective: To iteratively guide experiments by using an ML model to select the most informative catalysts to test next, maximizing performance discovery.

Procedure:

- Initial Model: Train a preliminary model on a small, diverse seed dataset of known catalysts (~50 data points).

- Uncertainty Sampling:

- Use the model to predict outcomes for a large virtual library of candidate catalysts (~10,000).

- For each prediction, calculate the uncertainty (e.g., standard deviation of predictions from all trees in a Random Forest ensemble).

- Selection & Experimentation:

- Rank the candidates by prediction uncertainty.

- Select the top 10-20 candidates with the highest uncertainty for synthesis and experimental testing. This targets the model's "unknown unknowns."

- Iterative Loop:

- Add the new experimental results to the training dataset.

- Retrain the model on the expanded dataset.

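- Repeat steps 2-4 for 5-10 cycles. Pure uncertainty sampling mainly improves the model's coverage of chemical space; to steer the search toward high-performance regions, rank candidates by a criterion that combines predicted performance with uncertainty (e.g., an upper-confidence-bound score).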
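
The loop described in this protocol can be sketched as follows. Everything experimental is simulated: `run_experiment` is a hypothetical stand-in for a lab measurement, the candidate library is random descriptor vectors (2,000 rather than ~10,000 to keep the run short), and uncertainty is estimated as the standard deviation of per-tree predictions from a scikit-learn Random Forest.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor

rng = np.random.default_rng(1)


def run_experiment(descriptors) -> float:
    """Hypothetical lab measurement: yield peaks where every descriptor is near 0.7."""
    return float(100.0 * np.exp(-np.sum((descriptors - 0.7) ** 2) / 0.1))


# Virtual library of candidate catalysts, each described by 4 descriptors.
library = rng.uniform(size=(2000, 4))

# Seed dataset: 50 randomly chosen candidates with measured outcomes.
labeled_idx = [int(i) for i in rng.choice(len(library), size=50, replace=False)]
measured = {i: run_experiment(library[i]) for i in labeled_idx}

for cycle in range(5):
    X_train = library[labeled_idx]
    y_train = np.array([measured[i] for i in labeled_idx])
    model = RandomForestRegressor(n_estimators=100, random_state=cycle)
    model.fit(X_train, y_train)

    # Uncertainty = spread of the individual trees' predictions per candidate.
    per_tree = np.stack([tree.predict(library) for tree in model.estimators_])
    uncertainty = per_tree.std(axis=0)
    uncertainty[labeled_idx] = -1.0  # never re-select already tested candidates

    # Test the 10 most uncertain candidates and add them to the training data.
    batch = [int(i) for i in np.argsort(uncertainty)[-10:]]
    for i in batch:
        measured[i] = run_experiment(library[i])
    labeled_idx.extend(batch)
    print(f"cycle {cycle + 1}: {len(labeled_idx)} labeled, "
          f"best measured yield so far = {max(measured.values()):.1f}%")
```

Replacing the ranking line with a score such as mean prediction plus a multiple of the uncertainty turns this exploration-only loop into one that also exploits promising regions.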

Visualization of Key Workflows

AI-Driven Catalyst Discovery Workflow

ML Model for Property Prediction

The Scientist's Toolkit: Key Reagents & Solutions

Table 2: Essential Materials for Implementing AI/ML in Chemical Research

| Item / Solution | Function in AI/ML Workflow | Example Vendor / Library |

|---|---|---|

| Curated Chemical Dataset | The foundational fuel for training models. Requires consistent formatting and annotation. | PubChem, Citrination, MIT Catalyst Database |

| Computational Descriptors | Quantitative representations of molecular structures used as model input features. | RDKit (for 2D descriptors), Dragon, COSMOtherm |

| ML Algorithm Library | Provides pre-built, optimized algorithms for model development and training. | scikit-learn (classic ML), PyTorch/TensorFlow (Deep Learning) |

| Automated Reaction Platform | Physical hardware for generating high-fidelity validation data in the feedback loop. | Chemspeed, Unchained Labs, home-built flow/HTE systems |

| Model Serving Framework | Allows deployment of trained models as APIs for easy use by other researchers. | Flask, FastAPI, TorchServe |

The discovery and optimization of catalysts, critical for pharmaceutical synthesis and green chemistry, have traditionally relied on iterative, time-consuming experimental screening. This Application Note details the integration of an AI-driven platform for rapid catalytic performance assessment, framed within a broader thesis that machine learning can accelerate the entire discovery pipeline—from virtual screening and mechanistic insight to experimental validation and scale-up.

Table 1: Comparative Performance of AI-Driven vs. Traditional High-Throughput Experimentation (HTE) for Cross-Coupling Catalyst Discovery

| Metric | Traditional HTE | AI-Guided Platform (Reported Averages) | Improvement Factor |

|---|---|---|---|

| Initial Screening Rate (candidates/week) | 50 - 200 | 5,000 - 20,000 (virtual) | ~100x |

| Experimental Validation Required | 100% of library | 0.5 - 5% of virtual library | ~95% reduction |

| Cycle Time for Lead Optimization | 6 - 12 months | 1 - 3 months | ~4x faster |

| Success Rate (Yield >90% & ee >95%) | < 1% | 8 - 15% | ~10-15x |

| Material Consumption per Test | 1 - 10 mg | 0.1 - 1 mg (microscale) | ~10x reduction |

Table 2: Performance Metrics of Representative AI-Identified Catalysts (2023-2024)

| Reaction Class | AI-Predicted Catalyst | Key Performance Indicator (Predicted) | Experimental Validation | Reference |

|---|---|---|---|---|

| Asymmetric Hydrogenation | Bidentate phosphine-oxazoline Fe complex | 98% ee, 99% conv. | 96% ee, >99% conv. | Science (2023) |

| C-N Cross-Coupling | Heterogeneous Pd single-atom on N-doped carbon | TON: 10^5 | TON: 9.2 x 10^4 | Nat. Catal. (2024) |

| Photoredox C-C Coupling | Organic polymer photocatalyst | Quantum Yield: 0.45 | Quantum Yield: 0.41 | JACS (2024) |

| CO2 Electroreduction | Doped Cu-Zn alloy | Selectivity to C2+: 85% @ -1.0V | Selectivity: 82% @ -1.0V | Nature (2024) |

Experimental Protocols

Protocol 1: AI-Guided Microscale Kinetic Screening for Homogeneous Catalysis

Purpose: To experimentally validate AI-predicted catalyst leads using minimal materials.

Workflow:

- Input Preparation: From the AI-generated shortlist (typically 50-100 candidates), prepare stock solutions (10 mM in appropriate anhydrous solvent) in a glovebox.

- Microscale Reaction Setup: Utilize an automated liquid handling system to dispense:

- 2 µL of catalyst stock (20 nmol) into a 384-well microtiter plate.

- 100 µL of substrate solution (0.1 M, 10 µmol).

- 50 µL of co-catalyst/activator solution if required.

- Reaction Execution: Seal plates under inert atmosphere. Place in a pre-heated/shaken thermal block or photoreactor for the prescribed time (1-24h).

- Quenching & Analysis: Add 50 µL of quenching solution (e.g., 1% TFA in MeCN). Directly analyze 10 µL injections via UPLC-MS equipped with a fast autosampler.

- Data Processing: Integrate peaks for product and internal standard. Convert to conversion and yield using calibration curves. Upload results to the AI platform for model refinement.

Protocol 2: High-Throughput Characterization for Heterogeneous Catalysts

Purpose: To synthesize and characterize AI-predicted solid catalyst formulations.

Workflow:

- Parallel Synthesis: Use a robotic sol-gel or impregnation station to prepare catalyst libraries on 64-well ceramic monolith plates. Follow AI-suggested stoichiometries and calcination temperatures.

- Rapid Characterization:

- PXRD: Perform rapid synchrotron or micro-XRD mapping across the library to confirm phase purity.

- XPS/EDS: Use automated XPS and electron-microscopy-based EDS for surface and elemental composition mapping.

- Performance Testing: Load the monolith plate into a parallel plug-flow reactor system. Expose to standardized reactant gas/liquid streams. Monitor effluent composition in real-time using multiplexed mass spectrometry.

- Stability Test: For top performers, run extended duration tests (24-72h) under accelerated aging conditions (elevated temperature).

Visualization: Workflows & Pathways

Title: AI-Driven Catalyst Discovery Closed-Loop Workflow

Title: AI-Modeled Catalytic Cycle with Key Transition States

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials for AI-Driven Catalyst Discovery & Validation

| Item | Function in AI-Driven Workflow | Example Product/Specification |

|---|---|---|

| Automated Liquid Handler | Enables precise, reproducible microscale reaction setup for validating 100s of AI predictions. | Hamilton Microlab STAR, Labcyte Echo (acoustic dispenser). |

| Parallel Microreactor System | Allows simultaneous testing of reaction conditions (temp, pressure, light) for shortlisted catalysts. | Unchained Labs Little Bird Series, HEL FlowCAT. |

| High-Throughput UPLC-MS | Provides rapid, quantitative analysis of reaction outcomes for model feedback. | Waters Acquity UPLC with Isocratic Solvent Manager and QDa Detector. |

| Robotic Synthesis Platform | Automates synthesis of novel solid or organometallic catalyst libraries from AI-generated structures. | Chemspeed Technologies SWING, Freeslate CGS. |

| Multiplexed Gas/Liquid Analyzer | Real-time monitoring of product streams in heterogeneous catalysis tests. | Hiden Analytical HPR-20 Mass Spectrometer, IRmadillo FTIR. |

| Quantum Chemistry Software | Generates training data (energies, geometries) for AI/ML models. | Gaussian 16, ORCA, with automated scripting interfaces (ASE, pyscf). |

| Catalyst Database License | Provides structured, historical data for model training. | Reaxys, CAS Catalysis Resource, NIST Catalysis. |

| Active Learning Platform | Orchestrates the iterative loop between prediction, experiment, and learning. | Citrine Informatics CAT, Atonometrics Sphinx, custom Python (scikit-learn, PyTorch). |

Building and Deploying AI Platforms for Catalyst Assessment: A Step-by-Step Guide

Within the paradigm of AI-driven rapid catalytic performance assessment, the predictive power of machine learning (ML) models is fundamentally constrained by the quality, scope, and veracity of the underlying data. This document outlines comprehensive Application Notes and Protocols for sourcing, curating, and preparing high-quality datasets for catalytic research, with a focus on heterogeneous and enzymatic catalysis relevant to pharmaceutical synthesis.

Note 2.1: Primary vs. Secondary Data Sourcing

- Primary Experimental Sourcing: Direct generation of catalytic performance data (e.g., conversion, yield, turnover frequency (TOF), enantiomeric excess (ee)) via standardized in-house high-throughput experimentation (HTE). This offers maximum control over data consistency but is resource-intensive.

- Secondary Literature Sourcing: Extraction of data from published scientific literature and established databases. This rapidly scales dataset size but introduces heterogeneity from varied experimental conditions.

Note 2.2: Critical Metadata Requirements

For any catalytic data point to be ML-ready, it must be associated with comprehensive metadata. Incomplete metadata renders data points unusable for predictive modeling.

Note 2.3: The Catalyst Identifier Crisis

A major challenge in data unification is the lack of standardized, machine-readable representations for catalyst structures (especially organometallic complexes) and reaction conditions. Adopting canonical identifiers (e.g., InChIKey, SMILES) is non-negotiable for database construction.

Protocols for Data Curation

Protocol 3.1: Systematic Data Extraction from Literature

Objective: To consistently transform published catalytic performance data into a structured, queryable format.

Materials: Literature database access (e.g., SciFinder, Reaxys), chemical structure drawing software, data templating spreadsheet or database schema.

Procedure:

- Define Query & Selection Criteria: Specify search terms (e.g., "asymmetric hydrogenation," "C-N coupling," "TOF"). Apply filters for publication date, catalyst type, and substrate scope.

- Populate Data Template: For each relevant publication, extract information into pre-defined fields (see Table 1).

- Structure Standardization: Convert all reported catalyst, ligand, substrate, and product structures into canonical SMILES strings. Validate using a cheminformatics toolkit (e.g., RDKit).

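- Unit Harmonization: Convert all reported numerical values to a single, consistent unit system (e.g., pressure from psi to bar, temperature from °C or °F to K). Converting catalyst loading from mol% to molarity additionally requires the substrate concentration, so record both values where they are reported.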

- Contextual Flagging: Flag entries with missing critical metadata (e.g., missing TOF, undefined ee measurement conditions) for potential exclusion or imputation.
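The unit-harmonization and flagging steps above can be sketched in a few lines of plain Python. This is a minimal illustration, not a prescribed implementation: the function names, the unit labels, and the `REQUIRED_FIELDS` tuple are assumptions chosen for the example.

```python
# Minimal sketch of unit harmonization and metadata flagging.
# All names (functions, unit labels, REQUIRED_FIELDS) are illustrative assumptions.

# Conversion factors to bar for a few commonly reported pressure units.
PRESSURE_TO_BAR = {"bar": 1.0, "psi": 0.0689476, "atm": 1.01325, "kPa": 0.01}

# Hypothetical set of fields treated as critical metadata.
REQUIRED_FIELDS = ("catalyst_smiles", "substrate_smiles", "temperature_K", "ee")


def pressure_to_bar(value: float, unit: str) -> float:
    """Convert a pressure reading to bar."""
    if unit not in PRESSURE_TO_BAR:
        raise ValueError(f"Unsupported pressure unit: {unit}")
    return value * PRESSURE_TO_BAR[unit]


def temperature_to_kelvin(value: float, unit: str) -> float:
    """Convert a temperature given in K, °C ("C"), or °F ("F") to Kelvin."""
    if unit == "K":
        return value
    if unit == "C":
        return value + 273.15
    if unit == "F":
        return (value - 32.0) * 5.0 / 9.0 + 273.15
    raise ValueError(f"Unsupported temperature unit: {unit}")


def flag_missing(record: dict) -> list:
    """Return the names of critical fields that are absent or None."""
    return [name for name in REQUIRED_FIELDS if record.get(name) is None]


if __name__ == "__main__":
    entry = {
        "catalyst_smiles": "C",  # placeholder structure
        "substrate_smiles": "CC",  # placeholder structure
        "temperature_K": temperature_to_kelvin(100.0, "C"),
        "ee": None,  # not reported in the source
    }
    print(pressure_to_bar(145.0, "psi"), flag_missing(entry))
```

Flagged entries can then be routed to exclusion or imputation according to the policy chosen during data integration.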

Protocol 3.2: Curation and Validation of Primary HTE Data

Objective: To ensure internally generated data adheres to FAIR (Findable, Accessible, Interoperable, Reusable) principles.

Materials: HTE reactor system, automated analytics (e.g., UPLC, GC), Laboratory Information Management System (LIMS).

Procedure:

- Experimental Design File: Generate a digital file linking each reactor well to a specific combination of catalyst, substrate, and condition variables (e.g., Well_A01: [Cat_SMILES], [Sub_SMILES], T=373.15, P=10).

- Raw Data Capture: Automate the transfer of analytical results (e.g., chromatogram peak areas) from instruments to the LIMS, using the experimental design file as a map.

- Performance Metric Calculation: Apply consistent formulas within the LIMS to calculate conversion, yield, and selectivity from raw analytical data.

- Outlier Detection & Review: Employ statistical methods (e.g., Z-score analysis) within the dataset to flag outliers. Manually review chromatograms/spectra of flagged reactions for technical errors.

- Data Packaging: Export the validated dataset as a structured file (e.g., .csv, .json) containing all performance metrics and their associated, structured metadata.
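The outlier-detection step of this protocol can be illustrated with a simple Z-score filter. The sketch below uses only the standard library; the function name, the default threshold of 3.0, and the example yields are assumptions for illustration.

```python
# Minimal sketch of Z-score outlier flagging for replicate HTE results.
# Function name, threshold, and example values are illustrative assumptions.
from statistics import mean, stdev


def flag_outliers(values, threshold=3.0):
    """Return the indices of values whose |z-score| exceeds the threshold."""
    if len(values) < 2:
        return []
    mu = mean(values)
    sigma = stdev(values)  # sample standard deviation
    if sigma == 0:
        return []  # all values identical, nothing to flag
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]


if __name__ == "__main__":
    # Hypothetical yields (fractions) from twelve nominally identical wells.
    yields = [0.80, 0.82, 0.79, 0.81, 0.80, 0.83,
              0.78, 0.81, 0.80, 0.82, 0.79, 0.10]
    print(flag_outliers(yields))  # wells to review manually
```

With the sample standard deviation, the largest attainable |z| in a set of n values is (n − 1)/√n, so a threshold of 3 can only trigger for n ≥ 11. Smaller replicate groups need a lower threshold or a robust alternative such as a median-based score.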

Protocol 3.3: Data Integration and Conflict Resolution

Objective: To merge data from multiple sources into a single, coherent dataset.

Procedure:

- Schema Alignment: Map fields from different source tables to a unified master schema.

- Deduplication: Identify entries representing the same reaction using catalyst/substrate SMILES and condition similarity. Resolve conflicts by prioritizing primary data or data from a predefined "trusted source" hierarchy.

- Missing Data Imputation: Decide on a policy for handling missing values (e.g., removal, imputation using median/mode, or tagging with a placeholder). Note: Imputation is not recommended for critical performance outputs (yield, ee).
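As a sketch of the deduplication step, the snippet below groups records by catalyst and substrate SMILES plus a binned temperature, and keeps the record from the most trusted source. It assumes the SMILES strings are already canonical. The `SOURCE_PRIORITY` hierarchy, the field names, and the 1 K bin width are hypothetical choices.

```python
# Minimal sketch of deduplication with a "trusted source" hierarchy.
# SOURCE_PRIORITY, field names, and the temperature bin width are assumptions.

# Lower number means higher trust.
SOURCE_PRIORITY = {"PrimaryHTE": 0, "CuratedDatabase": 1, "Literature": 2}


def reaction_key(record: dict, temp_bin_K: float = 1.0) -> tuple:
    """Build a grouping key; temperatures falling in the same bin are treated as equal."""
    return (
        record["catalyst_smiles"],
        record["substrate_smiles"],
        round(record["temperature_K"] / temp_bin_K),
    )


def deduplicate(records: list) -> list:
    """Keep one record per reaction key, preferring the most trusted source."""
    best = {}
    for rec in records:
        key = reaction_key(rec)
        kept = best.get(key)
        if kept is None or SOURCE_PRIORITY[rec["source"]] < SOURCE_PRIORITY[kept["source"]]:
            best[key] = rec
    return list(best.values())


if __name__ == "__main__":
    data = [
        {"catalyst_smiles": "C", "substrate_smiles": "CC", "temperature_K": 373.15,
         "source": "Literature", "yield": 0.72},
        {"catalyst_smiles": "C", "substrate_smiles": "CC", "temperature_K": 373.0,
         "source": "PrimaryHTE", "yield": 0.68},
    ]
    print(deduplicate(data))
```

Binning by rounding can separate two temperatures that straddle a bin edge, so a production pipeline would likely compare conditions with an explicit tolerance instead.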

Data Presentation

Table 1: Essential Data Fields for a Catalytic Performance Dataset

| Field Category | Specific Field | Data Type | Description & Example |

|---|---|---|---|

| Reaction Core | Reaction_SMILES | String | Transformations in SMILES format. e.g., [C:1]=[C:2]>>[C:1][C:2] |

| Reaction Core | Reaction_Type | Categorical | e.g., "Hydrogenation", "Cross-Coupling", "Oxidation". |

| Catalyst | Catalyst_SMILES | String | Canonical SMILES of the pre-catalyst or active species. |

| Catalyst | Catalyst_Loading | Float | In mol% or molarity. |

| Substrates/Products | Substrate_SMILES | String | Canonical SMILES of the major substrate. |

| Substrates/Products | Product_SMILES | String | Canonical SMILES of the target product. |

| Conditions | Temperature_K | Float | Reaction temperature in Kelvin. |

| Conditions | Pressure_bar | Float | Pressure of gases (if applicable). |

| Conditions | Time_hr | Float | Reaction time in hours. |

| Conditions | Solvent | String | Standardized name (e.g., "MeOH", "THF"). |

| Performance | Conversion | Float | 0.0 to 1.0 (or 0-100%). |

| Performance | Yield | Float | 0.0 to 1.0 (or 0-100%). |

| Performance | Selectivity_or_ee | Float | Enantiomeric excess (0.0 to 1.0) or selectivity metric. |

| Performance | TOF | Float | Turnover Frequency (h⁻¹). |

| Metadata | Data_Source | Categorical | e.g., "PrimaryHTE", "JournalXYZ". |

| Metadata | DOI | String | Digital Object Identifier for source. |

| Metadata | Confidence_Score | Integer | 1-5 rating based on metadata completeness. |
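One way to enforce the fields of Table 1 in code is a small record type with validation. The dataclass below is a sketch under stated assumptions: the attribute names are adapted from the table, fractions are stored on a 0.0 to 1.0 scale, and the mapping from metadata completeness to a 1-5 confidence score is a hypothetical rule, not one defined by the source.

```python
# Minimal sketch of a record type mirroring Table 1.
# Attribute names, the 0-1 scale, and the confidence rule are assumptions.
from dataclasses import dataclass
from typing import Optional


@dataclass
class CatalysisRecord:
    reaction_smiles: str
    catalyst_smiles: str
    substrate_smiles: str
    product_smiles: str
    temperature_K: float
    time_hr: float
    solvent: str
    data_source: str
    yield_fraction: Optional[float] = None
    ee_fraction: Optional[float] = None
    tof_per_h: Optional[float] = None
    pressure_bar: Optional[float] = None
    doi: Optional[str] = None

    def validate(self) -> list:
        """Return a list of human-readable problems (empty if none found)."""
        problems = []
        if self.temperature_K <= 0:
            problems.append("temperature_K must be positive")
        for name in ("yield_fraction", "ee_fraction"):
            value = getattr(self, name)
            if value is not None and not 0.0 <= value <= 1.0:
                problems.append(f"{name} must lie in [0, 1]")
        return problems

    def confidence_score(self) -> int:
        """Hypothetical 1-5 rating: 1 plus up to 4 points for optional fields present."""
        optional = ("yield_fraction", "ee_fraction", "tof_per_h", "pressure_bar", "doi")
        filled = sum(getattr(self, name) is not None for name in optional)
        return 1 + round(4 * filled / len(optional))
```

Storing percentages on the wrong scale (for example 85 instead of 0.85) is then caught by `validate()` before the record reaches the training set.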

Visualization of Workflows

Diagram: Catalytic Data Curation Pipeline

Diagram: FAIR Data Curation Protocol

The Scientist's Toolkit: Research Reagent Solutions & Essential Materials

| Item | Function in Data Curation |

|---|---|

| Laboratory Information Management System (LIMS) | Digital backbone for tracking samples, experiments, and analytical data, ensuring traceability and metadata integrity. |

| Cheminformatics Toolkit (e.g., RDKit) | Software library for standardizing chemical structures (to SMILES/InChI), validating formats, and calculating molecular descriptors. |

| Electronic Lab Notebook (ELN) | Digital platform for recording experimental procedures and observations in a structured, searchable format, linked to results. |

| High-Throughput Experimentation (HTE) Platform | Automated reactor blocks and liquid handlers that generate large, consistent primary data under controlled parameter matrices. |

| Automated Analytical Systems (e.g., UPLC/GC autosamplers) | Enable high-throughput, consistent analysis of reaction outcomes, with digital output for direct data capture. |

| Literature Mining Software (e.g., NLP tools) | Assist in the automated extraction of reaction data and conditions from published literature and patents. |

| Standardized Data Template (e.g., .csv schema) | Pre-defined, column-based format that enforces consistent data entry fields and units during collection. |

| Catalyst/Substrate Library (Barcoded Vials) | Physically organized, digitally catalogued collections of reagents, enabling reliable linking of identity to experimental wells. |

Within the thesis on AI-driven rapid catalytic performance assessment, a critical preprocessing step is the conversion of raw chemical structure data into numerical feature vectors that machine learning (ML) algorithms can process. This feature engineering process, known as molecular descriptor calculation, is foundational for predicting catalytic properties such as activity, selectivity, and stability.

Core Descriptor Categories & Quantitative Data

Molecular descriptors are categorized based on the structural information they encode. The following table summarizes prevalent descriptor types, their dimensionality, and their relevance to catalytic assessment.

Table 1: Categories of Molecular Descriptors for Catalytic Performance Prediction

| Descriptor Category | Number of Typical Descriptors | Information Encoded | Relevance to Catalysis | Common Software/Toolkit |

|---|---|---|---|---|

| 1D/2D (Constitutional & Topological) | 50 - 300 | Molecular weight, atom counts, bond counts, connectivity indices, electronegativity. | Rapid screening, bulk property estimation. | RDKit, Dragon, PaDEL-Descriptor |

| 3D (Geometric & Steric) | 150 - 500 | van der Waals volume, surface area, radius of gyration, 3D moments. | Active site accessibility, substrate fit, steric hindrance. | RDKit, Open3DALIGN, Schrodinger Maestro |

| Electronic & Quantum Chemical | 50 - 200+ | HOMO/LUMO energies, dipole moment, partial atomic charges, Fukui indices. | Reaction mechanism insight, adsorption energy correlation. | Gaussian, ORCA, Psi4, ADF |

| Fingerprint-Based (Binary) | 512 - 2048+ | Presence/absence of specific substructures or topological paths (e.g., ECFP, MACCS keys). | Similarity searching, pattern recognition for active motifs. | RDKit, CDK, ChemAxon |

Experimental Protocols

Protocol 3.1: Standardized Workflow for 2D/3D Descriptor Generation Using RDKit

This protocol details the generation of a comprehensive descriptor set from a SMILES string, a common starting point in AI-driven catalyst discovery pipelines.

Materials:

- Input Data: A list of catalyst or ligand structures in SMILES or SDF format.

- Software: Python environment with RDKit and NumPy/Pandas installed.

- Hardware: Standard workstation (3D descriptor generation may require significant CPU resources).

Procedure:

- Structure Standardization: Load SMILES strings using rdkit.Chem.MolFromSmiles(). Apply standardization (sanitization, neutralization, tautomer normalization) using the rdkit.Chem.MolStandardize modules to ensure consistency.

- Conformer Generation (for 3D Descriptors): For each molecule, add explicit hydrogens with rdkit.Chem.AddHs() and use rdkit.Chem.AllChem.EmbedMolecule() to generate a 3D conformer. Optimize the geometry with the MMFF94 force field via rdkit.Chem.AllChem.MMFFOptimizeMolecule() or with UFF via rdkit.Chem.AllChem.UFFOptimizeMolecule().

- Descriptor Calculation:

  - 2D Descriptors: Use the rdkit.Chem.Descriptors module for simple descriptors (e.g., MolWt, NumHAcceptors). For a broad set, utilize the rdkit.ML.Descriptors.MoleculeDescriptors module.

  - 3D Descriptors: Calculate using rdkit.Chem.Descriptors3D (e.g., PBF, PMI1, NPR1, RadiusOfGyration).

  - Fingerprints: Generate ECFP4 fingerprints using rdkit.Chem.AllChem.GetMorganFingerprintAsBitVect(mol, radius=2, nBits=2048). Newer RDKit releases also provide rdkit.Chem.rdFingerprintGenerator.GetMorganGenerator() for the same purpose.

- Data Compilation: Compile all calculated descriptors into a Pandas DataFrame, where rows represent molecules and columns represent features.

- Output: Export the DataFrame as a CSV file for subsequent ML model training.

Protocol 3.2: Calculation of Quantum Chemical Descriptors via ORCA

This protocol outlines the steps to obtain electronic structure descriptors, crucial for understanding electronic interactions in catalysis.

Materials:

- Input: 3D molecular geometry file (.xyz or .sdf).

- Software: ORCA quantum chemistry package (version 5.0 or later).

- Hardware: High-performance computing cluster recommended.

Procedure:

- Input File Preparation: Create an ORCA input file (.inp). Specify:

  - Calculation Method: Density Functional Theory (DFT) with a functional such as B3LYP and a basis set such as def2-SVP.

  - Job Type: Single-point energy calculation (SP) followed by population and property analysis.

  - Output Options: Request the population analyses needed for the descriptors (e.g., Mulliken, Hirshfeld) and, if required, electrostatic-potential-derived charges. Condensed Fukui indices are usually obtained by finite differences of atomic charges between the N, N+1, and N−1 electron systems, which requires additional single-point calculations. Confirm the exact keyword names in the manual for the installed ORCA version.

- Job Submission: Submit the input file to the computing cluster using the appropriate command (e.g., orca molecule.inp > molecule.out).

- Output Parsing: Upon completion, parse the output file (.out) to extract:

  - HOMO and LUMO energies (E_HOMO, E_LUMO).

  - Global reactivity indices: chemical potential (μ = (E_HOMO + E_LUMO)/2), hardness (η = E_LUMO − E_HOMO), and electrophilicity index (ω = μ²/(2η)).

  - Partial atomic charges (Mulliken or Hirshfeld).

- Data Aggregation: Aggregate the extracted electronic descriptors for all molecules in the dataset into a structured table.
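The global reactivity indices named in the output-parsing step follow directly from the frontier orbital energies. The sketch below applies the definitions given in this protocol (μ = (E_HOMO + E_LUMO)/2, η = E_LUMO − E_HOMO, ω = μ²/(2η)). The function name and the example energies are illustrative assumptions, and extracting the energies from the ORCA output file is deliberately left out.

```python
# Minimal sketch: global reactivity indices from HOMO/LUMO energies.
# Function name and example energies are illustrative; units are whatever
# the input energies use (eV in the example below).


def reactivity_indices(e_homo: float, e_lumo: float) -> dict:
    """Compute chemical potential, hardness, and electrophilicity index."""
    mu = (e_homo + e_lumo) / 2.0   # chemical potential
    eta = e_lumo - e_homo          # hardness (HOMO-LUMO gap convention used here)
    if eta <= 0:
        raise ValueError("LUMO energy must lie above HOMO energy")
    omega = mu ** 2 / (2.0 * eta)  # electrophilicity index
    return {"mu": mu, "eta": eta, "omega": omega}


if __name__ == "__main__":
    # Hypothetical orbital energies in eV.
    print(reactivity_indices(e_homo=-6.0, e_lumo=-1.0))
```

Some references define hardness as half the HOMO-LUMO gap, so whichever convention is chosen should be recorded alongside the descriptor table.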

Visualizations

Molecular Descriptor Generation Pipeline

AI-Driven Catalyst Assessment Loop

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Tools & Resources for Molecular Feature Engineering

| Item Name | Type (Software/Library/Database) | Primary Function in Descriptor Engineering |

|---|---|---|

| RDKit | Open-Source Cheminformatics Library | Core toolkit for molecule handling, 2D/3D descriptor calculation, and fingerprint generation within Python. |

| PaDEL-Descriptor | Standalone Software/Java Library | Calculates >1,800 1D, 2D, and 3D molecular descriptors directly from chemical structure files. |

| Dragon | Commercial Software | Industry-standard for computing >5,000 molecular descriptors, offering extensive validation. |

| Gaussian / ORCA | Quantum Chemistry Software | Compute high-fidelity electronic structure descriptors (HOMO/LUMO, Fukui indices) via ab initio or DFT methods. |

| Cambridge Structural Database (CSD) | Crystallographic Database | Source of experimentally determined 3D geometries for calculating accurate geometric descriptors. |

| ChemAxon JChem / CDK | Cheminformatics Suite / Library | Alternative platforms for structure management, standardization, and descriptor calculation. |

| Mordred | Python Descriptor Calculator | Calculates >1,800 2D/3D descriptors using RDKit as a backend, with a concise API. |

Application Notes

The application of hierarchical machine learning models enables the rapid, in-silico assessment of catalytic performance for drug-relevant chemical transformations. This pipeline accelerates the identification of optimal reaction conditions and catalysts by predicting key performance metrics such as yield, enantioselectivity, and turnover number (TON).

Table 1: Comparative Performance of AI/ML Models in Catalytic Reaction Prediction

| Model Class | Example Model | Primary Use Case in Catalysis | Avg. Yield Prediction MAE (%) | Enantioselectivity Prediction Accuracy | Data Efficiency (Min. Samples) | Reference Year |

|---|---|---|---|---|---|---|

| Ensemble | Random Forest | Condition Optimization (Solvent, Ligand) | 8.5 | Low | 100 | 2023 |

| Graph-Based | GNN (MPNN) | Catalyst Structure-Activity Relationship | 6.2 | Medium | 500 | 2024 |

| Transformer | Chemformer | Reaction Outcome from SMILES Sequences | 5.1 | High | 10,000 | 2024 |

| Hybrid | GNN-Transformer | Multi-task Performance Prediction | 4.3 | High | 3,000 | 2025 |

Key Insights: Advanced Transformer architectures, particularly those pre-trained on large molecular corpora, demonstrate superior accuracy in predicting complex stereoselective outcomes but require substantial training data. Hybrid models (GNN-Transformer) offer a balanced approach for high-fidelity prediction with moderate data requirements, ideal for experimental research platforms.

Experimental Protocols

Protocol 1: Building a Random Forest Model for Reaction Condition Screening

Objective: To predict reaction yield based on categorical and numerical descriptors of reaction components.

Materials: Scikit-learn library (v1.3+), Pandas, NumPy, dataset of catalytic reactions with condition labels.

- Data Curation: Compile a CSV file with columns for: Catalyst_ID (string), Ligand (string), Solvent (string), Temperature (°C, float), Time (h, float), and Yield (% , float). Encode categorical variables using one-hot encoding.

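- Feature Engineering: Add calculated molecular descriptors (e.g., from RDKit) for catalyst and ligand if available. If numerical features are scaled with StandardScaler, fit the scaler on the training split only to avoid information leakage; tree ensembles such as Random Forest do not themselves require feature scaling.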

- Model Training: Split data 80/20 for training/test. Initialize RandomForestRegressor(n_estimators=500, max_depth=15, random_state=42). Train on the training set.

- Validation: Predict on test set. Calculate Mean Absolute Error (MAE) and R². Use permutation importance to identify critical condition variables.

- Deployment: Save model via joblib. Integrate into platform for real-time condition recommendation.
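The split and validation steps of this protocol rest on a few simple computations. The sketch below reproduces them in plain Python so the arithmetic is explicit. It is not the Scikit-learn implementation, and the function names and example yields are assumptions. In practice the library's own splitting and metric utilities would be used.

```python
# Minimal sketch of an 80/20 split plus MAE and R² for yield predictions.
# Plain-Python stand-ins for illustration; names and data are assumptions.
import random


def split_80_20(rows, seed=42):
    """Shuffle reproducibly and return (train, test) with an 80/20 ratio."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    cut = int(0.8 * len(shuffled))
    return shuffled[:cut], shuffled[cut:]


def mean_absolute_error(y_true, y_pred):
    """Average absolute deviation between measured and predicted values."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_y = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


if __name__ == "__main__":
    measured = [50.0, 70.0, 90.0]   # hypothetical yields in %
    predicted = [55.0, 65.0, 90.0]  # hypothetical model output in %
    print(mean_absolute_error(measured, predicted), r_squared(measured, predicted))
```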

Protocol 2: Training a Graph Neural Network (GNN) for Catalyst Performance Prediction

Objective: To learn directly from the catalyst molecular graph to predict turnover frequency (TOF).

Materials: PyTorch Geometric (v2.4+), RDKit, dataset of catalyst structures (SMILES) and associated TOF values.

- Graph Representation: Convert catalyst SMILES to molecular graph. Nodes represent atoms (featurized with atomic number, hybridization). Edges represent bonds (featurized with bond type).

- Model Architecture: Implement a 4-layer Message Passing Neural Network (MPNN). Use global mean pooling for graph-level representation. Follow with 3 fully connected layers (ReLU activation) to regress TOF.

- Training Loop: Use Mean Squared Error (MSE) loss and Adam optimizer (lr=0.001). Train for 300 epochs with early stopping. Perform 5-fold cross-validation.

- Interpretation: Apply GNNExplainer to visualize atom contributions to the predicted activity.
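To make the graph representation and pooling steps concrete, the toy sketch below performs one sum-aggregation message-passing step followed by global mean pooling on a hand-built molecular graph. It contains no learned weights and is not a PyTorch Geometric model. The function names, the two-element atom features (atomic number, sp3 flag), and the three-atom example graph are assumptions for illustration.

```python
# Toy sketch: one message-passing step (sum aggregation, no learned weights)
# followed by global mean pooling. Names, features, and graph are assumptions.


def message_passing_step(node_features, edges):
    """Each atom adds the feature vectors of its bonded neighbours to its own."""
    n = len(node_features)
    neighbours = [[] for _ in range(n)]
    for u, v in edges:  # bonds are undirected
        neighbours[u].append(v)
        neighbours[v].append(u)
    updated = []
    for v in range(n):
        new = list(node_features[v])
        for u in neighbours[v]:
            for k, value in enumerate(node_features[u]):
                new[k] += value
        updated.append(new)
    return updated


def global_mean_pool(node_features):
    """Average atom vectors into a single graph-level vector."""
    n = len(node_features)
    dim = len(node_features[0])
    return [sum(f[k] for f in node_features) / n for k in range(dim)]


if __name__ == "__main__":
    # Heavy atoms of a C-C-O chain: [atomic number, sp3 flag].
    atoms = [[6.0, 1.0], [6.0, 1.0], [8.0, 1.0]]
    bonds = [(0, 1), (1, 2)]
    hidden = message_passing_step(atoms, bonds)
    print(global_mean_pool(hidden))
```

A trained MPNN replaces the plain sums with learned message and update functions and stacks several such steps before the pooled vector is passed to the fully connected regression layers.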

Protocol 3: Fine-Tuning a Reaction Transformer for Enantioselectivity Prediction

Objective: To predict enantiomeric excess (ee) from text-based representations of full chemical reactions.

Materials: HuggingFace Transformers library, pre-trained Chemformer or RxnFP model, dataset of asymmetric reactions with reported ee.

- Data Preparation: Represent each reaction as a SMILES string: [Reactants].[Catalyst].[Conditions]>>[Products]. Tokenize using model's subword tokenizer.

- Model Setup: Load pre-trained Transformer encoder. Add a regression head (dropout + linear layer) on the [CLS] token output.

- Fine-Tuning: Use a small batch size (8-16). Employ a gradual unfreezing strategy, starting from the regression head. Use a low learning rate (5e-5). Loss function: Huber loss.

- Evaluation: Report MAE and RMSE for ee prediction. Generate attention maps to identify substrate-catalyst interactions critical for stereoselectivity.
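Two pieces of this protocol are easy to show in isolation: assembling the reaction string in the [Reactants].[Catalyst].[Conditions]>>[Products] layout, and the Huber loss used for fine-tuning. The sketch below is plain Python rather than a deep learning framework. The function names, the `delta` default, and the placeholder SMILES are assumptions.

```python
# Minimal sketch: reaction-string assembly and the Huber loss.
# Function names, delta default, and placeholder SMILES are assumptions.


def build_reaction_string(reactants, catalyst, conditions, products):
    """Join components as Reactants.Catalyst.Conditions>>Products."""
    left = ".".join(list(reactants) + [catalyst] + list(conditions))
    return left + ">>" + ".".join(products)


def huber_loss(y_true, y_pred, delta=1.0):
    """Mean Huber loss: quadratic for small residuals, linear beyond delta."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        r = abs(t - p)
        if r <= delta:
            total += 0.5 * r * r
        else:
            total += delta * (r - 0.5 * delta)
    return total / len(y_true)


if __name__ == "__main__":
    # Placeholder structures only; not a real asymmetric reaction.
    print(build_reaction_string(["C=C", "[H][H]"], "C", ["CO"], ["CC"]))
    # ee values expressed as fractions (0.0 to 1.0).
    print(huber_loss([0.90, 0.50], [0.80, 0.48], delta=0.05))
```

Because the linear branch limits the influence of large residuals, a few mis-recorded ee values affect the fit less than they would under a squared-error loss.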

Visualizations

Title: AI Model Pipeline for Catalytic Performance Assessment

Title: GNN Catalyst Modeling Workflow

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Computational Reagents for AI-Driven Catalysis Research

| Item/Category | Example/Specification | Function in AI/ML Workflow |

|---|---|---|

| Molecular Representation Library | RDKit (2024.03.x) | Converts SMILES to molecular graphs, calculates fingerprints and descriptors for featurization. |

| Deep Learning Framework | PyTorch (2.2+) with PyTorch Geometric (2.5+) | Provides flexible environment for building and training custom GNN and Transformer models. |

| Pre-trained Chemical Language Models | HuggingFace Chemformer, MolT5 | Offers transfer learning starting points for reaction prediction, reducing data requirements. |

| Hyperparameter Optimization Suite | Optuna (v3.5) | Automates the search for optimal model architectures and training parameters. |

| Model Interpretation Tool | SHAP (v0.44) or GNNExplainer | Explains model predictions, identifies critical molecular features or reaction conditions. |

| High-Throughput Data Curation Tool | rxn-chem-utils (IBM) | Parses and standardizes reaction data from electronic lab notebooks (ELNs) and literature. |

| Quantum Chemistry Data Source | QCArchive (Psi4, ORCA computations) | Provides high-fidelity electronic structure data for training or validating models. |

| Cloud ML Platform | Google Cloud Vertex AI, AWS SageMaker | Enables scalable training of large Transformer models and deployment of prediction pipelines. |

Application Notes

This document details a standardized framework for integrating AI-driven property prediction with automated experimental workflows. The objective is to accelerate the closed-loop discovery and optimization of catalysts, with a focus on applications in sustainable chemistry and pharmaceutical synthesis. The process is designed for a rapid assessment platform, minimizing human intervention between computational design and experimental validation.

Core Integration Workflow

The system operates on a cyclic "Design-Make-Test-Analyze" (DMTA) principle, enhanced by AI/ML at the design phase and automation at the make/test phases.

Table 1: Quantitative Benchmarks for AI-HTE Integration in Catalysis Research

| Metric | Target Performance | Typical Range (Current State) | Key Challenge |

|---|---|---|---|

| Cycle Turnaround Time (Idea to Data) | < 72 hours | 5-14 days | Robotic reconfiguration & analysis latency |

| Experiment Throughput (Reactions/Day) | 1,536+ | 96 - 384 | Liquid handling speed & catalyst preparation |

| Prediction-to-Validation Accuracy (Top 10%) | > 70% Hit Rate | 40-65% Hit Rate | Domain shift in training data |

| Data Points per Campaign | 10,000+ | 1,000 - 5,000 | Sample logistics & analytical throughput |

Table 2: Essential Research Reagent Solutions for Catalytic HTE

| Reagent / Material | Function in Workflow | Key Considerations |

|---|---|---|

| Pre-catalyst Libraries | Metal-ligand complexes for cross-coupling, hydrogenation, etc. | Stock stability in solution, compatibility with dispenser materials. |

| Substrate Plates | Diverse electrophiles/nucleophiles for reaction scoping. | Normalized concentration, premixed in inert solvent. |

| Automation-Compatible Solvents | Anhydrous DMF, THF, toluene, etc. | Low viscosity for pipetting, sealed reservoir systems. |

| Quench/Internal Standard Plates | Solutions to stop reactions and enable HPLC/GC analysis. | Must not interfere with chromatography or detection. |

| Solid-Phase Extraction (SPE) Cartridges (96-well) | High-throughput reaction work-up and purification for analysis. | Critical for removing catalyst/debris prior to UHPLC-MS. |

Detailed Experimental Protocols

Protocol 1: AI-Driven Candidate Selection & Plate Map Generation

- Input: A search space of potential catalysts (e.g., 10,000 ligand-metal-substrate combinations) is defined using a combinatorial library.

- AI Prediction: A trained graph neural network (GNN) or gradient-boosting model predicts key performance indicators (KPIs: e.g., yield, enantioselectivity, turnover number) for all candidates.

- Down-Selection: The top 5% of candidates (~500) are selected. To ensure diversity and exploration, an additional 2% (~200) are chosen via Thompson sampling or UCB (Upper Confidence Bound) algorithms from the remaining pool.

- Plate Map Generation: A scheduling algorithm assigns the 700 selected reactions to wells on 96- or 384-well microplates, balancing solvent groups, catalyst types, and control positions (positive, negative, internal standard). This digital "plate map" file (CSV/JSON) is the primary instruction set for the robotic platform.
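The down-selection logic (a top fraction by predicted performance plus an exploration set chosen by Upper Confidence Bound) can be sketched as follows. The snippet assumes each candidate already has a predicted mean and an uncertainty estimate from the model. The function name, the `kappa` exploration weight, and the synthetic predictions are hypothetical.

```python
# Minimal sketch of exploit/explore down-selection with a UCB criterion.
# Function name, kappa, and the synthetic predictions are assumptions.


def select_candidates(predictions, top_frac=0.05, explore_frac=0.02, kappa=2.0):
    """predictions maps candidate ID -> (predicted mean, predicted std).

    Returns (exploit, explore): the top fraction by predicted mean, then an
    additional fraction of the remaining pool ranked by mean + kappa * std.
    """
    n = len(predictions)
    n_top = round(top_frac * n)
    n_explore = round(explore_frac * n)
    ranked = sorted(predictions, key=lambda c: predictions[c][0], reverse=True)
    exploit = ranked[:n_top]
    remaining = ranked[n_top:]
    by_ucb = sorted(
        remaining,
        key=lambda c: predictions[c][0] + kappa * predictions[c][1],
        reverse=True,
    )
    return exploit, by_ucb[:n_explore]


if __name__ == "__main__":
    # 100 synthetic candidates; candidate 0 has a low mean but high uncertainty.
    preds = {i: (i / 100.0, 0.01) for i in range(100)}
    preds[0] = (0.0, 0.5)
    exploit, explore = select_candidates(preds)
    print(exploit, explore)
```

For the 10,000-candidate search space described above, the default fractions give 500 exploitation picks and 200 exploration picks, matching the 700 reactions assigned to plates.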

Protocol 2: Automated High-Throughput Reaction Execution & Quench

- System Preparation: An automated liquid handling robot (e.g., Hamilton, Chemspeed) is equipped with solvent reservoirs, source plates for substrates/catalysts, and a carousel of empty reaction plates (typically 1-2 mL vial-type 96-well plates).

- Plate Preparation (Make): a. The robot follows the plate map to dispense specified volumes of anhydrous solvent to each well under an inert atmosphere (N2 glovebox). b. Substrates (A and B) are dispensed from stock solution plates. c. Pre-catalyst and ligand solutions are added. d. The plate is sealed with a PTFE-coated silicone mat, agitated briefly, and transferred to a heated/shaking incubation station.

- Reaction Execution & Quench (Test): a. Reactions proceed for the prescribed time (e.g., 2-18 hours) at set temperature (e.g., 60-100°C). b. At t = final, the plate is automatically transferred to a second liquid handler. c. A quench solution (e.g., 100 µL of 1M HCl or a solution containing an internal standard for analysis) is dispensed into each well to stop the reaction.

Protocol 3: High-Throughput Analysis & Data Structuring

- Sample Preparation: An aliquot from each quenched well is automatically diluted and filtered through a 96-well SPE plate or syringe filter plate.

- Parallelized Analysis: The prepared samples are analyzed via: a. UHPLC-MS/UV: A dual-injection system with a 96-well autosampler runs back-to-back methods for rapid quantification (UV) and identification (MS). b. GC-FID/MS: For volatile products, a multi-channel GC system is used.

- Automated Data Processing: Analysis software (e.g., Chromeleon, ChemStation) scripts convert chromatograms into quantitative yields/conversion rates. MS data is parsed for byproduct identification.

- Data Structuring: Results are automatically mapped back to the original plate map and candidate identifiers, creating a structured dataset (CSV/SDF) with fields: CandidateID, PredictedYield, ActualYield, Byproducts, ReactionConditions.
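The data-structuring step amounts to joining analytical results back onto the plate map by well position. The sketch below generates 96-well identifiers, assigns candidates to wells, and emits records with the CandidateID, PredictedYield, and ActualYield fields named above. The function names, the extra Well field, and the example values are assumptions. A real scheduler would also place controls and balance solvent groups.

```python
# Minimal sketch: plate-map creation and mapping results back to candidates.
# Function names, the "Well" field, and example data are assumptions.
import string


def well_ids(rows=8, cols=12):
    """Return well labels such as A01 ... H12 in row-major order."""
    return [
        f"{string.ascii_uppercase[r]}{c:02d}"
        for r in range(rows)
        for c in range(1, cols + 1)
    ]


def build_plate_map(candidate_ids, rows=8, cols=12):
    """Assign candidates to wells in order; raises if the plate is too small."""
    wells = well_ids(rows, cols)
    if len(candidate_ids) > len(wells):
        raise ValueError("More candidates than wells on the plate")
    return dict(zip(wells, candidate_ids))


def structure_results(plate_map, predicted, measured):
    """Join predictions (by candidate ID) and measurements (by well) into records."""
    return [
        {
            "Well": well,
            "CandidateID": cid,
            "PredictedYield": predicted[cid],
            "ActualYield": measured.get(well),  # None if the well was not analyzed
        }
        for well, cid in plate_map.items()
    ]


if __name__ == "__main__":
    plate = build_plate_map(["cand_001", "cand_002"])
    rows = structure_results(
        plate,
        predicted={"cand_001": 0.82, "cand_002": 0.64},
        measured={"A01": 0.79},
    )
    print(rows)
```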

Protocol 4: Model Retraining & Closed-Loop Iteration

- Data Aggregation: The new experimental dataset is appended to the historical campaign database.

- Feature Engineering: Reaction conditions and molecular descriptors (e.g., Morgan fingerprints, DFT-calculated properties) are updated.

- Model Retraining: The AI prediction model is retrained on the expanded dataset. Bayesian optimization algorithms use the new data to propose the next set of experiments, focusing on high-performance regions and model uncertainty.

- Iteration: A new plate map is generated (Protocol 1), initiating the next DMTA cycle.

Visualizations

Diagram 1: AI-HTE Catalysis Platform Workflow

Diagram 2: DMTA Cycle Logic for Rapid Assessment

Application Notes

This document presents case studies demonstrating the integration of artificial intelligence (AI) with high-throughput experimentation (HTE) for the accelerated discovery of novel catalytic entities. The work is contextualized within a broader thesis on developing AI-driven platforms for rapid catalytic performance assessment, aiming to compress the traditional discovery timeline from years to months.

Case Study 1: AI-Driven Organocatalyst Discovery for Asymmetric Synthesis

A landmark study utilized a multi-step computational pipeline to identify novel chiral organocatalysts from a vast virtual library. An initial library of ~100,000 potential aminocatalyst structures was generated. A machine learning (ML) model, trained on DFT-calculated steric and electronic descriptors of known catalysts, performed an initial screen. This was followed by quantum mechanics (QM)-based transition-state modeling for top candidates, predicting enantiomeric excess (ee). Key findings are summarized below.

Table 1: Performance of AI-Identified Organocatalysts in a Model Aldol Reaction

| Catalyst ID (Type) | Predicted ee (%) | Experimental ee (%) | Yield (%) | Notes |

|---|---|---|---|---|

| Cat-A1 (Bicyclic tertiary amine) | 94 | 91 | 85 | Novel scaffold; outperformed the Jørgensen-Hayashi reference catalyst (88% ee). |

| Cat-B3 (Spirocyclic diamine) | 87 | 89 | 82 | Excellent substrate generality predicted and confirmed. |

| Ref-Cat (Jørgensen-Hayashi) | (Reference) | 88 | 80 | Standard for comparison. |

Case Study 2: Discovery of Photoredox-Active Transition Metal Complexes

A separate platform focused on identifying earth-abundant transition metal complexes as alternatives to rare metals like Iridium and Ruthenium in photoredox catalysis. A graph neural network (GNN) was trained on molecular graphs and UV-Vis spectral data to predict key photophysical properties: absorption wavelength (λ_abs) and excited-state lifetime (τ). Promising candidates were synthesized and characterized.

Table 2: Properties of AI-Identified Photoredox Catalysts

| Complex ID (Metal/Core) | Predicted λ_abs (nm) | Experimental λ_abs (nm) | Predicted τ (ns) | Experimental τ (ns) | Redox Potential E1/2 [M*/M–] (V vs SCE) |

|---|---|---|---|---|---|

| [Cu(P^N)_2]^+ (Copper) | 450 | 465 | 110 | 95 | -1.8 |

| [Fe(N^N)_3]^2+ (Iron) | 520 | 505 | 0.5 | 0.4 | -1.6 |

| [Ir(ppy)_3] (Reference) | 380 | 380 | 1900 | 1900 | -2.2 |

Experimental Protocols

Protocol 1: High-Throughput Screening of Organocatalyst Candidates

Objective: To experimentally validate the enantioselectivity and yield of AI-predicted organocatalysts in a benchmark aldol reaction.

Materials: AI-prioritized catalyst libraries (5-10 mg each), aldehyde substrate, ketone nucleophile, solvent (DCM or toluene), HPLC vials, automated liquid handler, chiral HPLC system.

Procedure:

- Reaction Setup: Using an automated liquid handler, prepare reaction arrays in 1.0 mL HPLC vials. To each vial, add: aldehyde (0.1 mmol, 1.0 eq), catalyst (5 mol%), and solvent (0.5 mL).

- Initiation: Add the ketone nucleophile (0.2 mmol, 2.0 eq) to initiate the reaction.

- Conditions: Seal vials and stir the reaction array at 25°C for 24 hours.

- Quenching & Analysis: Quench reactions with a drop of acetic acid. Dilute an aliquot (10 µL) with methanol (1 mL) for analysis.

- Chiral HPLC: Inject samples onto a chiral stationary phase HPLC column (e.g., Chiralpak AD-H). Determine conversion (from UV trace area) and enantiomeric excess (ee) by integrating the areas of the enantiomer peaks: ee = |R − S| / (R + S) × 100%, where R and S are the integrated peak areas of the two enantiomers.

- Data Integration: Upload yield and ee data to the AI platform for model refinement.
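The ee calculation from the chiral HPLC step can be written as a one-line function. The sketch below assumes the two enantiomer peaks are baseline-resolved and have equal detector response. The function name and the example peak areas are illustrative.

```python
# Minimal sketch: enantiomeric excess from integrated chiral HPLC peak areas.
# Function name and example areas are illustrative assumptions.


def enantiomeric_excess(area_r: float, area_s: float) -> float:
    """Return ee in percent: |R - S| / (R + S) * 100."""
    total = area_r + area_s
    if total <= 0:
        raise ValueError("Peak areas must sum to a positive value")
    return abs(area_r - area_s) / total * 100.0


if __name__ == "__main__":
    # Hypothetical integrated peak areas (arbitrary units).
    print(enantiomeric_excess(95.5, 4.5))
```

Because the formula uses the absolute difference, the identity of the major enantiomer must be recorded separately if the sign of the selectivity matters for model training.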

Protocol 2: Synthesis and Photophysical Characterization of Transition Metal Complexes

Objective: To synthesize AI-predicted complexes and characterize their photoredox-relevant properties.

Materials: Metal salts (e.g., Cu(MeCN)₄PF₆, Fe(BF₄)₂·6H₂O), ligand stocks, Schlenk line for inert atmosphere, photoreactor for screening, UV-Vis spectrophotometer, fluorometer with time-correlated single photon counting (TCSPC), potentiostat.

Procedure:

- Synthesis: Under nitrogen, combine metal salt (0.05 mmol) and ligand (0.105 mmol for bidentate) in degassed methanol (5 mL). Reflux for 2 hours. Cool, precipitate, filter, and dry under vacuum to obtain the complex.

- UV-Vis Absorption: Prepare a ~10 µM solution of the complex in acetonitrile. Record absorption spectrum from 250-700 nm to determine λ_abs.

- Emission Lifetime (TCSPC): Prepare a degassed solution of the complex (OD < 0.1 at λ_ex). Excite at the λ_abs maximum. Measure the decay of emission intensity over time to fit the excited-state lifetime (τ).

- Electrochemical Analysis: Perform cyclic voltammetry in a three-electrode cell (glassy carbon working electrode) using 0.1 M TBAPF₆ in MeCN as the electrolyte. Measure the ground-state redox potentials, then estimate the excited-state potential from the ground-state value and the excited-state (emission) energy.

- Photocatalytic Validation: Test candidate complexes in a benchmark C–N cross-coupling reaction under blue LED irradiation, comparing conversion rates to [Ir(ppy)₃].
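The estimate mentioned in the electrochemical step is commonly made with a simple Rehm-Weller-type approximation: the excited-state energy E₀₀ is added to the ground-state reduction potential, or subtracted from the ground-state oxidation potential. The sketch below assumes E₀₀ is approximated from a single wavelength via E (eV) ≈ 1239.84 / λ (nm) and neglects work terms. The function names and the example numbers are hypothetical.

```python
# Minimal sketch: excited-state redox potentials from ground-state values.
# Assumes E00 is approximated from one wavelength and work terms are neglected.
# Function names and example numbers are illustrative assumptions.

HC_EV_NM = 1239.84  # Planck constant times speed of light, in eV·nm


def e00_from_wavelength(wavelength_nm: float) -> float:
    """Convert a wavelength in nm to a photon energy in eV."""
    if wavelength_nm <= 0:
        raise ValueError("Wavelength must be positive")
    return HC_EV_NM / wavelength_nm


def excited_state_reduction_potential(e_red_ground: float, e00: float) -> float:
    """E(M*/M-) ≈ E(M/M-) + E00: the excited state is a stronger oxidant."""
    return e_red_ground + e00


def excited_state_oxidation_potential(e_ox_ground: float, e00: float) -> float:
    """E(M+/M*) ≈ E(M+/M) - E00: the excited state is a stronger reductant."""
    return e_ox_ground - e00


if __name__ == "__main__":
    e00 = e00_from_wavelength(500.0)  # hypothetical emission wavelength
    print(excited_state_reduction_potential(-1.50, e00))
    print(excited_state_oxidation_potential(0.80, e00))
```

Potentials are in volts against whichever reference electrode was used for the ground-state measurement, so the reference (for example SCE) should be stored with the result.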

Visualization

AI-Driven Catalyst Discovery Workflow

GNN-Based Photocatalyst Prediction Pipeline

The Scientist's Toolkit: Research Reagent Solutions

| Item | Function in AI-Driven Discovery |

|---|---|

| Automated Liquid Handler | Enables precise, high-throughput setup of catalytic reactions in microtiter plates or vial arrays for rapid experimental validation of AI predictions. |

| Chiral HPLC/UPLC System | Critical for the quantitative analysis of enantiomeric excess (ee), the key performance metric for asymmetric organocatalysts identified by AI models. |

| Time-Correlated Single Photon Counting (TCSPC) Module | Measures excited-state lifetimes (τ) of photoredox catalyst candidates, a key predicted and validated property for screening. |

| Schlenk Line/Glovebox | Provides an inert atmosphere for the synthesis and handling of air-sensitive organocatalysts and transition metal complexes. |

| Parallel Photoreactor | Allows simultaneous testing of multiple photocatalyst candidates under controlled LED irradiation for activity screening. |

| DFT Software (e.g., Gaussian, ORCA) | Performs quantum mechanical calculations to generate training data (descriptors, energies) and validate AI-predicted transition states. |

| ML Framework (e.g., PyTorch, TensorFlow) | Used to build, train, and deploy models for virtual screening and property prediction. |

Overcoming Challenges: Best Practices for Optimizing AI Catalyst Platforms

Within AI-driven platforms for rapid catalytic performance assessment, particularly in enzyme and catalyst discovery for drug synthesis, data scarcity is a fundamental constraint. High-throughput experimental characterization is costly and time-intensive, yielding sparse, high-dimensional datasets. This document details practical protocols for leveraging small data techniques and active learning (AL) loops to accelerate discovery cycles.

Core Techniques & Comparative Analysis

Small Data Techniques

Techniques to maximize information extraction from limited datasets.

Table 1: Small Data Technique Comparison

| Technique | Key Principle | Best For | Typical Data Size | Key Limitation |

|---|---|---|---|---|

| Transfer Learning (TL) | Leverage pre-trained models from large source domains (e.g., general protein models). | Enzyme function prediction, catalyst property regression. | 50-500 samples | Domain shift; requires relevant source model. |

| Data Augmentation | Generate synthetic data via rule-based (SMILES enumeration) or model-based (GAN, VAE) methods. | Molecular property prediction, reaction yield estimation. | 100-1000 samples | Risk of generating physically unrealistic examples. |

| Few-Shot Learning | Meta-learning to adapt rapidly from few examples (e.g., Prototypical Networks, MAML). | Classifying novel enzyme families, predicting catalytic motifs. | 1-10 samples per class | Complex training; unstable with high noise. |

| Gaussian Processes (GP) | Bayesian non-parametric models providing uncertainty estimates. | Modeling reaction landscapes, optimizing process parameters. | 50-300 samples | Poor scalability to very high dimensions. |

Active Learning (AL) Strategies

Strategies to iteratively select the most informative samples for experimental validation.

Table 2: Active Learning Query Strategy Comparison

| Strategy | Selection Criterion | Advantage | Disadvantage | Uncertainty Metric Used |

|---|---|---|---|---|

| Uncertainty Sampling | Selects points where model prediction is most uncertain (e.g., highest entropy). | Simple, effective for model improvement. | Can select outliers; ignores data distribution. | Predictive Entropy, BALD |

| Query-by-Committee | Selects points with maximal disagreement among an ensemble of models. | Robust, reduces model bias. | Computationally expensive. | Vote Entropy, Consensus Disagreement |

| Expected Model Change | Selects points that would cause the greatest change to the model if labeled. | Targets high learning impact. | Very computationally heavy for large models. | Gradient Magnitude |

| Bayesian Active Learning by Disagreement (BALD) | Selects points that maximize mutual information between predictions and model parameters. | Optimal for Bayesian models like GPs, Neural Networks. | Computationally intensive for deep networks. | Mutual Information |
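
As a rough illustration of the first two strategies in the table, the sketch below scores a small pool by predictive entropy (uncertainty sampling) and by vote entropy (query-by-committee). The pool probabilities, committee votes, and helper names are hypothetical and chosen only to make the selection rule concrete.

```python
import math
from collections import Counter

def predictive_entropy(probs):
    """Shannon entropy (nats) of one predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def vote_entropy(votes):
    """Entropy of the hard votes cast by a committee for one candidate."""
    counts = Counter(votes)
    n = len(votes)
    return -sum((c / n) * math.log(c / n) for c in counts.values())

def top_k(scores, k):
    """Indices of the k highest-scoring candidates."""
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]

# Hypothetical pool of three candidates: P(active), P(inactive) from one model
pool_probs = [[0.95, 0.05], [0.55, 0.45], [0.80, 0.20]]
# Hypothetical committee of four models voting on the same three candidates
pool_votes = [
    ["active", "active", "active", "active"],
    ["active", "inactive", "active", "inactive"],
    ["active", "active", "active", "inactive"],
]

uncertainty_pick = top_k([predictive_entropy(p) for p in pool_probs], k=1)
committee_pick = top_k([vote_entropy(v) for v in pool_votes], k=1)
print(uncertainty_pick, committee_pick)
```

In this toy pool both criteria pick the second candidate, the one closest to a coin flip; on real data the two strategies can disagree, which is one reason to compare them on a held-out set before committing assay budget.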

Detailed Experimental Protocols

Protocol: Initiating an Active Learning Loop for Enzyme Catalyst Discovery

Objective: Identify novel enzyme variants with high catalytic efficiency for a target reaction using ≤ 200 experimental assays. Duration: 4-6 weeks per cycle.

Materials & Initial Setup:

- Initial Seed Dataset: Minimum 30-50 characterized enzyme variants (sequence, basic properties) with associated turnover number (k_cat) or yield measurements.

- Pre-trained Model: A publicly available protein language model (e.g., ESM-2, ProtBERT).

- Platform: Python environment with libraries: scikit-learn, PyTorch, GPyTorch (for GPs), deepchem, modAL (for AL).

Procedure:

- Feature Representation:

- Input enzyme variant sequences into the pre-trained ESM-2 model.

- Extract the last hidden layer embeddings (1280 dimensions for the 650M-parameter ESM-2 checkpoint) and pool them over residues (e.g., by averaging) to obtain one feature vector per variant.

- Apply dimensionality reduction (e.g., UMAP to 50 dimensions) to mitigate the curse of dimensionality.

Initial Model Training (Surrogate Model):

- Split seed data: 80% train, 20% hold-out test.

- Train a Gaussian Process Regression (GPR) model with a Matern kernel on the training set to predict catalytic efficiency (e.g., log(k_cat)).

- Key: The GPR provides both a prediction and a standard deviation (uncertainty) for each unlabeled candidate.
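
The surrogate-model step can be sketched from scratch with NumPy to show where the mean and the uncertainty come from. This is a minimal illustration, not the scikit-learn or GPyTorch implementation: the Matern-5/2 kernel, fixed hyperparameters (length scale, noise variance), zero prior mean, and the synthetic 2-D data are all assumptions.

```python
import numpy as np

def matern52(A, B, length_scale=1.0, variance=1.0):
    """Matern-5/2 covariance between the rows of A and the rows of B."""
    d = np.sqrt(((A[:, None, :] - B[None, :, :]) ** 2).sum(-1))
    s = np.sqrt(5.0) * d / length_scale
    return variance * (1.0 + s + s ** 2 / 3.0) * np.exp(-s)

def gp_fit_predict(X_train, y_train, X_pool, noise_var=1e-2):
    """Posterior mean and standard deviation of the latent function at X_pool."""
    K = matern52(X_train, X_train) + noise_var * np.eye(len(X_train))
    L = np.linalg.cholesky(K)                        # K = L @ L.T
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_train))
    K_s = matern52(X_train, X_pool)
    mean = K_s.T @ alpha
    v = np.linalg.solve(L, K_s)
    var = matern52(X_pool, X_pool).diagonal() - (v ** 2).sum(axis=0)
    return mean, np.sqrt(np.clip(var, 0.0, None))

# Synthetic stand-in for reduced embeddings and log(k_cat) labels (hypothetical)
rng = np.random.default_rng(0)
X_train = rng.uniform(-2, 2, size=(30, 2))
y_train = np.sin(X_train[:, 0]) + 0.5 * X_train[:, 1]

# One candidate inside the sampled region, one far outside it
X_pool = np.array([[0.0, 0.0], [10.0, 10.0]])
mu, sigma = gp_fit_predict(X_train, y_train, X_pool)
print(mu, sigma)
```

The candidate far from all training points gets a standard deviation close to the prior value of 1, while the candidate inside the sampled region gets a much smaller one. That contrast is what the acquisition step later exploits.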

Candidate Pool Creation:

- Generate a virtual library of 5,000-10,000 enzyme variants via site-saturation mutagenesis in silico at predefined active site residues.

- Compute their ESM-2/UMAP feature vectors with the same pipeline used in the Feature Representation step (Step 1).
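
A virtual library of this size can be enumerated in a few lines. The sketch below assumes the library is combinatorial across three active-site positions (20^3 - 1 = 7,999 variants, which falls in the stated 5,000-10,000 range); the wild-type sequence and the positions are hypothetical placeholders.

```python
from itertools import product

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"

def saturation_library(wild_type, positions):
    """Yield (mutation_label, sequence) for every residue combination at
    the given 0-based positions, skipping the unmutated wild type."""
    for combo in product(AMINO_ACIDS, repeat=len(positions)):
        seq = list(wild_type)
        label = []
        for pos, aa in zip(positions, combo):
            if wild_type[pos] != aa:
                # Conventional label: wild-type residue, 1-based position, new residue
                label.append(f"{wild_type[pos]}{pos + 1}{aa}")
            seq[pos] = aa
        if label:
            yield "/".join(label), "".join(seq)

wild_type = "MKTAYIAKQRQISFVKSHFSRQ"  # hypothetical sequence
active_site = [3, 9, 15]              # hypothetical 0-based positions

library = dict(saturation_library(wild_type, active_site))
print(len(library))
```

Each sequence in `library` would then be passed through the same embedding and dimensionality-reduction pipeline as the seed variants.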

Active Learning Query Cycle:

- Use the trained GPR to predict mean (μ) and standard deviation (σ) for all candidates in the pool.

- Apply the BALD acquisition function. For each candidate i, compute: a_i = σ_i^2 / (σ_i^2 + σ_n^2), where σ_n^2 is the noise variance.

- Rank candidates by acquisition score a_i and select the top 10-20 for experimental validation.

- Experimental Validation Sub-protocol:

a. Gene Synthesis & Cloning: Order gene fragments for selected variants; clone into expression vector.

b. Protein Expression & Purification: Express in E. coli BL21(DE3); purify via His-tag affinity chromatography.

c. Activity Assay: Perform kinetic assay under standardized conditions (pH, T, substrate conc.) to determine k_cat.

- Add newly labeled data (variant features + experimental k_cat) to the training dataset.

- Retrain the GPR model on the updated dataset.
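
The ranking step of the query cycle can be sketched as follows. For a GP with Gaussian noise, the mutual information between an observation and the latent value is 0.5 * log(1 + σ_i^2 / σ_n^2); the protocol's score a_i = σ_i^2 / (σ_i^2 + σ_n^2) is a monotone transform of it, so both give the same ordering. The pool standard deviations and noise variance below are hypothetical.

```python
import math

def bald_score(sigma, noise_var):
    """Mutual information (nats) between a noisy observation and the GP latent value."""
    return 0.5 * math.log(1.0 + sigma ** 2 / noise_var)

def variance_ratio(sigma, noise_var):
    """The protocol's score a_i = sigma_i^2 / (sigma_i^2 + sigma_n^2)."""
    return sigma ** 2 / (sigma ** 2 + noise_var)

def select_batch(sigmas, noise_var, k, score=variance_ratio):
    """Indices of the k candidates with the highest acquisition score."""
    scores = [score(s, noise_var) for s in sigmas]
    return sorted(range(len(sigmas)), key=lambda i: scores[i], reverse=True)[:k]

pool_sigma = [0.10, 0.85, 0.40, 0.60, 0.05]  # hypothetical GP standard deviations
noise_var = 0.04                             # hypothetical assay noise variance

batch = select_batch(pool_sigma, noise_var, k=2)
print(batch)
```

With a single, constant noise variance, either score reduces to picking the candidates with the largest predictive variance. The distinction only matters when assay noise differs between candidates.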

Termination: Repeat the Active Learning Query Cycle (Step 4) for 5-10 cycles, or until model performance on the hold-out set plateaus or a variant meeting the target efficiency (e.g., k_cat > X s⁻¹) is discovered.
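
One way to make the three stopping conditions explicit is a small check run at the end of each cycle. The plateau rule used here (no improvement in hold-out RMSE of at least `min_improvement` over the last `patience` cycles) and all numeric values are assumptions for illustration; the target k_cat is left as a parameter because the protocol does not fix it.

```python
def should_stop(rmse_history, best_kcat, target_kcat, cycle,
                max_cycles=10, patience=2, min_improvement=0.01):
    """Return (stop, reason) for an active learning loop.

    rmse_history: hold-out RMSE after each completed cycle, oldest first.
    """
    if best_kcat > target_kcat:
        return True, "target reached"
    if cycle >= max_cycles:
        return True, "cycle budget exhausted"
    if len(rmse_history) > patience:
        recent_best = min(rmse_history[-patience:])
        earlier_best = min(rmse_history[:-patience])
        if earlier_best - recent_best < min_improvement:
            return True, "hold-out error plateaued"
    return False, "continue"

# Hypothetical run: error stops improving after the third cycle
history = [0.90, 0.70, 0.62, 0.615, 0.618]
decision = should_stop(history, best_kcat=12.0, target_kcat=50.0, cycle=5)
print(decision)
```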

Protocol: Data Augmentation for Reaction Yield Prediction

Objective: Train a robust yield predictor for a novel C-N coupling reaction using < 200 historical examples.

Procedure:

- Rule-Based Augmentation:

- Represent all reaction components (substrates, catalysts, solvents) as SMILES strings.

- Apply SMILES Enumeration: Use RDKit to generate canonical and randomized SMILES for each reactant (and, optionally, DeepSMILES encodings via the separate deepsmiles package), creating 5-10 representations per compound.

- Use Atom/Bond Masking: Randomly mask 5% of atoms in reactant SMILES to create "partial" representations, forcing the model to learn robust features.

Model-Based Augmentation (if data > 100 samples):

- Train a Variational Autoencoder (VAE) on the SMILES strings from the entire reaction dataset.

- Sample latent space vectors and decode them to generate novel, plausible reactant structures.

- Filter: Use a rule-based filter (e.g., RDKit functional group check) to remove unrealistic molecules.

Training with Augmented Data:

- Combine original and augmented data.

- Train a Graph Neural Network (e.g., MPNN) where reactants are represented as molecular graphs.

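- Use a leave-one-out cross-validation strategy to evaluate performance gains from augmentation, holding out each original reaction together with all of its augmented copies so that no variant of a test reaction leaks into training.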
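
When augmented copies are mixed into the dataset, the cross-validation split has to operate on original reactions rather than on individual rows. The sketch below shows one way to do that with a group identifier; the record layout, reaction IDs, and yields are hypothetical.

```python
from collections import defaultdict

def leave_one_group_out(group_ids):
    """Yield (train_idx, test_idx) pairs in which each test fold contains
    every row sharing one group id (an original reaction plus its copies)."""
    by_group = defaultdict(list)
    for idx, gid in enumerate(group_ids):
        by_group[gid].append(idx)
    for gid, test_idx in by_group.items():
        train_idx = [i for i, g in enumerate(group_ids) if g != gid]
        yield train_idx, test_idx

# Hypothetical records: (reaction id, one SMILES spelling of a reactant, yield in %)
records = [
    ("rxn1", "CCO", 72.0), ("rxn1", "OCC", 72.0),
    ("rxn2", "c1ccccc1N", 55.0), ("rxn2", "Nc1ccccc1", 55.0),
    ("rxn2", "c1ccc(N)cc1", 55.0),
    ("rxn3", "CC(=O)O", 81.0),
]

groups = [r[0] for r in records]
folds = list(leave_one_group_out(groups))
for train_idx, test_idx in folds:
    print(sorted({groups[i] for i in test_idx}), len(train_idx), len(test_idx))
```

Splitting by row instead would place alternative SMILES spellings of the same reactant on both sides of the split and inflate the apparent benefit of augmentation.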

Visualizations

Diagram 1: Active Learning Loop for Catalyst Discovery

Diagram 2: Small Data Technique Integration

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials & Reagents for Protocol Execution

| Item | Function in Protocol | Example Product/Kit (for illustration) | Critical Parameters |

|---|---|---|---|

| Pre-trained Protein Model | Provides foundational sequence-feature embeddings to overcome small data. | ESM-2 (Meta AI), ProtBERT (ProtTrans). | Embedding dimension, training corpus relevance. |

| Gaussian Process Software | Core surrogate model for AL; provides native uncertainty estimates. | GPyTorch, scikit-learn GPR. | Kernel choice (Matern, RBF), noise prior. |

| Active Learning Framework | Streamlines implementation of query strategies and loop management. | modAL (Python), ALiPy. | Compatibility with chosen ML model. |

| Gene Synthesis Service | Rapid generation of DNA for selected enzyme variants. | Twist Bioscience gene fragments, IDT gBlocks. | Synthesis length, turnaround time, fidelity. |

| High-Throughput Expression System | Parallel protein production for selected candidates. | E. coli BL21(DE3) electrocompetent cells, 96-deep well plates. | Expression yield, solubility tags (His-tag, SUMO). |

| Robotic Liquid Handler | Automates assay setup for kinetic characterization of selected variants. | Beckman Coulter Biomek, Opentrons OT-2. | Pipetting precision, volume range, deck capacity. |

| Microplate Spectrophotometer/Fluorimeter | High-throughput kinetic data acquisition from activity assays. | BioTek Synergy H1, Tecan Spark. | Kinetic read speed, temperature control, sensitivity. |

| Chemical Diversity Library | Virtual or physical compound pool for catalyst/substrate screening. | Enamine REAL Space (virtual), Sigma-Aldrich screening library. | Size, chemical space coverage, purchase availability. |

Mitigating Model Bias and Improving Generalizability Beyond Training Data

AI-driven platforms for rapid catalytic performance assessment, such as predicting enzyme activity or small-molecule catalyst efficiency, are hindered by model bias and poor generalizability. Models trained on narrow chemical spaces (e.g., specific substrate classes) fail when presented with novel, out-of-distribution (OOD) scaffolds, leading to inaccurate predictions in real-world drug development pipelines.

Core Strategies and Quantitative Benchmarks

The following table summarizes recent (2023-2024) quantitative results from key studies on debiasing and improving generalizability for predictive models in chemical and biological catalysis.

Table 1: Performance of Recent Debiasing & Generalization Techniques in Catalytic AI Models

| Technique Category | Specific Method | Test Domain (Catalysis Example) | Reported Metric Improvement (vs. Baseline) | Key Limitation |

|---|---|---|---|---|

| Data-Centric | Causal Discovery & Reweighting | Transition-Metal Catalyzed Cross-Coupling | +22% ROC-AUC on OOD substrates | Requires expert-defined variable sets |

| Algorithm-Centric | Domain Adversarial Training | Enzyme Kinetics (Michaelis constant Km) | +18% Pearson r on new enzyme families | Sensitive to hyperparameter tuning |

| Architecture-Centric | Invariant Risk Minimization (IRM) | Photoredox Catalyst Turnover Frequency | 30% reduction in Mean Absolute Error | High computational cost |

| Post-Hoc | Conformal Prediction | Heterogeneous Catalyst Yield Prediction | 95% prediction sets valid on OOD data | Generates set predictions, not point estimates |

| Causal Learning | Counterfactual Data Augmentation | Asymmetric Organocatalysis (Enantioselectivity) | +15% generalizability gap reduction | Augmentation quality is model-dependent |

Experimental Protocols

Protocol 3.1: Domain Adversarial Training for Enzyme Kinetics Prediction

Aim: Train a model to predict kinetic parameters robustly across diverse enzyme families.

- Data Preparation:

- Source: BRENDA or MegaKinetics datasets.

- Partition: Split data into ≥3 "domains" based on enzyme commission (EC) class top-level number.

- Features: Use pre-trained molecular embeddings (e.g., ChemBERTa, a SMILES-based transformer) for substrates/products and ESM-2 embeddings for enzyme sequences.

- Model Architecture:

- Shared Feature Extractor: A 4-layer Graph Neural Network (GNN) for reaction context and a 2-layer transformer for enzyme sequence.

- Task Predictor: A 3-layer fully connected network (FCN) regressor for K_m or k_cat.

- Domain Classifier: A 2-layer FCN connected to the shared extractor's output via a gradient reversal layer.

- Training:

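- Loss: L_total = L_task (MSE) - λ * L_domain (Cross-Entropy). The feature extractor and task predictor are trained to minimize L_total, while the domain classifier is trained to minimize L_domain; the gradient reversal layer realizes both updates in a single backward pass.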

- Hyperparameter: λ is gradually increased from 0 to 1 via a scheduler.

- Optimizer: AdamW (lr=5e-4), batch size=64, for 200 epochs.

- Validation: Perform cross-validation within domains and, critically, hold out one entire EC class for final OOD testing.
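
Two pieces of this protocol are easy to get wrong and cheap to sketch: the domain assignment with a held-out EC class, and the λ ramp. The protocol does not specify the scheduler, so the sigmoid ramp below (commonly used for domain-adversarial training, with `gamma=10`) is an assumption, as are the record layout and the K_m values.

```python
import math

def ec_domain(ec_number):
    """Top-level EC class used as the domain label, e.g. '3.4.21.4' -> 3."""
    return int(ec_number.split(".")[0])

def hold_out_domain(records, held_out_class):
    """Split records so that one entire EC class is reserved for OOD testing."""
    train = [r for r in records if ec_domain(r["ec"]) != held_out_class]
    test = [r for r in records if ec_domain(r["ec"]) == held_out_class]
    return train, test

def lambda_schedule(epoch, total_epochs, gamma=10.0):
    """Smooth ramp of the adversarial weight from 0 toward 1 over training."""
    p = epoch / total_epochs
    return 2.0 / (1.0 + math.exp(-gamma * p)) - 1.0

# Hypothetical kinetic records (K_m values are placeholders, not measured data)
records = [
    {"ec": "1.1.1.1", "km_mM": 0.8},
    {"ec": "2.7.1.1", "km_mM": 0.1},
    {"ec": "3.4.21.4", "km_mM": 2.5},
    {"ec": "3.1.1.7", "km_mM": 0.09},
]

train, ood_test = hold_out_domain(records, held_out_class=3)
ramp = [round(lambda_schedule(e, 200), 3) for e in (0, 50, 100, 200)]
print(len(train), len(ood_test), ramp)
```

Starting with λ near zero lets the task predictor settle before the domain classifier begins to push the shared features toward invariance, which tends to make training less sensitive to the final value of λ.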

Protocol 3.2: Conformal Prediction for Robust Catalyst Yield Intervals

Aim: Generate prediction sets for catalytic reaction yields that guarantee coverage on novel substrates.

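- Data Split: Divide labeled reaction data (substrate, catalyst, conditions, yield) into proper training set, calibration set, and test set. Use scaffold splits to separate the proper training set from the calibration and test sets, and draw the calibration and test sets from the same distribution so that the exchangeability assumption behind the coverage guarantee remains plausible.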

- Base Model Training: Train any regression model (e.g., Random Forest, GNN) on the proper training set to predict yield.

- Nonconformity Score: On the calibration set, define the score as the absolute prediction error, |y_i - ŷ_i|.

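- Quantile Calculation: For a chosen significance level α (e.g., 0.05, for 95% confidence) and a calibration set of size n, compute q̂ as the ⌈(n+1)(1-α)⌉/n empirical quantile of the nonconformity scores on the calibration set. This finite-sample correction is slightly more conservative than the plain (1-α) quantile.

- Prediction Set Formation: For a new test input, the model outputs the interval: [ŷ_test - q̂, ŷ_test + q̂]. This interval contains the true yield with probability at least 1-α, averaged over calibration and test draws, provided the calibration and test data are exchangeable.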
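
The calibration and interval steps above fit in a short, dependency-free sketch. The simulated predictions and the Gaussian error model are hypothetical stand-ins for a trained base model; the quantile uses the ⌈(n+1)(1-α)⌉-th smallest calibration score, and clipping the interval to 0-100% is an added assumption that does not reduce coverage for yields inside that range.

```python
import math
import random

def conformal_quantile(scores, alpha):
    """The ceil((n+1)(1-alpha))-th smallest calibration score."""
    n = len(scores)
    rank = math.ceil((n + 1) * (1 - alpha))
    if rank > n:
        return math.inf  # too few calibration points for this alpha
    return sorted(scores)[rank - 1]

def prediction_interval(y_hat, q_hat, lo=0.0, hi=100.0):
    """Symmetric interval around the prediction, clipped to valid yields (%)."""
    return max(lo, y_hat - q_hat), min(hi, y_hat + q_hat)

def simulate(n, rng):
    """Hypothetical (predicted, observed) yield pairs with Gaussian model error."""
    pairs = []
    for _ in range(n):
        y_hat = rng.uniform(20.0, 80.0)
        y = min(100.0, max(0.0, y_hat + rng.gauss(0.0, 5.0)))
        pairs.append((y_hat, y))
    return pairs

rng = random.Random(0)
calibration, test = simulate(200, rng), simulate(1000, rng)

alpha = 0.05
q_hat = conformal_quantile([abs(y - y_hat) for y_hat, y in calibration], alpha)

covered = 0
for y_hat, y in test:
    low, high = prediction_interval(y_hat, q_hat)
    covered += low <= y <= high
coverage = covered / len(test)
print(f"q_hat = {q_hat:.2f}, empirical coverage = {coverage:.3f}")
```

Because calibration and test pairs here come from the same simulator, empirical coverage should land near 95%. If the test substrates are drawn from a different distribution than the calibration set, the guarantee no longer applies and coverage should be checked empirically.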

Visualization of Workflows and Pathways

Title: AI Catalysis Model Debiasing Workflow

Title: Domain Adversarial Training Architecture

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Reagents and Materials for Experimental Validation of AI Catalysis Models

| Item Name | Function in Protocol | Example Product/Catalog # | Key Specification |

|---|---|---|---|

| Diversified Catalyst Library | Provides OOD test compounds for model validation. | Sigma-Aldrich MERCK Organometallic Catalyst Set; Princeton BioMolecular Ru/Pd/Fe Library. | Chemical diversity (scaffold, metal center, ligand). |

| High-Throughput Experimentation (HTE) Kit | Rapidly generates ground-truth catalytic data for novel substrates. | ChemGlass CG-LLS-96 Parallel Reactor Array; Unchained Labs Little Big System. | Temperature control, stirring, inert atmosphere in microtiter plate. |

| Automated Liquid Handling System | Enables precise, reproducible preparation of reaction mixtures for calibration datasets. | Beckman Coulter Biomek i7; Opentrons OT-2. | Sub-microliter dispensing precision, organic solvent compatibility. |

| Benchmarked Quantum Chemistry Dataset | Serves as a transfer learning source or benchmark for electronic descriptor models. | NIST Computational Chemistry Comparison and Benchmark Database (CCCBDB); QM9. | High-level theory (e.g., CCSD(T)), known reaction energies/barriers. |