Robust Statistical Methods for Handling Outliers in Catalytic Data: A Guide for Research and Drug Development


Abstract

This article provides a comprehensive framework for researchers, scientists, and drug development professionals to detect, manage, and validate outlier treatment in catalytic data. Covering foundational concepts, practical application of statistical methods like IQR and Z-score, troubleshooting common challenges, and rigorous validation techniques, it bridges statistical theory with domain-specific expertise. The guide emphasizes preserving data integrity in sensitive fields like biomedical research, where accurate catalytic data is crucial for reliable outcomes.

Understanding Outliers in Catalytic Data: Impact on Analysis and Model Integrity

In catalyst research and development, the integrity of your data is paramount. Outliers—data points that deviate significantly from the norm—are not just statistical noise; they can be symptoms of experimental error, indicators of novel catalytic behavior, or signals of a groundbreaking discovery. Properly identifying and managing these anomalies is a critical step in ensuring the reliability of your research conclusions and accelerating the design of new, efficient catalysts [1] [2]. This guide provides practical, actionable support for handling outliers within the specific context of catalytic datasets.

Frequently Asked Questions (FAQs)

Q1: What is the fundamental difference between an outlier and an anomaly in data analysis? While the terms are often used interchangeably, a key distinction exists. An outlier is any data point that deviates significantly from the rest of the data. An anomaly, however, is an outlier that carries potential meaning and often requires investigation, as it may indicate fraud, malfunction, or unexpected behavior [3]. In catalysis, a strange data point could be just an outlier (a measurement error) or an anomaly (pointing to a previously unobserved catalytic mechanism).

Q2: Why is manual outlier detection insufficient for modern catalytic datasets? Catalytic research today generates millions of data points from high-throughput experimentation and computational screening [4] [5]. Manually examining every metric is impractical. Furthermore, manual monitoring cannot provide the real-time insight needed to correct course quickly, whether the outlier represents a problem (like a failing reactor) or an opportunity (like a promising new material composition) [4].

Q3: How should I handle an outlier once I've detected it? The appropriate action depends on the outlier's root cause [1]:

- Remove it if it conclusively stems from a data entry error or a measurement glitch.

- Winsorize it (cap the extreme value) if you suspect the value is valid in direction but its exact magnitude is suspect.

- Investigate it further as it may be a critical signal. In catalyst design, an outlier could reveal a material with uniquely superior activity or stability [5] [1]. Always compare your analysis results with and without the outlier to understand its full impact [1].

Q4: What are the common challenges in outlier detection? Two primary challenges are:

- False Positives: Normal data points incorrectly flagged as outliers, which can distort your analysis and lead to wasted resources [2].

- Scalability: Ensuring your detection methods remain effective and efficient as your dataset grows in size and complexity [2]. Using robust statistical methods and combining multiple detection techniques can help mitigate these issues.
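One way to combine techniques is a simple majority vote, sketched below under our own assumptions. A point is flagged only when at least two of three univariate rules agree: Tukey's IQR fences, a classic Z-score with a threshold of 3, and a median/MAD-based "modified" Z-score with a threshold of 3.5. The function names, thresholds, and synthetic yield values are illustrative choices, not a prescribed standard.

```python
import numpy as np


def iqr_flags(x, k=1.5):
    """Tukey fences: flag values more than k * IQR outside the quartiles."""
    q1, q3 = np.percentile(x, [25, 75])
    iqr = q3 - q1
    return (x < q1 - k * iqr) | (x > q3 + k * iqr)


def zscore_flags(x, threshold=3.0):
    """Classic Z-score; note that the mean and SD are themselves pulled by outliers."""
    z = (x - x.mean()) / x.std(ddof=1)
    return np.abs(z) > threshold


def modified_zscore_flags(x, threshold=3.5):
    """Median/MAD score; the factor 0.6745 rescales MAD to sigma for normal data."""
    med = np.median(x)
    mad = np.median(np.abs(x - med))
    if mad == 0:
        return np.zeros(x.shape, dtype=bool)  # no spread to scale by
    return np.abs(0.6745 * (x - med) / mad) > threshold


def consensus_flags(values, min_votes=2):
    """Flag a point only if at least `min_votes` of the three rules agree."""
    x = np.asarray(values, dtype=float)
    votes = (iqr_flags(x).astype(int)
             + zscore_flags(x).astype(int)
             + modified_zscore_flags(x).astype(int))
    return votes >= min_votes


# Hypothetical yields (%): 21 tightly spread runs plus one suspicious value.
yields = np.append(np.linspace(49.0, 51.0, 21), 70.0)
print(np.flatnonzero(consensus_flags(yields)))  # indices of flagged runs
```

Raising `min_votes` to 3 trades sensitivity for fewer false positives.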

Troubleshooting Guides

Identifying the Type of Outlier

The first step in troubleshooting is to correctly classify the anomaly. The three commonly recognized categories of outliers are detailed in the table below [4] [6] [7].

| Outlier Type | Description | Catalyst Research Example | Recommended Detection Methods |
|---|---|---|---|
| Point/Global Outlier | A single data point that is far outside the entirety of the dataset [4] [6]. | A single catalyst candidate showing an adsorption energy an order of magnitude higher than all others in a high-throughput screening [4]. | Z-score, IQR, DBSCAN [8] [1] [7]. |
| Contextual/Conditional Outlier | A data point that is anomalous within a specific context but normal in others. Context is often temporal (e.g., time of day) or conditional [4] [7]. | A high conversion efficiency that is normal at 500°C becomes a severe outlier when observed at 300°C, where the catalyst is usually inactive [4]. | Forecasting models (Prophet, STL), context-aware machine learning [7]. |
| Collective Outlier | A collection of related data points that, as a group, deviate from the overall dataset, even if individual points seem normal [4] [6]. | In time-series data of reactor pressure, a sequence of small, rapid oscillations might be anomalous, even though each individual pressure reading is within the global range [4] [7]. | Clustering algorithms (DBSCAN), sequence modeling (LSTM), subspace methods [6] [7]. |
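For contextual outliers, the simplest approach is to score each value only against other values measured under the same condition. This is a stand-in for the forecasting and context-aware models named in the table, not a replacement for them. The sketch below groups readings by reaction temperature and applies a median/MAD score within each group. The temperatures, conversions, and the 3.5 threshold are hypothetical.

```python
import numpy as np


def contextual_outliers(context, values, threshold=3.5):
    """Flag values that are extreme relative to their own context group.

    Uses a median/MAD ("modified Z") score computed separately per group.
    """
    context = np.asarray(context)
    values = np.asarray(values, dtype=float)
    flags = np.zeros(values.shape, dtype=bool)
    for group in np.unique(context):
        idx = context == group
        v = values[idx]
        med = np.median(v)
        mad = np.median(np.abs(v - med))
        if mad == 0:
            continue  # no spread in this group; the score cannot be scaled
        flags[idx] = np.abs(0.6745 * (v - med) / mad) > threshold
    return flags


# Hypothetical conversion (%) measured at two reaction temperatures (°C).
temps = np.array([300] * 6 + [500] * 6)
conversion = np.array([2.1, 3.0, 2.5, 4.0, 3.2, 65.0,
                       62.0, 66.5, 64.0, 63.2, 65.8, 61.9])
print(np.flatnonzero(contextual_outliers(temps, conversion)))
```

The 65.0% reading at 300°C is flagged even though it sits comfortably inside the 500°C range. A single IQR fence computed over all twelve readings flags nothing.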

Step-by-Step Guide for Detecting Point Outliers in a Catalyst Dataset

This protocol uses the Interquartile Range (IQR), a robust statistical method, to identify univariate point outliers in a dataset of catalyst adsorption energies.

1. Problem: Suspected erroneous or extreme adsorption energy values are skewing the statistical summary of a newly calculated catalyst library.

2. Solution: Apply the IQR method to flag potential outliers for further investigation.

3. Experimental Protocol:

- Step 1: Data Preparation. Compile your dataset of adsorption energies (e.g., for oxygen, carbon, hydrogen) into a single list or array [5].

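- Step 2: Calculate Quartiles. Compute the first quartile (Q1, 25th percentile) and the third quartile (Q3, 75th percentile), then the interquartile range \( \mathrm{IQR} = Q_3 - Q_1 \).

- Step 3: Define Outlier Boundaries.

  - Lower Bound = \( Q_1 - 1.5 \times \mathrm{IQR} \)

  - Upper Bound = \( Q_3 + 1.5 \times \mathrm{IQR} \) [1]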

- Step 4: Identify Outliers. Any data point with a value less than the Lower Bound or greater than the Upper Bound is flagged as a potential outlier [1].

- Step 5: Visualization. Create a boxplot of your data. The outliers will appear as individual points beyond the "whiskers" of the plot [1].

4. Code Snippet (Python):
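A minimal sketch of Steps 1 to 5, assuming NumPy, with Matplotlib needed only for the optional boxplot. The adsorption energies are hypothetical placeholder values, and `iqr_outliers` and `plot_boxplot` are illustrative helper names.

```python
import numpy as np


def iqr_outliers(values, k=1.5):
    """Return a boolean outlier mask and the (lower, upper) IQR fences."""
    x = np.asarray(values, dtype=float)
    q1, q3 = np.percentile(x, [25, 75])        # Step 2: quartiles
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr  # Step 3: fences
    mask = (x < lower) | (x > upper)           # Step 4: flag points
    return mask, lower, upper


def plot_boxplot(values, ylabel="Adsorption energy (eV)"):
    """Step 5: boxplot whose whiskers use the same 1.5 * IQR rule."""
    import matplotlib.pyplot as plt  # imported here so the rest runs without it
    fig, ax = plt.subplots()
    ax.boxplot(values, whis=1.5)
    ax.set_ylabel(ylabel)
    return fig


# Step 1: hypothetical O adsorption energies (eV) for a small catalyst library.
e_ads_O = np.array([-2.31, -2.05, -1.98, -2.44, -2.12, -2.27, -1.89, -2.20, 0.85])
mask, lower, upper = iqr_outliers(e_ads_O)
print(f"fences: [{lower:.3f}, {upper:.3f}] eV")
print("flagged:", e_ads_O[mask])
```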

Machine Learning Workflow for Advanced Outlier Detection

For complex, high-dimensional catalytic data (e.g., features including d-band center, width, filling, and composition), machine learning offers a more powerful approach. The following workflow, inspired by research on catalyst optimization, integrates multiple techniques for comprehensive anomaly detection [5].

Figure: ML Outlier Detection Workflow

Protocol Explanation:

- Data Preprocessing: Begin with a cleaned dataset of catalyst properties. In a recent study, this included adsorption energies for C, O, N, and H, alongside electronic structure descriptors like d-band center, d-band filling, d-band width, and d-band upper edge [5].

- Dimensionality Reduction (PCA): Principal Component Analysis (PCA) helps visualize the data's structure and identify dominant patterns. The bar plot of explained variance from PCA is crucial for understanding how many components capture the most significant information in your data [5].

- Unsupervised ML Models: Algorithms like Isolation Forest or DBSCAN can identify outliers without pre-labeled data. They are effective for detecting both global and collective anomalies in multivariate space [1].

- Feature Importance Analysis: Once outliers are detected, use techniques like SHAP (SHapley Additive exPlanations) or Random Forest feature importance to determine which catalyst descriptors (e.g., d-band filling) were most critical in labeling a point as an outlier. This provides interpretable insights for reverse catalyst design [5].
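The sketch below strings these steps together with scikit-learn. It is an illustration under stated assumptions, not the cited study's code. The descriptor matrix is synthetic, and the 2% contamination rate is an arbitrary choice. Random Forest feature importance on the outlier labels stands in for SHAP. This surrogate-model shortcut avoids an extra dependency but is cruder than SHAP values.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.ensemble import IsolationForest, RandomForestClassifier
from sklearn.preprocessing import StandardScaler

# Hypothetical descriptor names; real studies may use more features.
FEATURES = ["d_band_center", "d_band_filling", "d_band_width", "d_band_upper_edge"]


def ml_outlier_workflow(X, contamination=0.02, random_state=0):
    # Preprocessing: put all descriptors on a common scale.
    Z = StandardScaler().fit_transform(X)

    # PCA: explained-variance ratios (the values behind the bar plot).
    explained = PCA().fit(Z).explained_variance_ratio_

    # Unsupervised detection: IsolationForest labels outliers as -1.
    iso = IsolationForest(contamination=contamination, random_state=random_state)
    is_outlier = iso.fit_predict(Z) == -1

    # Interpretation: a surrogate classifier learns flagged vs. unflagged;
    # its impurity-based importances hint at which descriptors drove the flags.
    rf = RandomForestClassifier(n_estimators=200, random_state=random_state)
    rf.fit(Z, is_outlier)
    importance = dict(zip(FEATURES, rf.feature_importances_))
    return explained, is_outlier, importance


# Synthetic data: 200 catalysts, one with an extreme d-band filling value.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, len(FEATURES)))
X[0, 1] = 10.0
explained, is_outlier, importance = ml_outlier_workflow(X)
print("explained variance ratio:", np.round(explained, 3))
print("flagged rows:", np.flatnonzero(is_outlier))
print("importances:", {k: round(v, 3) for k, v in importance.items()})
```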

The Scientist's Toolkit

Research Reagent Solutions

This table lists key computational and analytical "reagents" essential for experiments in computational catalyst outlier detection.

| Item | Function in Outlier Analysis |
|---|---|
| d-band Electronic Descriptors | Parameters (center, width, filling, upper edge) that serve as key features for predicting catalytic activity and identifying outliers based on electronic structure [5]. |
| SHAP (SHapley Additive exPlanations) | A game-theoretic method to explain the output of any machine learning model, crucial for interpreting why a specific catalyst was flagged as an outlier [5]. |
| Interquartile Range (IQR) | A robust measure of statistical dispersion used to define fences for point outlier detection, less sensitive to extreme values than standard deviation [1] [9]. |
| Principal Component Analysis (PCA) | A dimensionality-reduction technique used to visualize high-dimensional catalytic data and uncover the primary components of variation that may contain outlier signals [5]. |
| Isolation Forest / DBSCAN | Unsupervised machine learning algorithms effective for detecting anomalies in multivariate data without needing pre-existing labels [6] [1]. |
| Winsorization Technique | A data cleaning method that caps extreme values at a specified percentile, reducing the influence of outliers without removing them entirely [1] [2]. |

The Critical Impact of Outliers on Statistical Measures and Predictive Modeling

FAQs: Understanding and Managing Outliers

What is an outlier and why should I care about them in my catalytic data?

An outlier is a data point that significantly deviates from the other observations in your dataset [10] [1]. In the context of catalytic research, this could be an abnormally high or low adsorption energy, a d-band center value that doesn't fit the trend, or an unexpected reaction yield.

You should care because outliers can:

- Distort Statistical Results: They disproportionately influence measures of central tendency like the mean and variance, compromising your data interpretation [11] [12].

- Skew Predictive Models: They can lead to overfitting, where your model fits the extreme points instead of the generalizable pattern, or create bias in predictions [11] [13].

- Mask or Mimic Important Signals: Sometimes, outliers are not errors but indications of novel catalytic behavior, material defects, or experimental breakthroughs [10] [14]. Ignoring them risks missing valuable insights.

How can I quickly check my dataset for outliers?

A combination of visual and statistical methods is most effective for an initial check.

- Visual Methods: Use a box plot, where outliers are typically displayed as points beyond the "whiskers" (1.5 times the Interquartile Range from the quartiles) [1] [12]. A scatter plot is also useful for seeing points that deviate from the relationship between two variables, such as a catalyst's d-band center versus its adsorption energy [11] [1].

- Statistical Methods: The IQR (Interquartile Range) method is robust and commonly used. Any data point falling below \( Q_1 - 1.5 \times \mathrm{IQR} \) or above \( Q_3 + 1.5 \times \mathrm{IQR} \) can be flagged as a potential outlier [11] [1].

I've found outliers in my data. What should I do next?

Do not automatically delete them. Your first step should be a contextual analysis [11] [15]. Ask yourself:

- Is this an error? Check for data entry mistakes, sensor malfunctions, or sample contamination. If it is a clear error, removal is justified [1].

- Is this a genuine anomaly? Could this value represent a real, albeit rare, phenomenon? In catalysis, an outlier might signal a highly active alloy composition or a new reaction pathway [5] [14]. In such cases, the outlier contains valuable information and should be preserved, perhaps by encoding it as an additional variable in your model [11].

A best practice is to run your analysis twice—with and without the outliers—and compare the results. This sensitivity analysis helps you understand their true impact on your conclusions [1].
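A minimal sketch of such a with/without comparison, assuming NumPy. The d-band centers and adsorption energies are hypothetical. The outlier mask would normally come from whichever detection rule you used.

```python
import numpy as np


def summarize(x, y):
    """Summary statistics and a least-squares line for y versus x."""
    slope, intercept = np.polyfit(x, y, deg=1)
    return {"mean_y": y.mean(), "median_y": np.median(y),
            "slope": slope, "intercept": intercept}


def sensitivity_analysis(x, y, outlier_mask):
    """Compare the same summaries with and without the flagged points."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    keep = ~np.asarray(outlier_mask, dtype=bool)
    return {"with": summarize(x, y), "without": summarize(x[keep], y[keep])}


# Hypothetical d-band centers (eV) and adsorption energies (eV);
# the last catalyst is the suspected outlier.
d_center = np.array([-3.0, -2.5, -2.0, -1.5, -1.0, -0.5])
e_ads = np.array([-3.4, -3.0, -2.6, -2.2, -1.8, 1.0])
mask = np.array([False] * 5 + [True])

report = sensitivity_analysis(d_center, e_ads, mask)
for key in ("mean_y", "median_y", "slope"):
    print(f"{key:>9}: with={report['with'][key]:.3f}  without={report['without'][key]:.3f}")
```

If a conclusion, such as the fitted slope here, changes materially between the two runs, report both rather than silently choosing one.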

Which predictive models are less sensitive to outliers?

The choice of model can mitigate the impact of outliers. Some models are inherently more robust:

- Tree-Based Models (e.g., Decision Trees, Random Forests) are generally more robust to outliers in the input features because their splits depend only on the ordering of feature values. An extreme value simply falls on one side of a threshold instead of exerting leverage on the fit [10].

- Models using Robust Statistics, such as quantile regression or models based on median absolute deviation (MAD), are designed to be less sensitive to extreme values [11] [12].

In contrast, models like linear regression can be significantly skewed by outliers, as they minimize the sum of squared residuals, giving high weight to extreme points [10].
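To see the difference in practice, the sketch below fits two models to synthetic data containing a single high-leverage outlier. One is ordinary least squares. The other is scikit-learn's `HuberRegressor`, a Huber-loss robust regression and one of the robust alternatives mentioned above. The data, noise level, and outlier size are arbitrary assumptions chosen for illustration.

```python
import numpy as np
from sklearn.linear_model import HuberRegressor, LinearRegression

# Synthetic data: y = 2x + 1 plus small noise, then one corrupted point.
rng = np.random.default_rng(0)
x = np.arange(20, dtype=float)
y = 2.0 * x + 1.0 + rng.normal(0.0, 0.5, size=x.size)
y[-1] += 60.0  # single outlier at the high-leverage end of the range

X = x.reshape(-1, 1)  # scikit-learn expects a 2-D feature matrix
ols = LinearRegression().fit(X, y)
# epsilon controls where the loss switches from quadratic to linear.
huber = HuberRegressor(epsilon=1.35, max_iter=1000).fit(X, y)

print(f"true slope 2.00 | OLS {ols.coef_[0]:.2f} | Huber {huber.coef_[0]:.2f}")
```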

Troubleshooting Guides

Problem: My model's predictions are inaccurate and biased.

Potential Cause: Outliers are exerting undue influence on the training of your predictive model, pulling the predicted values toward them and reducing the model's generalizability [11] [13].

Solution: Implement a robust data preprocessing pipeline.

- Detect: Use the IQR method or Z-scores (if your data is normally distributed) to identify outliers [1].

- Handle: Choose a treatment strategy based on your investigation.

- Winsorization: Cap the extreme values. For instance, set all values above the 95th percentile to the value of the 95th percentile. This reduces the influence of the outlier without removing the data point entirely [1] [2].

- Transformation: Apply a mathematical transformation like a logarithm to the entire dataset. This can compress the scale and reduce the relative distance of outliers [11].

- Use Robust Models: Switch to algorithms like Random Forest or leverage robust statistical methods that are less sensitive to extreme values [10] [11].
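Both treatments take only a few lines of NumPy. The sketch below winsorizes at the 5th and 95th percentiles and applies a base-10 logarithm. The `winsorize` helper is our own (SciPy also ships a winsorizing function with a different interface), and the turnover-frequency values are synthetic.

```python
import numpy as np


def winsorize(values, lower_pct=5.0, upper_pct=95.0):
    """Cap values outside the given percentiles at those percentile values."""
    x = np.asarray(values, dtype=float)
    lo, hi = np.percentile(x, [lower_pct, upper_pct])
    return np.clip(x, lo, hi)


# Synthetic turnover frequencies (h^-1): 1..99 plus one extreme reading.
tof = np.append(np.arange(1.0, 100.0), 10_000.0)

tof_w = winsorize(tof)   # same length; extremes capped
tof_log = np.log10(tof)  # requires strictly positive values

print(f"max raw {tof.max():.0f} -> winsorized {tof_w.max():.2f}")
print(f"log10 range: {tof_log.min():.2f} to {tof_log.max():.2f}")
```

Note that two-sided winsorization also raises the lowest values. Use a one-sided cap if only the upper tail is suspect.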

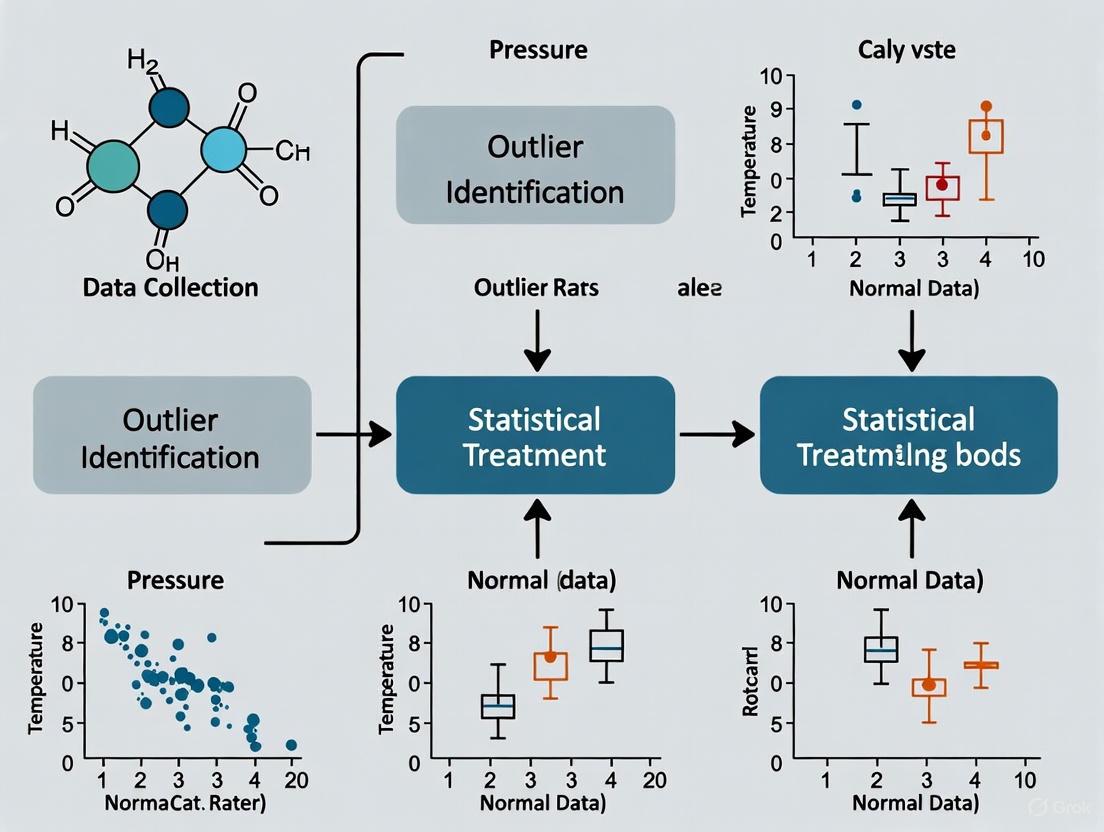

Experimental Workflow: The following workflow outlines a systematic approach to managing outliers in your data analysis pipeline.

Problem: My descriptive statistics are misleading.

Potential Cause: A single outlier can drastically skew the mean and inflate the standard deviation, giving a false representation of where the bulk of your data lies and its variability [11] [12] [13]. For example, one highly active catalyst can make an entire library appear more promising than it is.

Solution: Use robust descriptive statistics that are resistant to outliers.

- Use median instead of mean for central tendency.

- Use Interquartile Range (IQR) or Median Absolute Deviation (MAD) instead of standard deviation for spread [12] [9].

Comparison of Statistical Measures:

| Measure Type | Standard Measure (Sensitive to Outliers) | Robust Alternative (Resistant to Outliers) |
|---|---|---|
| Central Tendency | Mean (\( \mu \)) | Median |
| Statistical Dispersion | Standard Deviation (\( \sigma \)) | Interquartile Range (IQR) or Median Absolute Deviation (MAD) |
| Visualization | Line chart (for mean) | Box plot |
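The sketch below contrasts the standard and robust summaries on a hypothetical library of turnover frequencies in which one catalyst is far more active than the rest. NumPy has no built-in MAD, so it is computed directly here. SciPy's `scipy.stats.median_abs_deviation` is an alternative.

```python
import numpy as np


def describe(values):
    """Standard versus outlier-resistant summaries of a 1-D sample."""
    x = np.asarray(values, dtype=float)
    q1, q3 = np.percentile(x, [25, 75])
    med = np.median(x)
    mad = np.median(np.abs(x - med))
    return {
        "mean": x.mean(),
        "median": med,
        "std": x.std(ddof=1),
        "iqr": q3 - q1,
        "mad": mad,
        # Multiplying MAD by ~1.4826 makes it comparable to the SD for normal data.
        "mad_scaled": 1.4826 * mad,
    }


# Hypothetical TOFs (h^-1): five typical catalysts and one exceptional one.
tof = [10.0, 11.0, 12.0, 13.0, 14.0, 200.0]
for name, value in describe(tof).items():
    print(f"{name:>10}: {value:8.2f}")
```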

Problem: I am getting too many false positives in outlier detection.

Potential Cause: The detection method (e.g., Z-score) might be too sensitive for your data's distribution, or the parameters (like the Z-score threshold) might be set too strictly [2].

Solution: Optimize your detection methodology.

- Check for Non-Normality: If your data is not normally distributed, avoid Z-scores. Use the IQR method, which is non-parametric and doesn't assume a distribution [9].

- Leverage Machine Learning: For complex, multi-dimensional catalytic data (e.g., features like d-band center, width, filling), use advanced algorithms like Isolation Forest or DBSCAN [11] [1]. These can detect multivariate outliers that univariate methods miss: points that look unremarkable in each feature separately but are unusual in combination.

- Adjust Parameters: Calibrate the sensitivity of your detection method. For example, in DBSCAN, adjusting the `eps` (neighborhood distance) and `min_samples` parameters can help fine-tune what is considered an outlier point [1] [13].
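The effect of `eps` is easy to see on a toy example. The sketch below, assuming scikit-learn, runs DBSCAN twice on the same hypothetical two-descriptor data. The descriptors are already on comparable scales here; in practice, standardize them first. Points labeled -1 are treated as noise, that is, outliers.

```python
import numpy as np
from sklearn.cluster import DBSCAN

# Hypothetical, already-scaled descriptor pairs: two tight groups of four
# catalysts plus one isolated catalyst (last row).
X = np.array([
    [0.0, 0.0], [0.1, 0.0], [0.0, 0.1], [0.1, 0.1],
    [5.0, 5.0], [5.1, 5.0], [5.0, 5.1], [5.1, 5.1],
    [10.0, 0.0],
])

# min_samples counts the point itself; -1 marks noise (outliers).
loose = DBSCAN(eps=0.5, min_samples=3).fit_predict(X)
tight = DBSCAN(eps=0.05, min_samples=3).fit_predict(X)

print("eps=0.5 :", loose)   # only the isolated catalyst is noise
print("eps=0.05:", tight)   # neighborhood too small: everything is noise
```

An `eps` that is too small produces a flood of false positives, which is exactly the failure mode described above. A k-distance plot is a common aid for choosing it.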

Research Reagent Solutions: The Outlier Management Toolkit

This table details key methodological "reagents" for handling outliers in your research.

| Tool / Technique | Function | Key Considerations |
|---|---|---|
| IQR Method | Identifies outliers based on data quartiles. Robust to non-normal distributions [11] [9]. | Simple and effective for univariate data. May not be suitable for multivariate contexts. |
| Z-Score | Measures standard deviations from the mean. Effective for normally distributed data [1] [12]. | Sensitive to outliers itself (as mean and SD are influenced by extremes). |
| Winsorization | Caps extreme values at a specific percentile (e.g., 5th and 95th). Reduces influence without removal [1] [12]. | Preserves sample size but alters the true value of extreme points. |
| Isolation Forest | ML algorithm that isolates anomalies based on their ease of separation. Efficient for high-dimensional data [11] [1]. | Requires hyperparameter tuning for optimal performance. |
| DBSCAN | A clustering algorithm that identifies outliers as points in low-density regions. Good for spatial data [1] [13]. | Sensitive to its parameter settings (epsilon and minPts). |
| Robust Regression | Modeling techniques (e.g., using Huber loss) that are less sensitive to outliers in the dependent variable [11] [12]. | Prevents model parameters from being skewed by anomalous target values. |

Advanced Methodologies: Outlier Detection in Multivariate Catalyst Data

When working with high-dimensional catalyst data (e.g., combining electronic descriptors like d-band center, d-band filling, and geometric features), univariate methods fall short. The following protocol uses Principal Component Analysis (PCA) to reduce dimensionality before detecting outliers.

Protocol: Multivariate Outlier Detection using PCA

- Standardize the Data: Scale all features to have a mean of zero and a standard deviation of one to ensure they contribute equally to the PCA [5].

- Apply PCA: Perform PCA on the standardized dataset. This transforms your original features into new, uncorrelated components that capture the direction of maximum variance in the data [5].

- Project Data: Project your standardized data onto the first few principal components (PCs) that together explain the majority of the variance (e.g., 95%) [5].

- Detect Outliers: On the reduced-dimensionality data (scores of the selected PCs), use a distance metric such as the Mahalanobis distance to detect observations that are far from the centroid of the data in this new space [12]. Computing that distance from a robust estimate of location and covariance (for example, the Minimum Covariance Determinant) keeps the outliers themselves from inflating the covariance and masking one another. Alternatively, visual inspection of the PCA scores plot (e.g., PC1 vs. PC2) can often reveal outliers as isolated points [5].
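A sketch of the full protocol, assuming scikit-learn and SciPy. It uses `MinCovDet` as the robust covariance estimate. The cutoff for squared Mahalanobis distances is a chi-square quantile, which presumes the retained scores are roughly multivariate normal. The synthetic data (two pairs of strongly correlated descriptors) and the 97.5% quantile are illustrative assumptions.

```python
import numpy as np
from scipy.stats import chi2
from sklearn.covariance import MinCovDet
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler


def pca_mahalanobis_outliers(X, variance_target=0.95, quantile=0.975, random_state=0):
    """Flag rows far from the data centroid in the space of retained PC scores."""
    Z = StandardScaler().fit_transform(X)                       # standardize
    pca = PCA(n_components=variance_target, svd_solver="full")  # keep >= 95% variance
    scores = pca.fit_transform(Z)                               # project
    mcd = MinCovDet(random_state=random_state).fit(scores)      # robust location/covariance
    d2 = mcd.mahalanobis(scores)                                # squared distances
    cutoff = chi2.ppf(quantile, df=scores.shape[1])
    return np.flatnonzero(d2 > cutoff), d2, pca.n_components_


# Synthetic descriptors: features 0/1 and 2/3 are near-duplicates.
rng = np.random.default_rng(42)
a, b = rng.normal(size=200), rng.normal(size=200)
X = np.column_stack([a, a + 0.05 * rng.normal(size=200),
                     b, b + 0.05 * rng.normal(size=200)])
X = np.vstack([X, [6.0, 6.0, -6.0, -6.0]])  # planted outlier, row 200

flagged, d2, k = pca_mahalanobis_outliers(X)
print(f"{k} components retained; flagged rows: {flagged}")
```

Expect a few ordinary rows to cross a 97.5% cutoff by chance. The flagged list is a review queue, not a verdict.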

Visualizing Multivariate Detection: This diagram illustrates the conceptual process of using PCA to reveal outliers in a high-dimensional feature space.

Frequently Asked Questions

1. What are the most common sources of outliers in experimental data? Outliers typically originate from three primary sources: measurement errors (e.g., faulty instruments), data entry or processing errors (e.g., typos, incorrect unit conversions), and natural variability (genuine, rare events in the process or population being studied) [16] [17] [18]. Identifying the source is the first step in determining how to handle them.

2. Should I always remove outliers from my dataset? No, not automatically. The decision depends on the outlier's cause [17].

- Remove or correct if the outlier stems from a verifiable error in measurement or data entry [17].

- Investigate and potentially retain if it is due to natural variability, as it may represent a valuable, rare event relevant to your research [17] [19]. Removing valid extreme values can distort the true nature of your data [18].

3. How can I detect outliers in my catalytic data? The appropriate method depends on your data's structure and distribution.

- For single variables (Univariate): Use simple statistical methods like the IQR (Interquartile Range) method, which is robust for non-normal data, or Z-scores, which work well for normally distributed data [20] [21].

- For multiple variables (Multivariate): Use advanced techniques like the Local Outlier Factor (LOF) or DBSCAN, which can detect outliers based on the density and relationships between several variables [16] [21].

4. What should I do if I find an outlier but cannot determine its cause? A best practice is to perform and document a sensitivity analysis. Run your key statistical models or analyses both with and without the outlier and compare the results [17] [1]. This transparently demonstrates the outlier's influence on your conclusions.

Troubleshooting Guides

Guide 1: Diagnosing the Source of an Outlier

Follow this workflow to identify the root cause of a suspected outlier in your data.

Guide 2: Handling Outliers Based on Their Source

Once you have diagnosed the likely source, use this table to determine the appropriate handling method.

| Source of Outlier | Recommended Action | Example in Catalytic Research | Statistical Method to Consider |
|---|---|---|---|
| Data Entry Error [16] [17] | Correct the value if possible; otherwise, remove it. | A turnover frequency (TOF) recorded as 1500 h⁻¹ instead of 150 h⁻¹ due to a typo. | Data sorting and visual inspection [20]. |
| Measurement Error [16] [12] | Remove the erroneous data point. | A faulty thermocouple reports a reaction temperature of 50 °C when the actual temperature was 150 °C. | Z-score or IQR method for detection [21] [22]. |
| Natural Variability [16] [17] | Retain the data point and use robust statistical methods for analysis. | An exceptionally high product yield from a catalyst batch due to an unknown, favorable surface reconstruction. | Use median instead of mean; employ robust regression [17] [18]. |

Experimental Protocols

Protocol 1: Detecting Univariate Outliers using the IQR Method

This is a robust method for identifying outliers in a single variable, such as catalyst yield or reaction rate [20] [21].

- Calculate Quartiles: Compute the first quartile (Q1, 25th percentile) and the third quartile (Q3, 75th percentile) of your dataset.

- Compute IQR: Find the Interquartile Range (IQR): \( \mathrm{IQR} = Q_3 - Q_1 \).

- Establish Fences:

  - Lower Fence = \( Q_1 - 1.5 \times \mathrm{IQR} \)

  - Upper Fence = \( Q_3 + 1.5 \times \mathrm{IQR} \)

- Identify Outliers: Any data point that falls below the Lower Fence or above the Upper Fence is considered an outlier.

Protocol 2: Detecting Multivariate Outliers using the Local Outlier Factor (LOF)

This algorithm is ideal for identifying outliers when your analysis involves multiple related parameters (e.g., temperature, pressure, and conversion rate simultaneously) [16] [21].

- Data Preparation: Standardize your multivariate dataset and arrange it as a two-dimensional array (one row per sample, one column per variable).

- Model Fitting: Choose the number of neighbors (`n_neighbors`) and a contamination parameter (the expected proportion of outliers). Fit the LOF model to your data.

- Prediction: The algorithm assigns an outlier score to each point. Points whose local density is significantly lower than that of their neighbors are flagged as outliers (typically labeled as -1).

- Interpretation: Investigate the data points flagged by the LOF algorithm to understand why, in the context of multiple variables, they are considered unusual.
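A minimal sketch of Protocol 2, assuming scikit-learn's `LocalOutlierFactor`. The temperature, pressure, and conversion values are synthetic: conversion tracks temperature, and one planted run reports a high conversion at a low temperature. The `n_neighbors=20` and `contamination=0.02` settings are illustrative, not recommendations.

```python
import numpy as np
from sklearn.neighbors import LocalOutlierFactor
from sklearn.preprocessing import StandardScaler

# Synthetic runs: conversion rises with temperature; pressure is independent.
rng = np.random.default_rng(0)
n = 150
temperature = rng.normal(500.0, 10.0, n)  # °C
pressure = rng.normal(20.0, 1.0, n)       # bar
conversion = 0.5 + 0.01 * (temperature - 500.0) + rng.normal(0.0, 0.01, n)

# Planted run: no single value is extreme, but high conversion at low T is unusual.
X = np.column_stack([temperature, pressure, conversion])
X = np.vstack([X, [480.0, 20.0, 0.75]])

# Data preparation: standardize so each variable contributes comparably.
Z = StandardScaler().fit_transform(X)

# Model fitting and prediction: -1 = outlier, 1 = inlier.
lof = LocalOutlierFactor(n_neighbors=20, contamination=0.02)
labels = lof.fit_predict(Z)
scores = -lof.negative_outlier_factor_  # larger = more anomalous

print("flagged rows:", np.flatnonzero(labels == -1))
print(f"LOF score of planted run: {scores[-1]:.1f}")
```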

The Scientist's Toolkit: Key Reagents & Software for Outlier Management

| Item | Function/Brief Explanation |
|---|---|
| Statistical Software (Python/R) | For implementing statistical detection methods (Z-score, IQR) and advanced machine learning models (LOF, Isolation Forest) [21]. |
| Visualization Libraries (Seaborn, Matplotlib) | To create box plots and scatter plots for the initial visual identification of outliers [20] [22]. |
| Robust Statistical Estimators | Use the median and interquartile range (IQR) instead of mean and standard deviation for initial data description, as they are less sensitive to outliers [20] [18]. |
| Database with Audit Trail | Maintains a record of original data, allowing for the verification and correction of data entry errors [17]. |
| Sensitivity Analysis Plan | A pre-defined protocol for comparing analytical results with and without outliers to assess their impact [17] [1]. |

The Role of Domain Expertise in Distinguishing Error from Critical Anomaly

Frequently Asked Questions (FAQs)

Q1: What is the practical difference between a data error and a critical anomaly in catalytic research? A data error, such as a data entry mistake or sensor malfunction, introduces inaccuracy and should be corrected or removed. A critical anomaly, however, is a legitimate data point that deviates significantly from the norm and may indicate a novel catalytic behavior or a breakthrough material property. For example, in catalyst optimization, an unexpected adsorption energy could signal a highly effective new alloy. Distinguishing between the two requires domain expertise to interpret the scientific context of the deviation [8] [5].

Q2: Why can't automated statistical methods alone make this distinction? Automated methods like Z-scores or Interquartile Range (IQR) are excellent at flagging statistical deviations [23]. However, they lack the scientific context to determine the cause or significance of the deviation. A point flagged as an outlier by an IQR test could be a transcription error (e.g., a misplaced decimal) or a genuinely high-performing catalyst. Only a scientist with expertise in electrocatalysis can interpret whether the anomaly aligns with known principles, such as d-band theory, or suggests a new discovery [5] [2].

Q3: What are the risks of misclassifying an anomaly? Misclassification can have significant consequences:

- False Positive (Treating an error as critical): Wastes valuable R&D resources chasing an artifact and can lead to erroneous scientific conclusions.

- False Negative (Treating a critical anomaly as an error): Overlooking a potential scientific breakthrough or a critical fault in an experimental setup, such as a malfunctioning reactor [2] [24].

Q4: How can I incorporate domain knowledge into a statistical anomaly detection workflow? The most effective approach is a hybrid workflow. Use statistical methods as a first pass to flag potential outliers. Then, subject these flagged data points to a review process guided by domain expertise. This involves checking the anomaly against known catalytic descriptors (e.g., d-band center), experimental conditions, and synthesis parameters [5]. Establishing documented procedures for this investigation ensures consistency and accountability [8].

Troubleshooting Guides

Guide 1: Investigating a Point Anomaly in Adsorption Energy Measurements

Problem: A statistical process control chart flags a single, extreme value for the adsorption energy of oxygen on a new bimetallic catalyst.

Investigation Steps:

Verify Data Integrity:

- Action: Check the raw data source for entry errors. Correlate the anomalous reading with logs from the analytical equipment (e.g., spectrometer).

- Domain Context: Confirm that the experimental run ID associated with the anomaly does not have documented issues, such as incorrect temperature calibration or contaminated precursors.

Contextualize the Measurement:

- Action: Plot the anomalous value against key electronic-structure descriptors, such as the d-band center or d-band filling, for your catalyst dataset [5].

- Domain Context: Determine if the outlier aligns with the predicted trend described by chemisorption theory. An outlier that fits the electronic-structure model may be critical, not an error.

Assess Experimental Replicability:

- Action: If possible, re-run the experiment under identical conditions.

- Domain Context: A replicable anomaly strongly suggests a critical discovery. An irreplicable one points toward a transient error.

Guide 2: Diagnosing a Collective Anomaly in Catalyst Performance Screening

Problem: A machine learning model identifies a group of catalyst samples that, while individually within normal bounds, collectively show an unusual pattern of activity and stability metrics.

Investigation Steps:

Analyze Shared Characteristics:

- Action: Use feature importance analysis (e.g., SHAP analysis) to identify which parameters (e.g., composition, synthesis method) are most associated with the anomalous cluster [5].

- Domain Context: Investigate if these shared characteristics represent a known but under-explored region of the material space or an unintended deviation from the synthesis protocol.

Check for Systemic Experimental Drift:

- Action: Review the maintenance logs and calibration records for all equipment used in testing the anomalous cluster.

- Domain Context: A collective anomaly affecting a batch of samples processed on the same day could indicate a minor fault in a reactor's gas flow controller, representing an error rather than a discovery.

Triangulate with Complementary Techniques:

- Action: Characterize the "anomalous" catalysts using a different analytical method (e.g., TEM or XPS).

- Domain Context: If the secondary technique confirms unusual structural or surface properties, the collective anomaly is likely critical and warrants further investigation.

The following tables summarize key statistical methods and anomaly types relevant to catalytic data analysis.

Table 1: Common Statistical Methods for Anomaly Detection

| Method | Principle | Best Use Case in Catalysis | Limitations |
|---|---|---|---|
| Z-Score [25] [23] | Measures standard deviations from the mean. | Identifying univariate outliers in normally distributed data (e.g., reaction yield). | Assumes normal distribution; sensitive to existing outliers. |
| Interquartile Range (IQR) [8] [23] | Uses quartiles to define a "normal" range. Resistant to outliers. | Detecting outliers in skewed distributions (e.g., catalyst particle size). | Univariate; may not capture contextual anomalies. |
| DBSCAN (Clustering) [25] | Groups dense data points; outliers are in low-density regions. | Finding novel catalyst compositions in a high-dimensional feature space. | Sensitive to parameter selection; harder to explain. |

Table 2: Typology of Data Anomalies in Catalytic Research

| Anomaly Type | Description | Catalytic Research Example | Potential Cause |
|---|---|---|---|
| Point Anomaly [8] [24] | A single data point deviating significantly. | One catalyst sample shows a turnover frequency (TOF) 10x higher than all others. | Measurement error, unique active site, or data entry mistake. |
| Contextual Anomaly [8] [24] | A data point that is unusual in a specific context. | A catalyst's selectivity drops unexpectedly at a standard temperature. | Subtle precursor impurity or unrecognized side reaction. |
| Collective Anomaly [8] [26] | A group of data points that are anomalous together. | A series of experiments show a correlated, slight drop in activity and stability. | Gradual deactivation of testing equipment or a new decomposition mechanism. |

Experimental Protocols

Protocol 1: Establishing a Baseline for Catalyst Performance

Objective: To define the expected range and pattern of catalytic performance data, which serves as the foundation for anomaly detection.

Methodology:

- Data Collection: Compile historical data from high-throughput experimentation, including key performance metrics (e.g., adsorption energy, overpotential, conversion rate, selectivity) and catalyst descriptors (e.g., d-band center, composition) [5].

- Descriptive Statistics: For each key metric, calculate the mean, median, standard deviation, and interquartile range (IQR).

- Visualization: Create control charts (time-series plots with mean and control limits) and boxplots to visualize the central tendency, spread, and existing outliers of the data [8] [25].

- Define Thresholds: Set initial statistical thresholds for alerts. A common practice is to use the IQR to define the lower and upper bounds \( [Q_1 - 1.5 \times \mathrm{IQR},\; Q_3 + 1.5 \times \mathrm{IQR}] \). Data points outside these bounds are flagged for review [23].
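A sketch of this protocol under simple assumptions. The baseline is computed once from historical values. New measurements are then compared with both the IQR bounds and individuals-chart control limits. The control limits are mean ± 3σ, with σ estimated as the average moving range divided by 1.128, a common Shewhart convention. The conversion values are hypothetical, and the helper names are our own.

```python
import numpy as np


def build_baseline(historical):
    """Descriptive statistics, IQR bounds, and individuals-chart limits."""
    x = np.asarray(historical, dtype=float)
    q1, q3 = np.percentile(x, [25, 75])
    iqr = q3 - q1
    # Individuals chart: sigma estimated from the average moving range.
    sigma_mr = np.mean(np.abs(np.diff(x))) / 1.128
    return {
        "mean": x.mean(), "median": np.median(x), "std": x.std(ddof=1), "iqr": iqr,
        "iqr_lower": q1 - 1.5 * iqr, "iqr_upper": q3 + 1.5 * iqr,
        "cl_lower": x.mean() - 3 * sigma_mr, "cl_upper": x.mean() + 3 * sigma_mr,
    }


def flag_for_review(new_values, baseline):
    """Boolean masks: outside the IQR bounds, outside the control limits."""
    v = np.asarray(new_values, dtype=float)
    outside_iqr = (v < baseline["iqr_lower"]) | (v > baseline["iqr_upper"])
    outside_cl = (v < baseline["cl_lower"]) | (v > baseline["cl_upper"])
    return outside_iqr, outside_cl


# Hypothetical historical conversions (%) for a reference catalyst, in run order.
history = [50.0, 52.0, 51.0, 49.0, 50.0, 51.0, 52.0, 50.0]
base = build_baseline(history)
iqr_flag, cl_flag = flag_for_review([50.5, 55.0, 47.0], base)
print("IQR flags:", iqr_flag, "| control-limit flags:", cl_flag)
```

The two rules can disagree. Here the 47.0% run breaches only the IQR bound, which is a reason to record which rule triggered each review.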

Protocol 2: Root Cause Analysis for Flagged Anomalies

Objective: To systematically investigate the source of a statistical anomaly and classify it as an error or a critical finding.

Methodology:

- Data Lineage Audit: Trace the anomalous data point back to its origin. Check for inconsistencies in data entry, unit conversions, or sample labeling [8].

- Experimental Condition Correlation: Cross-reference the anomaly with all logged experimental conditions (e.g., temperature, pressure, precursor batch, synthesis duration, operator). Look for correlations that might explain the deviation.

- Model-Based Interpretation: Input the anomalous catalyst's features into existing machine learning or mechanistic models (e.g., a model predicting adsorption energy from d-band properties) [5]. A large residual error might indicate a genuinely novel behavior or a model limitation.

- Expert Elicitation: Present the findings from the three preceding steps to a panel of domain experts. The panel makes a final judgment on the anomaly's nature based on collective experience and known scientific principles [2].
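The model-based interpretation step can be illustrated with a deliberately simple stand-in for an "existing model": a straight-line fit of adsorption energy against d-band center on reference data, used to compute a standardized residual for a flagged catalyst. The data, the linear form, and the function name standardized_residual are illustrative assumptions, not a validated adsorption-energy model, and this simple standardization ignores leverage.

```python
import numpy as np

# Hypothetical reference set: d-band centre (eV) vs. adsorption energy (eV).
d_ref = np.array([-2.6, -2.4, -2.2, -2.0, -1.8, -1.6, -1.4, -1.2, -1.0, -0.8])
e_ref = np.array([-1.58, -1.41, -1.27, -1.09, -0.95, -0.78, -0.63, -0.47, -0.31, -0.15])

# Stand-in "existing model": a least-squares straight line through the reference data.
slope, intercept = np.polyfit(d_ref, e_ref, deg=1)
ref_residuals = e_ref - (slope * d_ref + intercept)
resid_sd = ref_residuals.std(ddof=2)  # two fitted parameters (slope, intercept)


def standardized_residual(d_band_center, adsorption_energy):
    """How many residual SDs a measurement sits from the model's prediction."""
    predicted = slope * d_band_center + intercept
    return (adsorption_energy - predicted) / resid_sd


# A flagged catalyst far from the trend vs. one consistent with it.
for e_obs in (-0.30, -0.70):
    print(e_obs, round(standardized_residual(-1.5, e_obs), 1))
```

A very large residual only says the point disagrees with this model; whether that reflects novel chemistry or a model limitation is exactly the question the expert elicitation step has to answer.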

Workflow Visualization

Anomaly Investigation Workflow

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Tools for Anomaly Investigation in Catalytic Data

| Tool / Solution | Function in Anomaly Investigation |
|---|---|
| Electronic-Structure Descriptors [5] | Parameters like d-band center, d-band width, and d-band filling provide a theoretical context to interpret whether an anomalous adsorption energy is physically plausible. |
| SHAP (SHapley Additive exPlanations) Analysis [5] | A method for interpreting machine learning output. It helps identify which catalyst features (e.g., composition, surface area) were most influential in causing a model to flag a data point as anomalous. |
| IQR (Interquartile Range) Method [8] [23] | A robust statistical technique used to establish a baseline range for "normal" data and to flag potential outliers for further investigation in a univariate context. |
| Knowledge Graphs [5] | A structured representation of domain knowledge that links catalysts, properties, and performance. It can be used to check if an anomaly conflicts with or extends established scientific relationships. |
| Winsorizing Techniques [2] | A data cleaning strategy that mitigates the impact of extreme values by limiting them to a specified percentile, reducing skew without removing the data point entirely. |

For researchers handling catalytic data, box plots and scatter plots are essential first steps for identifying outliers—data points that fall outside the expected range and could skew your results. These visual tools provide a fast, intuitive way to spot potential data issues before advanced statistical analysis.

- Use a Box Plot to examine the distribution of a single variable and identify outliers in its values [27] [28].

- Use a Scatter Plot to explore the relationship between two variables and detect outliers from the expected correlation pattern [29].

The workflow below illustrates how these tools integrate into a comprehensive outlier management strategy for catalytic research.

Frequently Asked Questions (FAQs)

1. How does a box plot define and identify an outlier? A box plot uses the Interquartile Range (IQR) to establish an expected range for the data. The IQR is the distance between the 25th percentile (Q1) and the 75th percentile (Q3) of your dataset. The "whiskers" of the plot typically extend to the smallest and largest values that lie within 1.5 times the IQR of the quartiles. Any data point beyond the whiskers is plotted as an individual point and classified as a potential outlier [27] [28] [30].

- Upper Boundary = Q3 + (1.5 × IQR)

- Lower Boundary = Q1 – (1.5 × IQR)
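To make the box-plot rule concrete, the sketch below computes the quartiles, fences, whisker ends, and outliers for a small hypothetical set of turnover frequencies. It highlights a detail that is easy to miss: the whiskers stop at the most extreme observations inside the fences, not at the fences themselves. The function name box_plot_elements and the values are assumptions for illustration; quartiles come from np.percentile with its default linear interpolation.

```python
import numpy as np


def box_plot_elements(values, k=1.5):
    """Quartiles, fences, whisker ends, and outliers as drawn in a standard box plot."""
    x = np.sort(np.asarray(values, dtype=float))
    q1, q3 = np.percentile(x, [25, 75])  # linear interpolation (NumPy default)
    iqr = q3 - q1
    lower_fence, upper_fence = q1 - k * iqr, q3 + k * iqr
    inside = x[(x >= lower_fence) & (x <= upper_fence)]
    return {
        "q1": q1,
        "q3": q3,
        "lower_fence": lower_fence,
        "upper_fence": upper_fence,
        # Whiskers end at the most extreme observations still inside the fences.
        "lower_whisker": inside.min(),
        "upper_whisker": inside.max(),
        "outliers": x[(x < lower_fence) | (x > upper_fence)],
    }


# Hypothetical turnover frequencies (s^-1) for nine catalyst samples.
tof = [1.2, 1.4, 1.5, 1.5, 1.6, 1.7, 1.8, 2.0, 4.9]
elems = box_plot_elements(tof)
print(elems)
```

Matplotlib's boxplot applies the same 1.5×IQR whisker rule by default (its whis parameter), so a plot of these values should show the upper whisker at 2.0 rather than at the fence, with 4.9 drawn as a separate point.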

2. I've found an outlier in my catalytic data. What should I do next? An outlier is not necessarily an error; it could be a significant discovery. Your investigation should follow a structured path [27] [28]:

- Check for Data Entry Errors: Verify the data was transcribed correctly from lab notebooks or instrumentation outputs.

- Review Experimental Notes: Scrutinize the lab conditions, catalyst batch, or instrumentation logs for the specific experiment that produced the outlier. Look for any noted anomalies or deviations from the standard protocol.

- Determine Biological/Chemical Significance: If no technical error is found, the outlier may represent a true, unexpected result. In catalytic research, this could indicate a novel catalyst behavior or a previously unobserved reaction pathway worthy of further study [5].

3. When should I use a scatter plot over a box plot for outlier detection? The choice depends on the nature of your analysis [27] [29]:

- Box Plot: Best for analyzing the distribution and finding outliers within a single variable (e.g., finding unusual values in the adsorption energy dataset).

- Scatter Plot: Essential for detecting outliers within the context of the relationship between two variables. A point that is not an outlier in either variable alone can be a clear outlier when the relationship is considered (e.g., a catalyst with normal d-band center and normal stability, but an anomalous combination of the two).
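The scatter-plot point can be checked numerically. The sketch below uses the Mahalanobis distance, a standard multivariate technique swapped in here for illustration (it is not named in the cited sources), which measures distance from the center while accounting for the correlation between the two variables. The data are hypothetical: twenty catalysts whose stability score tracks a d-band descriptor, plus one catalyst whose individual values are unremarkable but whose combination is not.

```python
import numpy as np


def mahalanobis_distances(X):
    """Distance of each row from the sample mean, scaled by the sample covariance."""
    X = np.asarray(X, dtype=float)
    centered = X - X.mean(axis=0)
    cov_inv = np.linalg.inv(np.cov(X, rowvar=False))
    # Row-wise quadratic form: sqrt(c_i^T S^-1 c_i)
    return np.sqrt(np.einsum("ij,jk,ik->i", centered, cov_inv, centered))


# Hypothetical data: stability score closely tracks a d-band descriptor.
descriptor = np.arange(20) * 0.1
stability = descriptor + 0.05 * (-1.0) ** np.arange(20)
X = np.column_stack([descriptor, stability])
# Catalyst 20: each value lies inside the observed ranges, but the pair breaks the trend.
X = np.vstack([X, [0.2, 1.7]])

dist = mahalanobis_distances(X)
z_each = (X - X.mean(axis=0)) / X.std(axis=0)
print("per-variable |z| of catalyst 20:", np.abs(z_each[20]).round(2))
print("Mahalanobis distance of catalyst 20:", dist[20].round(2))
print("largest distance among the others:", np.delete(dist, 20).max().round(2))
```

Because the mean and covariance are estimated from all points, several outliers together can mask one another; robust covariance estimates are often preferred for that reason.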

4. A scatter plot shows a correlation between my variables. Can I conclude one causes the other? No. This is a critical distinction in scientific research. A scatter plot reveals correlation, not causation [29]. An observed relationship could be influenced by an unmeasured third variable. For example, a correlation between catalyst activity and stability might be driven by a shared underlying factor like particle size. Further controlled experiments are required to establish a true causal link.

Troubleshooting Guide: Common Visualization Problems

| Problem & Symptoms | Possible Cause | Solution & Next Steps |
|---|---|---|
| Overwhelming Number of Outliers: Box plot shows many data points beyond the whiskers [28]. | The data may come from a non-normal or heavily skewed distribution. The 1.5×IQR rule, while standard, might not be suitable for all data types. | Verify data distribution with a histogram. Consider data transformation (e.g., log transform) to normalize the distribution. Document your choice of outlier criteria. |
| Misleading Scatter Plot: The plot shows no clear pattern, making outliers difficult to define [29]. | The axis scaling might be inappropriate, or there may be no strong relationship between the two chosen variables. | Ensure axes start at zero or use consistent scaling to avoid visual distortion [29]. Re-evaluate variable selection; the chosen metrics might be unrelated. |
| Hidden Multimodal Data: The box plot looks fine, but a histogram reveals multiple peaks (sub-populations) that are grouped together [28]. | The dataset might contain unidentified groups (e.g., data from two different catalyst synthesis methods combined into one dataset). | Always pair box plots with histograms or density plots during initial exploration [27]. Color-code data points in box and scatter plots by a potential grouping variable (e.g., synthesis batch) to reveal hidden structures. |

The Scientist's Toolkit: Research Reagent Solutions

The following table details key computational and statistical "reagents" essential for conducting robust outlier detection in catalytic data analysis.

| Tool / Solution | Function in Outlier Detection |
|---|---|
| Interquartile Range (IQR) | A core measure of statistical dispersion used to set the boundaries for outlier detection in box plots [27] [30]. |
| Statistical Software (R, Python) | Platforms that automate the calculation of summary statistics and generation of publication-quality box plots and scatter plots [28]. |
| d-band Descriptors | Electronic structure features (e.g., d-band center, filling, width) that serve as key variables in scatter plots to identify outlier catalysts with anomalous adsorption properties [5]. |
| SHAP (SHapley Additive exPlanations) | An advanced method from machine learning that helps interpret complex models and can be used to understand which features contribute most to a data point being flagged as an outlier [5]. |
| Principal Component Analysis (PCA) | A dimensionality reduction technique that can transform multiple correlated variables into principal components, allowing for outlier detection in multidimensional space beyond what 2D scatter plots can show [5]. |

Practical Statistical Techniques for Outlier Detection and Treatment in Catalysis Research

Applying the Interquartile Range (IQR) Method for Robust Univariate Outlier Detection

FAQ: The IQR Method for Outlier Detection

Q1: What is an outlier, and why is detecting them crucial in catalytic data research? An outlier is a data point that deviates markedly from other observations in the sample [31]. In catalytic research, outliers can arise from measurement errors, faulty sensors, natural variance in experimental conditions, or genuine novel discoveries [32] [33]. Detecting them is critical because they can significantly skew statistical analyses (like reaction rate calculations), impact the mean of your dataset, and lead to inaccurate models and conclusions [32].

Q2: Why should I use the IQR method over other techniques, like the Z-score? The IQR method is non-parametric and robust, meaning it does not assume your data follows a normal distribution [34]. Catalytic data is often skewed or contains multiple peaks, making Z-score methods (which rely on normality) less reliable. The IQR is resistant to extreme values because it is based on percentiles (Q1 and Q3) rather than on the mean and standard deviation, which can easily be distorted by the outliers themselves [34] [35].

Q3: How is the IQR calculated, and what are the key quartiles? The IQR is the range of the middle 50% of your data. It is calculated by first finding the three quartiles that divide your sorted dataset into four equal parts [36].

- Q1 (First Quartile): The median of the lower half of the data (25th percentile).

- Q2 (Second Quartile): The median of the entire dataset (50th percentile).

- Q3 (Third Quartile): The median of the upper half of the data (75th percentile).

The formula for IQR is: IQR = Q3 - Q1 [36] [34].

Q4: What is the standard multiplier, and why is it 1.5? The standard multiplier of 1.5 is a convention that balances sensitivity and robustness [34]. It creates fences that, for a perfectly normal distribution, are approximately equivalent to ±2.7 standard deviations, capturing over 99% of the data. This makes it effective at flagging extreme values without being overly aggressive [35]. For a more stringent analysis, a higher multiplier (e.g., 3.0) can be used to identify only "extreme" outliers [37].
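The ±2.7 standard deviation figure follows from the quartiles of the standard normal distribution: Q3 lies at about 0.6745σ, the IQR is about 1.349σ, so the upper fence sits at roughly 0.6745 + 1.5 × 1.349 ≈ 2.698σ. The short SciPy calculation below reproduces this; the helper name fence_in_sd is hypothetical.

```python
from scipy.stats import norm


def fence_in_sd(k=1.5):
    """Upper IQR fence of a standard normal distribution, in standard deviations."""
    q3 = norm.ppf(0.75)  # third quartile of N(0, 1), about 0.6745
    iqr = 2 * q3         # symmetric distribution: Q3 - Q1 = 2 * Q3
    return q3 + k * iqr


for k in (1.5, 3.0):
    fence = fence_in_sd(k)
    coverage = norm.cdf(fence) - norm.cdf(-fence)  # share of normal data inside the fences
    print(f"k = {k}: fences at ±{fence:.3f} SD, {coverage:.4%} of normal data inside")
```

With k = 3.0 the fences move out to roughly ±4.7σ, which is why that multiplier flags only extreme outliers.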

Q5: What should I do with outliers once I detect them? The action depends on the root cause, which requires domain expertise [38] [37].

- Remove them: If the outlier is due to a measurement or data entry error, removal is appropriate [38].

- Cap them (Winsorizing): Replace each outlier with the nearest boundary value (the upper or lower fence) or, in classical Winsorizing, with the value at a chosen percentile. This maintains your sample size while reducing the outlier's influence [34].

- Investigate them: In research, outliers can sometimes be the most interesting data points, indicating a novel catalytic behavior or a previously unknown reaction pathway [33]. Never automatically delete data points without context.
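The two capping options can be sketched with NumPy and SciPy on the catalytic-yield values used in the IQR protocol later in this section. Capping at the IQR fences uses the fences from that worked example (3.5 and 63.5); classical Winsorizing via scipy.stats.mstats.winsorize instead replaces the most extreme fraction of values in each tail (10% here, an arbitrary illustrative choice) with the nearest remaining value.

```python
import numpy as np
from scipy.stats.mstats import winsorize

yields = np.array([64, 35, 29, 41, 53, 26, 28, 31, 37, 24, 22], dtype=float)

# Option 1: cap values beyond the IQR fences at the fence values themselves.
lower_fence, upper_fence = 3.5, 63.5  # fences from the worked IQR example
capped = np.clip(yields, lower_fence, upper_fence)

# Option 2: classical Winsorizing at percentiles. With n = 11 and 10% limits,
# one value in each tail is replaced by its nearest remaining neighbour.
winsorized = np.asarray(winsorize(yields, limits=(0.1, 0.1)))

for label, values in [("raw", yields), ("capped", capped), ("winsorized", winsorized)]:
    print(f"{label:>10}: mean = {values.mean():.2f}, max = {values.max():.1f}")
```

Whichever option is used, the original values should be kept alongside the capped ones so the decision can be audited later.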

Troubleshooting Guide: Common Issues with IQR Application

| Problem | Possible Cause | Solution |
|---|---|---|
| Too many outliers are detected. | The dataset is highly skewed, or the multiplier (1.5) is too sensitive for your context. | Visualize the data with a box plot and histogram to understand the distribution. Consider using a larger multiplier (e.g., 3.0) to define more stringent fences [37]. |
| No outliers are detected, but some points look extreme. | The IQR may be too wide due to a spread-out dataset, "masking" potential outliers. | Use graphical methods (e.g., scatter plots) to complement the IQR analysis. Consider if a different outlier detection method is more suitable for your data's distribution [31]. |
| Inconsistent Q1/Q3 values between software. | Different interpolation methods for calculating percentiles. | Specify the interpolation method explicitly and use it consistently (e.g., method='linear', the default in NumPy's numpy.percentile; NumPy releases before 1.22 call this argument interpolation) [39]. |
| Uncertain whether to remove an outlier. | The root cause of the outlier is unknown. | Do not remove the data point. Instead, conduct the analysis both with and without the outlier and report the differences. Consult the experimental notes for potential errors [38] [37]. |
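The quartile discrepancy is easy to reproduce. The sketch below computes Q1 and Q3 of the sorted catalytic-yield data from the protocol that follows, using several numpy.percentile methods and the median-of-halves rule. It assumes NumPy 1.22 or later, where the argument is named method.

```python
import numpy as np

sorted_yields = np.array([22, 24, 26, 28, 29, 31, 35, 37, 41, 53, 64], dtype=float)

quartiles = {}
for method in ("linear", "lower", "higher", "midpoint"):
    # `method=` requires NumPy >= 1.22 (older releases: `interpolation=`).
    q1, q3 = np.percentile(sorted_yields, [25, 75], method=method)
    quartiles[method] = (float(q1), float(q3))

# Median-of-halves rule used in the hand calculation (median excluded, n odd).
half = len(sorted_yields) // 2
quartiles["median_of_halves"] = (
    float(np.median(sorted_yields[:half])),
    float(np.median(sorted_yields[-half:])),
)

for name, (q1, q3) in quartiles.items():
    print(f"{name:>16}: Q1 = {q1}, Q3 = {q3}, IQR = {q3 - q1}")
```

For these eleven values, linear interpolation (also the default in pandas' Series.quantile) gives Q1 = 27 and Q3 = 39, whereas the median-of-halves rule gives 26 and 41, so the fences, and potentially the list of flagged points, depend on the convention chosen.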

Experimental Protocol: IQR-Based Outlier Detection

This protocol provides a step-by-step methodology for applying the IQR method to a univariate dataset, such as a series of catalyst yield measurements.

1. Data Preparation and Sorting: Begin with a univariate dataset and sort all data points in ascending order [38].

- Example Dataset (Catalytic Yield %): 64, 35, 29, 41, 53, 26, 28, 31, 37, 24, 22 [38]

- Sorted Data: 22, 24, 26, 28, 29, 31, 35, 37, 41, 53, 64

2. Calculation of Quartiles and IQR: Calculate the key quartiles and the IQR. Because this dataset has an odd number of observations (n = 11), the median (Q2) is the 6th value, 31, and the lower and upper halves used for Q1 and Q3 exclude it.

- Q1 (Median of lower half): The median of 22, 24, 26, 28, 29 is 26 [38].

- Q3 (Median of upper half): The median of 35, 37, 41, 53, 64 is 41 [38].

- IQR: IQR = Q3 - Q1 = 41 - 26 = 15 [38].

3. Definition of Outlier Boundaries (Fences): Establish the lower and upper fences using the standard multiplier of 1.5.

- Lower Fence: Q1 - 1.5 * IQR = 26 - (1.5 * 15) = 3.5

- Upper Fence: Q3 + 1.5 * IQR = 41 + (1.5 * 15) = 63.5 [38]

4. Identification of Outliers: Any data point below the lower fence or above the upper fence is considered an outlier.

- Outliers in our dataset: The value 64 is above the upper fence of 63.5 and is flagged as an outlier [38].

5. Visualization and Documentation: Create a box plot to visually communicate the results. The box plot shows the quartiles (box edges), the IQR (box length), whiskers extending to the most extreme values still inside the fences, and outliers as individual points beyond the whiskers [34] [40]. Document all calculated values and decisions for reproducibility.

The workflow for this protocol is summarized in the following diagram:

The table below summarizes the key quantitative results from the example experimental protocol.

| Metric | Value | Description |
|---|---|---|
| Q1 (First Quartile) | 26 | 25th percentile of the catalytic yield data. |
| Q2 (Median) | 31 | Middle value of the sorted dataset. |
| Q3 (Third Quartile) | 41 | 75th percentile of the catalytic yield data. |
| IQR | 15 | Range of the middle 50% of the data (Q3 - Q1). |
| Lower Fence | 3.5 | Calculated as Q1 - 1.5 * IQR. |
| Upper Fence | 63.5 | Calculated as Q3 + 1.5 * IQR. |
| Detected Outlier | 64 | Data point exceeding the Upper Fence. |
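The five protocol steps can be reproduced in a few lines of Python as a check on the hand calculation. The sketch assumes the same median-of-halves convention as the worked example (the overall median is excluded from both halves when n is odd); the function names tukey_quartiles and iqr_outliers are hypothetical.

```python
import numpy as np


def tukey_quartiles(values):
    """Q1, median, Q3 using the median-of-halves rule (median excluded when n is odd)."""
    x = np.sort(np.asarray(values, dtype=float))
    half = len(x) // 2
    lower_half, upper_half = x[:half], x[len(x) - half:]
    return float(np.median(lower_half)), float(np.median(x)), float(np.median(upper_half))


def iqr_outliers(values, k=1.5):
    """Apply the protocol: quartiles, IQR, fences, and flagged values."""
    q1, q2, q3 = tukey_quartiles(values)
    iqr = q3 - q1
    lower_fence, upper_fence = q1 - k * iqr, q3 + k * iqr
    x = np.asarray(values, dtype=float)
    return {
        "Q1": q1, "Q2": q2, "Q3": q3, "IQR": iqr,
        "lower_fence": lower_fence, "upper_fence": upper_fence,
        "outliers": x[(x < lower_fence) | (x > upper_fence)].tolist(),
    }


yields = [64, 35, 29, 41, 53, 26, 28, 31, 37, 24, 22]  # example dataset from step 1
result = iqr_outliers(yields)
for key, value in result.items():
    print(f"{key}: {value}")
```

Passing k=3.0 applies the more stringent "extreme outlier" fences mentioned earlier without changing anything else.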

Research Reagent Solutions: Essential Tools for Analysis

The following software and libraries are essential for implementing the IQR method in a computational environment.

| Tool / Library | Function | Application in IQR Analysis |
|---|---|---|
| Python with Pandas | Data manipulation and analysis. | Used to load, sort, and manage the univariate dataset (e.g., pd.DataFrame). |
| NumPy & SciPy | Core scientific computing. | Calculate percentiles (np.percentile) and the IQR directly (scipy.stats.iqr) [39]. |
| Seaborn / Matplotlib | Data visualization. | Generate box plots for intuitive visual outlier detection and result presentation [34] [40]. |
| Jupyter Notebook | Interactive computational environment. | Provides a platform for documenting the analysis step-by-step, ensuring reproducibility. |

Utilizing Z-Scores and Modified Z-Scores for Normally Distributed Data

Frequently Asked Questions (FAQs)

1. What is the fundamental difference between a Z-score and a Modified Z-score?

The core difference lies in their calculation and robustness. The Z-score measures how many standard deviations a data point lies from the mean [41]. The Modified Z-score instead uses the median and the Median Absolute Deviation (MAD), which makes it more resistant to the influence of the outliers themselves [41] [42]. Because extreme values can significantly distort both the mean and the standard deviation, the median and MAD provide a more stable center and measure of spread for outlier detection [41].

2. When should I use the Modified Z-score over the standard Z-score?

You should prefer the Modified Z-score in the following situations [41] [42]:

- When your data is skewed or not normally distributed.

- When your dataset contains suspected outliers, as the Z-score's parameters (mean, standard deviation) are easily skewed by these very outliers.

- When working with small datasets (the Z-score method is not recommended for datasets with fewer than 12 items) [42].

3. What are the standard thresholds for identifying an outlier with each method?

The commonly accepted thresholds are [41] [31]:

- Z-score: An absolute value greater than 3.

- Modified Z-score: An absolute value greater than 3.5.

4. Can these methods be applied to small datasets?

The Modified Z-score is suitable for small datasets [42]. In contrast, the standard Z-score method becomes unreliable in small datasets and is not recommended for use with fewer than 12 items [42].
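One mathematical reason for the small-sample caveat, offered as an illustration rather than as the cited source's argument: with the population standard deviation, no point in a sample of n values can have |z| larger than √(n − 1), a bound attained when n − 1 values are identical and one differs. A threshold of 3 is therefore unreachable for n ≤ 10, however extreme a value is (with the sample standard deviation the bound is (n − 1)/√n, which leads to the same cutoff). The helper max_abs_z is hypothetical.

```python
import numpy as np


def max_abs_z(n):
    """Largest |z| attainable in a sample of size n (population SD, ddof=0)."""
    x = np.zeros(n)
    x[-1] = 1.0  # n - 1 identical values plus one different value: the extreme case
    z = (x - x.mean()) / x.std()  # np.std defaults to ddof=0, like scipy.stats.zscore
    return float(np.abs(z).max())


for n in (5, 8, 10, 11, 12, 20):
    print(f"n = {n:>2}: max |z| = {max_abs_z(n):.3f} (sqrt(n - 1) = {np.sqrt(n - 1):.3f})")
```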

5. Why is it important to identify and manage outliers in catalytic or bioassay data?

Outliers can significantly impact the accuracy and precision of your results. In bioassays, for example, a single outlier can reduce the accuracy of relative potency measurements and widen the confidence intervals, increasing measurement error and potentially raising batch failure rates [43]. Proper outlier management ensures that conclusions are based on representative data.

Troubleshooting Guides

Problem: Inconsistent outlier detection when data is skewed.

Solution: Switch from the Z-score to the Modified Z-score method.

- Cause: The Z-score assumes a normal distribution and relies on the mean and standard deviation. Skewed data can distort these parameters, leading to unreliable outlier detection [41].

- Fix: Use the Modified Z-score, which is based on the median and MAD, as it is more robust to non-normal distributions and the presence of outliers [41] [42].

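- Procedure: Compute the median of the data and its MAD, MAD = median(|x_i - median(X)|); calculate M_i = 0.6745 * (x_i - median(X)) / MAD for each point; and flag points with an absolute Modified Z-score greater than 3.5 for review [41] [42].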

Problem: Suspected outliers are affecting the very parameters used to detect them (masking).

Solution: Use the robust Modified Z-score or a graphical method like a box plot.

- Cause: Masking can occur when multiple outliers are present, preventing any single one from being detected. The Z-score is particularly susceptible because extreme values inflate the standard deviation and shift the mean [31].

- Fix: The Modified Z-score is less susceptible to masking because the median is not influenced by extreme values. Complement your analysis with a box plot, which uses quartiles to visualize outliers independently of the mean [31].

- Procedure:

- Visualize your data using a box plot to get an initial, robust view of potential outliers.

- Apply the Modified Z-score method as described above.

- Compare the results from both methods to make a well-informed decision.

Quantitative Data Comparison

The following table summarizes the key characteristics of the Z-score and Modified Z-score methods for easy comparison.

Table 1: Comparison of Z-Score and Modified Z-Score Methods for Outlier Detection

| Feature | Z-Score | Modified Z-Score |
|---|---|---|
| Measure of Center | Mean (\( \bar{x} \)) | Median (\( \tilde{x} \)) |
| Measure of Spread | Standard Deviation (s) | Median Absolute Deviation (MAD) |
| Formula | \( Z_i = \frac{x_i - \bar{x}}{s} \) | \( M_i = \frac{0.6745\,(x_i - \tilde{x})}{\text{MAD}} \) [41] [31] |
| Outlier Threshold | \( \lvert Z_i \rvert > 3 \) | \( \lvert M_i \rvert > 3.5 \) [41] [31] |
| Data Distribution Assumption | Works best for normal distribution | Robust for skewed/non-normal data [41] |
| Sensitivity to Outliers | High (parameters are sensitive) | Low (parameters are robust) [41] [42] |
| Performance on Small Datasets | Not recommended for n < 12 [42] | Suitable for small datasets [42] |

Experimental Protocol for Outlier Detection

This protocol provides a detailed methodology for detecting outliers in a univariate dataset using Python.

1. Data Import and Visualization

- Objective: Load the data and perform an initial visual assessment of its distribution.

- Procedure:

- Import the necessary libraries: pandas, seaborn, and matplotlib.pyplot.

- Load your data from a source (e.g., a CSV file) into a DataFrame.

- Generate a histogram with a Kernel Density Estimate (KDE) plot to visualize the data distribution and check for normality and potential skewness [41].

- Generate a box plot for a robust visual summary and preliminary outlier identification.

2. Outlier Detection using Z-Score

- Objective: Identify outliers assuming the data is normally distributed.

- Procedure:

- Calculate the Z-score for each data point, for example with the zscore function from scipy.stats.

- Define an outlier as a data point with an absolute Z-score greater than 3.

- Filter and flag all data points meeting this condition for further review [41].

3. Outlier Detection using Modified Z-Score

- Objective: Identify outliers in a robust manner, suitable for non-normal data.

- Procedure:

- Define a function to calculate the Modified Z-score:

- Calculate the median of the dataset.

- Compute the MAD: MAD = median(|x_i - median(X)|).

- Calculate the Modified Z-score: M_i = 0.6745 * (x_i - median(X)) / MAD [41].

- Apply this function to your data.

- Flag data points with an absolute Modified Z-score greater than 3.5 as potential outliers [41] [31].
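Steps 2 and 3 of the protocol can be sketched as one function that returns both scores side by side. It assumes pandas and SciPy are available; scipy.stats.zscore uses the population standard deviation (ddof=0) by default. The constant 0.6745 is the 75th percentile of the standard normal distribution, which puts the modified score on roughly the same scale as a Z-score for normal data. The function name flag_outliers and the example values are hypothetical.

```python
import numpy as np
import pandas as pd
from scipy import stats


def flag_outliers(series: pd.Series, z_cut: float = 3.0, mz_cut: float = 3.5) -> pd.DataFrame:
    """Z-score and Modified Z-score for each value, with outlier flags for both."""
    x = series.astype(float)
    z = stats.zscore(x.to_numpy())  # (x - mean) / SD, ddof=0 by default
    median = x.median()
    mad = (x - median).abs().median()
    if mad == 0:
        # More than half the values are identical: the Modified Z-score is undefined.
        raise ValueError("MAD is zero; the Modified Z-score cannot be computed.")
    mz = 0.6745 * (x - median) / mad
    return pd.DataFrame({
        "value": x,
        "z": z,
        "modified_z": mz,
        "z_outlier": np.abs(z) > z_cut,
        "mz_outlier": mz.abs() > mz_cut,
    }, index=series.index)


# Hypothetical turnover frequencies with two large values.
tof_series = pd.Series([10, 11, 10, 12, 11, 10, 11, 12, 10, 11, 30, 30])
result = flag_outliers(tof_series)
print(result.round(2))
```

In this example the two large values inflate the mean and standard deviation enough that neither reaches |Z| > 3 (masking), while both exceed the Modified Z-score cutoff of 3.5.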

Experimental Workflow Diagram

The diagram below illustrates the logical workflow for the outlier detection process described in the experimental protocol.

Outlier Detection Workflow

The Scientist's Toolkit: Essential Research Reagents & Solutions

Table 2: Key Tools for Statistical Outlier Detection in Research

| Item / Tool | Function / Description |
|---|---|
| Python with pandas & NumPy | Core libraries for data manipulation, calculation, and handling numerical operations [41]. |
| Statistical Libraries (scipy.stats) | Provides built-in functions for calculating Z-scores and other statistical measures [41]. |
| Visualization Libraries (matplotlib, seaborn) | Used to create histograms, KDE plots, and box plots for initial data assessment and visualization of potential outliers [41]. |
| Median Absolute Deviation (MAD) | A robust statistic used as the measure of dispersion in the Modified Z-score calculation, resistant to outliers [41]. |
| Jupyter Notebook / Lab | An interactive computing environment ideal for exploratory data analysis, protocol development, and sharing results [41]. |

Leveraging AI and Machine Learning for Advanced Anomaly Identification

Troubleshooting Guides & FAQs

Frequently Asked Questions

Q1: What are the main types of anomalies I might encounter in catalytic data? In catalytic research, you will typically encounter three primary types of anomalies. Point anomalies are individual data points that are far outside the entire dataset, such as a single, impossibly high reaction yield caused by a measurement error. Contextual anomalies are data points that are only unusual in a specific context; for instance, a normal reaction rate that occurs at a temperature far outside the optimal range for that catalyst. Collective anomalies occur when a collection of related data points deviates from the expected pattern, like a set of catalyst performance metrics that all show an unexpected correlation, which might indicate a new reaction pathway or a systemic instrument drift [44].

Q2: My ML model for predicting catalyst performance has high error. Could outliers be the cause? Yes, this is a common issue. A single outlier can significantly skew the mean of a dataset and cause machine learning models to fit to noise rather than the underlying pattern, severely impacting predictive performance [44]. It is crucial to investigate the cause of these outliers before taking action. If the outlier stems from a measurement error or data entry error, it should be corrected or removed. If it originates from a sampling problem (e.g., the catalyst was synthesized under non-standard conditions not representative of your study), it can be legitimately excluded. However, if it is a result of the natural variation in the catalytic process, it should be retained, and you should consider using statistical methods robust to outliers [17].

Q3: When should I remove an outlier from my catalytic dataset? The decision should be based on the root cause of the outlier [17]:

- Remove the outlier if it is: A measurement or data entry error (correct it first if possible), or a data point that does not belong to the target population of your study (e.g., a catalyst tested with an incorrect reactant).

- Do NOT remove the outlier if it is: A legitimate observation representing the natural variability of the catalytic system. Removing such points creates an overly optimistic and inaccurate model. For analyses where outliers cannot be removed, consider using nonparametric statistical tests, data transformations, or robust regression techniques [17].
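As one example of a robust regression technique, scikit-learn's HuberRegressor down-weights observations with large residuals instead of discarding them. The sketch compares it with ordinary least squares on synthetic data with a true slope of 2 and one retained extreme value; the data are hypothetical, and Huber loss is just one of several robust options.

```python
import numpy as np
from sklearn.linear_model import HuberRegressor, LinearRegression

rng = np.random.default_rng(0)
x = np.arange(20, dtype=float).reshape(-1, 1)      # e.g., a hypothetical process variable
y = 2.0 * x.ravel() + 1.0 + rng.normal(scale=0.5, size=20)
y[-1] += 80.0  # a legitimate but extreme observation that stays in the dataset

ols = LinearRegression().fit(x, y)
huber = HuberRegressor(max_iter=1000).fit(x, y)    # Huber loss limits each point's influence

print(f"OLS slope:   {ols.coef_[0]:.2f}")
print(f"Huber slope: {huber.coef_[0]:.2f}")
```

The least-squares slope is pulled well away from 2 by the single extreme point, while the Huber fit stays close to it even though the point is never removed.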

Q4: What is the difference between supervised and unsupervised learning for anomaly detection in my experiments? The choice depends on the nature of your labeled data [44]:

- Supervised Learning: Requires a fully labeled dataset where both normal and anomalous data points are identified. This is rare in catalysis, as labeling anomalies is time-consuming and requires expert knowledge.

- Unsupervised Learning: Works with unlabeled data, making it the most common technique. The model learns the underlying patterns of "normal" data and flags significant deviations. This is ideal for exploring new catalytic systems where all types of anomalies are not known in advance.

- Semi-supervised Learning: A hybrid approach that uses a small amount of labeled data to guide the learning process on a larger, unlabeled dataset, offering a practical balance [45] [44].

Troubleshooting Common Experimental Issues

Problem: AI Model Generates Too Many False Positives in Catalyst Screening

- Potential Cause 1: The model's definition of "normal" is too narrow. Catalytic performance can have inherent, meaningful variations.

- Solution: Re-tune the model's sensitivity threshold. Use a semi-supervised approach where an expert chemist validates a subset of the flagged anomalies to teach the model more nuanced boundaries between normal and anomalous behavior [45] [44].

- Potential Cause 2: The training data is not representative of the full range of experimental conditions.

- Solution: Augment your training dataset to include data from a wider variety of synthesis and testing conditions to improve the model's understanding of valid experimental variation [46].

Problem: Difficulty Detecting Collective Anomalies in High-Throughput Experimentation Data

- Potential Cause: Simple point anomaly detection algorithms (like basic Z-score) are unable to capture complex relationships between multiple features.

- Solution: Implement algorithms that capture structure across multiple features. Techniques like k-means clustering (flagging points or groups that lie far from their cluster centroids) or Isolation Forest (which isolates unusual points through random partitioning) can find unusual groupings in unlabeled data, and autoencoders (a type of neural network) can identify complex patterns in multidimensional data that do not align with historical trends [47] [44].

Problem: Model Performance Degrades Over Time as New Catalytic Data is Collected

- Potential Cause: Concept drift, where the properties of the catalytic materials being tested or the experimental protocols have slowly changed over time, making the original training data obsolete.

- Solution: Implement a continuous learning framework. Regularly re-train your model on recent, validated data to allow it to adapt to new normal patterns. Techniques like online learning can be particularly effective for this purpose [45].

Experimental Protocols & Methodologies

Protocol 1: Systematic Outlier Handling in Catalytic Data Analysis

Objective: To establish a standardized workflow for identifying and handling outliers in datasets related to catalyst performance (e.g., yield, turnover frequency, selectivity) without distorting the underlying scientific conclusions.

Procedure:

- Visual Inspection: Generate visualization plots (e.g., box plots, scatter plots) of the key performance metrics to perform an initial, visual screen for potential outliers [22] [44].

- Quantitative Identification: Apply the Interquartile Range (IQR) method, which is robust to non-normal data distributions common in experimental data.

- Calculate the first quartile (Q1) and the third quartile (Q3) of the data.

- Compute the IQR: \( \text{IQR} = Q3 - Q1 \).

- Define the lower bound: \( Q1 - 1.5 \times \text{IQR} \).

- Define the upper bound: \( Q3 + 1.5 \times \text{IQR} \).

- Data points falling outside the [lower bound, upper bound] range are flagged as potential outliers [22].

- Root Cause Investigation: For each flagged data point, consult experimental logs to investigate the cause. Was there a known instrument error? Was a specific catalyst batch synthesized under abnormal conditions? This step requires domain expertise [17].

- Action & Documentation:

- If an error is confirmed, correct or remove the data point.

- If it is a legitimate but extreme value, retain it.

- Crucially, document all removed data points and the justification for their removal to ensure reproducibility and transparency [17].

- Final Analysis: Conduct the primary statistical or machine learning analysis on the curated dataset. For added rigor, perform a sensitivity analysis by comparing results with and without the retained legitimate outliers.
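The action, documentation, and sensitivity-analysis steps can be sketched with pandas as a decision log plus a before/after comparison. Everything in the example is hypothetical: the run labels, yields, decisions, and justifications stand in for what would come from real experimental logs, and the helper names apply_outlier_decisions and sensitivity_summary are illustrative.

```python
import pandas as pd


def apply_outlier_decisions(data, decisions):
    """Remove points marked 'remove' and return (curated data, decision log).

    decisions maps an index label to (action, justification), where action is
    'remove' (confirmed error) or 'retain' (legitimate but extreme value).
    """
    log = pd.DataFrame(
        [(label, data[label], action, why) for label, (action, why) in decisions.items()],
        columns=["run", "value", "action", "justification"],
    )
    removed = [label for label, (action, _) in decisions.items() if action == "remove"]
    return data.drop(index=removed), log


def sensitivity_summary(curated, decisions):
    """Compare summary statistics with and without the retained outliers."""
    retained = [label for label, (action, _) in decisions.items() if action == "retain"]
    without = curated.drop(index=retained)
    return pd.DataFrame({
        "with_retained": [curated.mean(), curated.median(), curated.std()],
        "without_retained": [without.mean(), without.median(), without.std()],
    }, index=["mean", "median", "std"])


yields = pd.Series(
    [41.2, 43.0, 40.8, 44.1, 0.4, 42.5, 58.9],
    index=["run01", "run02", "run03", "run04", "run05", "run06", "run07"],
)
decisions = {
    "run05": ("remove", "hypothetical: logged thermocouple fault"),
    "run07": ("retain", "hypothetical: no technical error found"),
}
curated, log = apply_outlier_decisions(yields, decisions)
print(log)
print(sensitivity_summary(curated, decisions))
```

Saving the log alongside the curated dataset keeps every removal traceable to its justification.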

The following workflow diagram illustrates the protocol for handling outliers in catalytic data.

Protocol 2: Building an Unsupervised Anomaly Detection Model for Catalyst Performance

Objective: To train a machine learning model that can automatically identify anomalous catalyst behavior from high-throughput experimental data without pre-existing labels.

Procedure:

- Data Collection & Preprocessing: Compile a historical dataset of catalyst performance metrics (e.g., conversion, selectivity, stability) from past high-throughput experiments. Clean the data by handling missing values and normalizing the features to ensure they are on a comparable scale [45].

- Algorithm Selection: Choose an unsupervised algorithm suitable for your data.

- Isolation Forest is highly effective for high-dimensional data and efficiently isolates anomalies [44].

- K-Means Clustering groups catalysts into clusters based on performance; catalysts that do not fit well into any cluster are potential anomalies [44].

- Local Outlier Factor (LOF) is useful for detecting local anomalies by comparing the local density of a data point to its neighbors [47].

- Model Training & Tuning: Train the selected model on the preprocessed historical data so that it learns the patterns of "normal" catalyst performance. Tune hyperparameters (e.g., the contamination factor in Isolation Forest, the number of clusters k in K-Means) using techniques like cross-validation; because the data are unlabeled, this requires a scoring criterion, such as a held-out set containing known experimental failures.

- Validation & Deployment: Validate the model's performance by checking if it identifies known experimental failures or anomalies from hold-out test data. Once validated, deploy the model to flag new experimental results in real-time or in batches for expert review [45].
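A minimal scikit-learn sketch of the training and flagging steps, using Isolation Forest and Local Outlier Factor on standardized features, is shown below. The three features, their synthetic values, the planted anomaly, and the 1% contamination setting are all assumptions for illustration. In deployment you would fit on historical data and score new batches with predict or decision_function; for Local Outlier Factor, that requires constructing it with novelty=True.

```python
import numpy as np
from sklearn.ensemble import IsolationForest
from sklearn.neighbors import LocalOutlierFactor
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(42)
# Hypothetical historical runs: conversion (%), selectivity (%), stability (h).
normal_runs = rng.normal(loc=[60.0, 85.0, 100.0], scale=[5.0, 3.0, 10.0], size=(200, 3))
# One planted anomaly: typical conversion and stability, unusually low selectivity.
planted = np.array([[60.0, 40.0, 100.0]])
X = np.vstack([normal_runs, planted])

# Put features on a comparable scale before partition- or density-based methods.
X_scaled = StandardScaler().fit_transform(X)

# contamination = expected share of anomalies; it sets the decision threshold.
iso = IsolationForest(n_estimators=200, contamination=0.01, random_state=0).fit(X_scaled)
iso_flags = np.flatnonzero(iso.predict(X_scaled) == -1)

lof = LocalOutlierFactor(n_neighbors=20, contamination=0.01)
lof_flags = np.flatnonzero(lof.fit_predict(X_scaled) == -1)

print("Isolation Forest flags rows:", iso_flags)
print("LOF flags rows:", lof_flags)
```

Rows flagged by either model are candidates for expert review, not automatic exclusions.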

The Scientist's Toolkit: Key Research Reagent Solutions

The following table details computational tools and data resources essential for conducting data-driven research in catalysis and anomaly detection.

| Item Name | Function/Brief Explanation | Example Use Case in Catalysis |
|---|---|---|
| Scikit-learn [46] | An open-source Python library providing simple and efficient tools for predictive data analysis. | Implementing standard ML algorithms (Isolation Forest, k-Means) for classifying catalyst performance and detecting outliers from experimental data. |
| TensorFlow/PyTorch [46] | Open-source libraries for numerical computation and large-scale machine learning, particularly for building deep neural networks. | Developing complex autoencoder models to identify subtle, collective anomalies in spectra or temporal reaction data from catalytic reactors. |
| High-Throughput Experimentation (HTE) [46] | Automated platforms that allow for the rapid synthesis and testing of large libraries of catalytic materials. | Generating the large, consistent datasets required to train robust ML models for catalyst discovery and optimization. |
| Computational Databases (OQMD, Materials Project) [46] | Databases containing high-throughput quantum chemistry calculations for a vast range of materials. | Used as a source of features (descriptors) for catalyst performance, helping to train models that link catalyst structure to activity. |
| Python Materials Genomics (pymatgen) [46] | A robust, open-source Python library for materials analysis. | Processing and analyzing crystal structure data, calculating material properties, and generating descriptors for ML input. |

Machine Learning Algorithms for Anomaly Detection

The table below summarizes key machine learning algorithms used for anomaly detection, along with their typical applications in catalytic research.

| Algorithm | Type | Mechanism | Application in Catalysis |
|---|---|---|---|
| Isolation Forest (IF) [44] | Unsupervised | Randomly partitions data; anomalies are isolated faster than normal points. | Identifying failed catalyst synthesis batches or anomalous performance in high-throughput screening. |
| K-Nearest Neighbors (k-NN) [47] [44] | Unsupervised / Supervised | Measures the distance from each point to its k nearest neighbors; points with large distances are anomalies. | Detecting catalysts with unusual property profiles compared to their nearest neighbors in descriptor space. |
| Local Outlier Factor (LOF) [47] [44] | Unsupervised | Compares the local density of a point to the densities of its neighbors. | Finding catalysts that are anomalous within a specific, local region of the chemical space (contextual anomalies). |
| One-Class SVM [44] | Unsupervised | Learns a decision boundary that encompasses the normal data; points outside are anomalies. | Modeling stable reactor operation; any data point deviating from this "normal" operational boundary is flagged. |
| Autoencoders [44] | Unsupervised (Neural Network) | Compresses and reconstructs data; poor reconstruction indicates an anomaly. | Detecting complex, multi-faceted failures in reaction data that are difficult to define with simple rules. |
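The k-NN mechanism in the table reduces to a distance score, sketched minimally here with scikit-learn's `NearestNeighbors`. The score is the distance from each point to its k-th nearest other point. The function name, the choice of k = 2, and the two-descriptor toy data are hypothetical. In practice, descriptors should be scaled before distances are compared.

```python
import numpy as np
from sklearn.neighbors import NearestNeighbors


def knn_distance_scores(X, k=2):
    """Anomaly score = distance from each point to its k-th nearest other point."""
    X = np.asarray(X, dtype=float)
    # Request k + 1 neighbors: each point's closest neighbor is itself (distance 0).
    nn = NearestNeighbors(n_neighbors=k + 1).fit(X)
    distances, _ = nn.kneighbors(X)
    return distances[:, k]


# Hypothetical 2-D descriptor space (e.g., two scaled catalyst descriptors)
descriptors = np.array([
    [0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0], [0.5, 0.5],
    [5.0, 5.0],   # a catalyst far from all the others
])
scores = knn_distance_scores(descriptors, k=2)
most_anomalous = int(np.argmax(scores))
print(scores.round(2), most_anomalous)
```

A threshold on these scores (for example, a high percentile) then converts the ranking into anomaly flags.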

The following diagram outlines the core decision process for selecting an appropriate anomaly detection algorithm based on the data and research question.

Outliers are data points that deviate markedly from the rest of the data and can arise from measurement errors, data entry mistakes, or genuine rare events [48] [12]. In the context of catalytic data research, where reproducibility and precision are paramount, outliers can significantly skew statistical results, inflate standard errors, and lead to misleading conclusions in model development [48] [1]. This guide details three core strategies for managing outliers—Trimming, Winsorizing, and Imputation—to help you maintain the integrity of your analytical datasets.

Core Concepts: Trimming vs. Winsorizing

What is Trimming?

Trimming, or truncation, involves completely removing outlier observations from the dataset [49] [50]. For example, when trimming the top and bottom 5% of values, these data points are entirely discarded from subsequent analysis.

What is Winsorizing?

Winsorizing involves replacing the extreme values of outlier observations with the value of the nearest inlier [49] [51]. In a 5% Winsorization, the bottom 5% of values are set to the value at the 5th percentile, and the top 5% are set to the value at the 95th percentile. The data structure remains intact, but the influence of extremes is reduced [52].

Key Differences Summarized

The table below contrasts the fundamental characteristics of each method.

| Feature | Trimming | Winsorizing |
|---|---|---|
| Data Handling | Removes data points [49] | Replaces extreme values [49] |
| Sample Size | Reduces the number of observations [49] | Preserves the original sample size [49] |
| Information | Discards all information from outliers | Preserves some information (capped value) [49] |
| Best For | Suspected erroneous data; outliers irrelevant to research question [49] | Retaining data structure; outliers are plausible but overly influential [49] |

Experimental Protocols for Outlier Treatment

Protocol 1: Implementing Trimming

This protocol uses the Interquartile Range (IQR) method, which is robust to non-normal data distributions [48] [1].

- Calculate IQR Bounds: For a chosen variable, calculate the first quartile (Q1, 25th percentile) and the third quartile (Q3, 75th percentile). The IQR is Q3 - Q1. The lower bound is defined as Q1 - 1.5 * IQR, and the upper bound as Q3 + 1.5 * IQR [48] [1].

- Identify Outliers: Flag all data points that fall below the lower bound or above the upper bound.

- Remove Outliers: Permanently remove the flagged rows from the dataset for the analysis of that specific variable.

- Consideration: Be aware that if your dataset has multiple variables, trimming a single variable will remove that entire row of data, which could result in the loss of valid data for other variables ("collateral damage") [49].
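Protocol 1 can be sketched minimally in pandas, assuming a DataFrame with one row per experimental run. The column names (`catalyst_id`, `yield_pct`, `selectivity_pct`), the helper names, and the values are hypothetical. `Series.quantile` uses linear interpolation by default, so the fences can differ slightly from those produced by other quartile conventions.

```python
import pandas as pd


def iqr_bounds(series, k=1.5):
    """Return the (lower, upper) fences Q1 - k*IQR and Q3 + k*IQR."""
    q1, q3 = series.quantile(0.25), series.quantile(0.75)
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr


def trim_iqr(df, column, k=1.5):
    """Drop every row whose value in `column` lies outside the IQR fences."""
    lower, upper = iqr_bounds(df[column], k)
    keep = df[column].between(lower, upper)   # inclusive on both ends
    return df[keep].copy(), (lower, upper)


runs = pd.DataFrame({
    "catalyst_id": [f"C{i}" for i in range(1, 11)],
    "yield_pct": [61, 63, 64, 65, 66, 67, 68, 70, 12, 95],
    "selectivity_pct": [88, 90, 87, 91, 89, 90, 92, 88, 86, 93],
})
trimmed, (lo, hi) = trim_iqr(runs, "yield_pct")
print(lo, hi)
print(trimmed)
```

With these values the fences are 56.5 and 74.5, so runs C9 and C10 are dropped. Their selectivity values are dropped with them, which is the collateral damage described above.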

Protocol 2: Implementing Winsorization

This protocol outlines percentile-based Winsorization, a common symmetric approach.

- Set Percentile Limits: Decide on the Winsorization percentage for each tail (e.g., 5%). The limits are typically symmetric.

- Calculate Percentiles: Calculate the pth and (100 - p)th percentiles (e.g., the 5th and 95th percentiles) for the variable.

- Replace Values: Replace every value below the lower percentile with the value of the lower percentile itself. Replace every value above the upper percentile with the value of the upper percentile [50].

- Consideration: This process can create artificial spikes in the data distribution at the Winsorization points, which may violate model assumptions, such as homoscedasticity in linear regression [49] [51].
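Protocol 2 can be sketched minimally in NumPy, assuming a single numeric variable. The function name is hypothetical. `np.percentile` (linear interpolation by default) computes the caps, and `np.clip` performs the replacement step.

```python
import numpy as np


def winsorize_percentile(x, p=5.0):
    """Cap values below the pth and above the (100 - p)th percentile."""
    x = np.asarray(x, dtype=float)
    lower, upper = np.percentile(x, [p, 100.0 - p])
    # Values outside [lower, upper] are replaced by the nearest cap; the rest are unchanged.
    return np.clip(x, lower, upper), (lower, upper)


values = np.arange(1, 101)   # 1, 2, ..., 100
capped, (lo, hi) = winsorize_percentile(values, p=5)
print(lo, hi)
print((capped == lo).sum(), (capped == hi).sum())
```

On the values 1 to 100 with p = 5, the caps are about 5.95 and 95.05, and five values are pulled onto each cap. These tied values produce the spike described in the Consideration above.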

Protocol 3: Implementing Imputation (Mean/Median)

Imputation replaces outlier values with a central tendency measure instead of removing them.

- Identify Outliers: First, identify outliers using a chosen method like IQR or Z-score (for normally distributed data) [48] [1].

- Calculate Robust Statistic: It is recommended to use the median of the inlier data (data within the bounds) for imputation, as it is itself robust to outliers. The mean of the inliers can also be used.

- Replace Outlier Values: Replace each identified outlier value with the calculated median (or mean) value.

- Consideration: This method preserves the sample size but reduces the variance of the dataset and can distort the underlying relationship between variables [48].
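Protocol 3 can be sketched minimally as follows, assuming IQR-based detection with the conventional 1.5 multiplier. The function name and yield values are hypothetical. As recommended above, the fill value is the median of the inliers only.

```python
import numpy as np


def impute_outliers_with_inlier_median(x, k=1.5):
    """Replace IQR-flagged outliers with the median of the remaining inliers."""
    x = np.asarray(x, dtype=float)
    q1, q3 = np.percentile(x, [25, 75])
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    outlier = (x < lower) | (x > upper)
    fill_value = np.median(x[~outlier])   # inliers only, not the full dataset
    imputed = x.copy()
    imputed[outlier] = fill_value
    return imputed, outlier, fill_value


yields = [61, 63, 64, 65, 66, 67, 68, 70, 12, 95]
imputed, flagged, fill = impute_outliers_with_inlier_median(yields)
print(fill, imputed)
print(np.std(yields), np.std(imputed))   # spread shrinks after imputation
```

Here the fences are 56.5 and 74.5, so 12 and 95 are both replaced by the inlier median, 65.5. The standard deviation of the imputed series is much smaller than that of the original, which illustrates the variance reduction noted above.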

Troubleshooting Common Issues

FAQ: How do I choose between trimming and Winsorizing for my catalytic data?

The choice depends on your data quality and research goals. Use trimming when you have strong reason to believe the outliers are erroneous or are not part of the population you are studying (e.g., a catalyst reaction condition that was incorrectly recorded) [49]. Winsorizing is preferable when you suspect the outliers are real but extreme, and you wish to retain all data rows for multivariate analysis or to preserve statistical power [49] [51].

FAQ: Winsorization created a large spike in my data distribution. Is this normal?

Yes, this is a known artifact of the Winsorization process. By replacing all extreme values with a single percentile value, you create a cluster of data points at that value, which can appear as a spike in the histogram [49]. If this spike is problematic for your downstream analysis (e.g., it violates the assumptions of your statistical model), consider a gentler Winsorization (e.g., 1% instead of 5%) or switching to a trimming approach.

FAQ: After trimming my data, my results are significant, but with the original data they are not. Which result should I trust?

This discrepancy highlights the profound impact outliers can have. First, you must investigate the nature of the outliers. Were they caused by a data entry error, an experimental artifact, or do they represent a genuine but rare outcome? If they are errors, the trimmed result is more reliable. If they are genuine, reporting both results and providing a justification for your chosen treatment method is the most transparent and scientifically rigorous approach [12] [1].

FAQ: Is it acceptable to use the overall dataset mean to impute outliers?

Using the overall dataset mean for imputation is generally not recommended because the mean is sensitive to the very outliers you are trying to treat. This can lead to a biased estimate. A better practice is to calculate the mean or median using only the non-outlying, "inlier" data points for imputation [48].

Treatment Strategy Workflow

This diagram illustrates the decision-making process for selecting an appropriate outlier treatment method.

Research Reagent Solutions: Statistical Toolkit

The table below lists essential computational "reagents" for detecting and treating outliers in your research data.

| Research Reagent | Function/Brief Explanation |
|---|---|
| IQR (Interquartile Range) | A robust measure of statistical dispersion used to identify outliers outside the "fences" of Q1 - 1.5 * IQR and Q3 + 1.5 * IQR [48] [1]. |
| Z-Score | Measures the number of standard deviations a data point is from the mean. Effective for normally distributed data (e.g., an absolute Z-score greater than 3 indicates an outlier) [48] [1]. |
| Box Plots | A visualization tool that graphically depicts the IQR and outliers, providing an intuitive check for extreme values [48] [1]. |
| Isolation Forest | An unsupervised machine learning algorithm that isolates outliers based on the principle that they are fewer and different, making them easier to isolate [48] [53]. |
| Local Outlier Factor (LOF) | A proximity-based algorithm that identifies outliers by measuring the local density deviation of a data point compared to its neighbors [48] [53]. |

Frequently Asked Questions (FAQs)

FAQ 1: Why should I use the IQR method over the Z-score for identifying outliers in my catalytic yield data?

The Interquartile Range (IQR) method is more robust for catalytic yield data, which is often not normally distributed. Unlike the Z-score method, which assumes normality and can be unreliable with skewed data, the IQR method is non-parametric and makes no assumptions about the distribution shape. This makes it ideal for datasets with long tails or skew, which are common in fields like chemistry and pharmacology [34] [21]. The IQR focuses on the middle 50% of your data, effectively minimizing the impact of extreme values on your analysis.
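The sketch below illustrates a related weakness of the Z-score, known as masking. The mean and standard deviation are computed from data that include the outlier, so an extreme point inflates the very scale it is measured against. With the sample standard deviation, no point in a sample of n values can exceed |z| = (n - 1)/√n, which is about 2.85 for n = 10, so a |z| > 3 rule cannot fire at all. The function names and yield values are hypothetical.

```python
import numpy as np


def zscore_flags(x, threshold=3.0):
    """Return values whose absolute z-score (sample SD) exceeds the threshold."""
    x = np.asarray(x, dtype=float)
    z = (x - x.mean()) / x.std(ddof=1)
    return x[np.abs(z) > threshold]


def iqr_flags(x, k=1.5):
    """Return values outside the Q1 - k*IQR / Q3 + k*IQR fences."""
    x = np.asarray(x, dtype=float)
    q1, q3 = np.percentile(x, [25, 75])
    iqr = q3 - q1
    return x[(x < q1 - k * iqr) | (x > q3 + k * iqr)]


yields = [52, 55, 53, 54, 56, 51, 55, 53, 54, 98]
print(zscore_flags(yields))   # nothing flagged: the 98 inflates the SD
print(iqr_flags(yields))      # the 98 falls outside the fences (50, 58)
```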

FAQ 2: What is the fundamental difference between Winsorizing my data and simply deleting outliers?

Winsorizing does not remove data points; it caps the extreme values at specified percentiles. For example, Winsorizing the top 5% sets all values above the 95th percentile to the value of the 95th percentile itself. This preserves your sample size and dataset structure, which is crucial for maintaining statistical power. In contrast, deletion (or trimming) completely removes observations, which reduces your sample size and can introduce bias if the removed observations are not a random subset of the data [54] [51]. Winsorizing is often preferred when you want to reduce the influence of extremes without losing the data points entirely.

FAQ 3: I've applied Winsorization, but my model's performance seems worse. What could have gone wrong?

A common pitfall is applying Winsorization without considering the context of your data. If the extreme values in your catalytic yield data are genuine observations (e.g., representing a highly successful or failed reaction), capping them can obscure meaningful scientific information. Winsorization should be applied symmetrically (treating both tails of the distribution) to avoid producing biased statistics [51]. Before application, always investigate whether your outliers represent noise (e.g., measurement error) or valuable signal (e.g., a high-performing catalyst).

Troubleshooting Guides

Issue 1: Inconsistent Outlier Detection with IQR

Problem: You run the IQR method on similar datasets but get a different number of outliers each time.

Solution: