Standardizing Catalytic Data Reporting: A Framework for Reproducibility and AI-Driven Discovery

Abstract

This article addresses the critical challenge of inconsistent data reporting in catalysis research, which hinders reproducibility, validation, and the application of data science. Tailored for researchers, scientists, and drug development professionals, it provides a comprehensive guide from foundational principles to advanced applications. We explore the urgent need for data standards, detail practical methodologies for implementation, offer solutions for common troubleshooting scenarios, and establish frameworks for rigorous validation and comparative analysis. The goal is to equip the community with the tools to enhance data quality, accelerate catalyst design, and foster collaborative innovation, ultimately speeding up the translation of research from the lab to clinical and industrial applications.

The Catalytic Data Crisis: Why Standardization is Key to Reproducibility and Collaboration

The Reproducibility Challenge in Modern Catalysis Research

Reproducibility forms the cornerstone of the scientific method, yet modern catalysis research faces a significant crisis in this fundamental area. Differences in catalytic performance often emerge as an intrinsic function of the compositional and structural complexity of materials, which are in turn determined by specific synthesis methods, storage conditions, and pretreatment protocols [1]. Even minor variations in synthetic parameters can translate to substantial alterations in both physical and chemical properties of catalytic materials, including purity, particle size, and surface area [1]. The lack of standardized reporting practices across the discipline hampers scientific progress, impedes technology transfer, and undermines the reliability of research findings.

This article addresses the reproducibility challenge with practical guidance, framed by the broader thesis that standardized catalytic data reporting is essential for advancing the field. The following sections provide troubleshooting guides, frequently asked questions, and experimental protocols designed to help researchers identify, understand, and overcome common sources of irreproducibility in their catalytic experiments.

Troubleshooting Guides: Identifying and Resolving Common Issues

Catalyst Synthesis and Characterization Problems

Problem: Inconsistent catalytic performance between batches despite using the same synthetic protocol

| Possible Cause | Diagnostic Steps | Solution |
|---|---|---|
| Contaminated support material | Perform elemental analysis (ICP-MS) to detect trace contaminants (e.g., S, Na) [1] | Source higher-purity supports; implement supplier qualification procedures; pre-wash supports using appropriate pH solutions [1] |
| Uncontrolled mixing parameters | Review synthesis documentation for incomplete parameter recording [1] | Standardize and document mixing time, speed, and apparatus; for deposition precipitation, ensure consistent contact times [1] |
| Variable precursor speciation | Characterize precursor solutions using spectroscopic methods | Control solution pH and aging time; use fresh precursor solutions with documented preparation history [1] |

Problem: Irreproducible dispersion measurements using chemisorption techniques

| Possible Cause | Diagnostic Steps | Solution |
|---|---|---|
| Surface poisoning | Compare multiple chemisorption techniques (H₂, O₂, H₂-O₂ titration) [1] | Ensure ultrapure pretreatment gases; implement gas purification traps; control reduction conditions precisely [1] |
| Inconsistent pretreatment | Monitor temperature ramps and gas flow rates with calibrated instruments | Standardize pretreatment protocols with specified heating rates, hold times, and gas space velocities [1] |
| Ambient contamination during storage | Use surface science techniques (XPS) to detect adsorbed species [1] | Implement controlled storage environments (inert atmosphere); pre-clean surfaces before measurement [1] |

Catalytic Testing and Data Interpretation Problems

Problem: Divergent activity measurements for the same catalyst material

| Possible Cause | Diagnostic Steps | Solution |
|---|---|---|
| Unaccounted atmospheric contaminants | Install trace contaminant monitors in gas supply lines; test with blank runs | Use high-purity gases with appropriate purifiers; document ambient laboratory conditions during testing [1] |
| Inconsistent reactor bed configuration | Compare fluidized vs. fixed bed configurations; model flow patterns [1] | Standardize reactor packing methods; report bed dimensions and particle size distributions; prefer fluidized beds for uniform activation [1] |
| Variable activation procedures | Characterize catalyst pre- and post-activation (e.g., XAS, XRD) | Document complete activation protocol: heating rates, atmosphere composition, gas flow rates, and pressure [1] |

Frequently Asked Questions (FAQs)

Q1: Why do apparently trivial details like reagent lot numbers need to be documented in catalyst synthesis?

The purity of reagents can vary significantly between different batches and lot numbers from the same supplier, with trace impurities (even at ppb levels) substantially influencing catalytic properties. For instance, residual sulfur or sodium in commercial alumina supports can poison active metal sites or alter metal dispersion [1]. Documenting lot numbers enables tracing such variability to its source when reproducibility issues arise.

Q2: How can ambient laboratory conditions affect my catalyst performance?

Catalysts are highly sensitive to environmental conditions during storage and handling. For example, TiO₂ exposed to ambient air has been shown to become fully covered by carboxylic acids adsorbed from ppb-level atmospheric concentrations [1]. Similarly, ppb-level H₂S exposure can reduce Ni catalyst rates by an order of magnitude [1]. Storing catalysts in controlled environments, and documenting those conditions, is therefore essential for reproducibility.

Q3: What is the minimum characterization data needed to ensure my catalyst is comparable to literature reports?

At minimum, report: (1) bulk composition (elemental analysis), (2) surface area and porosity (BET method), (3) metal dispersion (chemisorption or electron microscopy), (4) crystalline phase (XRD), and (5) reduction state (XPS or XAS) where applicable [1]. Additional characterization should be included based on the specific catalyst family and reaction type.
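A minimal sketch of how a group might check a characterization record against these five minimum items before submission. The dictionary keys are hypothetical names chosen for illustration, and items that do not apply must be declared explicitly rather than silently skipped.

```python
# Hypothetical keys for the five minimum characterization items listed above.
MINIMUM_CHARACTERIZATION = frozenset({
    "bulk_composition",   # elemental analysis
    "surface_area",       # BET surface area and porosity
    "metal_dispersion",   # chemisorption or electron microscopy
    "crystalline_phase",  # XRD
    "reduction_state",    # XPS or XAS, where applicable
})


def missing_characterization(record: dict, not_applicable=frozenset()) -> set:
    """Return minimum items that are absent or None in a characterization record.

    Items that do not apply (e.g., reduction state for a non-reducible
    material) must be listed explicitly in `not_applicable`.
    """
    required = MINIMUM_CHARACTERIZATION - set(not_applicable)
    return {item for item in required if record.get(item) is None}


# Hypothetical record for a supported Pt catalyst.
record = {
    "bulk_composition": {"Pt_wt_pct": 1.0},
    "surface_area": {"BET_m2_per_g": 180.0, "pore_volume_ml_per_g": 0.50},
    "crystalline_phase": "gamma-Al2O3",
}
print(sorted(missing_characterization(record)))  # ['metal_dispersion', 'reduction_state']
```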

Q4: How does digitalization and machine learning address reproducibility challenges?

Natural language processing models can extract synthesis protocols from literature and convert unstructured procedural descriptions into standardized action sequences, enabling collective analysis and pattern recognition [2]. Implementing FAIR (Findable, Accessible, Interoperable, Reusable) data principles ensures that catalytic data can be effectively utilized by both humans and machines [3].

Q5: Why do different labs obtain different activity results with the same catalyst formulation?

Beyond synthesis variations, differences in testing protocols often contribute to divergent results. Key factors include: reactor geometry and material, catalyst bed configuration, pretreatment procedures, feed purity, space velocity, and analysis calibration. Implementing standardized testing protocols with interlaboratory validation is crucial for meaningful comparisons [1].

Experimental Protocols for Reproducible Catalyst Synthesis

Standardized Incipient Wetness Impregnation Protocol

Principle: This deposition method involves adding a precursor solution volume equal to the support pore volume to achieve uniform distribution without excess liquid.

Critical Parameters for Documentation:

- Support characterization: Specific surface area, pore volume (distribution), pre-treatment conditions [1]

- Precursor solution: Metal salt identity and purity (including lot number), solvent identity and purity, concentration, pH, aging time [1]

- Impregnation procedure: Addition rate (dropwise vs. rapid), mixing speed and method (magnetic stirring, mechanical agitation), mixing time during and after addition, ambient temperature and humidity [1]

- Aging step: Duration, container type, atmosphere [1]

- Drying: Method (static, rotary evaporator, oven), temperature, ramp rate, duration, atmosphere [1]

Step-by-Step Procedure:

- Support Pre-treatment: Calculate required support mass based on pore volume. Pre-dry support at 120°C for 2 hours in air. Cool in desiccator.

- Solution Preparation: Determine pore volume of support. Dissolve precise amount of metal precursor in deionized water (or appropriate solvent) to achieve volume equal to support pore volume. Record solution pH and appearance.

- Impregnation: Add solution dropwise to support under continuous mechanical mixing. Ensure uniform wetting without pooling.

- Aging: Seal container and maintain at room temperature for 12 hours with slow mixing.

- Drying: Transfer to oven with programmed heating (1°C/min to 80°C), hold for 4 hours in static air.

- Storage: Transfer to airtight container with desiccant; document storage conditions and duration before further treatment.
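The solution-preparation step reduces to two numbers: the solution volume (support mass times pore volume) and the precursor mass needed for the target loading. The sketch below assumes loading is defined as metal mass divided by (metal + support) mass and ignores ligands lost on drying or calcination; the function name and the Pt-on-alumina figures are hypothetical illustrations, and the pore volume should be the value measured for the actual support lot.

```python
from dataclasses import dataclass


@dataclass
class ImpregnationPlan:
    support_mass_g: float
    solution_volume_ml: float  # equals the total pore volume of the support
    precursor_mass_g: float


def plan_incipient_wetness(support_mass_g, pore_volume_ml_per_g, target_metal_wt_frac,
                           metal_molar_mass, precursor_molar_mass,
                           metal_atoms_per_formula=1, precursor_purity=1.0):
    """Size the precursor solution for incipient wetness impregnation.

    Assumes loading = metal mass / (metal mass + support mass).
    """
    if not 0 < target_metal_wt_frac < 1:
        raise ValueError("target_metal_wt_frac must be between 0 and 1")
    metal_mass_g = target_metal_wt_frac / (1 - target_metal_wt_frac) * support_mass_g
    precursor_mol = metal_mass_g / metal_molar_mass / metal_atoms_per_formula
    # Correct for assay purity so the delivered metal matches the target.
    precursor_mass_g = precursor_mol * precursor_molar_mass / precursor_purity
    # Solution volume equals pore volume, so no excess liquid remains.
    volume_ml = pore_volume_ml_per_g * support_mass_g
    return ImpregnationPlan(support_mass_g, volume_ml, precursor_mass_g)


# Hypothetical example: 1 wt% Pt on 5.00 g alumina (0.50 mL/g) from H2PtCl6·6H2O.
plan = plan_incipient_wetness(5.00, 0.50, 0.01, 195.08, 517.9)
print(f"{plan.solution_volume_ml:.2f} mL solution, {plan.precursor_mass_g:.4f} g precursor")
```

Recording the inputs to this calculation (pore volume source, molar masses, purity assay) alongside the weighed mass makes the loading traceable later.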

Standardized Catalyst Testing Protocol for Fixed-Bed Reactors

Principle: Consistent activity evaluation requires strict control of reaction parameters and thorough characterization of fresh and spent catalysts.

Critical Parameters for Documentation:

- Reactor specification: Material, dimensions, thermocouple placement and calibration [1]

- Catalyst loading: Particle size range, dilution ratio with inert material, bed packing method [1]

- Pretreatment conditions: Temperature ramp rates, final temperature(s), hold time(s), gas composition and flow rate, pressure [1]

- Reaction conditions: Temperature, pressure, feed composition, mass flow rates, space velocity (WHSV) [1]

- Analysis: Calibration of analytical equipment, internal standards, sampling frequency and method [1]
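Because space velocity is reported under differing conventions, it helps to compute and state it explicitly. This sketch assumes WHSV is defined as reactant mass flow divided by catalyst mass excluding inert diluent, and converts a gas flow given at standard conditions (0 °C, 1 atm) to a mass flow using the ideal-gas molar volume; the function names and example values are hypothetical.

```python
STP_MOLAR_VOLUME_ML = 22414.0  # mL/mol, ideal gas at 0 °C and 1 atm


def gas_mass_flow_g_per_h(flow_ml_min_stp: float, molar_mass_g_mol: float) -> float:
    """Convert a volumetric gas flow at STP to a mass flow (ideal gas assumed)."""
    mol_per_min = flow_ml_min_stp / STP_MOLAR_VOLUME_ML
    return mol_per_min * molar_mass_g_mol * 60.0


def whsv_per_h(feed_mass_flow_g_per_h: float, catalyst_mass_g: float) -> float:
    """WHSV = reactant mass flow / catalyst mass (inert diluent excluded)."""
    if catalyst_mass_g <= 0:
        raise ValueError("catalyst mass must be positive")
    return feed_mass_flow_g_per_h / catalyst_mass_g


# Hypothetical example: 20 mL/min (STP) propane over 0.200 g catalyst.
feed = gas_mass_flow_g_per_h(20.0, 44.10)
print(f"WHSV = {whsv_per_h(feed, 0.200):.1f} h^-1")
```

Reporting the convention alongside the number (reactant-only vs. total feed, catalyst-only vs. diluted bed mass) matters as much as the value itself.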

Step-by-Step Procedure:

- Reactor Preparation: Load reactor with quartz wool, measure empty reactor pressure drop. Dilute catalyst with inert material (SiO₂) of similar particle size (250-355 μm).

- Catalyst Loading: Add catalyst bed, record exact mass and bed dimensions. Install thermocouple in catalyst bed.

- Leak Testing: Pressurize system with inert gas to operating pressure, monitor pressure stability.

- Pretreatment: Program temperature controller with specified ramp rate (typically 5°C/min) to target temperature under specified gas flow. Hold for specified duration.

- Reaction Initiation: Adjust to reaction temperature under inert flow. Switch to reaction feed at precisely recorded time.

- Data Collection: Allow 3 reactor residence times to reach steady state before initial sampling. Collect samples at regular intervals with documented time points.

- Shutdown: Switch to inert gas flow, cool reactor to room temperature. Recover catalyst for characterization.
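The data-collection step can be made concrete by computing the residence time and checking for a stable signal before recording steady-state values. This sketch assumes an ideal-gas correction of a standard-condition flow to reactor temperature and pressure, a known void volume for the bed (or the whole system), and a hypothetical stability criterion (the last three samples within 2 % of their mean); neither the tolerance nor the function names come from the protocol above. The stability check is included because catalyst transients can outlast the gas-phase residence time.

```python
def residence_time_s(void_volume_ml: float, flow_ml_min_stp: float,
                     temp_k: float, pressure_kpa: float) -> float:
    """Gas residence time = void volume / volumetric flow at reactor conditions."""
    # Ideal-gas correction from standard conditions (273.15 K, 101.325 kPa).
    actual_flow_ml_s = (flow_ml_min_stp / 60.0) * (temp_k / 273.15) * (101.325 / pressure_kpa)
    return void_volume_ml / actual_flow_ml_s


def earliest_sampling_s(void_volume_ml, flow_ml_min_stp, temp_k, pressure_kpa,
                        n_residence_times=3):
    """Time after switching to feed before the first sample is taken."""
    return n_residence_times * residence_time_s(void_volume_ml, flow_ml_min_stp,
                                                temp_k, pressure_kpa)


def is_steady(conversions, window=3, rel_tol=0.02):
    """True if the last `window` values span at most rel_tol of their mean."""
    if len(conversions) < window:
        return False
    recent = conversions[-window:]
    mean = sum(recent) / window
    if mean == 0:
        return max(recent) == min(recent)
    return (max(recent) - min(recent)) / abs(mean) <= rel_tol


# Hypothetical bed: 0.5 mL void volume, 50 mL/min (STP), 523.15 K, 101.325 kPa.
print(round(earliest_sampling_s(0.5, 50.0, 523.15, 101.325), 2), "s")
print(is_steady([0.52, 0.44, 0.401, 0.400, 0.399]))
```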

Essential Research Reagent Solutions

| Reagent/Material | Function | Critical Quality Parameters | Recommended Documentation |
|---|---|---|---|
| Support Materials | Provide high surface area for active phase dispersion; can influence metal-support interactions | Surface area, pore volume/distribution, impurity profile (esp. S, Na, Cl), pre-treatment history | Supplier, lot number, certificate of analysis, pre-treatment protocol [1] |
| Metal Precursors | Source of active catalytic component; determine initial dispersion and distribution | Purity grade, impurity profile (anion effects), hydration state, solubility characteristics | Chemical formula, supplier, lot number, purity assay, storage history [1] |
| Gases | Reaction feed, pretreatment, purge, and analysis functions | Purity grade, moisture/oxygen content, contaminant profile (e.g., metal carbonyls) | Supplier, grade, purification methods (traps/filters), cylinder pressure/history [1] |
| Solvents | Medium for catalyst preparation; can influence precursor speciation | Purity, water content, organic impurities, dissolved oxygen | Supplier, grade, lot number, purification method (if applicable), storage conditions [1] |
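A sketch of how the "Recommended Documentation" column could be turned into a machine-readable record with a completeness check. The category names, field names, supplier, and lot number are hypothetical; solvent purification is treated as optional because the table marks it "if applicable".

```python
import json

# Required documentation fields per reagent category (hypothetical field names
# mirroring the "Recommended Documentation" column of the table above).
REQUIRED_FIELDS = {
    "support": {"supplier", "lot_number", "certificate_of_analysis", "pretreatment"},
    "metal_precursor": {"formula", "supplier", "lot_number", "purity_assay", "storage_history"},
    "gas": {"supplier", "grade", "purification", "cylinder_history"},
    "solvent": {"supplier", "grade", "lot_number", "storage_conditions"},
}


def missing_fields(entry: dict) -> set:
    """Return required fields that are absent or empty for the entry's category."""
    return {name for name in REQUIRED_FIELDS[entry["category"]] if not entry.get(name)}


entry = {
    "category": "metal_precursor",
    "name": "chloroplatinic acid hexahydrate",
    "formula": "H2PtCl6·6H2O",
    "supplier": "ExampleChem",  # hypothetical supplier
    "lot_number": "LOT-0001",   # hypothetical lot number
    "purity_assay": "99.9% (metals basis)",
}
print(missing_fields(entry))  # {'storage_history'}
print(json.dumps(entry, ensure_ascii=False, indent=2))
```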

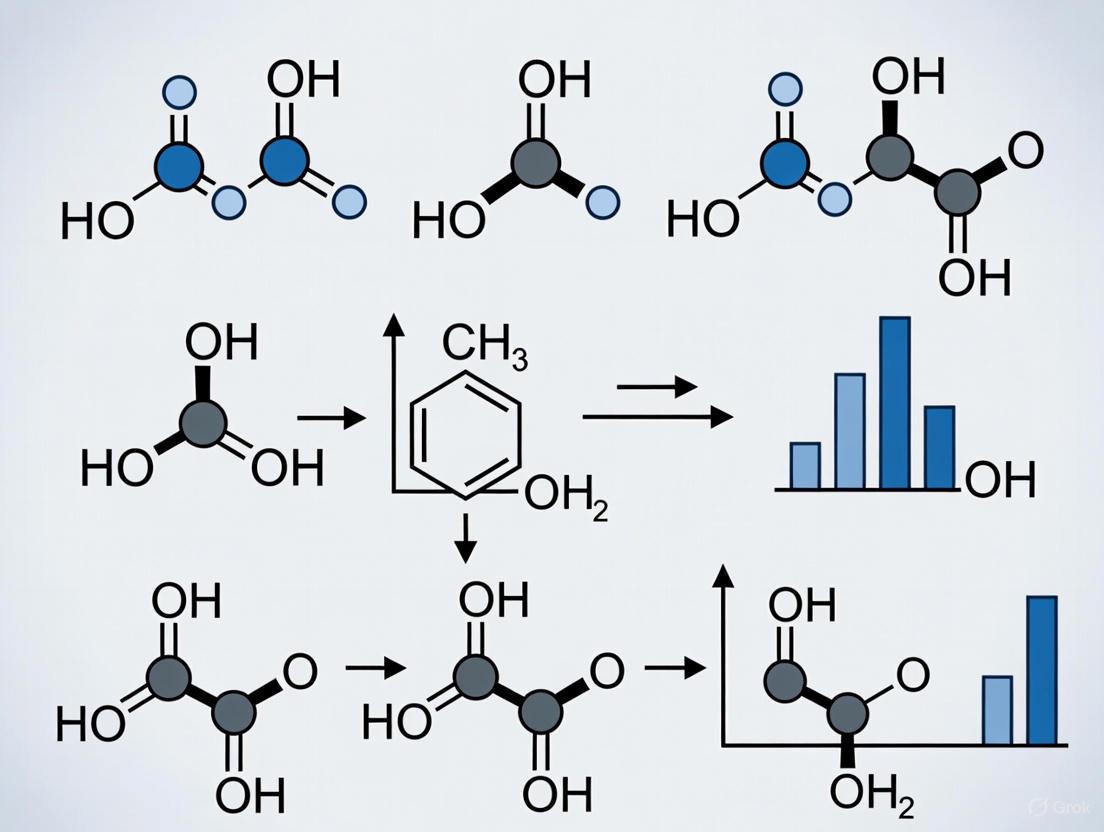

Figure: Visualization of standardization frameworks, covering the pillars of reproducible catalysis research and the catalyst synthesis workflow for reproducibility.

Addressing the reproducibility challenge in catalysis research requires a systematic approach to experimental documentation and protocol standardization. By implementing the troubleshooting guides, experimental protocols, and documentation standards outlined in this article, researchers can significantly enhance the reliability and reproducibility of their catalytic studies. The adoption of digital frameworks following FAIR data principles, combined with meticulous attention to synthesis parameters and testing conditions, will accelerate progress in catalyst design and development [3]. Ultimately, standardized practices enable more robust structure-activity relationships, facilitate knowledge transfer between laboratories, and strengthen the scientific foundation of catalysis research.

FAQ: Catalytic Data Standardization

What is catalytic data standardization and why is it critical for research?

Catalytic data standardization involves creating and implementing consistent, community-wide rules for recording, reporting, and managing all data related to catalyst design, synthesis, characterization, and performance [4]. This is critical because the field of catalysis is plagued by reproducibility challenges and inefficiencies. A primary cause is the complex nature of catalysts, whose active form is often only achieved under reaction conditions, making it difficult to establish clear structure-activity relationships [4]. Furthermore, minute variations in synthesis protocols—such as the lot number of chemicals, order of reagent addition, or pretreatment conditions—can significantly influence catalyst activity, yet these details are frequently omitted from literature, leading to irreproducibility from batch to batch [4]. Standardization, particularly through FAIR (Findable, Accessible, Interoperable, and Reusable) data principles, is a fundamental enabler for accelerating discovery, fostering collaboration, and building trustworthy, machine-readable databases for advanced analysis and machine learning [4].

What is the scope of data that needs to be standardized?

The scope of catalytic data standardization is comprehensive, covering the entire catalyst lifecycle. Data can be broadly classified into two interconnected categories [4]:

- Catalyst-Centric Data: This includes all information related to the creation and properties of the catalyst material itself.

  - Synthesis Data: Details on precursors, equipment, chemical procedures, aging times, and pretreatment conditions.

  - Characterization Data: Information on surface area, metal dispersion, oxidation states, and atomic structure from various analytical techniques.

- Reaction-Centric Data: This encompasses all information related to testing the catalyst's performance.

  - Performance Data: Metrics such as conversion, selectivity, and yield.

  - Operando/Operational Data: Critical data from characterization techniques performed under actual reaction conditions to understand the true nature of the active sites [4].

The German Catalysis Society (GeCATS) formalizes this into a framework of five pillars for a meaningful description of catalytic processes: (1) synthesis data, (2) characterization data, (3) performance data, (4) operando data, and (5) data exchange with theory [4].
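One way to keep the five pillars connected is to key every reaction run to the catalyst batch it used, so that performance data can always be traced back to synthesis and characterization data. The class and field names below are hypothetical and are not a GeCATS-defined schema.

```python
from dataclasses import dataclass, field


@dataclass
class CatalystBatch:
    batch_id: str
    synthesis: dict          # pillar 1: synthesis data
    characterization: dict   # pillar 2: characterization data


@dataclass
class ReactionRun:
    run_id: str
    batch_id: str            # link to exactly one catalyst batch
    performance: dict        # pillar 3: conversion, selectivity, yield
    operando: dict = field(default_factory=dict)     # pillar 4: operando data
    theory_refs: list = field(default_factory=list)  # pillar 5: linked calculations


def unlinked_runs(batches, runs):
    """IDs of runs whose batch is unknown or has no characterization data."""
    characterized = {b.batch_id for b in batches if b.characterization}
    return [r.run_id for r in runs if r.batch_id not in characterized]


batches = [
    CatalystBatch("B1", {"method": "incipient wetness"}, {"BET_m2_per_g": 180.0}),
    CatalystBatch("B2", {"method": "incipient wetness"}, {}),
]
runs = [
    ReactionRun("R1", "B1", {"conversion": 0.30}),
    ReactionRun("R2", "B2", {"conversion": 0.28}),
    ReactionRun("R3", "B9", {"conversion": 0.31}),
]
print(unlinked_runs(batches, runs))  # ['R2', 'R3']
```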

What are the core principles of a catalytic data governance framework?

A robust data governance framework for catalysis should be built upon the following core principles, adapted from modern data management best practices [5]:

- Accountability and Stewardship: Every dataset must have a clear owner and data stewards who are responsible for ongoing data quality, metadata management, and compliance with policies [5].

- Transparency: All data processes, standards, and policies should be open, documented, and easily accessible to all stakeholders [5].

- Standardization, Consistency & Metadata Management: Enforcing consistent formats, definitions (e.g., a common business glossary), and active metadata management is essential for data discovery, lineage tracking, and AI readiness [5].

- Quality & Credibility: Data must be accurate, complete, timely, and reliable. This requires processes like data profiling, validation rules, and continuous monitoring [5].

- Integrity, Security & Accessibility: Data must be protected from unauthorized access or breaches through encryption and access controls, while remaining accessible to those with a legitimate business or research need [5].

- Business Alignment & Value Creation: The ultimate measure of governance success is its impact on strategic objectives, such as accelerating discovery, reducing costs, or ensuring regulatory compliance [5].

- Continuous Improvement & Change Management: Governance frameworks must evolve alongside shifting business priorities, technologies, and regulations. Policies and standards should be regularly reviewed and updated [5].

Our research group is new to this. How can we start standardizing our data practices?

Begin by focusing on the highest-impact areas:

- Adopt FAIR Guiding Principles: Make Findable, Accessible, Interoperable, and Reusable data a core goal for all new projects [4].

- Implement a Metadata Template: Develop a simple, standardized template for capturing essential metadata for every catalyst synthesis and reaction experiment. This should include the detailed synthesis parameters often overlooked [4].

- Record Everything, Including Negative Results: Standardized protocols should be adopted to record all data, including negative results, to provide a complete picture for future analysis [4].

- Use Electronic Lab Notebooks (ELNs): ELNs can be configured to enforce data entry standards and ensure consistency across different group members.

- Leverage Community Resources: Engage with initiatives like the Catalysis Hub to understand emerging community standards and best practices [4].
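A minimal sketch of such a metadata template, with sections following the categories of the synthesis metadata table in the troubleshooting guide that follows, plus an outcome section so negative results are recorded too. The section and field names are hypothetical; each experiment starts from a fresh copy so that blank fields stay visible instead of being silently omitted.

```python
import copy

# Hypothetical template; None marks a field that has not been recorded yet.
SYNTHESIS_TEMPLATE = {
    "chemical_precursors": {"name": None, "supplier": None, "lot_number": None,
                            "purity": None, "expiration_date": None},
    "equipment": {"vessel_type": None, "material": None, "volume_ml": None},
    "procedure": {"addition_order": None, "stirring_rpm": None,
                  "aging_time_h": None, "ramp_rate_c_per_min": None},
    "pretreatment": {"gas": None, "flow_ml_min": None, "pressure_bar": None,
                     "ramp_rate_c_per_min": None, "final_temp_c": None,
                     "hold_time_h": None},
    "outcome": {"result": None, "notes": None},  # negative results included
}


def new_record() -> dict:
    """Fresh, independent copy of the template for one experiment."""
    return copy.deepcopy(SYNTHESIS_TEMPLATE)


def incomplete_fields(record: dict) -> list:
    """List 'section.field' names that are still None."""
    return [f"{section}.{name}"
            for section, fields in record.items()
            for name, value in fields.items()
            if value is None]


record = new_record()
record["equipment"].update(vessel_type="round-bottom flask", material="Pyrex", volume_ml=250)
record["outcome"]["result"] = "negative: no activity at 250 °C"
print(len(incomplete_fields(record)), "fields still missing")
```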

Troubleshooting Guides

Issue: Inconsistent synthesis procedures leading to irreproducible catalyst performance.

Problem: Different researchers in the same lab, or the same researcher across different batches, produce catalysts with varying performance, and the root cause cannot be identified.

Solution:

- Step 1: Create a Standard Operating Procedure (SOP). Develop a detailed, step-by-step protocol for the synthesis that leaves no room for ambiguity.

- Step 2: Mandate Comprehensive Metadata Recording. Implement a checklist for all synthesis parameters. The table below outlines critical, often-overlooked data that must be captured.

Table: Essential Synthesis Metadata for Reproducibility

| Category | Specific Parameter | Why It's Important |
|---|---|---|
| Chemical Precursors | Chemical name, supplier, lot number, purity, expiration date | Different lots can have varying impurity profiles that affect synthesis. |
| Synthesis Equipment | Type of reactor/glassware, material of construction (e.g., Pyrex), reactor volume | Material reactivity and reactor geometry can influence reaction pathways. |
| Procedure | Order of reagent addition, stirring rate/speed, aging time, temperature ramp rate | Minor changes in kinetics during synthesis can drastically alter the final material's properties. |
| Pretreatment | Atmosphere (gas, flow rate), pressure, temperature ramp rate, final temperature, hold time | The activation process is critical for forming the active catalytic phase. |

- Step 3: Utilize Digital Tools for Protocol Standardization. For writing methods sections, follow machine-readable guidelines to improve clarity and analysis. A key guideline is to structure protocols as a clear sequence of action-performed-on-object sentences [6]. For example, instead of "The mixture was calcined in air," write "Calcined the mixture in air at 500 °C for 4 hours with a ramp rate of 5 °C/min." This structured approach minimizes ambiguity for both humans and automated text-mining tools [6].
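To illustrate why action-first sentences are easier to mine, here is a toy rule-based parser for sentences shaped like the calcination example above. It is a single regular expression, not the language-model approach described in [6]; the pattern and field names are assumptions, and it deliberately returns None for the passive form.

```python
import re
from typing import Optional

# Toy pattern: "<Action> the <object> [in <atmosphere>] at <T> °C for <t> <unit>
# [with a ramp rate of <r> °C/min]."
STEP_PATTERN = re.compile(
    r"^(?P<action>\w+) the (?P<object>[\w\s]+?)"
    r"(?: in (?P<atmosphere>[\w\s/]+?))?"
    r" at (?P<temp_c>\d+(?:\.\d+)?) ?°C"
    r" for (?P<duration>\d+(?:\.\d+)?) (?P<duration_unit>hours?|h|min)"
    r"(?: with a ramp rate of (?P<ramp_c_per_min>\d+(?:\.\d+)?) ?°C/min)?\.?$"
)


def parse_step(sentence: str) -> Optional[dict]:
    """Return the structured fields of one action sentence, or None if it does not fit."""
    match = STEP_PATTERN.match(sentence.strip())
    if match is None:
        return None
    step = match.groupdict()
    for key in ("temp_c", "duration", "ramp_c_per_min"):
        if step[key] is not None:
            step[key] = float(step[key])
    return step


print(parse_step("Calcined the mixture in air at 500 °C for 4 hours with a ramp rate of 5 °C/min."))
print(parse_step("The mixture was calcined in air."))  # None
```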

Issue: Inability to correlate catalyst structure with observed activity.

Problem: You have characterization data and performance data, but cannot establish a clear link between the catalyst's properties and its function.

Solution:

- Step 1: Integrate Operando Characterization. Recognize that the active form of a catalyst is often only present under reaction conditions. Whenever possible, incorporate operando characterization techniques (e.g., XRD, spectroscopy) to capture the state of the catalyst during the reaction [4].

- Step 2: Ensure Data Linkage. In your data management system, explicitly link every performance data set (e.g., a conversion/selectivity measurement) to the specific characterization data for the catalyst batch used in that exact experiment.

- Step 3: Adopt a Structured Data Framework. Use a framework, like the GeCATS five pillars, to ensure all relevant data types are collected and connected. The diagram below visualizes this integrated data management workflow.

Issue: Data is disorganized and not machine-readable, hindering analysis.

Problem: Data is stored in inconsistent formats (e.g., paper notebooks, unstructured text files), making it difficult to search, share, or use for machine learning models.

Solution:

- Step 1: Implement a Centralized Data Catalog. Use a digital platform or electronic lab notebook (ELN) that forces standardized data entry and stores all data in a structured format.

- Step 2: Enforce a Common Vocabulary. Develop and use a standardized business glossary for key terms (e.g., "conversion," "TON," "TOF") to ensure consistency across all researchers.

- Step 3: Prioritize Metadata for AI Readiness. Remember that AI and machine learning models depend on rich, accurate metadata to understand data context, lineage, and trustworthiness. A solid metadata foundation is non-negotiable for future AI-driven discovery [5].
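A shared glossary is easiest to enforce when the definitions are also written down as code. The functions below use one common set of mole-based definitions for conversion, selectivity, and yield, and define TOF as a time-averaged turnover number per second; these conventions and names are assumptions for illustration (rate-based TOF measured at low conversion is another common convention), and a group should also record which site basis it uses, such as total metal atoms or surface sites from chemisorption.

```python
def conversion(n_in: float, n_out: float) -> float:
    """Fraction of reactant converted: (n_in - n_out) / n_in."""
    return (n_in - n_out) / n_in


def selectivity(n_product: float, n_converted: float, stoich: float = 1.0) -> float:
    """Fraction of converted reactant that ended up in this product.

    stoich = moles of reactant consumed per mole of product formed.
    """
    return n_product * stoich / n_converted


def product_yield(x: float, s: float) -> float:
    """Yield = conversion times selectivity."""
    return x * s


def turnover_number(n_converted: float, n_sites: float) -> float:
    """Moles of reactant converted per mole of active sites."""
    return n_converted / n_sites


def turnover_frequency_s(n_converted: float, n_sites: float, time_s: float) -> float:
    """Time-averaged turnover number per second of reaction time."""
    return turnover_number(n_converted, n_sites) / time_s


x = conversion(1.00, 0.70)           # 1 mol fed, 0.70 mol unconverted
s = selectivity(0.24, 1.00 - 0.70)   # 0.24 mol of the product formed
print(round(x, 3), round(s, 3), round(product_yield(x, s), 3))
print(round(turnover_frequency_s(0.30, 1.0e-4, 3600.0), 3), "s^-1")
```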

Research Reagent Solutions & Essential Materials

Table: Key Digital and Data Management "Reagents" for Catalytic Research

| Item / Solution | Function / Explanation |
|---|---|
| Electronic Lab Notebook (ELN) | A digital platform for recording experiments, data, and protocols in a structured, searchable format, replacing paper notebooks. |
| FAIR Data Principles | A set of guiding principles (Findable, Accessible, Interoperable, Reusable) to make data machine-actionable and maximally useful. |
| Structured Metadata Schema | A predefined template or set of fields (e.g., based on the five pillars) that ensures all relevant experimental metadata is captured consistently. |
| Data Governance Council | A cross-functional team (including scientists, IT, and data managers) that defines and enforces data standards, policies, and principles [5]. |
| Active Metadata Management | The practice of using metadata dynamically to automate governance tasks, such as data classification, lineage tracking, and policy enforcement [5]. |
| Machine-Readable Protocol Guidelines | Writing standards for experimental procedures that emphasize clear, action-oriented language to enable automated extraction and analysis by language models [6]. |

The Critical Role of Data Curation and Dissemination for Scientific Progress

Troubleshooting Guides

Troubleshooting Data Curation & Dissemination

| Problem Category | Specific Issue | Potential Causes | Solution Steps | Prevention Best Practices |
|---|---|---|---|---|
| Data Quality | Incomplete or missing data [7] [8] | Gaps in data collection, partial records, storage failures [7] | Perform data audits; use statistical imputation for missing data; document all gaps [7] [8]. | Implement standardized data collection protocols; establish ongoing quality checks [7]. |
| Data Quality | Inconsistent or non-standardized data [7] [8] | Data merged from multiple sources with different formats or units [7] | Convert data to a standard format; use automated validation checks and data profiling tools [7] [8]. | Create strict data governance policies for consistent formatting and measurement standards [7]. |
| Data Integrity | Biased or unrepresentative data samples [7] [8] | Reliance on convenient but limited datasets; failure to account for variations [7] | Review and update sampling methods; prioritize a wide data spread across all relevant segments and time periods [7]. | Ensure sample size is large enough and demographics match the target population [8]. |
| Data Integrity | Redundant or duplicate data [7] | Data collected from multiple sources without deduplication; improper archiving [7] | Conduct regular data audits; implement automated deduplication processes and data validation tools [7]. | Establish data entry protocols that flag potential duplicates at the point of entry [7]. |
| Analysis & Context | Results lack context or are misunderstood [7] [8] | Isolated data analysis without considering industry trends, history, or competitive dynamics [7] | Conduct market research; compare results with previous studies; consider seasonal variations and broader factors [7]. | Always place data in a broader business and historical context before drawing conclusions [7]. |
| Analysis & Context | Confusing correlation with causation [8] | Assuming one variable directly causes another because they are correlated [8] | Investigate other factors that could cause the correlation; conduct more research to establish causal links [8]. | Do not assume a connection without evidence; consider other potential causal factors [8]. |
| Dissemination | Low uptake of shared data or practices | Dissemination methods do not align with audience needs; lack of stakeholder engagement [9] | Understand audience needs; use clear data visualizations; leverage professional networks for sharing [9] [7]. | Engage stakeholders early; tailor dissemination strategies to the target audience [9]. |
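Two of the fixes in this table, converting to a standard format and automated deduplication, can be sketched in a few lines. The record layout is hypothetical, temperatures are normalized to kelvin, and only records that are identical after conversion are dropped; near-duplicates would still need manual review.

```python
# Converters from a reported temperature unit to kelvin.
TO_KELVIN = {
    "K": lambda t: t,
    "C": lambda t: t + 273.15,
    "F": lambda t: (t - 32.0) * 5.0 / 9.0 + 273.15,
}


def standardize(records):
    """Normalize temperatures to K, then drop exact duplicates (first kept).

    Returns (clean_records, number_dropped).
    """
    seen, clean = set(), []
    for rec in records:
        temp_k = round(TO_KELVIN[rec["temp_unit"]](rec["temp"]), 2)
        key = (rec["catalyst_id"], temp_k, rec["conversion"])
        if key in seen:
            continue
        seen.add(key)
        clean.append({"catalyst_id": rec["catalyst_id"], "temp_k": temp_k,
                      "conversion": rec["conversion"]})
    return clean, len(records) - len(clean)


# Hypothetical rows merged from two sources that report temperature differently.
rows = [
    {"catalyst_id": "C1", "temp": 250.0, "temp_unit": "C", "conversion": 0.42},
    {"catalyst_id": "C1", "temp": 523.15, "temp_unit": "K", "conversion": 0.42},
    {"catalyst_id": "C2", "temp": 482.0, "temp_unit": "F", "conversion": 0.10},
]
clean, dropped = standardize(rows)
print(clean, dropped)
```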

Troubleshooting Experimental Protocols

When unexpected results occur in your lab experiments, follow this systematic approach [10]:

| Troubleshooting Step | Key Actions | Considerations for Catalytic Data |
|---|---|---|
| Check Assumptions | Question your hypothesis and experimental design. Are unexpected results truly an error, or a novel finding? [10] | Re-examine assumed reaction mechanisms or catalytic cycles. |
| Review Methods | Check equipment calibration, reagent freshness/storage, sample integrity, and control validity [10]. | Verify catalyst activation procedures, solvent purity, and reaction atmosphere (e.g., inert conditions). |
| Compare Results | Compare with previous studies, literature, and colleagues' work [10]. | Compare with known catalytic performance in similar systems (turnover frequency, yield). |
| Test Alternatives | Explore other explanations; design new experiments to test different variables [10]. | Systematically vary one parameter at a time (e.g., catalyst loading, temperature, pressure). |
| Document Process | Keep a detailed, organized record of all steps, methods, results, and changes [11]. | Record all catalytic reaction parameters and any deviations from the planned protocol. |
| Seek Help | Consult supervisors, colleagues, or experts for new perspectives and solutions [10]. | Engage with materials informatics or catalysis specialists for data interpretation. |
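The advice to vary one parameter at a time can be turned into an explicit run list, so that every deviation from the baseline is planned and recorded. This is a minimal sketch with hypothetical parameter names; one-factor-at-a-time plans cannot reveal interactions between variables, for which a factorial or other designed experiment is the usual alternative.

```python
def one_factor_at_a_time(baseline: dict, variations: dict) -> list:
    """Baseline run plus one run per non-baseline level of each parameter."""
    runs = [dict(baseline)]
    for name, levels in variations.items():
        for level in levels:
            if level == baseline[name]:
                continue  # the baseline level is already covered
            run = dict(baseline)
            run[name] = level
            runs.append(run)
    return runs


baseline = {"catalyst_mg": 100, "temp_c": 250, "pressure_bar": 1.0}
plan = one_factor_at_a_time(baseline, {"catalyst_mg": [50, 100, 200], "temp_c": [225, 275]})
for i, run in enumerate(plan):
    print(i, run)
```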

Frequently Asked Questions (FAQs)

Data Curation FAQs

Q: What is data curation and why is it critical for materials science? A: Data curation is the process of ensuring datasets are complete, well-described, and in a format that facilitates long-term access, discovery, and reuse [12]. It is critical because it enhances the reliability, reproducibility, and integrity of research, which is fundamental for developing reliable AI and machine learning models in fields like materials informatics [13].

Q: What is the basic workflow for curating research data? A: An effective workflow can be summarized by the CURATE(D) steps [12]:

- Check files and documentation.

- Understand the data by running files/code and conducting quality checks.

- Request missing information or changes.

- Augment metadata for findability (e.g., with DOIs).

- Transform file formats for long-term reuse.

- Evaluate for FAIRness (Findable, Accessible, Interoperable, Reusable).

- Document all curation activities.

Q: What are the most common mistakes in data analysis that curation can help prevent? A: Common mistakes include [7] [8]:

- Using incomplete or biased data samples.

- Working with inconsistent or non-standardized data.

- Presenting results without adequate context.

- Confusing correlation with causation.

- Using the wrong metrics or benchmarks for comparison.

- Poor data visualization that obscures insights.

Data Dissemination FAQs

Q: What are the key factors that encourage research organizations to disseminate data and evidence-based practices? A: Studies of substance use disorder treatment providers, who also operate in a complex scientific field, show that organizational characteristics are strong predictors. These include the organization's size, previous involvement in research protocols, linkages with other providers, and non-profit status. A leader's membership in professional organizations is also a significant factor, as shared network connections heavily influence dissemination willingness [9].

Q: What types of dissemination activities can a research team undertake? A: Activities can range from providing information and training to other providers, interacting with government agencies on issues related to evidence-based practices, participating in state/local task forces, and contributing to research publications [9].

Q: Why is considering data privacy and security important during dissemination? A: Especially when sharing human subjects data or data linked to specific programs, it is vital to define whether services are anonymous or confidential, understand relevant regulations like HIPAA, and implement good practices for data privacy and security before reporting data [14].

Experimental Protocols for Standardized Catalytic Data Reporting

Protocol 1: Data Curation Pipeline for Materials Chemistry

This protocol is adapted from proposed best practices for creating rigorous materials chemistry databases [13].

Objective: To establish a standardized workflow for curating catalytic data, ensuring its quality, completeness, and readiness for dissemination and reuse.

Materials:

- Raw experimental data (e.g., catalyst synthesis details, performance metrics).

- Computational data files (e.g., input parameters, output structures, energies).

- Metadata schema (e.g., based on community standards).

- Data validation tools (e.g., for file format checks, unit conversion).

- A designated data repository (e.g., institutional or domain-specific).

Methodology:

- Data Collection & Appraisal: Gather all raw data from experimental notebooks, instrument outputs, and computational runs. Perform an initial appraisal to select complete datasets for inclusion, documenting the reasons for excluding any data.

- Metadata Assignment: Create a comprehensive metadata record for the dataset. This must include persistent identifiers (e.g., for catalysts, substrates), detailed experimental conditions (temperature, pressure, solvent, etc.), and key performance indicators (yield, turnover number, selectivity, etc.).

- Data Validation & Cleaning:

- Check for and resolve inconsistencies in units and nomenclature.

- Validate file integrity and format.

- Identify and document outliers; do not dismiss them without investigation, as they may signal important phenomena [7].

- Format Standardization: Transform data into preservation-friendly formats (e.g., .csv for tabular data, .cif for crystallographic data) as recommended for long-term reuse [12].

- FAIRness Evaluation: Ensure the dataset adheres to the FAIR Guiding Principles [12]. It should be findable (rich metadata and a persistent identifier), accessible (retrievable through a standard, open protocol), interoperable (using standard vocabularies and formats), and reusable (with a clearly defined usage license and detailed provenance).

- Documentation & Deposit: Write a "readme" file explaining the dataset, all curation steps performed, and any assumptions made. Finally, deposit the curated dataset and its metadata into a trusted repository.
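The validation, cleaning, and format-standardization steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not a reference implementation: the field names (`catalyst_id`, `temperature_unit`, `yield_pct`, and so on), the unit-conversion tables, and the use of Tukey fences (k = 1.5) for outlier flagging are all hypothetical choices made for the example. Flagged outliers are kept and marked rather than deleted, in line with the guidance above.

```python
import csv
import io
import statistics

# Hypothetical schema: field names and units are illustrative, not a community standard.
REQUIRED_FIELDS = ("catalyst_id", "temperature", "temperature_unit",
                   "pressure", "pressure_unit", "yield_pct")

# Conversions to canonical units (kelvin and bar).
TO_KELVIN = {"K": lambda t: t, "degC": lambda t: t + 273.15}
TO_BAR = {"bar": 1.0, "kPa": 0.01, "MPa": 10.0, "atm": 1.01325}


def normalize_record(rec: dict) -> dict:
    """Check required fields, then add temperature_K and pressure_bar."""
    missing = [f for f in REQUIRED_FIELDS if rec.get(f) in (None, "")]
    if missing:
        raise ValueError(f"record {rec.get('catalyst_id')!r} is missing {missing}")
    out = dict(rec)
    out["temperature_K"] = TO_KELVIN[rec["temperature_unit"]](float(rec["temperature"]))
    out["pressure_bar"] = float(rec["pressure"]) * TO_BAR[rec["pressure_unit"]]
    return out


def flag_outliers(values: list, k: float = 1.5) -> list:
    """Return True for values outside the Tukey fences [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [not (low <= v <= high) for v in values]


def curate(records: list) -> list:
    """Normalize every record and flag (but keep) yield outliers for investigation."""
    rows = [normalize_record(r) for r in records]
    flags = flag_outliers([float(r["yield_pct"]) for r in rows])
    for row, flagged in zip(rows, flags):
        row["outlier_flag"] = flagged
    return rows


def to_csv(rows: list) -> str:
    """Serialize curated rows to CSV, a preservation-friendly tabular format."""
    fields = ["catalyst_id", "temperature_K", "pressure_bar", "yield_pct", "outlier_flag"]
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=fields, extrasaction="ignore")
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()
```

A fuller pipeline would also record why any data were excluded and carry those reasons into the readme file.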

Protocol 2: Implementing a Point-in-Time Survey for Program Assessment

This protocol, adapted from harm reduction research, provides a model for collecting standardized qualitative and quantitative data from a user base, which can be used to inform and improve research programs [14].

Objective: To gather a standardized snapshot of program characteristics, needs, and service utilization patterns from a portion of users.

Materials:

- Standardized survey questionnaire.

- Data collection platform (e.g., electronic tablet, secure online form).

- Secure database for data storage.

- Statistical analysis software.

Methodology:

- Planning Phase: Define the survey's objectives and key questions. Determine the sample size and recruitment strategy.

- Design Phase: Develop the survey questionnaire. Ensure questions are clear, unbiased, and designed to elicit the specific information needed. Pilot test the survey with a small group to identify any issues.

- Implementation Phase: Administer the survey to the predetermined sample of users during a specific "point-in-time" window.

- Analysis Phase: Clean the collected data. Perform descriptive statistical analysis to summarize the findings. Identify key themes and patterns.

- Dissemination Phase: Prepare a report or presentation of the findings. Share the results with relevant stakeholders, program staff, and the wider community to inform future directions [14].

Essential Diagrams & Workflows

Data Curation Workflow

Data Dissemination Logic

The Scientist's Toolkit: Research Reagent Solutions

| Item | Function | Consideration for Catalysis Research |
|---|---|---|
| Primary Antibody | In immunohistochemistry, binds specifically to the protein of interest for detection [11]. | N/A - Biological Research |
| Secondary Antibody | Binds to the primary antibody and carries a fluorescent tag for visualization [11]. | N/A - Biological Research |
| Positive Control | A known sample that confirms the experimental protocol is working correctly [11]. | Essential for validating any new catalytic test reaction. |
| Negative Control | A sample that should not produce the target result, confirming the assay's specificity [11]. | Critical for ruling out background reactions or non-catalytic pathways. |
| Standardized Metadata Schema | A predefined set of fields (e.g., conditions, performance metrics) to ensure data is complete and comparable [12]. | The foundation for creating FAIR (Findable, Accessible, Interoperable, Reusable) data in catalysis [12]. |
| Trusted Data Repository | A digital platform for storing, preserving, and sharing research data with a unique identifier (DOI) [12]. | Enables dissemination and long-term access to curated catalytic datasets. |
| Data Profiling Tool | Software that automatically scans data to identify errors, inconsistencies, and missing values [8]. | Saves time and improves accuracy during the data validation and cleaning phase. |

Frequently Asked Questions

FAQ: My deep learning model for catalyst data performs poorly compared to simpler models. Why?

This is a common finding with structured (tabular) data. Extensive benchmarking shows that on many tabular datasets, traditional machine learning models such as gradient boosting machines (e.g., XGBoost) often outperform deep learning models [15]. The gap depends on dataset size and characteristics: deep learning models tend to outperform alternatives when the dataset has relatively few rows, relatively many columns, and features with high kurtosis [15].

FAQ: My model aces benchmark datasets but fails in real-world testing. What happened?

This can be a sign of benchmark leakage or contamination [16]. If a public benchmark's test data has been widely available online, it may have been included, intentionally or not, in the training data of your model (or of the pretrained model you build on). The model may then be recalling memorized examples rather than generalizing. For rigorous evaluation, use benchmarks with robust, non-leaked test sets, or participate in time-bound AI competitions that provide fresh, unseen data [16].
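For tabular catalysis benchmarks, one simple and partial check for leakage is to look for test rows that also appear in the training data. The sketch below hashes each row after light normalization (case-folding and trimming strings, rounding numbers); the normalization rules and the `ndigits` default are assumptions. It only detects exact or near-exact duplicates and cannot reveal whether a pretrained model saw a public benchmark during its own training.

```python
import hashlib


def row_fingerprint(row: tuple, ndigits: int = 6) -> str:
    """Hash a row after normalizing it so trivially reformatted duplicates collide."""
    normalized = []
    for v in row:
        if isinstance(v, bool):
            normalized.append(v)
        elif isinstance(v, (int, float)):
            normalized.append(float(round(v, ndigits)))  # 250 and 250.0 match
        elif isinstance(v, str):
            normalized.append(v.strip().lower())          # "Pt/Al2O3 " matches "pt/al2o3"
        else:
            normalized.append(v)
    return hashlib.sha256(repr(tuple(normalized)).encode("utf-8")).hexdigest()


def leaked_rows(train_rows: list, test_rows: list, ndigits: int = 6) -> list:
    """Indices of test rows whose fingerprint also occurs in the training set."""
    seen = {row_fingerprint(r, ndigits) for r in train_rows}
    return [i for i, r in enumerate(test_rows) if row_fingerprint(r, ndigits) in seen]
```

Rounding can occasionally separate two nearly identical values that fall on opposite sides of a rounding boundary, so this check is best treated as a lower bound on overlap.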

FAQ: I'm trying to automate data extraction from catalysis literature, but my language model is unreliable. How can I improve it?

The primary issue is often non-standardized reporting in scientific prose [17]. To significantly enhance model performance, advocate for and adopt community guidelines for writing machine-readable synthesis protocols. A proof-of-concept study in heterogeneous catalysis showed that modifying protocols to follow simple standardization guidelines dramatically improved a model's ability to correctly extract synthesis actions, with one model's information capture rising from approximately 66% to much higher levels [17].

FAQ: How can I be sure my benchmarking results are statistically sound and not just lucky?

To ensure statistical rigor, adopt a robust methodology like nested cross-validation, which prevents optimistic bias in model evaluation [18]. Furthermore, always report performance with multiple metrics (e.g., accuracy, sensitivity, specificity, AUROC) and, where possible, include confidence intervals [19] [18]. This approach is essential for providing a reliable estimate of how your model will perform on truly unseen data.
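Confidence intervals for a metric on a fixed test set can be estimated, for example, with a percentile bootstrap. The sketch below uses only the Python standard library; the number of resamples, the seed, and accuracy as the example metric are illustrative assumptions. Any function with a `(y_true, y_pred)` signature can be passed as `metric`; for AUROC, a resample that contains only one class makes the metric undefined and would need to be skipped or handled separately.

```python
import random


def accuracy(y_true: list, y_pred: list) -> float:
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def bootstrap_ci(y_true, y_pred, metric, n_boot=2000, alpha=0.05, seed=0):
    """Point estimate plus a percentile-bootstrap (1 - alpha) confidence interval."""
    rng = random.Random(seed)
    n = len(y_true)
    scores = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # resample test cases with replacement
        scores.append(metric([y_true[i] for i in idx], [y_pred[i] for i in idx]))
    scores.sort()
    lower = scores[round((alpha / 2) * (n_boot - 1))]
    upper = scores[round((1 - alpha / 2) * (n_boot - 1))]
    return metric(y_true, y_pred), lower, upper
```

Fixing the seed makes the reported interval itself reproducible, which is worth stating alongside the result.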

Troubleshooting Guides

Problem: Inconsistent Model Performance Across Datasets

- Symptoms: A model works excellently on one catalytic dataset but fails on another from a similar domain.

- Diagnosis: The model may be overfitting to spurious correlations or specific artifacts in the first dataset, rather than learning generalizable patterns related to the underlying chemistry [19].

- Solution:

- Profile Your Data: Use meta-feature analysis to understand dataset characteristics. The table below shows key features that influence whether deep learning (DL) or traditional machine learning (ML) will excel [15].

- Apply Domain-Specific Preprocessing: For catalysis data, ensure consistent unit normalization and handle categorical variables (e.g., catalyst supports, synthesis methods) appropriately.

- Use a Robust Benchmarking Framework: Implement a framework like BenchNIRS [18] (adapted for your domain) that uses nested cross-validation to provide unbiased performance estimates.
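The preprocessing step can be sketched with scikit-learn by placing scaling and one-hot encoding inside a `Pipeline`, so these transformations are fitted only on the training portion of each split and do not leak test-set statistics. The column names, the demo values, and the choice of `GradientBoostingRegressor` are hypothetical; numeric columns are assumed to have already been converted to consistent units.

```python
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Hypothetical feature names; numeric columns are assumed to be in canonical units already.
NUMERIC_COLS = ["temperature_K", "pressure_bar", "metal_loading_wt_pct"]
CATEGORICAL_COLS = ["support", "synthesis_method"]


def build_model(random_state: int = 0) -> Pipeline:
    """Scale numeric features, one-hot encode categorical ones, then fit a regressor."""
    preprocess = ColumnTransformer(
        [
            ("num", StandardScaler(), NUMERIC_COLS),
            # Categories unseen during fitting (e.g. a new support) become all-zero columns.
            ("cat", OneHotEncoder(handle_unknown="ignore"), CATEGORICAL_COLS),
        ],
        sparse_threshold=0.0,  # always return a dense array
    )
    return Pipeline([
        ("prep", preprocess),
        ("model", GradientBoostingRegressor(random_state=random_state)),
    ])


if __name__ == "__main__":
    demo = pd.DataFrame({
        "temperature_K": [500.0, 520.0, 540.0, 560.0, 580.0, 600.0],
        "pressure_bar": [1.0, 5.0, 10.0, 1.0, 5.0, 10.0],
        "metal_loading_wt_pct": [1.0, 2.0, 1.5, 1.0, 2.0, 1.5],
        "support": ["Al2O3", "SiO2", "TiO2", "Al2O3", "SiO2", "TiO2"],
        "synthesis_method": ["IWI", "IWI", "SEA", "SEA", "IWI", "SEA"],
        "yield_pct": [10.0, 20.0, 30.0, 40.0, 50.0, 60.0],
    })
    model = build_model().fit(demo[NUMERIC_COLS + CATEGORICAL_COLS], demo["yield_pct"])
    unseen = demo.iloc[[0]].assign(support="ZrO2")  # support never seen in training
    print(model.predict(unseen[NUMERIC_COLS + CATEGORICAL_COLS]))
```

Passing the whole pipeline (rather than pre-transformed arrays) to a cross-validation routine is what keeps the scaler and encoder inside each training fold.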

Problem: Large Language Model (LLM) Fails on Quantitative Reasoning Tasks

- Symptoms: An LLM provides incorrect answers to mathematical problems in catalysis, such as calculating power dissipation or reaction yields [20].

- Diagnosis: LLMs are fundamentally pattern-based and lack reliable computational reasoning. Errors are frequently related to rounding (35%) and calculation mistakes (33%) [20].

- Solution:

- Offload Calculations: Integrate dedicated mathematical solvers or symbolic computation libraries (e.g., SymPy) into your workflow.

- Use Program-Aided Language Models: Prompt the LLM to generate code (e.g., Python) to solve the problem, then execute the code in a separate environment to get the correct result.

- Implement Rigorous Validation: For any quantitative output, establish a validation step using a trusted external tool or expert knowledge.
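As a minimal illustration of offloading arithmetic, the sketch below computes a percent yield with exact rational arithmetic (Python's `fractions` module, used here in place of SymPy to keep the example dependency-free) and checks an LLM-reported value against it. In a program-aided setup, the LLM would be prompted to emit a call such as `percent_yield(...)` instead of the number itself. The function names and the relative tolerance are assumptions.

```python
import math
from fractions import Fraction


def percent_yield(n_product_mol, n_limiting_mol, product_per_limiting=Fraction(1)):
    """Percent yield = n_product / (n_limiting * stoichiometric factor) * 100.

    Inputs are converted via str() so that 0.0042 becomes exactly 21/5000,
    avoiding intermediate binary rounding; only the final result is a float.
    """
    theoretical = Fraction(str(n_limiting_mol)) * Fraction(product_per_limiting)
    return float(Fraction(str(n_product_mol)) / theoretical * 100)


def validate_llm_answer(llm_value: float, trusted_value: float, rel_tol: float = 1e-3) -> bool:
    """Accept the LLM's number only if it agrees with the trusted computation."""
    return math.isclose(llm_value, trusted_value, rel_tol=rel_tol)
```

Deferring rounding to the very end addresses the rounding-type errors noted above; the tolerance should be set from the precision the task actually requires.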

Benchmarking Data & Performance

Table 1: Dataset Characteristics Favoring Deep Learning on Tabular Data [15]

| Dataset Characteristic | Favors Deep Learning (DL) When... | Example Value (DL-Favored) |
|---|---|---|
| Number of Rows | The dataset has a small number of rows. | Median: 4,720 rows |
| Number of Columns | The dataset has a large number of columns. | Median: 12.5 columns |
| Row-to-Column Ratio | The ratio of rows to columns is low. | ~378:1 |
| Feature Kurtosis | Features have high kurtosis (heavy-tailed distributions). | Median: 6.44 |
| Task Type | The task is classification (performance gap is smaller vs. regression). | Classification |

Table 2: Performance of Leading AI Models on the ORCA Math Benchmark (2025) [20] The benchmark tests math-oriented questions in scientific fields. A score of 100% represents perfect accuracy.

| Model | Overall Accuracy | Biology & Chemistry | Physics | Math & Conversions |
|---|---|---|---|---|
| Gemini 2.5 Flash | 63.0% | Data Not Specified | Data Not Specified | Data Not Specified |
| Grok 4 | 62.8% | Data Not Specified | Data Not Specified | Data Not Specified |
| DeepSeek V3.2 | 52.0% | 10.5% | 31.3% | 74.1% |
| ChatGPT-5 | 49.4% | Data Not Specified | Data Not Specified | Data Not Specified |
| Claude Sonnet 4.5 | 45.2% | Data Not Specified | Data Not Specified | Data Not Specified |

Experimental Protocols

Protocol 1: Creating a Rigorous Benchmark for Catalytic Data

Purpose: To establish a standardized benchmark for evaluating machine learning models on catalytic data, ensuring fair comparison and measuring true generalization [15] [16] [18].

Materials:

- Datasets from public repositories (e.g., OpenML, Kaggle, domain-specific databases).

- Computing environment with Python and relevant ML libraries (scikit-learn, XGBoost, PyTorch/TensorFlow).

- Benchmarking framework (e.g., adapted from BenchNIRS [18]).

Procedure:

- Dataset Curation:

- Assemble a diverse collection of datasets relevant to catalysis (e.g., synthesis conditions, material properties, reaction yields).

- Include both regression and classification tasks.

- Critical Step: Perform a rigorous train-test split, ensuring the test set is held out and never used during model development or training. For the highest rigor, use a completely external dataset as a test set [16].

- Model Training & Evaluation:

- Select a suite of baseline models, including tree-based models (XGBoost, Random Forest), simple linear models, and deep learning models (MLP, TabNet).

- Critical Step: Use nested cross-validation [18] to tune hyperparameters and evaluate models without data leakage. An inner loop selects hyperparameters using the training folds only, while an outer loop estimates performance on folds that were never used for tuning.

- Evaluate all models on the same held-out test set using multiple metrics (e.g., Mean Squared Error, R² for regression; Accuracy, F1-Score, AUROC for classification).
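Nested cross-validation can be sketched with scikit-learn by wrapping a `GridSearchCV` (inner loop, hyperparameter tuning) inside `cross_validate` (outer loop, performance estimation). The random-forest model, the small hyperparameter grid, the fold counts, and the synthetic demo data are illustrative assumptions. In practice the same outer folds would be reused for every model being compared, and the final held-out test set stays untouched until the very end.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import GridSearchCV, KFold, cross_validate


def nested_cv_scores(X, y, seed: int = 0) -> dict:
    """Outer 5-fold CV estimates performance; inner 3-fold CV tunes hyperparameters."""
    inner = KFold(n_splits=3, shuffle=True, random_state=seed)
    outer = KFold(n_splits=5, shuffle=True, random_state=seed)
    tuned = GridSearchCV(
        RandomForestRegressor(random_state=seed),
        param_grid={"max_depth": [3, None], "n_estimators": [25, 50]},
        cv=inner,
        scoring="neg_mean_squared_error",
    )
    # The search is re-run on each outer training fold, so outer test folds never influence tuning.
    res = cross_validate(tuned, X, y, cv=outer,
                         scoring={"mse": "neg_mean_squared_error", "r2": "r2"})
    return {"mse": -res["test_mse"], "r2": res["test_r2"]}


if __name__ == "__main__":
    X_demo, y_demo = make_regression(n_samples=120, n_features=6, noise=10.0, random_state=0)
    scores = nested_cv_scores(X_demo, y_demo)
    print(f"MSE {scores['mse'].mean():.1f} +/- {scores['mse'].std():.1f}")
    print(f"R2  {scores['r2'].mean():.3f} +/- {scores['r2'].std():.3f}")
```

Reporting the per-fold scores (not only their mean) shows how stable each model is across splits.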

Protocol 2: Implementing a Meta-Learning Predictor for Model Selection

Purpose: To build a model that predicts whether a deep learning or traditional ML model will perform better on a given catalytic dataset, based on the dataset's meta-features [15].

Materials: The benchmark results and dataset characteristic profiles from Protocol 1.

Procedure:

- Meta-Feature Extraction: For each dataset in your benchmark, calculate a set of meta-features. Key features include [15]:

- Number of rows and columns.

- Number of categorical and numerical columns.

- Average kurtosis and skewness of features.

- Average correlation between features.

- Average entropy.

- Meta-Target Definition: For each dataset, the meta-target is a binary variable indicating whether a deep learning model outperformed the best traditional ML model.

- Meta-Model Training: Train a classifier (e.g., Logistic Regression, Random Forest) on the collected meta-dataset to predict the meta-target. One such study achieved 86.1% accuracy (AUC 0.78) in this prediction task [15].

- Application: For a new, unseen catalytic dataset, extract its meta-features and use the meta-model to recommend the most promising model class (DL or traditional ML) to apply.
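Meta-feature extraction can be sketched with pandas and NumPy as below. The exact definitions used in [15] may differ: here kurtosis and skewness are pandas' bias-corrected sample estimates averaged over numeric columns (pandas reports excess kurtosis), correlation is the mean absolute pairwise Pearson correlation, and entropy (in bits) is computed only for categorical columns. These choices, and the column names in the test data, are assumptions.

```python
import numpy as np
import pandas as pd


def _entropy_bits(col: pd.Series) -> float:
    """Shannon entropy of a column's empirical value distribution, in bits."""
    p = col.value_counts(normalize=True).to_numpy()
    return float(-(p * np.log2(p)).sum())


def meta_features(df: pd.DataFrame, target: str) -> dict:
    """Dataset-level descriptors of the feature columns (target column excluded)."""
    X = df.drop(columns=[target])
    num = X.select_dtypes(include="number")
    cat = X.select_dtypes(exclude="number")
    n_rows, n_cols = X.shape
    feats = {
        "n_rows": n_rows,
        "n_cols": n_cols,
        "n_numerical": num.shape[1],
        "n_categorical": cat.shape[1],
        "row_col_ratio": n_rows / n_cols,
        "mean_kurtosis": float(num.kurt().mean()),   # excess kurtosis
        "mean_skewness": float(num.skew().mean()),
        "mean_entropy_categorical": (
            float(np.mean([_entropy_bits(cat[c]) for c in cat])) if cat.shape[1] else float("nan")
        ),
    }
    if num.shape[1] > 1:
        corr = num.corr().abs().to_numpy()
        feats["mean_abs_correlation"] = float(corr[np.triu_indices_from(corr, k=1)].mean())
    else:
        feats["mean_abs_correlation"] = float("nan")
    return feats
```

Each benchmark dataset then contributes one row of these features, plus its binary meta-target, to the meta-dataset used for training the meta-model.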

Standardization Workflow for Catalytic Data

Implementing FAIR (Findable, Accessible, Interoperable, Reusable) data practices across the full data lifecycle is foundational for creating high-quality benchmarks in catalysis research.

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Tools for Benchmarking in Catalysis Informatics

| Tool / Resource | Function | Relevance to Catalysis Research |
|---|---|---|
| OpenML / Kaggle [21] | Public repositories for finding and sharing benchmark datasets. | Source for initial datasets; platform for hosting catalysis-specific data challenges. |
| MLPerf [19] [22] | A standardized suite of benchmarks for measuring the performance of ML hardware, software, and services. | Ensures computational efficiency claims are measured consistently when training large models on catalyst data. |
| Domain-Specific PLMs (e.g., BioLinkBERT, SciBERT) [23] | Language models pretrained on scientific or biomedical text corpora. | Superior for automated information extraction (e.g., synthesis protocols, properties) from catalysis literature compared to general-purpose models. |
| Nested Cross-Validation Script [18] | A statistical methodology implemented in code to prevent over-optimistic model evaluation. | The core of any rigorous experimental protocol for comparing predictive models in catalysis. |
| FAIR Data Guidelines [17] | A set of principles for making data Findable, Accessible, Interoperable, and Reusable. | Provides a framework for standardizing the reporting of catalytic data, making it more useful for ML. |

In modern catalytic research and drug development, efficiency and reproducibility are paramount. This technical support center addresses common experimental challenges by integrating three powerful, interconnected drivers: evolving regulatory pressure, the principles of green chemistry, and the capabilities of artificial intelligence (AI). Adopting standardized data reporting is no longer just a best practice for science; it is a strategic necessity for innovation, compliance, and sustainability. The following guides and FAQs provide direct, actionable solutions to specific experimental issues, framed within this new paradigm.

Troubleshooting Common Experimental Challenges

FAQ: My catalyst synthesis is irreproducible. What key parameters should I control and report?

Inconsistent catalyst synthesis is often due to unreported minor variations in procedure or environmental conditions. A shift towards standardized reporting is critical for machine readability and reproducibility [1] [2].

- Solution: Meticulously control, document, and report the following parameters for every synthesis, including post-treatment and storage. Standardizing this information is a key enabler for AI-assisted analysis and discovery [2].

Table: Essential Parameters for Reproducible Catalyst Synthesis Reporting

| Synthesis Phase | Critical Parameters to Report | Common Pitfalls & Green Chemistry Alternatives |
|---|---|---|
| Reagent & Apparatus Prep | Precursor purity (including lot numbers), supplier, purification methods; Glassware cleaning procedure (e.g., acid wash) [1]. | Pitfall: Trace contaminants from reagents or glassware poison active sites [1]. Green Alternative: Use bio-based surfactants or perform solvent-free synthesis via mechanochemistry (grinding/ball milling) [24]. |
| Synthesis Procedure | Temperature profile (ramp rates, hold times); Precursor concentrations; Solution pH; Mixing speed, time, and method; Order of addition [1]. | Pitfall: Minor pH or mixing time changes alter particle size and distribution [1]. Green Alternative: Replace toxic organic solvents with water-based or on-water reactions [24]. |
| Post-Treatment | Drying and calcination atmosphere (static/dynamic), gas space velocity; Heating rates and final temperatures [1]. | Pitfall: Fixed-bed vs. fluidized-bed activation creates different active site distributions [1]. |
| Storage | Storage duration, environment (e.g., ambient air, inert gas), and container type [1]. | Pitfall: Atmospheric contaminants (e.g., carboxylic acids, H₂S at ppb levels) adsorb on surfaces, degrading performance [1]. |
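One way to make the parameters in this table machine-readable is a structured record that makes gaps visible. The Python dataclass below is a hypothetical template: its field names and comments are assumptions chosen to mirror the table, not a published schema. `missing_fields()` lists anything left unreported before the record is archived or published, and `to_json()` produces a machine-readable export.

```python
import json
from dataclasses import asdict, dataclass, fields
from typing import List, Optional


@dataclass
class Precursor:
    name: str
    supplier: str
    lot_number: str
    purity_pct: float


@dataclass
class SynthesisRecord:
    """Hypothetical reporting template mirroring the table above (not a community standard)."""
    catalyst_id: str
    precursors: List[Precursor]
    glassware_cleaning: str                   # e.g. "aqua regia wash, DI water rinse"
    temperature_profile: str                  # ramp rates and hold times
    precursor_concentrations: str
    solution_ph: Optional[float]
    mixing: str                               # speed, time, and method
    order_of_addition: List[str]
    calcination_atmosphere: str               # static or flowing, gas identity
    gas_space_velocity_per_h: Optional[float]
    storage_environment: str                  # e.g. "Ar glovebox", "ambient air"
    storage_duration_days: Optional[float]

    def missing_fields(self) -> List[str]:
        """Names of fields left empty (None, "" or []), i.e. not reported."""
        return [f.name for f in fields(self) if getattr(self, f.name) in (None, "", [])]

    def to_json(self) -> str:
        """Machine-readable export; nested Precursor objects become dictionaries."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)
```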

FAQ: How can I reduce the environmental impact of my synthetic protocols?

Regulatory pressure is mounting against hazardous substances, making green chemistry both a compliance and an innovation driver [24] [25].

- Solution: Implement the following green chemistry principles, which can be optimized using AI tools.

Table: Green Chemistry Solutions for Catalysis and Synthesis

| Challenge | Green Solution | Experimental Protocol & AI Integration |
|---|---|---|
| Toxic Solvents | Replace with water-based reactions or solvent-free mechanochemistry [24]. | Protocol (Mechanochemistry): Place reactants in a high-energy ball mill. Use zirconium dioxide grinding jars and balls. Mill at an optimized frequency (e.g., 30 Hz) for a defined number of cycles [24]. AI Role: AI algorithms can predict optimal milling parameters and reaction outcomes for solvent-free syntheses [24]. |
| Hazardous Reagents (e.g., PFAS) | Use fluorine-free coatings (silicones, waxes) or bio-based surfactants (rhamnolipids) [24]. | Protocol (Bio-surfactant Use): For nanoparticle synthesis, use a rhamnolipid solution as a stabilizing agent instead of PFAS-based surfactants. Optimize concentration for desired particle dispersion. |
| Inefficient Batch Processing | Adopt continuous flow synthesis instead of traditional batch methods [25]. | Protocol (Continuous Flow): Use a continuous flow reactor system. Pump reactant solutions through a temperature-controlled reaction tube. Optimize flow rate, temperature, and pressure for maximum yield and minimal waste [25]. |
| Wasteful Extraction | Use Deep Eutectic Solvents (DES) for metal recovery from waste streams [24]. | Protocol (DES for Extraction): Prepare a DES by mixing choline chloride (HBA) and urea (HBD) in a 1:2 molar ratio at 80°C until a clear liquid forms. Use this DES to leach metals from spent catalyst material [24]. |
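Two of the protocols above reduce to simple arithmetic that is worth scripting so it is reported consistently: the component masses for a 1:2 choline chloride:urea DES, and the mean residence time in a continuous flow reactor (τ = V/Q). The sketch assumes molar masses of 139.62 g/mol (choline chloride) and 60.06 g/mol (urea), calculated from standard atomic weights, and treats residence time as reactor volume divided by total volumetric flow rate, which holds for a constant-density liquid. The function names are hypothetical.

```python
# Molar masses (g/mol) calculated from standard atomic weights.
M_CHOLINE_CHLORIDE = 139.62   # C5H14ClNO, hydrogen-bond acceptor (HBA)
M_UREA = 60.06                # CH4N2O, hydrogen-bond donor (HBD)


def des_component_masses(total_mass_g: float, hba_to_hbd=(1, 2)) -> tuple:
    """Masses (g) of choline chloride and urea giving the molar ratio and total batch mass."""
    a, b = hba_to_hbd
    mol_per_unit = total_mass_g / (a * M_CHOLINE_CHLORIDE + b * M_UREA)
    return a * mol_per_unit * M_CHOLINE_CHLORIDE, b * mol_per_unit * M_UREA


def residence_time_min(reactor_volume_mL: float, total_flow_mL_per_min: float) -> float:
    """Mean residence time tau = V / Q; assumes constant-density liquid flow."""
    return reactor_volume_mL / total_flow_mL_per_min
```

For a 50 g batch, this gives roughly 26.9 g of choline chloride and 23.1 g of urea; a 10 mL reactor fed at 0.5 mL/min has a mean residence time of 20 min.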

FAQ: My data is siloed and not ready for AI analysis. What should I do?

Vendor inflexibility and a lack of standardized data structures are major barriers to AI adoption. 81% of lab leaders cite vendor technology limitations as a trigger for system upgrades [26].

- Solution: Build a future-proof informatics infrastructure with these steps:

- Demand Vendor Flexibility: In your next LIMS/ELN upgrade, prioritize vendor openness (APIs, partner networks) and post-implementation support. 37% of managers switch vendors due to poor support [26].

- Plan for System Convergence: 57% of leaders expect 2026 to be a pivotal year for consolidating LIMS, ELN, and SDMS into unified frameworks. Plan your roadmaps now for this data convergence [26].

- Enforce a Data Governance Framework: Adopt a framework that defines data ownership, quality benchmarks, and a common data model (CDM) to ensure consistency across all systems [27].

- Maintain a Centralized Data Dictionary: This dictionary should define naming conventions, data types, units of measurement, and accepted values, ensuring all researchers are aligned [27].
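A centralized data dictionary can be enforced in code as well as documented. The sketch below is a minimal, hypothetical example: the entries, units, and accepted values are placeholders that a lab would define and version for itself, and `validate` simply reports every field that is unknown, has the wrong type, uses a non-accepted value, or is missing.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class FieldSpec:
    """One data-dictionary entry: expected type, unit, and optional controlled vocabulary."""
    dtype: type
    unit: Optional[str] = None
    allowed: Optional[Tuple[str, ...]] = None


# Hypothetical entries; a real dictionary would be agreed on and versioned by the lab.
DATA_DICTIONARY = {
    "catalyst_id": FieldSpec(str),
    "reaction_temperature": FieldSpec(float, unit="K"),
    "support": FieldSpec(str, allowed=("Al2O3", "SiO2", "TiO2", "C")),
    "conversion": FieldSpec(float, unit="fraction (0-1)"),
}


def validate(record: dict) -> list:
    """Return human-readable problems; an empty list means the record conforms."""
    problems = []
    for name, value in record.items():
        spec = DATA_DICTIONARY.get(name)
        if spec is None:
            problems.append(f"{name}: not defined in the data dictionary")
            continue
        # Accept ints where floats are expected, but never bools.
        ok_type = isinstance(value, spec.dtype) or (
            spec.dtype is float and isinstance(value, int) and not isinstance(value, bool))
        if not ok_type:
            problems.append(f"{name}: expected {spec.dtype.__name__}, got {type(value).__name__}")
        elif spec.allowed is not None and value not in spec.allowed:
            problems.append(f"{name}: {value!r} is not an accepted value")
    for name in sorted(DATA_DICTIONARY.keys() - record.keys()):
        problems.append(f"{name}: missing")
    return problems
```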

The Scientist's Toolkit: Key Research Reagent Solutions

Table: Essential Materials for Modern Catalytic Research

| Reagent/Material | Function & Rationale | Green & Standardization Context |
|---|---|---|
| Earth-Abundant Elements (e.g., Fe, Ni) | Powerful alternatives to rare-earth elements (e.g., in tetrataenite magnets). Reduce geopolitical and environmental costs [24]. | Green Driver: Sourcing rare earths is environmentally damaging. Regulatory Driver: Geographic concentration of supply creates risk. |
| Deep Eutectic Solvents (DES) | Customizable, biodegradable solvents for extraction and synthesis. Typically made from choline chloride and urea [24]. | Green Driver: Low-toxicity, low-energy alternative to strong acids or VOCs. AI Role: AI can help design optimal DES formulations for specific extraction tasks. |
| Bio-based Surfactants (e.g., Rhamnolipids) | Replace PFAS-based surfactants as stabilizing agents in nanoparticle synthesis and other applications [24]. | Regulatory Driver: PFAS are facing global phase-outs due to health and environmental risks. |
| Standardized Reporting Templates | Pre-defined templates for reporting synthesis parameters in publications and lab notebooks [1] [2]. | AI Imperative: Essential for machine readability. Standardized protocols improve AI extraction accuracy and enable large-scale data analysis [2]. |

Experimental Workflow for AI-Driven Catalyst Optimization

The following workflow integrates AI, real-time analytics, and green principles to create a dynamic, self-optimizing experimental system, as demonstrated in advanced bioprocesses [28].

Detailed Methodology for an AI-Optimized Reaction:

- Define Objectives & Kinetics Modeling: Use a backpropagation neural network (BPNN) to model the complex, non-linear relationships between input parameters (e.g., substrate concentration, temperature) and output objectives (e.g., specific production rate, yield). The model should achieve high predictive accuracy (R² > 0.95) [28].

- Multi-Objective Optimization: Employ an algorithm like NSGA-II to resolve trade-offs between competing goals (e.g., maximizing yield while minimizing energy consumption or waste generation). This generates a set of Pareto-optimal reaction conditions [28].

- Real-Time Sensing & Data Acquisition: Instrument the reactor with dual-spectroscopy probes (e.g., Near-Infrared and Raman) to monitor reaction progress, substrate consumption, and product formation continuously [28].

- Dynamic Feedback Control: The AI system compares real-time sensor data to the model's predictions. It then calculates and implements adjustments to process parameters (e.g., feeding rates of carbon/nitrogen sources, stirring speed) to keep the reaction on the optimal path [28].

- Validation and Analysis: Once optimal production is achieved, use integrated metabolomics and flux analysis to understand the metabolic or catalytic network reorganization that occurred under AI control [28].
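The core idea behind the multi-objective step, identifying conditions that no other candidate beats on every objective, can be sketched in plain Python. This is only the non-dominated filtering that NSGA-II builds on (its first front); a full NSGA-II adds rank-based sorting of all fronts, crowding distance, and genetic operators, and established implementations exist (for example in the pymoo library). The candidate values are hypothetical and would, in the workflow above, come from the trained surrogate model.

```python
def dominates(a, b) -> bool:
    """True if a is no worse than b in every objective and strictly better in at least one.

    All objectives are minimized; negate any objective you want to maximize (e.g. -yield).
    """
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_front(points: list) -> list:
    """Indices of non-dominated points (the first front in NSGA-II's non-dominated sorting)."""
    return [
        i for i, p in enumerate(points)
        if not any(dominates(q, p) for j, q in enumerate(points) if j != i)
    ]


if __name__ == "__main__":
    # Hypothetical candidate conditions scored as (-predicted yield, energy use in kWh).
    candidates = [(-0.90, 5.0), (-0.85, 3.0), (-0.80, 4.0), (-0.70, 2.0)]
    print(pareto_front(candidates))  # prints [0, 1, 3]; the third candidate is dominated
```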

Building Your Standardized Reporting Workflow: From Data Collection to Curation

Establishing a Minimum Required Data Set for Catalytic Experiments

The field of catalysis research is undergoing a significant transformation toward digitalization and enhanced reproducibility. Establishing a minimum required data set for catalytic experiments is fundamental to this shift, enabling objective comparison of catalytic performance across different systems and laboratories [29]. Such standardization addresses the current challenges in determining, evaluating, and comparing light-driven catalytic performance, which depends on a complex interplay between multiple components and processes [29]. The implementation of Findable, Accessible, Interoperable, and Reusable (FAIR) data principles is emerging as an indispensable element in the advancement of science, requiring new methods for data acquisition, storage, and sharing [30]. This framework for standardized data reporting supports future automated data analysis and machine learning applications, which demand high data quality in terms of reliability, reproducibility, and consistency [30].

Frequently Asked Questions (FAQs) on Catalytic Data Reporting

Why is a minimum data set necessary for catalytic experiments? A minimum data set is crucial for providing quantitative comparability and unbiased, reliable, and reproducible performance evaluation across multiple systems and laboratories [29]. The immense complexity of high-performance catalytic systems, particularly where selectivity is a major issue, requires analysis of scientific data by artificial intelligence and data science, which in turn requires data of the highest quality and sufficient diversity [31]. Existing data frequently do not comply with these constraints, necessitating new concepts of data generation and management [31].

What are the FAIR data principles and why are they important? FAIR stands for Findable, Accessible, Interoperable, and Reusable. These principles form the basis for algorithm-based, automated data analyses and are becoming critical as the increasing application of artificial intelligence demands significantly higher data quality in terms of reliability, reproducibility, and consistency of datasets [30]. Research organizations across the globe have expressed the need for open and transparent data reporting, which has led to the adoption of these principles [29].

What are the key challenges in comparing light-driven catalytic systems? Light-driven catalysis typically relies on the interplay between multiple components and processes, including light absorption, charge separation, charge transfer, and catalytic turnover [29]. The complex interplay between system components, reaction conditions, and community-specific reporting strategies has thus far prevented the development of unified comparability protocols [29]. As catalytic processes typically show maximum performance in a narrow window of operation, defining a standard set of reaction conditions would be inherently biased [29].

How do homogeneous and heterogeneous catalytic systems differ in their reporting requirements? Homogeneous light-driven reactions in solution are highly dependent on the kinetics of many elementary processes, which need to occur in a specific order, and are affected by intermolecular and supramolecular interactions, as well as kinetic rate matching [29]. Heterogeneous light-driven catalysis uses solid-state compounds and is influenced by additional factors such as optical effects (scattering and reflection) and mass transport considerations [29].

What is the role of Standard Operating Procedures (SOPs) in catalysis research? SOPs are documented in handbooks and published together with results to ensure consistency in experimental workflows [30]. The automatic capture of data and its digital storage facilitates the use of SOPs and simultaneously prepares the way for autonomous catalysis research [30]. Machine-readable handbooks are the preferred solution compared to plain text, allowing method information to be stored in sustainable, machine-readable data formats [30].

Troubleshooting Common Experimental Issues

Inconsistent Catalytic Performance Measurements

Problem: Measured reaction rates or selectivity values vary unexpectedly between experiments, even when using catalysts with apparently the same composition.

Solution:

- Document complete catalyst history: The measured rate depends not only on the catalyst and reaction parameters but also on the experimental workflow [30]. For example, in ammonia decomposition, conversion can vary depending on whether data was measured with ascending or descending temperature [30].

- Implement standardized pretreatment protocols: Because the catalyst interface changes chemically under reaction conditions, the catalyst and the reacting medium are dynamically coupled [30]; a consistent pretreatment ensures that every test starts from a comparable catalyst state.

- Control activation procedures: Differences can be attributed to changes in the catalyst, such as the degree of reduction, particle size distribution, or formation of new surface phases, which may occur at the highest reaction temperature even if the catalyst was in steady-state at lower temperatures [30].
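Because the measured rate depends on the experimental workflow, ascending and descending temperature sweeps can be compared automatically and any discrepancy reported. The sketch below assumes conversions are stored as `{temperature_K: conversion}` dictionaries for each sweep; this layout and the 0.02 tolerance (which should reflect the actual measurement uncertainty) are assumptions, and the example values are hypothetical. Higher conversion on the descending sweep, as in the example, would be consistent with a change in the catalyst at the highest temperature.

```python
def sweep_hysteresis(ascending: dict, descending: dict, tol: float = 0.02) -> dict:
    """Temperatures measured in both sweeps where conversion differs by more than tol.

    Values are (descending - ascending); positive means higher conversion after
    the catalyst has been exposed to the highest temperature.
    """
    common = sorted(ascending.keys() & descending.keys())
    return {
        t: round(descending[t] - ascending[t], 6)
        for t in common
        if abs(descending[t] - ascending[t]) > tol
    }


if __name__ == "__main__":
    # Hypothetical ammonia-decomposition conversions (fraction) at each temperature in K.
    up = {600: 0.10, 650: 0.30, 700: 0.60, 750: 0.90}
    down = {750: 0.91, 700: 0.70, 650: 0.42, 600: 0.11}
    print(sweep_hysteresis(up, down))
```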

Irreproducible Synthesis Results

Problem: Catalyst materials synthesized using reported methods yield different structural properties or performance characteristics.

Solution:

- Record comprehensive synthesis parameters: Document precise precursor concentrations, mixing order and rates, aging times, and thermal treatment profiles [31].

- Standardize characterization protocols: Implement consistent materials characterization before and after catalytic testing to identify structural variations [31].

- Report equipment-specific parameters: Include details such as reactor geometry, mixing efficiency, and heating rates that may influence materials properties [31].

Discrepancies in Photocatalytic Measurements

Problem: Significant variations in reported quantum yields or reaction rates for similar photocatalytic systems.

Solution:

- Characterize light source completely: Report emitted photon flux, emission wavelengths, and emission geometry [29].

- Document spectrally resolved incident photon flux: This parameter describes the photon flux that reaches the inside of the reactor and serves as an ideal basis for quantitative interpretation of light-driven catalytic reactivity data [29].

- Control for optical effects: In heterogeneous systems, account for scattering and reflection at the interface between solvent and solid-state compounds [29].

- Standardize actinometry methods: Use consistent approaches for determining incident photon flux [29].
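For a monochromatic source, the photon flux follows from the measured radiant power P and wavelength λ as q = Pλ/(h c N_A) in mol photons per second, and an apparent quantum yield can then be computed from the product formation rate. The sketch below is an approximation under stated assumptions: the power is measured at the reactor position, the source is treated as monochromatic, and the quantum yield is based on incident (not absorbed) photons. The spectrally resolved incident photon flux determined by actinometry, as recommended above, remains the better basis. The example rate is hypothetical.

```python
# SI defining constants (exact values since the 2019 redefinition).
PLANCK_H = 6.62607015e-34       # J s
SPEED_OF_LIGHT = 2.99792458e8   # m/s
AVOGADRO = 6.02214076e23        # 1/mol


def photon_flux_mol_per_s(power_W: float, wavelength_nm: float) -> float:
    """Monochromatic photon flux q = P * lambda / (h * c), converted to mol photons/s."""
    photons_per_s = power_W * wavelength_nm * 1e-9 / (PLANCK_H * SPEED_OF_LIGHT)
    return photons_per_s / AVOGADRO


def apparent_quantum_yield(rate_mol_per_s: float, electrons_per_product: int,
                           photon_flux: float) -> float:
    """AQY = (electrons per product molecule * product rate) / incident photon flux."""
    return electrons_per_product * rate_mol_per_s / photon_flux


if __name__ == "__main__":
    q = photon_flux_mol_per_s(power_W=0.100, wavelength_nm=450.0)  # about 3.76e-7 mol/s
    # Hypothetical H2 evolution at 1.0e-8 mol/s; two electrons are needed per H2.
    print(f"AQY = {apparent_quantum_yield(1.0e-8, 2, q):.3f}")
```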

Minimum Required Data Set Tables

Chemical Reaction Parameters

Table 1: Essential chemical parameters for catalytic experiments

| Parameter Category | Specific Parameters | Reporting Standard |
|---|---|---|
| Reaction System | Light absorber, catalyst, sacrificial electron donors/acceptors, reagents | Concentrations and ratios of all components [29] |
| Reaction Conditions | Solvent type, solution pH, temperature, pressure | Full specification with purity grades [29] |
| Electron Transfer | Redox potentials, pH dependence | With and without applied bias if relevant [29] |
| Performance Metrics | Conversion, selectivity, yield, TON, TOF | With clear calculation methods and error analysis [29] |
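The "clear calculation methods" requirement can be met by stating the formulas explicitly. The sketch below uses common batch-reactor definitions: conversion X = (n0 - n)/n0, selectivity S = ν·n_P/(n0 - n) with ν the moles of substrate consumed per mole of product, yield Y = X·S, TON = n_P/n_sites, and TOF = TON/t averaged over the reaction time. Many studies instead report an initial-rate TOF; whichever definition is used, and how active sites were counted, should be stated. The class name and example amounts are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class BatchResult:
    n_substrate_initial_mol: float
    n_substrate_final_mol: float
    n_product_mol: float
    n_active_sites_mol: float          # state how sites were counted (e.g. total metal, chemisorption)
    time_h: float
    substrate_per_product: float = 1.0  # stoichiometric factor nu


def performance_metrics(r: BatchResult) -> dict:
    """Conversion, selectivity, yield, TON and time-averaged TOF for one batch run."""
    converted = r.n_substrate_initial_mol - r.n_substrate_final_mol
    conversion = converted / r.n_substrate_initial_mol
    selectivity = r.substrate_per_product * r.n_product_mol / converted
    ton = r.n_product_mol / r.n_active_sites_mol
    return {
        "conversion": conversion,
        "selectivity": selectivity,
        "yield": conversion * selectivity,
        "TON": ton,
        "TOF_per_h": ton / r.time_h,   # time-averaged TOF, not an initial-rate TOF
    }
```

For example, 1.0 mmol substrate with 0.40 mmol remaining, 0.54 mmol product, 1.0 µmol sites, and 2 h gives X = 0.60, S = 0.90, Y = 0.54, TON = 540, and TOF = 270 h⁻¹.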

Technical and Instrumentation Parameters

Table 2: Technical parameters for catalytic experimental reporting

| Parameter Type | Specific Requirements | Importance |
|---|---|---|
| Light Source Characteristics | Emitted photon flux, emission wavelengths, emission geometry [29] | Enables quantification of photon-involved processes [29] |
| Incident Photon Flux | Spectrally resolved data, measurement method (e.g., actinometry) [29] | Critical for calculating quantum efficiency [29] |
| Reactor Configuration | Geometry, material, optical path length, illumination direction [29] | Affects light distribution and mass transfer [29] |
| Analysis Methods | Analytical technique, calibration details, sampling method [32] | Ensures proper quantification and detection limits [32] |

Data Management and Accessibility Requirements

Table 3: Data management and accessibility standards

| Data Aspect | Minimum Requirement | Best Practice |
|---|---|---|
| Primary Data Access | Availability statement in publication [33] | Deposition in public repository with accession codes [33] |
| Data Repository | Community-endorsed public repository [33] | Discipline-specific repository (e.g., CCDC for structures) [33] |
| Data Citation | Formal citation in reference list [32] | Include authors, title, publisher, identifier [32] |
| Software & Code | Availability of custom code [33] | Version control repository with documentation [33] |

Experimental Protocols for Standardized Testing

Catalyst Characterization Protocol

Objective: To provide consistent baseline characterization of catalytic materials before and after testing.

Procedure:

- Surface Area Analysis: Determine the specific surface area by the BET method, typically from N₂ physisorption at 77 K, and report the relative-pressure range used for the fit.

- Structural Characterization: Perform XRD analysis to determine crystal structure and phase composition.

- Morphological Examination: Use electron microscopy (SEM/TEM) to assess particle size and distribution.

- Surface Composition: Apply XPS to determine surface elemental composition and oxidation states.

- Chemical Environment: Utilize FTIR or Raman spectroscopy to identify functional groups and bonding environments.

Data Reporting Requirements:

- Instrument model and settings

- Sample preparation methods

- Reference standards used

- Full spectral data when possible [32]
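Reporting the raw isotherm alongside the BET result lets others check the fit. The sketch below performs a standard linearized BET analysis in pure Python. It assumes N₂ at 77 K with the conventional 0.162 nm² cross-sectional area and a default fit window of p/p₀ = 0.05 to 0.30; that window suits typical type II isotherms, and microporous materials need other range-selection criteria, so treat the defaults as assumptions.

```python
AVOGADRO = 6.02214076e23         # 1/mol
MOLAR_VOLUME_STP = 22414.0       # cm^3(STP)/mol of ideal gas
N2_CROSS_SECTION_M2 = 0.162e-18  # conventional N2 molecular cross-section at 77 K


def _least_squares(x, y):
    """Ordinary least-squares slope and intercept."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, my - slope * mx


def bet_surface_area(p_rel, v_ads, p_min=0.05, p_max=0.30):
    """BET analysis of an N2 adsorption isotherm.

    p_rel: relative pressures p/p0; v_ads: adsorbed volume, cm^3(STP)/g.
    Returns (specific surface area in m^2/g, monolayer volume v_m, BET constant C).
    """
    pts = [(x, v) for x, v in zip(p_rel, v_ads) if p_min <= x <= p_max]
    if len(pts) < 3:
        raise ValueError("need at least 3 points in the BET pressure range")
    xs = [x for x, _ in pts]
    # Linearized BET: x / [v (1 - x)] = 1/(v_m C) + (C - 1)/(v_m C) * x
    ys = [x / (v * (1.0 - x)) for x, v in pts]
    slope, intercept = _least_squares(xs, ys)
    if intercept <= 0:
        raise ValueError("non-positive intercept (C invalid); revise the pressure range")
    v_m = 1.0 / (slope + intercept)
    c = slope / intercept + 1.0
    area = v_m / MOLAR_VOLUME_STP * AVOGADRO * N2_CROSS_SECTION_M2
    return area, v_m, c


# Synthetic isotherm generated from the BET equation itself (v_m = 50 cm3/g, C = 100).
p = [0.05, 0.10, 0.15, 0.20, 0.25, 0.30]
v = [50 * 100 * x / ((1 - x) * (1 - x + 100 * x)) for x in p]
area, v_m, c = bet_surface_area(p, v)
print(f"S_BET = {area:.1f} m2/g, v_m = {v_m:.2f} cm3/g, C = {c:.1f}")
```

Reporting the fitted C value and the pressure window together with the area makes the result reproducible.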

Kinetic Measurement Protocol

Objective: To obtain reproducible catalytic performance data under controlled conditions.

Procedure:

- Catalyst Pretreatment: Apply standardized activation procedure (e.g., reduction, oxidation, calcination).

- Reaction Conditions: Establish steady-state conditions with documented stabilization period.

- Data Collection: Measure performance at multiple time points to establish stability.

- Parameter Variation: Systematically vary key parameters (temperature, pressure, concentration).

- Control Experiments: Conduct blank tests (without catalyst) and reference catalyst tests.

Data Reporting Requirements:

- Complete description of reactor system

- Detailed workflow including direction of parameter changes [30]

- Mass balance closures

- Error analysis and reproducibility measures [32]
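The stability and error-analysis requirements above can be made explicit in code. The sketch below summarizes replicate measurements (mean, sample standard deviation, relative standard deviation) and judges steady state from the least-squares drift over the final part of a time-on-stream series. The 5% drift limit is a hypothetical threshold chosen for illustration; the appropriate value depends on the system and should be reported.

```python
from statistics import mean, stdev


def replicate_summary(values):
    """Mean, sample standard deviation and relative standard deviation (%) of replicates."""
    if len(values) < 2:
        raise ValueError("need at least two replicate measurements")
    m, s = mean(values), stdev(values)
    return {"n": len(values), "mean": m, "std": s, "rsd_percent": 100.0 * s / m}


def is_steady_state(times_h, values, window_h, max_rel_drift=0.05):
    """Check drift over the final window of a time-on-stream series.

    The drift is the least-squares slope over the last `window_h` hours,
    multiplied by the window length and divided by the mean in that window.
    max_rel_drift is a hypothetical acceptance limit.
    """
    t_end = times_h[-1]
    pts = [(t, y) for t, y in zip(times_h, values) if t >= t_end - window_h]
    if len(pts) < 2:
        raise ValueError("window contains fewer than two points")
    ts = [t for t, _ in pts]
    ys = [y for _, y in pts]
    mt, my = mean(ts), mean(ys)
    slope = sum((t - mt) * (y - my) for t, y in pts) / sum((t - mt) ** 2 for t in ts)
    return abs(slope * window_h / my) <= max_rel_drift


# Hypothetical conversion data from three repeat runs and one time-on-stream series.
print(replicate_summary([0.41, 0.43, 0.40]))
print(is_steady_state([0, 1, 2, 3, 4, 5], [0.50, 0.45, 0.42, 0.40, 0.40, 0.40], window_h=2))
```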

Research Reagent Solutions

Table 4: Essential research reagents and materials for catalytic experiments

| Reagent/Material | Function/Purpose | Reporting Requirements |
|---|---|---|
| Reference Catalysts | Benchmarking performance, validating experimental setups | Source, composition, pretreatment history, performance data [31] |
| Sacrificial Reagents | Electron donors/acceptors in photocatalytic systems | Identity, concentration, purity, redox potentials [29] |
| Spectroscopic Standards | Calibration of characterization equipment | Source, method of use, reference values [32] |
| Actinometry Solutions | Quantification of photon flux in photoreactions | Composition, concentration, calibration method [29] |
| Internal Standards | Quantitative analysis in chromatography and spectroscopy | Identity, concentration, retention times/peaks [32] |
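Internal standards only improve quantification if the response factor is calibrated and applied within its calibrated range. The sketch below fits a relative response factor through the origin from a multi-point calibration (analyte-to-standard ratios) and refuses to extrapolate outside the calibrated area-ratio range. The linear, zero-intercept detector response is an assumption that should be verified for each analyte.

```python
def response_factor(mole_ratios, area_ratios):
    """Relative response factor from a multi-point internal-standard calibration.

    Fits area_ratio = RF * mole_ratio through the origin (least squares),
    where both ratios are analyte / internal standard.
    """
    num = sum(x * y for x, y in zip(mole_ratios, area_ratios))
    den = sum(x * x for x in mole_ratios)
    return num / den


def quantify(area_analyte, area_is, mol_is, rf, calibrated_area_ratios):
    """Moles of analyte from peak areas, refusing to extrapolate beyond calibration."""
    ratio = area_analyte / area_is
    if not min(calibrated_area_ratios) <= ratio <= max(calibrated_area_ratios):
        raise ValueError(f"area ratio {ratio:.3g} outside calibrated range; recalibrate")
    return ratio * mol_is / rf


# Hypothetical calibration and sample peak areas.
cal_mole_ratios = [0.5, 1.0, 2.0]
cal_area_ratios = [1.0, 2.0, 4.0]
rf = response_factor(cal_mole_ratios, cal_area_ratios)
print(quantify(300.0, 100.0, 0.5, rf, cal_area_ratios), "mol analyte")
```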

Workflow and Data Relationship Diagrams

Experimental Workflow for Catalytic Testing

Experimental workflow for catalytic testing showing the sequential steps from project conceptualization to knowledge graph generation.

Data Management and FAIR Principles Implementation

Data management workflow showing the implementation of FAIR principles from data generation to machine learning applications.

Quantitative performance metrics are the cornerstone of rigorous and reproducible catalysis research, providing the essential data required to compare catalysts, optimize processes, and establish robust structure-function relationships. Within the broader context of standardizing catalytic data reporting practices, the consistent application and accurate reporting of metrics like turnover frequency (TOF), selectivity, and mass balances are paramount. These metrics move catalyst evaluation beyond simple conversion and yield, offering deeper insights into the intrinsic activity of active sites and the efficiency of chemical transformations. The drive towards standardization, as highlighted in recent literature, is a response to the recognition that nuanced differences in synthesis and pretreatment can lead to significant variations in catalyst properties and performance [1]. This technical support guide provides troubleshooting advice and foundational methodologies to help researchers navigate the common challenges encountered when determining these critical quantitative metrics.

Core Metric Definitions and Standardized Reporting

A clear understanding of the core metrics and their proper calculation is the first step toward reliable data. The table below defines the key performance indicators every catalysis researcher should know.

Table 1: Fundamental Quantitative Metrics in Heterogeneous Catalysis

| Metric | Definition | Key Reporting Considerations |
|---|---|---|
| Turnover Frequency (TOF) | The number of catalytic turnover events per unit time per active site. It measures the intrinsic activity of a site [34]. | The method used to quantify the number of active sites (e.g., chemisorption, titration) must be explicitly stated, as different techniques can yield different TOF values [1]. |
| Selectivity | The fraction of converted reactant that forms a specific desired product. It measures the catalyst's ability to direct the reaction toward the target pathway. | Must be reported for all major products. Requires analytical methods (e.g., chromatography, spectroscopy) that can identify and quantify all products to close the mass balance. |
| Mass Balance | A measure of the conservation of mass, accounting for all reactants and products in a system. | A closed mass balance (typically 100% ± 5%) is crucial to confirm that all significant products have been identified and quantified, preventing false conclusions about activity or selectivity [35]. |
| Faradaic Efficiency (FE) | In electrocatalysis, the fraction of charge (electrons) directed toward the formation of a specific product [35]. | Requires precise measurement of charge passed and accurate quantification of products. Essential for evaluating the efficiency of electrochemical catalytic systems. |
| Energy Efficiency (EE) | The ratio of the Gibbs free energy stored in the products to the total energy input into the system [35]. | Must be distinguished from Faradaic efficiency and internal quantum yield. Critical for assessing the overall energy footprint and practical potential of a catalytic process. |
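The definitions in Table 1 translate directly into arithmetic. The sketch below implements them with hypothetical function names; the adsorption stoichiometry used to count sites, the reactant-per-product factor in the selectivity, and the electrical energy input (charge × cell voltage, ignoring heat or light inputs) are all assumptions the user must state. The example shows why the site-counting method must be reported: the same H₂ uptake read with two stoichiometries gives TOFs that differ by a factor of two.

```python
FARADAY = 96485.33212  # C/mol


def active_sites_from_chemisorption(uptake_mol, adsorbate_per_site):
    """Moles of surface sites from a chemisorption uptake.

    adsorbate_per_site is an assumed adsorption stoichiometry, e.g. 0.5 H2 per
    site for dissociative H2 adsorption with H:M = 1, or 1.0 for linear CO.
    """
    return uptake_mol / adsorbate_per_site


def turnover_frequency(rate_mol_s, sites_mol):
    """TOF in s^-1: moles converted per second per mole of active sites."""
    return rate_mol_s / sites_mol


def selectivity(mol_product, mol_reactant_converted, reactant_per_product=1.0):
    """Fraction of converted reactant ending up in one product.

    reactant_per_product: moles of reactant consumed per mole of product
    (for carbon-based selectivity, the ratio of carbon numbers).
    """
    return mol_product * reactant_per_product / mol_reactant_converted


def faradaic_efficiency(mol_product, electrons_per_product, charge_c):
    """Fraction of the passed charge that went into this product."""
    return electrons_per_product * mol_product * FARADAY / charge_c


def energy_efficiency(mol_product, delta_g_j_per_mol, charge_c, cell_voltage_v):
    """Gibbs energy stored in the product divided by electrical energy input (Q * V)."""
    return mol_product * delta_g_j_per_mol / (charge_c * cell_voltage_v)


# Same rate and H2 uptake, two stoichiometry assumptions (hypothetical numbers).
rate = 1e-5     # mol/s
uptake = 1e-6   # mol H2
for stoich in (0.5, 1.0):
    sites = active_sites_from_chemisorption(uptake, stoich)
    print(f"{stoich} H2/site -> TOF = {turnover_frequency(rate, sites):.1f} s^-1")
```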

Troubleshooting Common Experimental Issues

This section addresses specific, common problems researchers face when measuring catalytic performance, providing a systematic approach to diagnosis and resolution.

FAQ 1: My mass balance does not close. Where did the missing carbon (or mass) go?

A mass balance that does not close is a common and critical issue that invalidates calculations of conversion and selectivity. A systematic approach is required to identify the source of the loss.

Step-by-Step Diagnosis:

- Verify Analytical Calibration: Re-calibrate all analytical instruments (e.g., GC, HPLC) with fresh standard solutions for every suspected product and reactant. Ensure the calibration range encompasses the actual concentrations in your experiment.

- Identify All Products:

  - Check for Volatiles: Look for light gases (e.g., CO, CO₂, CH₄, H₂) that may not be detected by your primary analytical method. Use gas chromatography (GC) with multiple detectors (TCD, FID) to identify and quantify them.

  - Check for Condensables: Look for intermediate or high-boiling-point products that may be adsorbed on the catalyst surface or reactor walls. Perform a post-reaction extraction of the catalyst and reactor with an appropriate solvent and analyze the extract.

  - Check for Solids: In polymerization or reactions on solid supports, the product mass may be retained as a solid on the catalyst. Thermogravimetric analysis (TGA) of the spent catalyst can reveal this.

- Account for Carbon in the System:

  - Catalyst Coke: Carbonaceous deposits (coke) are a common sink for missing carbon, especially in high-temperature reactions. Perform elemental analysis (CHNS) or TGA on the spent catalyst to quantify coke formation.

  - System Flushing: Ensure your reactor flushing procedure between experiments is adequate to remove all products from previous runs.

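Solution: Work through these checks in order: confirm the calibration first, then search for unidentified volatile, condensable, and solid products, and finally quantify the carbon retained on the catalyst as coke.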
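The carbon accounting described above reduces to comparing moles of carbon in and out. The sketch below assumes that the outlet inventory includes unconverted reactant, that coke carbon comes from TGA or CHNS analysis of the spent catalyst, and that the acceptance window is the 100% ± 5% quoted in Table 1. The species and numbers are hypothetical.

```python
def carbon_balance(feed, outlet, coke_carbon_mol=0.0, tolerance=0.05):
    """Carbon-balance closure.

    feed, outlet: dicts of species -> (moles, carbon atoms per molecule);
        the outlet must include unconverted reactant.
    coke_carbon_mol: carbon retained on the catalyst (e.g. from TGA or CHNS).
    Returns (closure fraction, True if |closure - 1| <= tolerance).
    """
    carbon_in = sum(n * c for n, c in feed.values())
    carbon_out = sum(n * c for n, c in outlet.values()) + coke_carbon_mol
    closure = carbon_out / carbon_in
    return closure, abs(closure - 1.0) <= tolerance


# Hypothetical propane run: unreacted propane, propylene, and methane detected.
feed = {"C3H8": (1.0, 3)}
outlet = {"C3H8": (0.6, 3), "C3H6": (0.3, 3), "CH4": (0.15, 1)}
print(carbon_balance(feed, outlet, coke_carbon_mol=0.06))
```

Running the same data without the propylene entry drops the closure to 65%, which is the kind of gap that points back to the "Identify All Products" step.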

FAQ 2: My calculated Turnover Frequency (TOF) is inconsistent with literature values. What could be wrong?

Discrepancies in TOF often stem from differences in how the number of active sites is determined or from subtle experimental conditions.

Troubleshooting Checklist:

- Active Site Counting Method: The method for determining active sites (e.g., H₂ or CO chemisorption, titration) must be consistent with what is being compared. Different techniques and assumptions (e.g., adsorption stoichiometry) will yield different site counts and thus different TOFs [1]. Report your method in detail.

- Catalyst Contamination: Trace contaminants can poison a fraction of your active sites. For example, ppb-level exposure of Ni catalysts to H₂S can reduce rates by an order of magnitude [1]. Use high-purity gases and reagents, and be aware of contaminants in supports (e.g., S or Na in commercial Al₂O₃) [1].

- Transport Limitations: If the measured rate is limited by mass or heat transfer rather than by the intrinsic kinetics of the catalyst, the TOF calculated from it will underestimate the intrinsic TOF. Rule out internal and external diffusion limitations by varying the catalyst particle size (internal) and the stirring speed or flow rate at constant contact time (external); an unchanged rate indicates that such limitations are absent.

- Inconsistent Pretreatment: The catalyst's active state is highly sensitive to its pretreatment (calcination, reduction) conditions. Slight variations in temperature, heating rate, or atmosphere can create catalysts with different dispersions and activities. Report pretreatment protocols with full detail, including heating rates and gas space velocities [1].
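Besides the experimental tests above, transport limitations are often screened with the Weisz–Prater criterion (internal diffusion) and the Mears criterion (external mass transfer). The sketch below computes both dimensionless numbers from the observed rate. The Mears limit of 0.15 is the commonly used value; the Weisz–Prater limit depends on reaction order, so the 0.3 default here is an assumption, and estimates of the effective diffusivity and mass-transfer coefficient must come from the user.

```python
def weisz_prater(rate_obs, particle_density, particle_radius, d_eff, c_surface):
    """Weisz-Prater number for internal (pore) diffusion.

    rate_obs: observed rate, mol/(kg_cat s); particle_density: kg/m^3;
    particle_radius: m; d_eff: effective diffusivity, m^2/s;
    c_surface: reactant concentration at the particle surface, mol/m^3.
    """
    return rate_obs * particle_density * particle_radius ** 2 / (d_eff * c_surface)


def mears(rate_obs, bed_density, particle_radius, order, k_c, c_bulk):
    """Mears number for external (film) mass transfer.

    bed_density: catalyst bulk density in the bed, kg/m^3; order: reaction order;
    k_c: gas-solid mass-transfer coefficient, m/s; c_bulk: bulk concentration, mol/m^3.
    """
    return rate_obs * bed_density * particle_radius * order / (k_c * c_bulk)


def transport_free(wp, mr, wp_limit=0.3, mears_limit=0.15):
    """True when both criteria fall below their (rule-of-thumb) limits."""
    return wp < wp_limit and mr < mears_limit


# Hypothetical values for a 100 um catalyst powder.
wp = weisz_prater(0.01, 1000.0, 50e-6, 1e-6, 10.0)
mr = mears(0.01, 600.0, 50e-6, 1, 0.01, 10.0)
print(f"Weisz-Prater = {wp:.2e}, Mears = {mr:.2e}, transport-free: {transport_free(wp, mr)}")
```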

FAQ 3: How do I prove that my products are truly from the catalytic reaction and not from a side reaction or background process?

This is a fundamental question, particularly when working with novel catalysts or complex reaction networks.

Required Experimental Evidence:

- Appropriate Control Experiments: Always run control experiments under identical conditions without the catalyst, and/or with the support material alone. This identifies any contribution from homogeneous reactions or the support.

- Isotope Labelling: This is the gold standard for proving a reaction pathway. For example, use ¹³CO₂ to prove that carbon in a product originates from CO₂ fixation, or D₂ to trace hydrogenation pathways [35]. Analyze products using techniques like mass spectrometry to detect the isotope label.

- Stoichiometric and Kinetic Analysis: The reaction stoichiometry should make chemical sense. Monitor the reaction kinetics to ensure the product formation rate is consistent with the reactant consumption rate and the proposed mechanism.

- "Omics" for Biohybrid Systems: When working with material-microbe hybrid catalysts, advanced techniques such as transcriptomics, proteomics, and metabolomics are needed to confirm that the proposed metabolic processes are active and to rule out other pathways that could produce the same product [35].

The Scientist's Toolkit: Essential Reagents & Materials

The table below lists key materials and reagents frequently used in the synthesis and characterization of heterogeneous catalysts, along with critical considerations for their use to ensure reproducibility.

Table 2: Key Research Reagent Solutions for Catalyst Synthesis and Testing

| Item | Function | Troubleshooting Tips |
|---|---|---|
| Metal Salt Precursors | Source of the active metal phase (e.g., H₂PtCl₆, Ni(NO₃)₂). | Purity and Lot Number: Purity and even the supplier's lot number can critically impact reproducibility, especially in nanoparticle synthesis. Record and report this information [1]. |
| High-Surface-Area Supports | Carrier materials for deposited catalysts (e.g., Al₂O₃, SiO₂, TiO₂, C). | Impurity Profile: Commercial supports often contain impurities (e.g., S, Na in Al₂O₃) that can poison active sites. Specify supplier, type, and pre-treatment (e.g., washing) [1]. |
| High-Purity Gases | Used for pretreatment (reduction, calcination) and as reactants. | Contaminants: Trace O₂ in inert gases or ppb-level poisons (e.g., H₂S) in H₂ can alter catalyst performance. Use appropriate gas purifiers and report gas grades [1]. |
| Solvents | Medium for catalyst synthesis (e.g., impregnation) and reaction. | Purity and Water Content: Solvent purity can influence precursor speciation and deposition. For air- and moisture-sensitive procedures, use dry, degassed solvents. |
| Static Control Solutions | Used in electrophoretic deposition or to control interaction between precursors and supports. | Solution History: The age of the solution and atmospheric CO₂ absorption can change pH over time. Prepare fresh solutions and monitor pH during synthesis. |
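Recording precursor details is easiest when the same record drives the synthesis calculation. The sketch below computes the precursor mass needed for a target metal loading, with the supplier, lot number, and certified purity stored alongside. It assumes the loading is defined as the metal mass fraction of the final catalyst (metal plus support) and that ligands and counter-ions are removed on calcination; the class and field names, supplier, and lot number are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Precursor:
    """Precursor record; supplier and lot number are kept for reporting."""
    formula: str
    molar_mass: float        # g/mol of the salt as weighed (including hydrate water)
    metal_molar_mass: float  # g/mol of the metal
    metal_atoms: int = 1     # metal atoms per formula unit
    purity: float = 1.0      # mass fraction from the certificate of analysis
    supplier: str = ""
    lot_number: str = ""


def precursor_mass(p, support_mass_g, metal_loading_wt):
    """Mass of precursor (g) for a target metal loading.

    metal_loading_wt is the metal mass fraction of the final catalyst
    (metal + support), e.g. 0.05 for 5 wt%.
    """
    metal_mass = support_mass_g * metal_loading_wt / (1.0 - metal_loading_wt)
    mol_metal = metal_mass / p.metal_molar_mass
    return mol_metal / p.metal_atoms * p.molar_mass / p.purity


# 5 wt% Ni on 2.0 g of support from nickel nitrate hexahydrate (hypothetical supplier/lot).
ni_nitrate = Precursor("Ni(NO3)2·6H2O", 290.79, 58.693,
                       supplier="SupplierX", lot_number="LOT-0000")
print(f"{precursor_mass(ni_nitrate, 2.0, 0.05):.4f} g, lot {ni_nitrate.lot_number}")
```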

Workflow for Comprehensive Performance Evaluation

Establishing a complete and reliable picture of catalyst performance involves more than measuring a single rate. A comprehensive workflow integrates the metrics and troubleshooting points discussed above into a coherent process for any catalytic study, emphasizing the critical role of mass balance closure and appropriate controls in validating the data before TOF, selectivity, and efficiency are calculated.
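One way to make such a workflow explicit is a gate that must pass before any TOF, selectivity, or efficiency is reported. The sketch below is a hypothetical helper, not an established tool: it collects the diagnostics discussed in this section and lists every check that fails. The carbon-balance window (±5%) and the Mears limit (0.15) follow values used above; the maximum allowed blank contribution (5%) and the Weisz–Prater limit (0.3) are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RunDiagnostics:
    carbon_closure: float    # carbon out / carbon in
    conversion: float        # with catalyst
    blank_conversion: float  # same conditions, no catalyst
    weisz_prater: float
    mears: float


def validation_problems(d, closure_tol=0.05, max_blank_share=0.05,
                        wp_limit=0.3, mears_limit=0.15) -> List[str]:
    """Return a list of failed checks; an empty list means the run may be reported."""
    problems = []
    if abs(d.carbon_closure - 1.0) > closure_tol:
        problems.append("carbon balance not closed")
    if d.conversion <= 0:
        problems.append("no measurable conversion")
    elif d.blank_conversion / d.conversion > max_blank_share:
        problems.append("blank run contributes significantly to conversion")
    if d.weisz_prater >= wp_limit:
        problems.append("possible internal diffusion limitation")
    if d.mears >= mears_limit:
        problems.append("possible external mass-transfer limitation")
    return problems


# Hypothetical diagnostics for one run.
run = RunDiagnostics(carbon_closure=0.98, conversion=0.20, blank_conversion=0.002,
                     weisz_prater=0.01, mears=0.01)
print(validation_problems(run) or "all checks passed; compute TOF and selectivity")
```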

Frequently Asked Questions (FAQs)

Q1: What is the fundamental difference between in situ and operando characterization? In situ techniques probe materials under controlled, non-operational conditions (e.g., elevated temperature, applied voltage, presence of solvents), while operando techniques monitor changes under actual device operation, simultaneously linking the observed structural or chemical changes with measured performance data [36] [37]. Operando characterization is considered more powerful for establishing direct structure-function relationships under real working conditions.