The Sabatier Principle in ML-Driven Catalyst Screening: Accelerating Drug Discovery and Biomolecular Design

Abstract

This article explores the transformative integration of the Sabatier principle with machine learning (ML) for catalyst and biomolecule screening. Targeting researchers and drug development professionals, it begins by establishing the foundational link between the Sabatier principle's 'volcano curve' and catalytic activity. It then details methodological workflows for building predictive ML models, including feature engineering, descriptor selection, and data integration strategies. The guide addresses key challenges in model training, data scarcity, and performance optimization. Finally, it provides a critical framework for validating and comparing different ML approaches against traditional high-throughput experimentation. The conclusion synthesizes how this synergy creates a powerful, predictive pipeline for accelerating the discovery of novel catalysts and therapeutic agents.

Bridging Theory and Data: The Sabatier Principle as a Blueprint for ML in Catalysis

1. Introduction & Application Notes

The Sabatier principle posits that catalytic activity is maximized when the interaction strength between a catalyst surface and a reactant or intermediate is neither too strong nor too weak. This relationship is quantified via the "volcano curve," where activity is plotted against a descriptor, typically the adsorption free energy of a key intermediate. In modern catalyst discovery, particularly within machine learning (ML)-driven screening research, the Sabatier principle serves as a foundational physical constraint. It guides the generation of predictive models by defining the "optimal binding energy" target, enabling the rapid virtual screening of vast chemical spaces (from heterogeneous catalysts for renewable energy to enzyme mimetics in drug development) to identify candidates residing near the volcano peak.
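As a rough illustration of the volcano relationship described above, the sketch below uses a toy two-branch model in which log-activity falls off linearly on either side of an optimal binding free energy. The function name, the slope, the 0 eV optimum, and the candidate values are all illustrative assumptions, not fitted or measured quantities.

```python
def volcano_activity(dg, dg_opt=0.0, slope=1.0):
    """Toy two-branch volcano: activity (arbitrary log units) peaks at dg_opt.

    dg      : adsorption free energy of the key intermediate (eV)
    dg_opt  : assumed optimal binding free energy (eV), hypothetical value
    slope   : assumed decay of log-activity per eV away from the optimum
    """
    return -slope * abs(dg - dg_opt)


# Hypothetical candidates: (label, adsorption free energy in eV)
candidates = [("too strong", -0.45), ("near optimal", 0.05), ("too weak", 0.40)]

# Rank candidates by predicted activity, best first
ranked = sorted(candidates, key=lambda c: volcano_activity(c[1]), reverse=True)
for label, dg in ranked:
    print(f"{label:>12s}  dG = {dg:+.2f} eV  log-activity = {volcano_activity(dg):.2f}")
```

Real volcano branches generally have different slopes on the strong- and weak-binding sides; a single slope is used here only to keep the example short.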

2. Quantitative Data: Experimental & Computational Volcano Trends

Table 1: Experimental Volcano Peaks for Key Catalytic Reactions

| Reaction (Catalyst Class) | Key Intermediate Descriptor | Optimal ΔG (eV) | Peak Activity Metric | Reference Year |
|---|---|---|---|---|
| Hydrogen Evolution (Metals) | ΔG of H* (Hydrogen) | ~0 | Exchange Current Density (log j₀) | 2005 |
| Oxygen Reduction (Pt-alloys) | ΔG of OH* | ~0.1-0.2 | Activity at 0.9 V vs. RHE | 2007 |
| CO₂ Reduction to CO (Metals) | ΔG of COOH* | ~0.6 | CO Faradaic Efficiency | 2012 |
| Nitrogen Reduction (Metals) | ΔG of N₂H* | ~0.5 | Theoretical Onset Potential | 2017 |

Table 2: Common Descriptors for ML-Based Sabatier Screening

| Descriptor Type | Example | Computational Method | Role in ML Model |
|---|---|---|---|
| Electronic | d-band center, Valence electron count | DFT (VASP, Quantum ESPRESSO) | Feature input for activity prediction |
| Energetic | Adsorption free energy of X* (X=O, H, C) | DFT with solvation correction | Target or primary predictive output |
| Geometric | Coordination number, Nearest-neighbor distance | Structural optimization | Correlates with binding strength |
| Compositional | Elemental identity, Atomic radius | Material formula | Input for composition-property models |

3. Experimental Protocols

Protocol 1: DFT Calculation of Adsorption Free Energy for Volcano Descriptor

Objective: Compute the adsorption free energy (ΔG_ads) of a key intermediate (e.g., H*, O*, COOH*) on a catalyst surface.

- Structure Optimization: Use Density Functional Theory (DFT) code (e.g., VASP, Quantum ESPRESSO). Build the catalyst surface model (e.g., (2x2) slab, 4 layers). Optimize geometry until forces on atoms < 0.01 eV/Å.

- Adsorbate Placement: Place the intermediate at relevant surface sites (e.g., atop, bridge, hollow). Re-optimize the adsorbate-surface system.

- Energy Calculation: Calculate total energies:

  - E_slab: Energy of clean slab.

  - E_adsorbate+slab: Energy of slab with adsorbed intermediate.

  - E_ref: Reference energy of the adsorbate in the gas phase (e.g., ½ H₂ for H*).

- Free Energy Correction: Apply corrections: ΔG_ads = ΔE_ads + ΔE_ZPE - TΔS.

  - ΔE_ads = E_adsorbate+slab - E_slab - E_ref.

  - Calculate the zero-point energy change (ΔE_ZPE) and entropy change (ΔS) from vibrational frequency calculations.
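The free energy correction in this protocol can be sketched in a few lines. This is a minimal example under stated assumptions: the function names are hypothetical, vibrations are treated as harmonic, and the total energies and correction terms are placeholder numbers chosen only to resemble typical magnitudes for H*, not computed results.

```python
HC_EV_CM = 1.239841984e-4  # h*c in eV*cm, converts wavenumbers (cm^-1) to eV


def zero_point_energy(wavenumbers_cm1):
    """ZPE = 1/2 * sum(h*c*nu) for harmonic modes given in cm^-1; result in eV."""
    return 0.5 * HC_EV_CM * sum(wavenumbers_cm1)


def adsorption_free_energy(e_slab_ads, e_slab, e_ref, d_zpe, t_ds):
    """dG_ads = dE_ads + dZPE - T*dS, all terms in eV.

    d_zpe : ZPE(adsorbed) - ZPE(reference)
    t_ds  : T * (S_adsorbed - S_reference); usually negative for adsorption
    """
    d_e_ads = e_slab_ads - e_slab - e_ref
    return d_e_ads + d_zpe - t_ds


# Hypothetical total energies (eV) for H* on a metal slab; e_ref is 1/2 E(H2)
dg = adsorption_free_energy(
    e_slab_ads=-103.65, e_slab=-100.00, e_ref=-3.39, d_zpe=0.04, t_ds=-0.20
)
print(f"dG_H* = {dg:+.2f} eV")
```

In practice `d_zpe` would come from `zero_point_energy` applied to the computed vibrational modes of the adsorbed and reference states.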

Protocol 2: High-Throughput Electrochemical Validation for HER Catalysts

Objective: Experimentally measure activity of screened catalysts for Hydrogen Evolution Reaction (HER) to construct a volcano plot.

- Catalyst Ink Preparation: Combine 5 mg of catalyst powder with 30 µL of Nafion binder (5 wt%) and 1 mL of ethanol/isopropanol mix. Sonicate for 30 min to form a homogeneous ink.

- Electrode Preparation: Deposit 10 µL of ink onto a polished glassy carbon rotating disk electrode (RDE, 0.196 cm²). Dry under ambient conditions to form a thin, uniform film. Catalyst loading: ~0.25 mg/cm².

- Electrochemical Testing (3-electrode setup):

  - Electrolyte: 0.1 M HClO₄ or 0.5 M H₂SO₄ (deaerated with N₂/Ar).

  - Counter Electrode: Pt wire.

  - Reference Electrode: Reversible Hydrogen Electrode (RHE).

  - Protocol: Perform linear sweep voltammetry (LSV) from 0.1 to -0.3 V vs. RHE at 5 mV/s scan rate. Record iR-corrected current density (j).

- Activity Extraction: Extract the overpotential (η) at a current density of -10 mA/cm². Alternatively, extract the exchange current density (j₀) by fitting the Tafel equation (η = a + b log|j|) in the linear Tafel region and extrapolating to η = 0, which gives log j₀ = -a/b. Plot log(j₀) or η vs. computed ΔG_H* to construct the experimental volcano.
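The Tafel extraction step can be sketched as an ordinary least-squares fit. The function name and the synthetic data below are assumptions for illustration; in real use only points from the linear Tafel region should be passed in.

```python
import math


def fit_tafel(overpotentials_v, current_densities):
    """Least-squares fit of |eta| = a + b*log10|j|; returns (a, b, j0).

    j0 follows from extrapolating the fitted line to eta = 0: log10(j0) = -a/b.
    Units of j0 match the units of the supplied current densities.
    """
    x = [math.log10(abs(j)) for j in current_densities]
    y = [abs(eta) for eta in overpotentials_v]
    n = len(x)
    x_mean, y_mean = sum(x) / n, sum(y) / n
    sxx = sum((xi - x_mean) ** 2 for xi in x)
    sxy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    b = sxy / sxx              # Tafel slope (V per decade)
    a = y_mean - b * x_mean    # intercept (V)
    return a, b, 10 ** (-a / b)


# Synthetic Tafel-region data (hypothetical): eta in V vs. RHE, j in mA/cm^2
eta = [-0.12, -0.24, -0.36]
j = [-0.01, -0.1, -1.0]
a, b, j0 = fit_tafel(eta, j)
print(f"Tafel slope = {b * 1000:.0f} mV/dec, j0 = {j0:.2e} mA/cm^2")
```

Magnitudes of η and j are used so that the same routine works for cathodic sweeps, where both quantities are negative.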

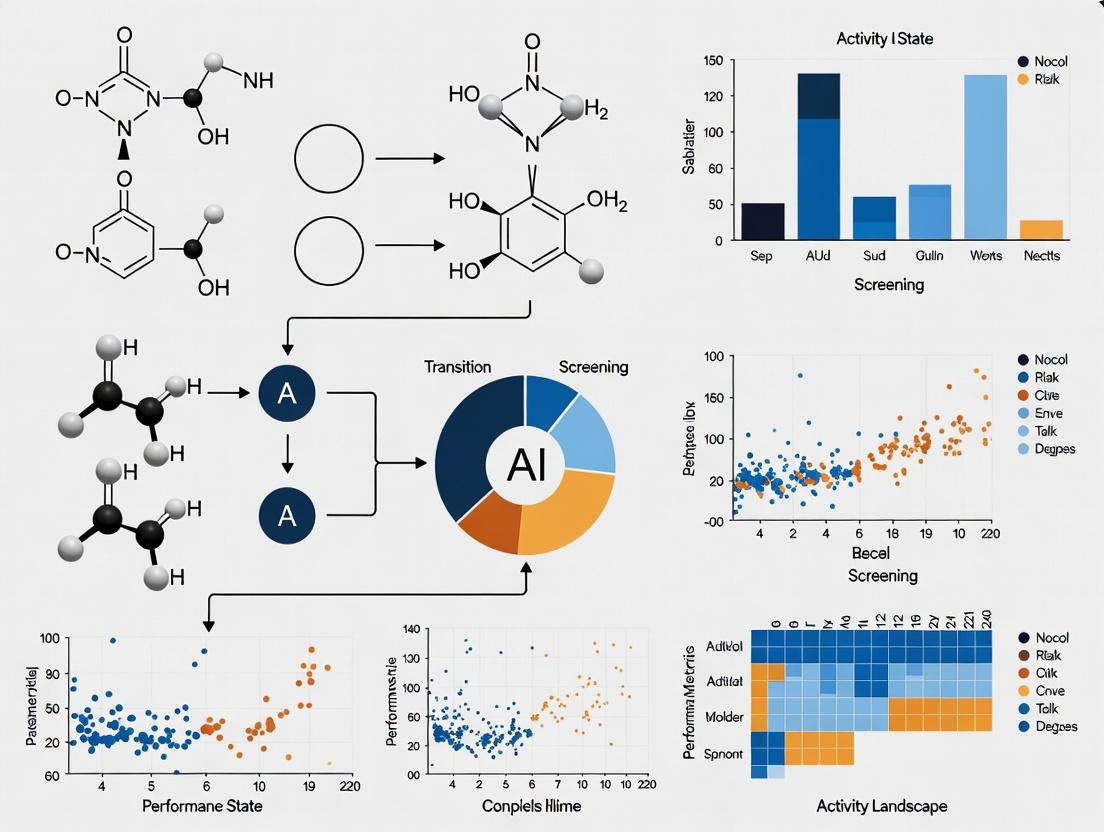

4. Visualizations

Diagram 1: Sabatier Principle Conceptual Flow

Diagram 2: ML Catalyst Screening Workflow

5. The Scientist's Toolkit: Research Reagent & Material Solutions

Table 3: Essential Toolkit for Sabatier-Principle Guided Catalyst Research

| Item / Reagent | Function / Application | Notes for Consistency |
|---|---|---|
| VASP / Quantum ESPRESSO | DFT Software for calculating adsorption energies and electronic descriptors. | Use consistent pseudopotentials & functional (e.g., RPBE). |
| Catalytic Materials Library (e.g., HiTMat) | Standardized, high-purity catalyst samples for experimental validation. | Ensures comparable results across studies. |
| Nafion Binder (5 wt%) | Ionomer for preparing catalyst inks for electrode fabrication. | Standard binder for proton-exchange media in electrochemistry. |
| Reversible Hydrogen Electrode (RHE) | Essential reference electrode for standardizing electrochemical potentials across different pH. | Crucial for accurate activity comparison. |
| Standardized Volcano Plot Datasets (e.g., CatHub) | Curated experimental/computational data for training and benchmarking ML models. | Provides a common benchmark for model performance. |
| High-Throughput Electrochemical Cell (e.g., rotating disk array) | Parallel activity testing of multiple catalyst candidates. | Accelerates experimental validation of ML predictions. |

The Sabatier principle, a cornerstone concept in heterogeneous catalysis, posits that optimal catalytic activity arises from an intermediate strength of reactant adsorption—neither too weak nor too strong. This creates a characteristic "volcano-shaped" relationship between adsorption energy (or a related descriptor) and catalytic activity. In the context of modern catalyst and drug discovery, this principle provides a powerful, simplified descriptor-to-activity framework that is inherently suitable for machine learning (ML) model development. The principle translates complex molecular interactions into a quantifiable, predictive landscape, moving research from high-throughput empirical observation to a rational, AI-driven predictive paradigm. This application note details protocols for integrating the Sabatier principle into ML pipelines for catalyst and binder screening.

Core Data & Quantitative Relationships

Table 1: Key Sabatier Descriptors and Correlated Activities in Catalysis

| Descriptor (Computational) | Typical Target Reaction | Optimal Range (eV) | Observed Activity Trend (Shape) | Common ML Target |
|---|---|---|---|---|
| CO Adsorption Energy (ΔE_CO) | Oxygen Reduction Reaction (ORR) | -0.8 to -0.6 | Volcano Peak at ~-0.7 eV | Log(Exchange Current Density, j₀) |
| O/OH Adsorption Energy (ΔE_O, ΔE_OH) | OER, ORR | ΔE_OH: 0.8 - 1.2 | Linear/Volcano | Overpotential (η) |
| d-band center (ε_d) | Hydrogenation, CO oxidation | -2.5 to -2.0 | Volcano | Turnover Frequency (TOF) |
| N₂ Adsorption Energy | Ammonia Synthesis | -0.5 to 0.0 | Volcano | Reaction Rate (mmol/g/h) |
| Drug Discovery Analog: Protein-Ligand Binding Affinity (ΔG) | Inhibitor Efficacy | -12 to -8 kcal/mol | Parabolic/Optimum | IC50 / Ki |

Table 2: Representative ML Dataset Structure for Sabatier-Based Screening

| Material/Compound ID | Descriptor 1 (ΔE_ads) | Descriptor 2 (ε_d) | Descriptor n (DFT) | Target Property (Activity/Selectivity) | Data Source |
|---|---|---|---|---|---|
| Pt(111) | -1.05 eV | -2.3 eV | ... | 10 mA/cm² @ 0.35 V | Computed/Exp. |
| Pd@AuCore | -0.68 eV | -2.1 eV | ... | TOF: 5.2 s⁻¹ | Computed |
| CandidateMOF001 | -0.75 eV | N/A | Pore Volume | CO₂ Capture: 4.2 mmol/g | High-Throughput Sim. |

Experimental & Computational Protocols

Protocol 3.1: High-Throughput Descriptor Calculation (DFT Workflow)

Objective: Generate consistent adsorption energy (ΔE_ads) data for ML training.

- System Preparation: Use Materials Project crystal structures or generate alloy/surface models via ASE (Atomic Simulation Environment). For organics, obtain 3D conformers from PubChem or generate them via RDKit.

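- DFT Calculation Setup (VASP/Quantum ESPRESSO):

  - Functional: RPBE-D3 (for adsorption).

  - Cutoff Energy: 400 eV (plane-wave).

  - k-point mesh: Use a mesh dense enough that N_k × a > 20 Å along each periodic direction (N_k k-points for a cell length a), and confirm that ΔE_ads is converged with respect to the mesh.

  - Convergence: Electronic ≤ 1e-5 eV; Ionic forces ≤ 0.02 eV/Å.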

- Adsorption Energy Calculation:

  - Optimize clean surface/model.

  - Optimize adsorbate structure in vacuum.

  - Optimize adsorbate-surface system.

  - Calculate: ΔE_ads = E_surface+adsorbate - E_surface - E_adsorbate.

- Data Curation: Store results in structured database (e.g., SQLite) with metadata (calculation parameters, slab thickness, coverage).
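The data curation step could look like the following sketch, which uses Python's built-in `sqlite3` module. The table name, column names, and the single record are hypothetical; the energies are placeholders rather than computed values.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # use a file path for a persistent database
conn.execute(
    """
    CREATE TABLE adsorption (
        system      TEXT,   -- surface label, e.g. 'Pt(111)'
        adsorbate   TEXT,
        e_total     REAL,   -- E(surface+adsorbate), eV
        e_surface   REAL,   -- E(clean surface), eV
        e_adsorbate REAL,   -- E(isolated adsorbate), eV
        functional  TEXT,
        cutoff_ev   REAL,
        n_layers    INTEGER,
        coverage_ml REAL
    )
    """
)

# Hypothetical record; energies are placeholders, not computed values
conn.execute(
    "INSERT INTO adsorption VALUES (?, ?, ?, ?, ?, ?, ?, ?, ?)",
    ("Pt(111)", "CO", -215.30, -199.10, -14.80, "RPBE-D3", 400.0, 4, 0.25),
)

# dE_ads = E(surface+adsorbate) - E(surface) - E(adsorbate), computed at query time
rows = conn.execute(
    "SELECT system, adsorbate, e_total - e_surface - e_adsorbate AS de_ads "
    "FROM adsorption"
).fetchall()
for system, adsorbate, de_ads in rows:
    print(f"{system} {adsorbate}: dE_ads = {de_ads:.2f} eV")
```

Storing the raw total energies alongside the calculation metadata, rather than only ΔE_ads, makes it possible to recompute descriptors later if a reference energy changes.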

Protocol 3.2: ML Model Training for Volcano Prediction

Objective: Train a model to predict activity from descriptors, learning the Sabatier "volcano" relationship.

- Data Collection & Curation: Assemble dataset from literature and Protocol 3.1. Include descriptors (ΔEads, εd, etc.) and experimental activities (TOF, overpotential).

- Feature Engineering: Consider derived combinations of descriptors (e.g., the difference ΔE_A - ΔE_B, motivated by scaling relations). Apply standard scaling (StandardScaler).

- Model Selection & Training:

  - Algorithm: Gaussian Process Regression (GPR) is well suited to small datasets because it captures non-linear trends and provides a predictive uncertainty.

  - Kernel: Use a combination of Matern kernel (for smooth variation) and WhiteKernel (for noise).

  - Training: Use 80% of data for training, 20% for testing. Optimize the kernel hyperparameters by maximizing the log marginal likelihood (gradient-based), and use cross-validation on the training split to compare kernel choices.

- Volcano Curve Prediction: The trained GPR model can predict the activity landscape across the descriptor range, identifying the peak (optimal binding strength).
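The training steps above can be sketched with scikit-learn as follows. This is a minimal example on synthetic data, not a reference implementation: the volcano-shaped dataset, the noise level, the kernel starting values, and the variable names are all assumptions.

```python
import numpy as np
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import ConstantKernel, Matern, WhiteKernel
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)

# Synthetic volcano (hypothetical data): activity peaks at a descriptor value of 0 eV
X = np.linspace(-1.0, 1.0, 40).reshape(-1, 1)
y = -np.abs(X.ravel()) + rng.normal(0.0, 0.02, size=X.shape[0])

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)

scaler = StandardScaler().fit(X_train)  # fit the scaler on training data only

kernel = ConstantKernel(1.0) * Matern(length_scale=1.0, nu=2.5) + WhiteKernel(noise_level=1e-2)
gpr = GaussianProcessRegressor(
    kernel=kernel, normalize_y=True, n_restarts_optimizer=5, random_state=0
)
# fit() tunes the kernel hyperparameters by maximizing the log marginal likelihood
gpr.fit(scaler.transform(X_train), y_train)

# Predict the activity landscape with uncertainty and locate the volcano peak
grid = np.linspace(-1.0, 1.0, 201).reshape(-1, 1)
mean, std = gpr.predict(scaler.transform(grid), return_std=True)
peak_x = float(grid[np.argmax(mean), 0])
test_mae = float(np.mean(np.abs(gpr.predict(scaler.transform(X_test)) - y_test)))
print(f"Predicted optimum at {peak_x:+.2f} eV, test MAE = {test_mae:.3f}")
```

The `std` array is what an active learning loop would later use to decide where additional calculations are most informative.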

Protocol 3.3: Active Learning Loop for Catalyst/Drug Candidate Screening

Objective: Iteratively improve model and identify optimal candidates with minimal data.

- Initialization: Train a preliminary model on an initial small dataset (e.g., 50 data points).

- Candidate Pool Generation: Generate a large virtual library (e.g., 10,000 materials/compounds) and compute cheap, preliminary descriptors (e.g., via lower-level DFT or fingerprint).

- Acquisition Function: Use an acquisition function (e.g., Upper Confidence Bound - UCB) on the model's prediction to select the next candidates for expensive calculation/experiment. UCB balances exploration (high uncertainty) and exploitation (high predicted activity).

- Iteration: The newly acquired high-fidelity data is added to the training set, and the model is retrained. Loop continues until a performance target is met or budget exhausted.
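The acquisition step of this loop can be illustrated with a small, dependency-free sketch of UCB selection. The function name, the `kappa` value, and the predicted means and uncertainties are hypothetical; in practice they would come from the trained surrogate model.

```python
def select_by_ucb(means, stds, kappa=2.0, n_select=2):
    """Return indices of the n_select candidates with the highest UCB score.

    UCB = predicted mean + kappa * predicted standard deviation;
    larger kappa favours exploration, smaller kappa favours exploitation.
    """
    scores = [m + kappa * s for m, s in zip(means, stds)]
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return order[:n_select]


# Hypothetical model output for a pool of four candidates
pred_mean = [0.10, 0.50, 0.30, 0.45]   # predicted activity (arbitrary units)
pred_std = [0.50, 0.01, 0.10, 0.02]    # predictive uncertainty

picked = select_by_ucb(pred_mean, pred_std, kappa=1.0, n_select=2)
print("Next candidates to evaluate:", picked)
```

With `kappa=1.0` the highly uncertain first candidate is selected alongside the best predicted one; setting `kappa=0.0` reduces the rule to pure exploitation.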

Visualization: Workflows & Logical Frameworks

Title: ML-Driven Sabatier Workflow from Data to Discovery

Title: Sabatier Principle Volcano Plot Concept

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Tools for Sabatier-ML Research

| Item/Category | Specific Example/Tool | Function in Research |
|---|---|---|
| Electronic Structure Software | VASP, Quantum ESPRESSO, Gaussian | Computes accurate adsorption energies (ΔE_ads) and electronic descriptors (d-band center). |
| Automation & High-Throughput | ASE (Atomic Simulation Environment), AFLOW, pymatgen | Automates DFT calculation setup, execution, and parsing for large material libraries. |
| Cheminformatics & Molecular Handling | RDKit, Open Babel | Generates molecular descriptors, conformers, and fingerprints for organic/drug candidate libraries. |
| Machine Learning Framework | scikit-learn, GPyTorch (for GPR), DeepChem | Provides algorithms for regression, classification, and specialized chemoinformatics models. |
| Active Learning & Uncertainty Quantification | modAL, BoTorch, custom UCB built on scikit-learn GPR uncertainties | Implements acquisition functions for optimal data point selection in iterative loops. |
| Data Management & Databases | PostgreSQL, SQLite, MongoDB | Stores structured calculation results, experimental data, and model inputs/outputs. |
| Visualization & Analysis | matplotlib, seaborn, plotly, pymatgen's analysis tools | Creates volcano plots, parity plots, and analyzes structure-property relationships. |
| Reference Catalysis Data | NIST Catalysis Database, CatApp (CAMD) | Provides benchmark experimental activity data and DFT-computed adsorption energies for model training and validation. |

Within the framework of Sabatier principle-based machine learning catalyst screening research, the identification and precise calculation of key catalytic descriptors form the cornerstone of rational catalyst design. This document provides detailed application notes and protocols for determining the electronic, geometric, and adsorption descriptors that govern catalytic activity, enabling high-throughput computational screening.

Application Notes

Electronic Structure Descriptors

Electronic descriptors quantify the distribution and energy of electrons in a catalyst, directly influencing its ability to donate or accept charge during adsorption and reaction.

- d-Band Center (ε_d): The average energy of the metal d-states relative to the Fermi level. A higher ε_d (closer to the Fermi level) generally correlates with stronger adsorbate binding.

- d-Band Width: Influenced by coordination number and lattice parameters; affects the sharpness of the density of states.

- Projected Density of States (PDOS): Describes the contribution of specific atomic orbitals to the electronic structure.

- Bader Charge: Quantifies charge transfer between the adsorbate and catalyst surface.

Surface Geometry Descriptors

Geometric descriptors define the atomic arrangement and coordination environment of active sites.

- Coordination Number (CN): The number of nearest neighbors of a surface atom. Lower CN often indicates higher reactivity.

- Generalized Coordination Number (GCN): Extends CN by considering the coordination of the neighbors themselves.

- Interatomic Distances & Strain: Measures deviation of surface bond lengths from their bulk values.
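As a worked example of the generalized coordination number, the sketch below evaluates it for an atop site on an ideal fcc(111) terrace. The function name is hypothetical, and the neighbor coordination numbers are entered by hand rather than derived from a structure file.

```python
def generalized_coordination_number(neighbor_cns, cn_max=12):
    """GCN of a site = sum of the coordination numbers of its nearest
    neighbours, divided by the maximum coordination number in the bulk
    (12 for an fcc atop site)."""
    return sum(neighbor_cns) / cn_max


# Atop site on an ideal fcc(111) terrace: 6 in-plane neighbours (CN = 9)
# and 3 subsurface neighbours (CN = 12)
gcn_terrace = generalized_coordination_number([9] * 6 + [12] * 3)
print(f"GCN, fcc(111) atop site: {gcn_terrace}")  # 90 / 12 = 7.5
```

Undercoordinated sites such as steps and kinks have neighbors with lower coordination numbers and therefore lower GCN values, which is why the descriptor separates sites that share the same conventional CN.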

Binding Strength Descriptors

Binding energy is the primary descriptor linking to the Sabatier principle, representing the strength of interaction between adsorbate and catalyst.

- Adsorption Energy (E_ads): The definitive descriptor, calculated as E_ads = E_slab+ads - E_slab - E_adsorbate.

- Transition State Energy (E_TS): The energy barrier for elementary reaction steps.

- Scaling Relations: Linear relationships between the adsorption energies of different intermediates (e.g., *C, *O, *OH), which constrain catalyst optimization.

Table 1: Key Catalytic Descriptors and Their Computational Determination

| Descriptor Category | Specific Descriptor | Typical Calculation Method | Relevance to Sabatier Principle |
|---|---|---|---|
| Electronic Structure | d-Band Center (ε_d) | First-principles DFT; Center of mass of d-projected DOS | Predicts trend in adsorbate binding strength. |
| Electronic Structure | Bader Charge Analysis | DFT + Bader partitioning algorithm | Quantifies charge transfer, indicating ionic character of bonds. |
| Surface Geometry | Generalized Coord. No. (GCN) | GCN(i) = Σ_j CN(j) / CN_max, summed over the nearest neighbors j of site i | Correlates with adsorption site reactivity across facets. |
| Binding Strength | Adsorption Energy (E_ads) | DFT total energy difference calculation (Eq. above) | Direct measure of binding strength; primary ML target. |
| Binding Strength | Linear Scaling Slope | Linear regression of E_ads for two intermediates across surfaces | Defines limits of catalyst optimization; key for descriptor reduction. |

Experimental Protocols

Protocol 1: DFT Calculation of Adsorption Energy & d-Band Center

Objective: To compute the adsorption energy of an intermediate (*OH) and the d-band center of the pristine catalyst surface.

Materials:

- High-performance computing cluster

- DFT software (e.g., VASP, Quantum ESPRESSO)

- Crystal structure files (.cif) for catalyst bulk

- Pseudopotentials

Procedure:

- Bulk Optimization: Optimize the bulk unit cell to obtain the equilibrium lattice constant. Use a k-point grid of at least 11x11x11.

- Surface Slab Creation:

  - Cleave the optimized bulk to create the desired Miller index surface (e.g., fcc(111)).

  - Build a slab with ≥ 4 atomic layers and a vacuum layer of ≥ 15 Å.

  - Fix the bottom 1-2 layers at bulk positions and adsorb on the top face only. Allow the top layers and adsorbate to relax.

- Surface Relaxation:

  - Perform a geometry optimization on the clean slab. Use a plane-wave cutoff of 500 eV and a k-point grid of 4x4x1. Convergence criteria: 0.01 eV/Å for forces.

  - Record the total energy (E_slab).

- Adsorption Configuration:

  - Place the adsorbate (e.g., *OH) at high-symmetry sites (top, bridge, hollow).

  - Optimize the geometry for each configuration. Identify the most stable site.

- Adsorption Energy Calculation:

  - Calculate the total energy of the optimized adsorbed system (E_slab+ads).

  - Calculate the energy of an isolated adsorbate molecule (E_adsorbate) in a large box.

  - Compute E_ads = E_slab+ads - E_slab - E_adsorbate.

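- d-Band Center Analysis:

  - From the relaxed clean slab calculation, extract the density of states (DOS).

  - Project the DOS onto the d-orbitals of the surface metal atom(s).

  - Calculate the d-band center as ε_d = ∫ E ρ_d(E) dE / ∫ ρ_d(E) dE, with E measured relative to the Fermi level. Here the integrals run from -∞ to the Fermi level (occupied states only); integrating over the full d-band is another common convention, so apply one range consistently across the dataset.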
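The d-band center integral can be evaluated numerically from a parsed d-projected DOS. The sketch below uses the trapezoidal rule on a hypothetical rectangular d-band; the function name and the optional `e_max` cutoff are assumptions, and parsing the DOS from DFT output files is not shown.

```python
def d_band_center(energies, d_dos, e_max=None):
    """First moment of the d-projected DOS via the trapezoidal rule.

    energies : energies relative to the Fermi level (eV), ascending
    d_dos    : d-projected DOS on the same grid
    e_max    : optional upper integration limit (eV); None uses the full grid
    """
    pts = [(e, r) for e, r in zip(energies, d_dos) if e_max is None or e <= e_max]
    num = den = 0.0
    for (e0, r0), (e1, r1) in zip(pts, pts[1:]):
        de = e1 - e0
        num += 0.5 * de * (e0 * r0 + e1 * r1)  # integral of E * rho_d(E)
        den += 0.5 * de * (r0 + r1)            # integral of rho_d(E)
    return num / den


# Hypothetical rectangular d-band spanning -4 eV to 0 eV (Fermi level at 0)
grid = [-4.0 + 0.01 * i for i in range(401)]
dos = [1.0] * len(grid)
print(f"d-band center = {d_band_center(grid, dos):.2f} eV")
```

Passing `e_max=0.0` restricts the integral to occupied states when the energy grid extends above the Fermi level.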

Protocol 2: High-Throughput Screening Workflow for ML

Objective: To systematically generate descriptor and target property data for training machine learning models.

Procedure:

- Catalyst Library Generation: Use pymatgen or ASE to generate a library of slab models (e.g., varying composition, facet, near-surface alloy structure).

- Automated DFT Setup & Submission: Use FireWorks or AiiDA to automate job creation (relaxation, static calculation, DOS) for all structures in the library.

- Automated Property Parsing: Upon job completion, use scripts to parse output files for:

  - Total energies (for E_ads).

  - Structural parameters (for GCN, interatomic distances).

  - DOSCAR files (for ε_d).

- Database Curation: Store all computed properties in a structured database (e.g., MongoDB, SQLite).

- Feature-Label Pair Creation: Assemble the database into a feature matrix (descriptors: ε_d, GCN, etc.) and target vector (E_ads for key reactions).

Mandatory Visualizations

Descriptor Integration for ML Catalyst Screening

High-Throughput Catalyst Screening Workflow

The Scientist's Toolkit

Table 2: Essential Research Reagent Solutions & Computational Tools

| Item Name | Type/Provider | Primary Function in Research |
|---|---|---|
| Vienna Ab initio Simulation Package (VASP) | DFT Software | Performs electronic structure calculations and determines total energies for surfaces and adsorbates. |
| Quantum ESPRESSO | DFT Software | Open-source alternative for first-principles modeling using plane waves and pseudopotentials. |
| Python Materials Genomics (pymatgen) | Python Library | Analyzes materials structures, generates surfaces, and manages high-throughput computational workflows. |
| Atomic Simulation Environment (ASE) | Python Library | Creates, manipulates, and analyzes atomistic simulations; interfaces with many DFT codes. |
| Bader Charge Analysis Code | Utility Program | Partitions electron density to assign charges to atoms, quantifying charge transfer. |
| Perdew-Burke-Ernzerhof (PBE) Functional | DFT Exchange-Correlation Functional | A standard GGA functional for calculating adsorption energies and structural properties. |
| RPBE Functional | DFT Exchange-Correlation Functional | Revised PBE functional that typically improves adsorption energy accuracy. |
| Projector-Augmented Wave (PAW) Pseudopotentials | DFT Input File | Describes electron-ion interactions, balancing accuracy and computational cost. |
| Materials Project Database | Online Database | Source of initial bulk crystal structures and experimental data for validation. |
| Computational Thermodynamics Databases (NIST, ATAT) | Data Source | Provides reference energies for gas-phase molecules essential for E_ads calculations. |

In the context of machine learning (ML) catalyst screening research, the Sabatier principle posits an optimal intermediate binding energy for catalytic activity. Computational and experimental data on catalytic properties and reaction energy landscapes form the critical training and validation datasets for predictive ML models. This application note details key data sources and protocols for acquiring this essential information.

Quantitative data from primary sources enable the construction of descriptors for binding energies, turnover frequencies (TOF), and activation barriers.

Table 1: Primary Databases for Catalytic Properties & Energy Landscapes

| Database Name | Data Type | Key Metrics Provided | Size/Scope | Access |
|---|---|---|---|---|
| Catalysis-Hub.org | Experimental & Computational | Reaction energies, activation barriers, surface energies | >100,000 data points for surface reactions | Public API, Web |
| NIST Catalyst Database (NCDB) | Experimental | Catalytic activity, selectivity, conditions | Thousands of heterogeneous catalyst entries | Public Web |
| Materials Project | Computational | Formation energies, band structures, adsorption energies | >150,000 materials with DFT data | Public API |
| CatApp | Computational (DFT) | Adsorption energies for simple molecules on metal surfaces | ~40,000 adsorption energies | Public Web |
| PubChem | Experimental (Biocatalysis) | Biochemical compound & reaction data | Millions of compounds | Public API |

Experimental Protocols for Data Generation

Protocol 3.1: Measuring Catalytic Turnover Frequency (TOF)

Objective: Determine the intrinsic activity of a solid heterogeneous catalyst.

Materials & Reagents:

- Catalyst sample (e.g., Pt/Al₂O₃)

- Reactant gas mixture (calibrated)

- Fixed-bed microreactor system

- Online Gas Chromatograph (GC) with TCD/FID

- Mass Flow Controllers (MFCs)

- Internal standard (e.g., Argon)

Procedure:

- Catalyst Pretreatment: Load 50-100 mg of catalyst (sieve fraction 250-355 µm) into reactor. Reduce in situ under H₂ flow (50 mL/min) at 300°C for 2 hours.

- Reaction Conditions: Set reactor temperature (e.g., 200°C) and pressure (1-10 bar). Set total flow rate to achieve a weight hourly space velocity (WHSV) giving <20% conversion to ensure differential reactor conditions.

- Data Acquisition: After 30 min stabilization, sample product stream via online GC every 10 min for 1 hour. Use internal standard for quantitative calibration.

- TOF Calculation: Calculate TOF as:

TOF = (F × X) / n, where F is the molar reactant flow rate (mol/s), X is the fractional conversion, and n is the number of active sites (mol) determined via H₂ chemisorption. The resulting TOF has units of s⁻¹.
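The TOF formula translates directly into a small helper. The sketch below is illustrative only: the function name, the guard on conversion (mirroring the <20% differential-reactor criterion from the procedure), and the example numbers are assumptions.

```python
def turnover_frequency(flow_mol_s, conversion, sites_mol, max_conversion=0.20):
    """TOF (s^-1) = F * X / n under differential-reactor conditions.

    flow_mol_s : molar reactant flow rate F (mol/s)
    conversion : fractional conversion X (0-1)
    sites_mol  : active sites n (mol), e.g. from H2 chemisorption
    """
    if conversion >= max_conversion:
        raise ValueError("conversion too high for the differential-reactor assumption")
    return flow_mol_s * conversion / sites_mol


# Hypothetical run: 1e-6 mol/s feed, 10% conversion, 2e-6 mol of active sites
tof = turnover_frequency(flow_mol_s=1.0e-6, conversion=0.10, sites_mol=2.0e-6)
print(f"TOF = {tof:.3f} s^-1")
```

Rejecting high-conversion points at this stage keeps transport- or equilibrium-limited measurements out of the ML training set.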

Protocol 3.2: Temperature-Programmed Desorption (TPD) for Adsorption Strength

Objective: Determine the binding energy/desorption kinetics of reactants/intermediates.

Procedure:

- Sample Preparation: Load 100 mg catalyst into quartz U-tube. Pretreat with He at 400°C.

- Adsorption: Cool to 50°C. Expose to 5% probe gas (e.g., CO, NH₃) in He for 30 min.

- Purging: Flush with pure He for 60 min to remove physisorbed species.

- Desorption: Heat at linear ramp rate (e.g., 10°C/min) to 800°C in He flow. Monitor desorbed species via mass spectrometer.

- Analysis: Peak temperature correlates with binding strength. Quantify by integrating MS signal.
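One common way to turn a TPD peak temperature into an approximate desorption energy is the Redhead equation, which is not part of the protocol above and is shown here only as a hedged sketch. It assumes first-order desorption and an assumed pre-exponential factor (1e13 s⁻¹ below); the function name and the 450 K peak are hypothetical.

```python
import math

R_GAS = 8.314462618  # gas constant, J/(mol*K)


def redhead_desorption_energy(t_peak_k, ramp_k_per_min, prefactor_s=1.0e13):
    """Redhead estimate of the desorption energy (kJ/mol), first-order desorption.

    E_d = R * T_p * [ln(nu * T_p / beta) - 3.64]
    prefactor_s (nu) is an assumed attempt frequency; 1e13 s^-1 is a common default.
    """
    beta = ramp_k_per_min / 60.0  # heating rate in K/s
    e_d = R_GAS * t_peak_k * (math.log(prefactor_s * t_peak_k / beta) - 3.64)
    return e_d / 1000.0


# Hypothetical desorption peak at 450 K with the 10 degC/min ramp from the protocol
e_d = redhead_desorption_energy(t_peak_k=450.0, ramp_k_per_min=10.0)
print(f"E_d ~ {e_d:.0f} kJ/mol ({e_d / 96.485:.2f} eV)")
```

Because the result depends on the assumed prefactor, values obtained this way are best used for ranking catalysts measured under identical conditions rather than as absolute binding energies.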

Computational Data Generation Protocol

Protocol 4.1: DFT Calculation of Reaction Energy Landscape

Objective: Compute elementary step energies for a catalytic cycle.

Software: VASP, Quantum ESPRESSO, or CP2K.

Workflow:

- Surface Model: Build slab model (e.g., 3x3 unit cell, 4 layers) with vacuum >15 Å.

- Geometry Optimization: Optimize slab and adsorbate structures until forces <0.02 eV/Å.

- Energy Calculations:

  - a. Calculate energy of clean slab (E_slab).

  - b. Calculate energy of slab with adsorbate(s) in initial, transition, and final states (E_total).

  - c. Calculate adsorption/reaction energy: ΔE = E_total - E_slab - ΣE_gas molecules.

- Transition State Search: Use Nudged Elastic Band (NEB) or Dimer method.

- Vibrational Analysis: Confirm transition state (one imaginary frequency).

Visualization: Data Integration for ML Screening

Diagram Title: ML Catalyst Screening Data Pipeline

The Scientist's Toolkit: Essential Research Reagents & Materials

Table 2: Key Reagent Solutions & Materials for Catalytic Data Generation

| Item | Function/Application | Example/Notes |
|---|---|---|
| Standard Catalyst Reference | Benchmarking reactor performance & validating setups | EuroPt-1 (Pt/SiO₂), NIST RM 8892 (hydrotreating catalyst) |
| Calibration Gas Mixtures | Quantitative analysis of reaction products via GC/MS | Certified 1% CO/H₂, 1000 ppm CH₄ in He, multi-component alkane standards |
| Probe Molecules for TPD/TPR | Characterizing acid/base sites & reducibility | NH₃ (acidity), CO₂ (basicity), H₂ (metal dispersion) |
| DFT-Compatible Pseudopotentials | Accurate electronic structure calculations | Projector Augmented-Wave (PAW) datasets supplied with the DFT code (e.g., the VASP PAW library) |
| High-Purity Gases & Precursors | Synthesis of well-defined catalyst materials | 99.999% H₂, O₂; Metal-organic precursors (e.g., Pt(acac)₂ for atomic layer deposition) |
| Porous Support Materials | Catalyst carrier with defined properties | γ-Al₂O₃ (high surface area), SiO₂ (inert), Zeolites (e.g., H-ZSM-5 for acidity) |

Application Notes

Catalyst discovery for reactions governed by the Sabatier principle requires optimizing the binding energy of key intermediates. Machine Learning (ML) accelerates the screening of material spaces by learning the structure-activity relationship. The choice of ML paradigm is dictated by data availability and the exploration-exploitation balance.

- Supervised Learning is employed when a substantial dataset of known catalyst compositions and their associated performance metrics (e.g., turnover frequency, binding energy) exists. Models learn to predict properties for new candidates.

- Unsupervised Learning is crucial for analyzing unlabeled data, identifying inherent clusters of similar materials, or reducing the dimensionality of complex feature spaces to reveal patterns not dictated by a target variable.

- Active Learning closes the loop between prediction and experiment. An initial model guides the selection of the most informative candidates for subsequent simulation or lab testing, optimizing the resource-intensive steps in the discovery pipeline.

The integration of these paradigms within a thesis on Sabatier-principle-driven screening creates a robust, iterative framework for moving from high-throughput virtual screening to validated catalytic leads.

Data Presentation: Comparative Performance of ML Paradigms in Catalyst Screening

Table 1: Summary of ML Paradigms for Catalyst Discovery

| Paradigm | Primary Use Case | Typical Data Requirement | Key Advantage | Common Challenge | Example Performance Metric (Reported Range*) |
|---|---|---|---|---|---|
| Supervised | Property prediction, Regression/Classification | Large labeled dataset (>10^3 samples) | High predictive accuracy within training domain | Requires expensive-to-acquire labeled data | Mean Absolute Error (MAE) on adsorption energy: 0.05 - 0.15 eV |
| Unsupervised | Data exploration, Dimensionality reduction | Unlabeled data (e.g., structural descriptors) | Reveals hidden patterns without prior labels | Results can be difficult to interpret directly | Cluster purity (e.g., >85% for distinct active sites) |
| Active Learning | Optimal experiment design, Sequential learning | Initial small labeled set + ability to query | Maximizes information gain per experiment | Performance dependent on acquisition function | Reduction in samples needed to reach target error: 60-80% |

*Performance metrics are synthesized from recent literature (2023-2024) on transition metal and alloy catalyst screening for CO₂ reduction and hydrogen evolution.

Experimental Protocols

Protocol 3.1: Supervised Learning Workflow for Adsorption Energy Prediction

Objective: Train a model to predict the adsorption energy of *O or *C intermediates on bimetallic surfaces.

- Data Curation: Assemble a dataset from Density Functional Theory (DFT) repositories. Features may include elemental properties (electronegativity, d-band center), structural features (coordination number), and composition.

- Feature Engineering: Standardize features. Consider adding pairwise interaction terms or using domain-informed descriptors like generalized coordination numbers.

- Model Training: Split data (70/15/15 for train/validation/test). Train a Gradient Boosting Regressor (e.g., XGBoost) or a Graph Neural Network (for structural data). Use mean squared error (MSE) as the loss function.

- Validation & Testing: Tune hyperparameters against the validation split, then evaluate once on the held-out test set. Report Mean Absolute Error (MAE) and R² score on the test set. Perform error analysis on outliers.
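The data split and the reported metrics from this workflow can be sketched without any ML library. The example below covers only the 70/15/15 split and the MAE and R² calculations; it does not train XGBoost or a graph neural network. Function names and the example energies are hypothetical.

```python
import random


def split_indices(n, fractions=(0.70, 0.15, 0.15), seed=0):
    """Shuffle range(n) and cut it into train/validation/test index lists."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    n_train = int(round(fractions[0] * n))
    n_val = int(round(fractions[1] * n))
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]


def mean_absolute_error(y_true, y_pred):
    """Average absolute deviation between reference and predicted values."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_t = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


train, val, test = split_indices(200)
print(len(train), len(val), len(test))

# Hypothetical DFT vs. predicted adsorption energies (eV) on a test set
y_dft = [-1.20, -0.80, -0.40, 0.10]
y_ml = [-1.10, -0.85, -0.35, 0.00]
print(f"MAE = {mean_absolute_error(y_dft, y_ml):.3f} eV, R2 = {r2_score(y_dft, y_ml):.3f}")
```

For datasets containing several facets or coverages of the same material, splitting by material rather than by row is usually the safer choice, since a random row split can leak near-duplicates into the test set.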

Protocol 3.2: Unsupervised Dimensionality Reduction for Catalyst Space Mapping

Objective: Visualize and cluster a library of porous organic polymers (POPs) for photocatalysis based on textual and structural descriptors.

- Descriptor Calculation: Compute molecular descriptors (e.g., topological, electronic) for each POP building unit from SMILES strings using RDKit.

- Dimensionality Reduction: Apply Uniform Manifold Approximation and Projection (UMAP) to reduce the descriptors to 2-3 embedding dimensions. Use a cosine metric for similarity.

- Clustering: Apply HDBSCAN clustering on the reduced dimensions to identify groups of materials with similar inherent properties.

- Analysis: Correlate clusters with known performance data (if available) to label regions of the latent space as potentially high-performing.

Protocol 3.3: Active Learning Cycle for Optimal Catalyst Screening

Objective: Iteratively select the most promising catalyst candidates for DFT validation to find materials with optimal H adsorption energy (ΔG_H* ≈ 0 eV).

- Initialization: Train a preliminary supervised model (e.g., Gaussian Process Regression) on a small seed dataset (50-100 DFT calculations).

- Acquisition: Use an acquisition function (e.g., Expected Improvement) on a large, unlabeled candidate pool (10^4 materials) to select the N candidates (e.g., N=10) where the model is most uncertain or predicts performance near the ideal.

- Evaluation: Perform DFT calculations to obtain the true ΔG_H* for the N acquired candidates.

- Iteration: Add the newly labeled data to the training set. Retrain the model and repeat the acquisition and evaluation steps for a set number of cycles or until a performance target is met.
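The cycle above can be sketched end to end with a small NumPy Gaussian process. Everything specific here is an assumption made for illustration: `toy_dft` is a hypothetical stand-in for a DFT calculation, the descriptor is one-dimensional, and the acquisition rule is a simple lower confidence bound on |ΔG_H*| (swapped in for Expected Improvement to keep the sketch short).

```python
import numpy as np

def rbf_kernel(a, b, length_scale=0.5):
    """Squared-exponential kernel between two 1-D descriptor arrays."""
    d = a[:, None] - b[None, :]
    return np.exp(-0.5 * (d / length_scale) ** 2)

def gp_posterior(x_train, y_train, x_query, noise=1e-4):
    """Posterior mean and standard deviation of a zero-mean GP."""
    k_tt = rbf_kernel(x_train, x_train) + noise * np.eye(len(x_train))
    k_qt = rbf_kernel(x_query, x_train)
    mean = k_qt @ np.linalg.solve(k_tt, y_train)
    v = np.linalg.solve(k_tt, k_qt.T)
    var = 1.0 - np.sum(k_qt * v.T, axis=1)
    return mean, np.sqrt(np.clip(var, 0.0, None))

def toy_dft(x):
    """Hypothetical oracle standing in for a DFT ΔG_H* calculation (eV)."""
    return 0.8 * np.sin(2.0 * x) - 0.3 * x

rng = np.random.default_rng(0)
pool = np.linspace(-2.0, 2.0, 400)  # descriptor values of the candidate pool
labeled = [int(i) for i in rng.choice(len(pool), size=8, replace=False)]
history = [float(np.min(np.abs(toy_dft(pool[labeled]))))]

for cycle in range(5):
    x_l = pool[labeled]
    mean, std = gp_posterior(x_l, toy_dft(x_l), pool)
    # Optimistic distance to the 0 eV target: a small |mean| or a large
    # uncertainty both make a candidate attractive.
    score = np.abs(mean) - 1.0 * std
    score[labeled] = np.inf          # never re-select labeled candidates
    batch = np.argsort(score)[:3]    # N = 3 acquisitions per cycle
    labeled.extend(int(i) for i in batch)
    history.append(float(np.min(np.abs(toy_dft(pool[labeled])))))
```

`history` tracks the best |ΔG_H*| found so far after each cycle; in a real campaign the oracle call is the expensive step, so labels would be cached rather than recomputed.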

Visualizations

Title: Integrated ML Workflow for Catalyst Discovery

Title: Active Learning Loop for Optimal Screening

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Tools & Resources for ML-Driven Catalyst Discovery

| Item | Category | Function/Description | Example/Provider |

|---|---|---|---|

| DFT Calculation Software | Computational Chemistry | Provides the fundamental labeled data (energies, properties) for training models. | VASP, Quantum ESPRESSO, CP2K |

| Catalyst Databases | Data Source | Curated repositories of calculated or experimental material properties for initial training. | CatApp, NOMAD, Materials Project, Catalysis-Hub |

| RDKit | Cheminformatics | Open-source toolkit for computing molecular descriptors and fingerprints from chemical structures. | www.rdkit.org |

| DScribe | Material Descriptors | Library for creating atomistic descriptors (e.g., SOAP, MBTR) for inorganic surfaces and bulk materials. | https://singroup.github.io/dscribe/ |

| scikit-learn | ML Library | Core Python library for implementing supervised/unsupervised models and standard ML workflows. | https://scikit-learn.org |

| Atomistic Graph Neural Networks | Advanced ML Models | Specialized neural networks (GNNs) that operate directly on atomic graphs for high-fidelity prediction. | MEGNet, SchNet, CHGNet |

| Gaussian Process Regression | Probabilistic Model | A key model for Active Learning due to its native uncertainty quantification capability. | GPy, scikit-learn, GPflow |

| Jupyter Notebook / Lab | Development Environment | Interactive environment for data analysis, visualization, and prototyping ML pipelines. | Project Jupyter |

Building the Predictive Pipeline: ML Models and Workflows for Catalyst Screening

This application note details the critical step of feature engineering within a broader machine learning (ML) pipeline for catalyst screening based on the Sabatier principle. The Sabatier principle posits that optimal catalysts bind reaction intermediates neither too strongly nor too weakly. Our thesis research aims to operationalize this principle by using ML models to predict catalytic activity (e.g., turnover frequency, overpotential) or adsorption energies (ΔE_ads) of key intermediates, enabling high-throughput virtual screening of materials. The accuracy and generalizability of these models are fundamentally dependent on the quality and relevance of the input numerical representations—descriptors—derived from Density Functional Theory (DFT) calculations and material composition.

Core Descriptor Categories & Quantitative Data

Descriptors are engineered features that quantitatively capture material properties influencing adsorption and catalysis. The following table summarizes primary descriptor categories.

Table 1: Categories of Catalytic Material Descriptors Derived from DFT

| Descriptor Category | Specific Examples | Physical/Chemical Interpretation | Typical Computation Source |

|---|---|---|---|

| Electronic Structure | d-band center (ε_d), d-band width, Bader charge, Valence band maximum, Conduction band minimum | Reactivity trends, electron donation/acceptance capability, correlation with adsorption strength (e.g., d-band model). | Projected Density of States (PDOS), Electronic density analysis. |

| Geometric/Structural | Coordination number, Bond lengths, Lattice parameters, Nearest-neighbor distances, Surface energy. | Exposure of active sites, strain effects, surface stability. | Optimized DFT geometry (bulk, slab, cluster). |

| Elemental & Compositional | Atomic number, Atomic radius, Electronegativity, Valence electron count, Core ionization energy. | Intrinsic elemental properties influencing bonding. Often used in "featureless" models. | Periodic table, tabulated data. |

| Thermodynamic | Formation energy, Adsorption energy of probe species (H*, O*, CO*), Surface energy. | Stability of material and adsorbed intermediates, direct Sabatier principle input. | DFT total energy calculations. |

| Combined/Advanced | O p-band center, Generalized Coordination Number (CN_avg), Smooth Overlap of Atomic Positions (SOAP) descriptors. | Captures complex local chemical environments beyond simple geometric rules. | Derived from geometric/electronic analysis. |

Table 2: Example DFT-Calculated Descriptor Values for Transition Metal Surfaces

| Metal Surface | d-band center (ε_d) [eV] | H Adsorption Energy (ΔE_H*) [eV] | Generalized Coordination Number | Surface Energy [J/m²] |

|---|---|---|---|---|

| Pt(111) | -2.5 | -0.45 | 7.5 | ~1.2 |

| Cu(111) | -3.1 | -0.25 | 7.5 | ~1.5 |

| Ni(111) | -1.3 | -0.55 | 7.5 | ~1.8 |

| Ru(0001) | -1.8 | -0.60 | 7.3 | ~2.5 |

Application Notes & Protocols

Protocol 3.1: DFT Workflow for Generating Primary Descriptors

Objective: To perform DFT calculations on a catalytic surface (e.g., fcc(111) slab) to obtain total energies and electronic structures necessary for computing descriptors.

Materials & Software:

- DFT Code: VASP, Quantum ESPRESSO, CP2K.

- Pre/Post-processing: ASE (Atomic Simulation Environment), Pymatgen.

- Computational Resources: HPC cluster.

Procedure:

- System Construction:

- Build a periodic slab model from the bulk crystal structure. Use a minimum of 3-5 atomic layers.

- Include a vacuum layer of ≥ 15 Å in the z-direction to separate periodic images.

- For the surface model, fix the bottom 1-2 layers at their bulk positions and allow the top layers to relax.

- DFT Calculation Parameters:

- Functional: Select a functional suitable for your system (e.g., PBE for general metals, RPBE for adsorption, HSE06 for band gaps).

- Pseudopotentials/PAW: Use projector-augmented wave (PAW) potentials appropriate for the chosen functional.

- Plane-wave cutoff: Set energy cutoff (e.g., 500 eV for PBE).

- k-points: Use a Monkhorst-Pack grid (e.g., 4x4x1 for surface relaxation, denser for DOS).

- Convergence: Electronic step convergence ~1e-6 eV; ionic relaxation force convergence < 0.02 eV/Å.

- Calculation Sequence:

- Bulk Optimization: Optimize bulk lattice constant.

- Slab Relaxation: Relax the clean slab model using the optimized lattice constant.

- Adsorbate Calculation: Place the adsorbate (e.g., H, C, O, OH) on various high-symmetry sites (top, bridge, hollow) of the relaxed slab. Relax the adsorbate and the top slab layers.

- Electronic Analysis: Perform a static calculation on the relaxed structures to obtain the Density of States (DOS), specifically the projected DOS (PDOS) onto the d-orbitals of the surface atoms.

- Descriptor Extraction (Post-Processing):

- Adsorption Energy: ΔE_ads = E(slab+adsorbate) - E(slab) - E(adsorbate, gas). Use a consistent gas-phase reference (e.g., ½ E(H2) for H) and correct gas-phase molecule energies where needed.

- d-band Center: From the d-PDOS ρ_d(E), compute ε_d = ∫ E ρ_d(E) dE / ∫ ρ_d(E) dE, with E measured relative to the Fermi level. Integrate over a relevant energy range around the Fermi level.

- Bader Charge: Use the Bader partitioning scheme on the charge density file to compute atomic charges.

- Geometric Descriptors: Use ASE or Pymatgen to compute bond lengths and coordination numbers from the relaxed geometry.
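As a rough illustration of the first two extraction steps, the sketch below computes an adsorption energy and a d-band center with NumPy. The function names, the integration window, the Gaussian PDOS, and the total energies are all made up for the example; real inputs would come from the DFT output files.

```python
import numpy as np

def adsorption_energy(e_slab_ads, e_slab, e_gas_ref):
    """ΔE_ads = E(slab+adsorbate) - E(slab) - E(gas-phase reference), in eV."""
    return e_slab_ads - e_slab - e_gas_ref

def d_band_center(energies, pdos_d, e_min=-10.0, e_max=5.0):
    """First moment of the d-projected DOS over [e_min, e_max].

    `energies` must be given relative to the Fermi level (eV).
    """
    energies = np.asarray(energies, dtype=float)
    pdos_d = np.asarray(pdos_d, dtype=float)
    mask = (energies >= e_min) & (energies <= e_max)
    e, rho = energies[mask], pdos_d[mask]
    de = np.diff(e)
    # Trapezoidal rule written out explicitly for numerator and denominator.
    numerator = np.sum(0.5 * (e[1:] * rho[1:] + e[:-1] * rho[:-1]) * de)
    denominator = np.sum(0.5 * (rho[1:] + rho[:-1]) * de)
    return float(numerator / denominator)

# Synthetic d-PDOS: a Gaussian band centred at -2.0 eV (illustrative only).
grid = np.linspace(-10.0, 5.0, 3001)
pdos = np.exp(-0.5 * ((grid + 2.0) / 0.8) ** 2)
eps_d = d_band_center(grid, pdos)

# H adsorption referenced to 1/2 E(H2); the total energies are invented.
dE_H = adsorption_energy(-105.30, -101.50, 0.5 * (-6.80))
```

The integration window matters for real PDOS data, which has tails and sp-contamination, so it is worth reporting the chosen limits alongside ε_d.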

Protocol 3.2: Feature Engineering Pipeline for ML Input

Objective: To transform raw DFT outputs into a curated set of descriptors for ML training.

Materials & Software:

- Python libraries: NumPy, Pandas, Scikit-learn, Pymatgen, Matplotlib.

- Data: CSV/JSON files containing raw DFT results.

Procedure:

- Data Aggregation: Compile all DFT-calculated properties (total energies, adsorption energies, atomic charges) into a structured DataFrame.

- Descriptor Calculation:

- Compute derived descriptors: e.g., Average d-band center for multi-element surfaces, strain (Δa/a), generalized coordination number.

- Incorporate elemental features (electronegativity, group number) from tabulated data.

- Feature Selection & Reduction:

- Correlation Analysis: Remove features with very high mutual correlation (e.g., |r| > 0.95) to reduce multicollinearity.

- Univariate Analysis: Use statistical tests (e.g., f_regression in scikit-learn) to rank features by their relationship with the target variable (e.g., ΔE_ads).

- Dimensionality Reduction (Optional): Apply Principal Component Analysis (PCA) to create orthogonal feature sets if the number of features is very large.

- Data Splitting & Scaling:

- Split data into training, validation, and test sets (e.g., 70/15/15) using stratified sampling if dealing with different material classes.

- Standardize features with StandardScaler (zero mean, unit variance), fitting it on the training set only, then apply the same transformation to the validation/test sets.
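The correlation filter and the train-only scaling can be sketched with plain NumPy as follows. The helper names and the synthetic feature matrix are assumptions for illustration; the manual scaling mirrors what a standard scaler does, and the correlation filter is computed on the training rows only so that test data does not influence feature selection.

```python
import numpy as np

def drop_correlated(X, names, threshold=0.95):
    """Greedily keep features whose |Pearson r| with all kept ones is <= threshold."""
    corr = np.abs(np.corrcoef(X, rowvar=False))
    keep = []
    for j in range(X.shape[1]):
        if all(corr[j, k] <= threshold for k in keep):
            keep.append(j)
    return X[:, keep], [names[j] for j in keep]

def standardize(train, *others):
    """Scale with training-set mean/std only, then reuse them on other splits."""
    mu = train.mean(axis=0)
    sigma = train.std(axis=0)
    sigma[sigma == 0.0] = 1.0  # guard against constant features
    return [(part - mu) / sigma for part in (train, *others)]

# Synthetic descriptors: the second column nearly duplicates the first.
rng = np.random.default_rng(1)
d_band = rng.normal(-2.0, 0.5, size=100)
X = np.column_stack([
    d_band,
    1.01 * d_band + rng.normal(0.0, 1e-3, size=100),
    rng.normal(7.0, 0.5, size=100),
])
names = ["d_band_center", "d_band_center_copy", "gen_coord_number"]

X_train, X_test = X[:80], X[80:]
X_train_sel, kept = drop_correlated(X_train, names)
X_test_sel = X_test[:, [names.index(n) for n in kept]]
X_train_std, X_test_std = standardize(X_train_sel, X_test_sel)
```

The test split ends up close to, but not exactly at, zero mean and unit variance, which is the expected behavior when the scaler is fitted on training data alone.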

Visualizations

Title: From DFT to Sabatier Prediction via Descriptors

Title: Descriptor Role in Sabatier ML Thesis

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Computational Tools & Resources for Feature Engineering

| Item / Software | Category | Primary Function in Descriptor Engineering |

|---|---|---|

| VASP / Quantum ESPRESSO | DFT Code | Performs first-principles calculations to obtain total energies, electronic structures, and relaxed geometries—the raw data source. |

| Atomic Simulation Env. (ASE) | Python Library | Provides tools to build, manipulate, run, and analyze atomistic simulations. Crucial for workflow automation and geometry analysis. |

| Pymatgen | Python Library | Offers robust capabilities for crystal structure analysis, materials project data access, and computing numerous structural/electronic descriptors. |

| Bader Charge Analysis | Software Tool | Partitions electron density to assign charges to atoms, providing a key electronic descriptor for charge transfer analysis. |

| Scikit-learn | Python Library | The core library for feature preprocessing (scaling), selection, dimensionality reduction, and initial ML model prototyping. |

| Jupyter Notebook / Lab | Development Environment | Provides an interactive platform for exploratory data analysis, feature engineering, and visualization. |

| High-Performance Computing (HPC) Cluster | Infrastructure | Essential for performing the computationally intensive DFT calculations required for descriptor generation. |

The development of robust machine learning models for catalyst screening under the Sabatier principle requires high-quality, integrated training datasets. This protocol details methods for curating and harmonizing data from computational repositories (e.g., CatApp, NOMAD, Materials Project) and experimental databases (e.g., Catalysis-Hub, NIST) to create a unified resource for predictive model training. The focus is on descriptors like adsorption energies, turnover frequencies, and stability metrics, critical for assessing catalyst activity and selectivity.

Within the broader thesis on Sabatier principle-driven ML catalyst screening, the quality and scope of training data dictate model success. This document provides application notes and detailed protocols for building a curated, multi-source catalyst database, addressing the "garbage in, garbage out" paradigm in materials informatics.

Research Reagent Solutions & Essential Materials

| Item Name | Function | Source/Example |

|---|---|---|

| Computational Database APIs | Programmatic access to calculated catalyst properties (e.g., adsorption energies, DFT structures). | Materials Project REST API, CatApp API, NOMAD API |

| Experimental Data Repositories | Sources for validated experimental catalytic performance data (activity, selectivity, stability). | Catalysis-Hub, NIST Chemical Kinetics Database, published literature data |

| Data Harmonization Toolkit | Software for unit conversion, descriptor calculation, and standardizing metadata. | pymatgen, ASE (Atomic Simulation Environment), custom Python scripts |

| Curation & Validation Software | Tools for identifying outliers, checking thermodynamic consistency, and validating structures. | CATkit, scikit-learn for statistical tests, manual expert review |

| Secure Storage Solution | Versioned, queryable database for the final integrated dataset. | PostgreSQL with SQLAlchemy, MongoDB, or dedicated FAIR data platform |

Protocols for Data Curation & Integration

Protocol: Harvesting Data from Computational Databases

Objective: Automatically collect and pre-process computational data for catalytic reactions (e.g., CO2 reduction, NH3 synthesis).

Materials: Python environment, requests library, pymatgen, target API keys.

Methodology:

- Define Target Reactions & Descriptors: Identify key intermediates and descriptors (e.g., *H, *CO, *N2 adsorption energies) based on Sabatier analysis.

- API Query Construction: For each target material (e.g., transition metals, alloys), construct API calls to fetch:

- Calculated adsorption energies.

- Relaxed atomic structures (CIF files).

- DFT calculation parameters (functional, k-point grid, energy cutoff).

- Local Data Storage: Store raw API responses in a structured directory (JSON format) with timestamps.

- Initial Standardization: Use pymatgen to convert all energies to consistent units (eV per adsorbate for adsorption energies, eV/atom for bulk formation energies), structures to standardized orientations, and tag metadata.

- Output: A raw computational data corpus ready for validation.

Protocol: Extracting and Standardizing Experimental Data

Objective: Assemble experimental kinetic and catalytic performance data with consistent metadata.

Materials: Access to experimental databases, text-mining tools (optional), data spreadsheet software.

Methodology:

- Source Identification: Query experimental databases (Catalysis-Hub) for target reactions. Supplement with manual literature extraction for underrepresented systems.

- Data Extraction Template: Use a predefined table to extract for each catalytic study:

- Catalyst composition and structure.

- Reaction conditions (T, P, conversion).

- Performance metrics (TOF, selectivity, activation energy).

- Characterization data (active site density, oxidation state).

- Unit Harmonization: Convert all performance data to standard units (TOF in s⁻¹, energies in kJ/mol or eV).

- Condition Tagging: Annotate each entry with a "condition fingerprint" (e.g., "Low-T, High-P") for later conditioning of ML models.

- Output: A cleaned experimental data table linked to source DOIs.
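The unit harmonization step might look like the sketch below. The record layout (`tof_time_unit`, `ea_unit`, and so on) is a hypothetical schema invented for this example, and the conversion factor 1 eV ≈ 96.485 kJ/mol is the usual per-particle to per-mole conversion.

```python
KJ_PER_MOL_PER_EV = 96.485   # 1 eV per particle is about 96.485 kJ/mol
KCAL_TO_KJ = 4.184
SECONDS_PER_UNIT = {"s": 1.0, "min": 60.0, "h": 3600.0}

def energy_to_ev(value, unit):
    """Convert an energy given in eV, kJ/mol, or kcal/mol to eV."""
    if unit == "eV":
        return value
    if unit == "kJ/mol":
        return value / KJ_PER_MOL_PER_EV
    if unit == "kcal/mol":
        return value * KCAL_TO_KJ / KJ_PER_MOL_PER_EV
    raise ValueError(f"unsupported energy unit: {unit}")

def tof_to_per_second(value, time_unit):
    """Convert a turnover frequency reported per s, min, or h to s^-1."""
    return value / SECONDS_PER_UNIT[time_unit]

def harmonize(entry):
    """Return a copy of one extracted record in standard units."""
    return {
        "catalyst": entry["catalyst"],
        "tof_per_s": tof_to_per_second(entry["tof"], entry["tof_time_unit"]),
        "activation_energy_eV": energy_to_ev(entry["ea"], entry["ea_unit"]),
        "source": entry["source"],
    }

# Hypothetical extracted record (values are not from any real study).
raw = {"catalyst": "Ni", "tof": 36.0, "tof_time_unit": "h",
       "ea": 96.485, "ea_unit": "kJ/mol", "source": "hypothetical-entry"}
clean = harmonize(raw)
```

Raising on unknown units, rather than passing values through, keeps silently mis-scaled entries out of the training table.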

Protocol: Data Integration and Sabatier-Descriptor Calculation

Objective: Merge computational and experimental datasets via common descriptors, focusing on Sabatier-derived features.

Materials: Datasets from the two preceding protocols (computational and experimental), Python with NumPy/pandas.

Methodology:

- Descriptor Alignment: Identify common keys, typically catalyst composition and surface facet.

- Calculate Sabatier Features: For each catalyst, compute:

- Scaling Relations: e.g., ΔE_OH vs. ΔE_O.

- Sabatier Activity Index: Proximity of descriptor values to the theoretical volcano peak.

- Thermodynamic Overpotential: For electrochemical reactions.

- Merge Datasets: Create a master table where each row is a unique catalyst, featuring columns for both computational descriptors and experimental performance.

- Handle Missing Data: Flag entries lacking either computational or experimental data for later imputation or exclusion.

- Output: A unified, feature-rich database table (see Table 1).

Table 1: Excerpt from Integrated Catalytic Database for Methanation (CO2 → CH4)

| Catalyst ID | Composition | Facet | ΔE_CO* (eV) [Comp] | ΔE_H* (eV) [Comp] | TOF (s⁻¹) [Exp] | Selectivity to CH4 (%) [Exp] | Sabatier Activity Index | Data Source Key |

|---|---|---|---|---|---|---|---|---|

| CATRu001 | Ru | (111) | -1.45 | -0.52 | 2.3E-2 | 99 | 0.87 | Comp: MP-33, Exp: DOI:10.1021/acscatal.9b04556 |

| CATNi007 | Ni | (211) | -1.21 | -0.61 | 5.7E-3 | 88 | 0.65 | Comp: CatAppNi211, Exp: CatalysisHubEntry_445 |

| CATFe012 | Fe3O4 | (001) | -0.89 | -0.32 | 1.1E-4 | 45 | 0.41 | Comp: NOMADFe3O4DFT, Exp: DOI:10.1039/C8CY02233F |
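A merge keyed on composition and facet, together with one possible activity index, can be sketched in pure Python. The exponential form of `sabatier_activity_index`, the `width_ev` parameter, and the optimum of -1.40 eV are assumptions for illustration only; the source does not define the index, and this sketch is not meant to reproduce the index values in the table. The CO adsorption energies and TOFs are taken from the table rows, with the Fe3O4 experimental entry deliberately omitted to show how a gap is flagged.

```python
import math

def sabatier_activity_index(descriptor_ev, optimum_ev, width_ev=0.5):
    """Illustrative index in (0, 1]: equals 1 at the assumed volcano peak."""
    return math.exp(-abs(descriptor_ev - optimum_ev) / width_ev)

def merge_by_catalyst(computational, experimental, optimum_ev):
    """Outer-join two dicts keyed by (composition, facet) and flag missing sides."""
    rows = []
    for key in sorted(set(computational) | set(experimental)):
        comp = computational.get(key)
        expt = experimental.get(key)
        d_e_co = comp["dE_CO_eV"] if comp else None
        rows.append({
            "composition": key[0],
            "facet": key[1],
            "dE_CO_eV": d_e_co,
            "tof_per_s": expt["tof_per_s"] if expt else None,
            "sabatier_index": (
                sabatier_activity_index(d_e_co, optimum_ev) if comp else None
            ),
            "missing": [
                name
                for name, record in (("computational", comp),
                                     ("experimental", expt))
                if record is None
            ],
        })
    return rows

computational = {
    ("Ru", "(111)"): {"dE_CO_eV": -1.45},
    ("Ni", "(211)"): {"dE_CO_eV": -1.21},
    ("Fe3O4", "(001)"): {"dE_CO_eV": -0.89},
}
experimental = {
    ("Ru", "(111)"): {"tof_per_s": 2.3e-2},
    ("Ni", "(211)"): {"tof_per_s": 5.7e-3},
}
OPTIMUM_EV = -1.40  # hypothetical volcano-peak position, not from the source
master = merge_by_catalyst(computational, experimental, OPTIMUM_EV)
```

Rows with a non-empty `missing` list can then be routed to imputation or excluded, as described in the missing-data step.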

Protocol: Quality Control and Validation

Objective: Ensure thermodynamic consistency and detect outliers in the integrated dataset.

Materials: Integrated dataset, statistical software (Python/scikit-learn), visualization tools.

Methodology:

- Consistency Checks: Verify that adsorption energies follow expected scaling relations across similar materials. Flag points >2σ from the trend line.

- Experimental Cross-Validation: For catalysts with multiple experimental sources, report standard deviation of TOF. Flag entries with order-of-magnitude discrepancies.

- DFT Parameter Audit: Group computational data by exchange-correlation functional. Apply a known correction factor (e.g., for RPBE vs. PBE) where possible to unify energy scales.

- Expert Review: A subset (e.g., 10%) of integrated entries is reviewed manually by a catalysis expert for plausibility.

- Output: A validated, version-tagged dataset with quality flags for each entry.
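The scaling-relation consistency check can be sketched as a linear fit followed by a residual threshold. The data below are synthetic (a line with slope 0.5 plus small noise and one planted outlier), and the function name is an assumption for this example.

```python
import numpy as np

def flag_scaling_outliers(x, y, n_sigma=2.0):
    """Fit y = slope * x + intercept and flag points beyond n_sigma residuals."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    slope, intercept = np.polyfit(x, y, 1)
    residuals = y - (slope * x + intercept)
    sigma = residuals.std(ddof=2)  # two fitted parameters
    return np.abs(residuals) > n_sigma * sigma, slope, intercept

# Synthetic ΔE_O vs. ΔE_OH data (eV) with one inconsistent entry at index 10.
rng = np.random.default_rng(0)
dE_O = np.linspace(-5.0, -2.0, 20)
dE_OH = 0.5 * dE_O + 0.1 + rng.normal(0.0, 0.02, size=20)
dE_OH[10] += 0.8

flags, slope, intercept = flag_scaling_outliers(dE_O, dE_OH)
```

Because the outlier itself inflates σ and tilts the fit, a more careful version might refit after removing flagged points or use a robust estimator; this sketch keeps the single-pass rule stated in the protocol.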

Workflow & Relationship Visualizations

Title: Data Curation and Integration Workflow for Catalyst ML

Title: Quality Control Pipeline for Each Data Entry

This document provides application notes and experimental protocols for key machine learning (ML) model architectures within a thesis focused on ML-driven catalyst screening guided by the Sabatier principle. The Sabatier principle posits an optimal intermediate catalyst-adsorbate binding strength for maximal catalytic activity. The research goal is to computationally screen vast material/chemical spaces to identify candidates that satisfy this principle for targeted reactions (e.g., CO₂ hydrogenation, nitrogen reduction). The models discussed herein are applied to predict critical regression targets (e.g., adsorption energies, reaction barriers) and classification labels (e.g., stable/unstable, active/selective/inactive) from catalyst descriptors.

Model Architectures: Application Notes

Graph Neural Networks (GNNs)

Application in Catalyst Screening: GNNs operate directly on graph representations of molecules and materials. Atoms are nodes, bonds are edges. This is ideal for heterogeneous catalysts (e.g., single-atom alloys, metal-organic frameworks) and molecular catalysts.

- Regression Tasks: Prediction of adsorption energies (ΔE_ads), activation energies (Eₐ), turnover frequencies (TOF).

- Classification Tasks: Identification of stable catalyst surfaces under reaction conditions, prediction of selectivity toward a desired product.

- Advantage: Naturally incorporates topological and bond information without manual feature engineering. Can learn from the 3D geometry.

Random Forests (RF)

Application in Catalyst Screening: An ensemble of decision trees, robust to noise and capable of ranking feature importance.

- Regression Tasks: Predicting bulk properties (formation energy, band gap) from compositional and structural descriptors.

- Classification Tasks: Binary classification of catalyst durability (leaching-resistant vs. leaching-prone).

- Advantage: Interpretable (feature importance scores), works well on small to medium-sized datasets common in high-throughput computational chemistry.

Neural Networks (NNs) / Deep Neural Networks (DNNs)

Application in Catalyst Screening: Standard fully-connected networks for learning complex, non-linear relationships in high-dimensional descriptor spaces.

- Regression Tasks: Learning from "hand-crafted" features (e.g., d-band center, electronegativity, coordination number) to predict activity descriptors.

- Classification Tasks: Multi-label classification of reaction pathways.

- Advantage: High representational power for large datasets. Can be combined with autoencoders for dimensionality reduction.

Table 1: Comparative Analysis of Model Architectures for Catalyst Screening

| Feature | Graph Neural Networks (GNNs) | Random Forests (RF) | Neural Networks (DNNs) |

|---|---|---|---|

| Primary Input | Graph (Atom/Bond features) | Feature Vector (Descriptors) | Feature Vector (Descriptors) |

| Best For | Non-periodic & periodic structures | Tabular data with clear features | High-dimensional, complex tabular data |

| Interpretability | Low (Black-box) | High (Feature Importance) | Medium-Low (requires saliency maps) |

| Data Efficiency | Medium-High | High (works on small data) | Low (requires large datasets) |

| Typical Output (Regression) | ΔE_ads, Eₐ | Formation Energy, Bulk Modulus | Reaction Rate, TOF |

| Typical Output (Classification) | Stability, Active Site Type | Stable/Unstable, Selective/Non-selective | Phase Classification, Pathway Probability |

| Key Advantage for Sabatier | Learns from geometry; no descriptor bias. | Identifies key physical descriptors. | Models highly non-linear "volcano" relationships. |

Experimental Protocols

Protocol 3.1: GNN Training for Adsorption Energy Prediction

Aim: Train a GNN model to predict the adsorption energy of CO* on single-atom alloy surfaces.

Workflow Diagram Title: GNN Training Workflow for Catalyst Property Prediction

Materials & Software:

- Dataset: OC20 or Catlas, or custom DFT calculations.

- Framework: PyTorch Geometric (PyG) or Deep Graph Library (DGL).

- GNN Architecture: e.g., SchNet, MEGNet, or CGCNN.

Procedure:

- Graph Construction: Represent each catalyst-adsorbate system as a graph. Nodes within a cutoff radius are connected.

- Featurization: Encode atom types (one-hot or atomic number) and optionally periodic boundary conditions.

- Data Splitting: Split dataset randomly or by scaffold to prevent data leakage.

- Model Definition: Implement a GNN with 3-5 message-passing layers. Use a global pooling (add/mean) to obtain a graph-level representation.

- Training Loop: Use Mean Absolute Error (MAE) loss and the Adam optimizer. Train for up to a maximum number of epochs (e.g., 500), with early stopping based on validation loss.

- Evaluation: Report MAE and RMSE on the held-out test set. Analyze errors vs. adsorbate/surface types.
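The graph construction and featurization steps can be illustrated without a deep learning framework. The sketch below builds a cutoff-radius edge list and one-hot atom features with NumPy; it ignores periodic boundary conditions (an explicit simplification), and the four-atom geometry is a made-up toy system rather than a relaxed structure.

```python
import numpy as np

def build_radius_graph(positions, cutoff):
    """Directed edges i -> j for all atom pairs with 0 < d_ij <= cutoff.

    Simplification: no periodic images are considered.
    """
    positions = np.asarray(positions, dtype=float)
    diff = positions[:, None, :] - positions[None, :, :]
    dist = np.linalg.norm(diff, axis=-1)
    src, dst = np.nonzero((dist <= cutoff) & (dist > 0.0))
    return np.stack([src, dst]), dist[src, dst]

def one_hot_atomic_numbers(numbers, vocabulary):
    """One-hot encode atomic numbers against a fixed element vocabulary."""
    features = np.zeros((len(numbers), len(vocabulary)))
    for row, z in enumerate(numbers):
        features[row, vocabulary.index(z)] = 1.0
    return features

# Toy system: three Pt atoms in a row (2.8 Å apart) with H atop the middle one.
positions = [
    [0.0, 0.0, 0.0],
    [2.8, 0.0, 0.0],
    [5.6, 0.0, 0.0],
    [2.8, 0.0, 1.6],
]
atomic_numbers = [78, 78, 78, 1]

edge_index, edge_length = build_radius_graph(positions, cutoff=3.0)
node_features = one_hot_atomic_numbers(atomic_numbers, vocabulary=[1, 78])
```

The `(2, n_edges)` layout follows the edge-index convention used by common graph libraries, so the arrays could be converted to tensors and passed to a message-passing model; edge lengths are often expanded into a radial basis before use.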

Protocol 3.2: Random Forest for Catalyst Stability Classification

Aim: Classify metal-oxide catalysts as "Stable" or "Unstable" under oxidizing conditions based on elemental descriptors.

Workflow Diagram Title: RF Classification Protocol for Catalyst Stability

Materials & Software:

- Dataset: Materials Project API (for formation energies), ICSD, or experimental stability data.

- Library: scikit-learn.

Procedure:

- Descriptor Calculation: For each composition, compute features: elemental properties (mean, max, min, range), ionic character, estimated surface energy.

- Stratified Splitting: Split data 80/20, preserving the proportion of 'Stable'/'Unstable' labels.

- Model Training: Instantiate a RandomForestClassifier. Use GridSearchCV with 5-fold cross-validation to optimize max_depth, n_estimators, and min_samples_split.

- Evaluation: Predict on the test set. Generate a confusion matrix and calculate metrics (Accuracy, Precision, Recall, F1-score, ROC-AUC).

- Interpretation: Extract and plot feature importances from the best model to gain physical insight.
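A compact scikit-learn sketch of this protocol is shown below. The dataset is synthetic (`make_classification` stands in for real elemental descriptors and stability labels), and the small parameter grid and F1 scoring are illustrative choices rather than recommended settings.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import confusion_matrix, f1_score
from sklearn.model_selection import GridSearchCV, train_test_split

# Synthetic stand-in: label 1 plays the role of "Unstable" (minority class).
X, y = make_classification(n_samples=300, n_features=8, n_informative=4,
                           weights=[0.7, 0.3], random_state=0)

# Stratified 80/20 split preserves the class proportions in both parts.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0)

param_grid = {
    "max_depth": [3, None],
    "n_estimators": [50, 100],
    "min_samples_split": [2, 5],
}
search = GridSearchCV(RandomForestClassifier(random_state=0),
                      param_grid, cv=5, scoring="f1")
search.fit(X_train, y_train)

best_model = search.best_estimator_
y_pred = best_model.predict(X_test)
cm = confusion_matrix(y_test, y_pred)
test_f1 = f1_score(y_test, y_pred)
importances = best_model.feature_importances_
```

`importances` sums to one across features; for correlated descriptors, permutation importance on held-out data is often a more reliable basis for physical interpretation than these impurity-based scores.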

Protocol 3.3: DNN for Sabatier "Volcano" Relationship Modeling

Aim: Train a DNN to model the non-linear, volcano-shaped relationship between a descriptor (e.g., d-band center, *OH binding energy) and catalytic activity (e.g., log(TOF)).

Workflow Diagram Title: DNN Modeling of Sabatier Volcano Relationship

Materials & Software:

- Data: Curated dataset from literature or high-throughput computation (e.g., for oxygen reduction reaction).

- Framework: TensorFlow/Keras or PyTorch.

Procedure:

- Data Preparation: Collect pairs of descriptor (X) and activity (Y). Normalize X to [0,1] and Y to have zero mean and unit variance.

- Architecture Design: Build a sequential DNN with 1-2 input neurons, 3-5 hidden layers (with ReLU activation), and 1 linear output neuron. Incorporate Dropout (e.g., 20%) and L2 regularization to prevent overfitting.

- Training: Use Mean Squared Error (MSE) loss. Employ a learning rate scheduler. Monitor validation loss.

- Volcano Plotting: Use the trained model to predict Y for a finely spaced range of X values. Plot predicted Y vs. X to visualize the learned volcano curve.

- Analysis: Use automatic differentiation to compute the derivative of the output w.r.t. the input. The optimal descriptor value is where this derivative crosses zero (from positive to negative) at the top of the learned volcano.

The Scientist's Toolkit

Table 2: Essential Research Reagent Solutions & Materials for ML Catalyst Screening

| Item | Function in Research | Example/Supplier |

|---|---|---|

| DFT Software (VASP, Quantum ESPRESSO) | Generates high-quality training and testing data (energies, barriers, electronic structures). | Core computational reagent. |

| Catalysis Databases (Catlas, OC20, NOMAD) | Provides pre-computed datasets for model training and benchmarking. | Catlas.dtu.dk, Open Catalyst Project |

| Machine Learning Frameworks (PyTorch, TensorFlow, scikit-learn) | Provides libraries to build, train, and evaluate GNN, RF, and NN models. | Open-source software. |

| Graph ML Libraries (PyG, DGL) | Specialized extensions for efficient GNN implementation on catalyst graphs. | Open-source software. |

| High-Performance Computing (HPC) Cluster | Essential for DFT data generation and training large neural network models. | Local university cluster or cloud (AWS, GCP). |

| Descriptor Calculation Tools (pymatgen, ASE, RDKit) | Computes compositional, structural, and electronic features for RF/NN inputs. | Open-source Python packages. |

| Hyperparameter Optimization (Optuna, wandb) | Automates the search for optimal model parameters, improving performance. | Open-source software. |

| Visualization Libraries (matplotlib, seaborn, VESTA) | Creates volcano plots, parity plots, and visualizes catalyst structures. | Open-source software. |

This application note details the integration of Active Learning (AL) loops with the Sabatier principle for high-throughput catalyst and drug candidate screening. Within the broader thesis of "Machine Learning-Driven Catalyst Discovery Guided by the Sabatier Principle," this protocol provides a methodological framework for iteratively identifying materials or molecules that reside at the peak of activity—the "Sabatier Sweet Spot"—where binding energy is neither too strong nor too weak. The approach is directly transferable to drug development for targeting enzyme active sites or protein-protein interactions.

Core Protocol: The Active Learning Loop

Objective: To minimize the number of expensive experimental or high-fidelity computational measurements required to discover new high-performance catalysts or bioactive compounds by iteratively training a machine learning model on optimally selected data points.

Key Steps: Initial Library Curation → Featurization → Initial Model Training → Acquisition Function Query → High-Fidelity Validation → Database Update → Model Retraining.

Detailed Experimental & Computational Methodologies

Protocol 1: Initial Dataset Creation & Featurization

Materials:

- Compound/Material Database (e.g., QM9, Materials Project, ChEMBL, in-house library).

- High-performance computing cluster or cloud computing resources.

- DFT software (e.g., VASP, Gaussian) or molecular docking suite (e.g., AutoDock Vina, Glide).

Method:

- Define Target Reaction: Select a specific catalytic reaction (e.g., CO2 reduction to methane, oxygen evolution) or a protein target (e.g., kinase, protease).

- Curate Initial Seed Set: Select 50-100 diverse candidates from a large library. Diversity can be ensured via fingerprint (e.g., Morgan fingerprints, Coulomb matrix) clustering.

- Compute Sabatier-Relevant Descriptors (High-Fidelity Tier):

- For catalysts: Perform DFT calculations to obtain key adsorption energies (e.g., *C, *O, *OH for CO2RR; *O, *OOH for OER). Use a standardized slab model and consistent convergence criteria.

- For drug candidates: Perform molecular docking and MD simulations to calculate binding free energy (ΔG) or inhibition constant (Ki). Use a consistent protein structure and binding site definition.

- Determine Activity Labels: Calculate the activity metric. For catalysts, this is often the theoretical overpotential or turnover frequency (TOF) derived from the adsorption energies via a microkinetic model or a scaling relation volcano plot. For drugs, use the computed ΔG or a binary label (active/inactive) based on a threshold.

- Create Low-Fidelity Features: Generate rapid-to-compute features for the entire large library (>10k compounds). These may include:

- Composition-based features (for materials).

- Molecular fingerprints, SMILES-based descriptors, or pre-trained graph neural network embeddings (for molecules).

- Simple semi-empirical or force-field energy estimations.

Protocol 2: Active Learning Loop Execution

Materials:

- Initial seed dataset from Protocol 1.

- ML library (e.g., scikit-learn, PyTorch, DeepChem).

- Unlabeled candidate pool (with low-fidelity features).

Method:

- Initial Model Training: Train a surrogate machine learning model (e.g., Gaussian Process Regressor, Gradient Boosting, or Graph Neural Network) on the seed dataset. Use Sabatier-derived activity (e.g., overpotential, -ΔG) as the target y and the low-fidelity features as the input X.

- Acquisition Function Query: Apply an acquisition function to the unlabeled pool to select the next batch (N=5-10) of candidates for high-fidelity validation.

- Common Acquisition Functions:

- Expected Improvement (EI): Maximizes the expected amount by which a candidate improves upon the current best, weighting both the probability and the size of the improvement.

- Upper Confidence Bound (UCB): Balances exploitation (high predicted value) and exploration (high uncertainty).

- Query-by-Committee: Selects points where multiple models (a committee) disagree most.

- High-Fidelity Validation: Subject the acquired batch to the high-fidelity evaluation described in Protocol 1, Step 3 & 4.

- Database Update & Retraining: Append the newly acquired {candidate, high-fidelity features, activity label} tuples to the training database. Retrain the surrogate ML model on the expanded dataset.

- Loop Termination: Repeat steps 2-4 until a performance target is met (e.g., discovery of a candidate with overpotential < 0.4 V or ΔG < -10 kcal/mol) or a computational budget is exhausted.
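The two closed-form acquisition functions named above can be written with the standard library alone. This sketch assumes a maximization target (for example, negative overpotential or -ΔG) and a surrogate that returns a Gaussian predictive mean and standard deviation per candidate; the function names and the `xi` and `kappa` defaults are illustrative.

```python
import math

def _norm_pdf(z):
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)

def _norm_cdf(z):
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def expected_improvement(mu, sigma, best, xi=0.0):
    """EI for maximization: E[max(f - best - xi, 0)] with f ~ N(mu, sigma^2)."""
    if sigma <= 0.0:
        return max(mu - best - xi, 0.0)
    z = (mu - best - xi) / sigma
    return (mu - best - xi) * _norm_cdf(z) + sigma * _norm_pdf(z)

def upper_confidence_bound(mu, sigma, kappa=2.0):
    """UCB: predicted value plus kappa standard deviations of exploration bonus."""
    return mu + kappa * sigma

def select_batch(mus, sigmas, best, n=5):
    """Indices of the n candidates with the highest expected improvement."""
    scores = [expected_improvement(m, s, best) for m, s in zip(mus, sigmas)]
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return ranked[:n]

# Three hypothetical candidates: confident-poor, confident-good, uncertain.
batch = select_batch(mus=[0.0, 1.0, 0.2], sigmas=[0.1, 0.1, 1.0], best=0.5, n=2)
```

Ranking the top n by EI is a greedy batch heuristic; it can select near-duplicate candidates, so batch-aware variants or a diversity filter are worth considering when N is large.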

Data Presentation

Table 1: Performance Comparison of Acquisition Functions in a Model CO2RR Catalyst Search

| Acquisition Function | Iterations to Find η < 0.5 V | Total DFT Calculations Used | Best Candidate Adsorption Energy ΔE_CO* (eV) |

|---|---|---|---|

| Random Sampling | 38 | 150 | -0.68 |

| Expected Improvement (EI) | 12 | 80 | -0.55 |

| Upper Confidence Bound (UCB) | 9 | 75 | -0.51 |

| Thompson Sampling | 14 | 85 | -0.58 |

Table 2: Essential Research Reagent Solutions & Computational Tools

| Item Name | Function/Description | Example Vendor/Software |

|---|---|---|

| Catalyst Screening | | |

| VASP | Density Functional Theory software for calculating adsorption energies. | VASP Software GmbH |

| ASE (Atomic Simulation Environment) | Python framework for setting up, running, and analyzing DFT calculations. | https://wiki.fysik.dtu.dk/ase/ |

| CatKit | Python toolkit for building and analyzing catalyst surface models. | GitHub: SUNCAT-Center/CatKit |

| Drug Candidate Screening | | |

| AutoDock Vina | Open-source software for molecular docking and binding affinity prediction. | The Scripps Research Institute |

| Schrödinger Suite | Commercial software for integrated drug discovery (Glide, Desmond, Maestro). | Schrödinger, Inc. |

| RDKit | Open-source cheminformatics toolkit for fingerprint generation and molecule manipulation. | http://www.rdkit.org/ |

| Active Learning Core | | |

| scikit-learn | ML library for GP, GBR, and other surrogate models. | https://scikit-learn.org/ |

| GPyTorch | Library for scalable Gaussian Process regression. | https://gpytorch.ai/ |

| DeepChem | Deep learning library for drug discovery and quantum chemistry. | https://deepchem.io/ |

Visualizations

Diagram 1: Active Learning Loop for Sabatier Optimization

Diagram 2: ML-Sabatier Framework Integration

This case study is framed within a broader thesis investigating the application of the Sabatier principle through machine learning (ML) for high-throughput catalyst screening. The Sabatier principle posits that optimal catalytic activity requires an intermediate binding strength between catalyst and reactants: binding that is too weak fails to activate the reactant, while binding that is too strong inhibits product desorption. Modern ML models, trained on computational and experimental datasets, learn multi-dimensional descriptors that go beyond a single binding energy to predict catalytic performance for demanding reactions such as hydrogenation and C–H activation, accelerating the discovery of novel, efficient catalysts.

Application Notes: ML-Driven Workflow for Catalyst Discovery

The integration of ML into catalyst discovery follows a closed-loop, iterative workflow. Key stages include data acquisition, feature engineering, model training & prediction, and experimental validation. Successful implementations have demonstrated the ability to screen thousands of candidate materials in silico, prioritizing a handful for synthesis and testing.

Key Quantitative Outcomes from Recent Studies

The following benchmarks are representative of recently reported campaigns:

Table 1: Performance Metrics from ML-Driven Catalyst Discovery Campaigns

| Reaction Type | ML Model(s) Used | Initial Candidate Pool | Top Candidates Validated | Performance Gain vs. Baseline | Key Reference/Year |
|---|---|---|---|---|---|
| Alkyne Semi-Hydrogenation | Gradient Boosting, NN | ~4,000 bimetallic surfaces | 6 novel Pd-based alloys | 50-80% higher selectivity to alkene | (Cao et al., 2023) |
| Methane C–H Activation | Kernel Ridge Regression | 12,000 transition metal complexes | 4 Fe & Co complexes | Turnover Frequency (TOF) increased by 2 orders of magnitude | (Guan et al., 2022) |
| Directed C–H Arylation | Random Forest, GNN | ~3,000 phosphine ligands | 9 novel ligands | Yield improved from 45% to 92% | (Kwon et al., 2024) |
| CO2 Hydrogenation | Ensemble Learning | 1,200 oxide-supported single-atom catalysts | 3 Ni/CeO2 variants | Activity 5x higher at 50°C lower temperature | (Liu et al., 2023) |

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Materials for ML-Guided Catalyst Experimentation

| Item / Reagent | Function in the Workflow |
|---|---|
| High-Throughput Experimentation (HTE) Kit | Enables parallel synthesis and testing of ML-predicted catalyst candidates (e.g., in 96-well plate format). |
| Precursor Libraries (Metal Salts, Ligands) | Diverse, modular chemical building blocks for rapid synthesis of predicted catalyst structures. |
| Gaseous Reactant Mixtures (H2, Substrates) | For consistent testing of hydrogenation or C–H activation reactions under controlled atmospheres. |
| Internal Standard Kits (for GC/HPLC) | Essential for accurate, quantitative analysis of reaction conversion, yield, and selectivity in parallel. |
| Chemically-Aware ML Models (e.g., SchNet, models trained on the OC20 dataset) | Neural network architectures and pre-trained models that learn material and molecular representations directly from structure. |
| Automated Pressure Reactor Systems | Allows safe, reproducible testing of reactions requiring pressurized hydrogen or other gases. |

Experimental Protocols

Protocol A: High-Throughput Synthesis and Screening of Predicted Bimetallic Hydrogenation Catalysts

Objective: To experimentally validate ML-predicted top-performing bimetallic nanoparticle catalysts for selective alkyne hydrogenation.

Materials:

- Metal salt precursors (e.g., Pd(NO3)2, Ga(NO3)3, etc., as predicted).

- Carbon support (e.g., Vulcan XC-72).

- Reducing agent solution (NaBH4, 0.1 M in ice-cold water).

- HTE reactor block (parallel pressurized reactors).

- Substrate solution (e.g., phenylacetylene in ethanol).

- Hydrogen gas (99.99%).

Procedure:

- Candidate Selection: From the ML model's ranked list, select the top 10-20 bimetallic compositions (e.g., PdGa, PdZn, AuTi).

- Parallel Incipient Wetness Impregnation: a. In a 96-well plate, prepare aqueous solutions of the required metal salts to achieve the target molar ratio and total metal loading (e.g., 2 wt%). b. Using a liquid handler, add calculated volumes of each solution to individual wells containing pre-weighed carbon support. Mix thoroughly.

- Parallel Reduction: a. Transfer the wet mixtures to a parallel filtration setup. b. Slowly add excess ice-cold NaBH4 solution to each well with vigorous stirring to reduce the metal ions to nanoparticles. c. Wash with water and ethanol, then transfer catalysts to a drying array. Dry under vacuum at 80°C for 2 hours.

- High-Throughput Catalytic Testing: a. Load ~5 mg of each catalyst into individual vessels of a parallel pressure reactor. b. Add a standard volume of substrate solution (e.g., 2 mL of 10 mM phenylacetylene in ethanol). c. Seal reactors, purge with H2, then pressurize to the target H2 pressure (e.g., 3 bar). d. Agitate and heat the block to the reaction temperature (e.g., 50°C) for a fixed time (e.g., 30 min).

- Analysis & Validation: a. Quench reactions and filter catalyst using a parallel filtration system. b. Analyze filtrates via parallel GC-FID using internal standards (e.g., n-dodecane). c. Calculate conversion, selectivity to styrene, and full product distribution for each candidate. d. Feed performance data back into the ML model for refinement and next-generation predictions.
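
The conversion and selectivity calculation in the analysis step can be sketched as follows. This is a minimal illustration assuming internal-standard quantification of the form n_i = RF_i × (A_i / A_IS) × n_IS and assuming that styrene and ethylbenzene are the only products. The response factors, peak areas, and function names are hypothetical placeholders, not values from this protocol.

```python
def moles_from_gc(area, area_standard, moles_standard, response_factor=1.0):
    """Convert a GC-FID peak area to moles via the internal standard.
    response_factor is an assumed, separately calibrated relative response."""
    return response_factor * (area / area_standard) * moles_standard


def hydrogenation_metrics(n_alkyne_initial, n_alkyne, n_alkene, n_alkane):
    """Return (conversion, alkene selectivity) as fractions between 0 and 1."""
    conversion = (n_alkyne_initial - n_alkyne) / n_alkyne_initial
    products = n_alkene + n_alkane
    selectivity = n_alkene / products if products > 0 else 0.0
    return conversion, selectivity


# Illustrative numbers: 2 mL of 10 mM phenylacetylene is 20 umol of substrate.
n0 = 20.0                      # umol phenylacetylene charged
n_is = 10.0                    # umol n-dodecane internal standard (assumed amount)
area_is = 1000.0               # hypothetical peak areas
n_alkyne = moles_from_gc(500.0, area_is, n_is)     # residual phenylacetylene
n_alkene = moles_from_gc(1200.0, area_is, n_is)    # styrene
n_alkane = moles_from_gc(300.0, area_is, n_is)     # ethylbenzene

conversion, selectivity = hydrogenation_metrics(n0, n_alkyne, n_alkene, n_alkane)
print(f"conversion = {conversion:.2f}, selectivity to styrene = {selectivity:.2f}")
```

Defining selectivity over detected products, rather than over converted substrate, is a design choice. The two definitions agree only when the mass balance closes, so comparing them is a quick check for unaccounted by-products.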

Protocol B: Screening ML-Predicted Molecular C–H Activation Catalysts

Objective: To test novel ligand-metal complexes predicted by ML for C–H arylation reactivity.

Materials:

- Predicted ligand structures (commercially available or synthesized via parallel chemistry).

- Metal precursor (e.g., Pd(OAc)2).

- Substrates (e.g., arene and aryl halide).

- Base (e.g., Cs2CO3).

- Solvent (e.g., toluene).

- HTE microwave reactor or heated agitation block.

Procedure:

- In-Situ Catalyst Formation: a. In a 96-well plate, prepare stock solutions of each unique ligand in toluene. b. Using a liquid handler, aliquot ligand solution, Pd(OAc)2 stock solution, arene substrate, aryl halide, and base into individual microwave vials to a final volume of 1 mL. The metal:ligand ratio should be 1:2.

- Parallelized Reaction Execution: a. Seal vials and load into an HTE microwave reactor or a heated, agitated block. b. Run reactions under uniform conditions (e.g., 120°C for 1 hour).

- Reaction Quenching and Analysis: a. After cooling, quench all reactions by adding a standard volume of a quenching/standard solution (e.g., 0.5 mL of ethyl acetate with dibromobenzene as internal standard). b. Shake the plate vigorously, then allow solids to settle or use a parallel filtration plate. c. Analyze supernatant from each well via parallel UPLC-MS to determine conversion and yield of the biaryl product.

- Hit Identification & Model Retraining: a. Rank catalysts by product yield. b. Correlate experimental yield with ML-predicted activity/descriptor values. c. Use the new experimental data (successes and failures) to retrain and improve the predictive model for the next design cycle.
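
One way to carry out the ranking and correlation in the hit-identification step is a Spearman rank correlation between predicted scores and measured yields. The sketch below uses only the standard library. The ligand labels and numbers are hypothetical, and Spearman is one reasonable choice of metric rather than the one prescribed by this protocol.

```python
import math


def average_ranks(values):
    """Rank values from 1 (smallest) upward, giving tied values their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


def spearman(a, b):
    """Spearman correlation: Pearson correlation of the rank vectors."""
    return pearson(average_ranks(a), average_ranks(b))


def rank_hits(yields):
    """Return catalyst labels sorted from highest to lowest yield."""
    return sorted(yields, key=yields.get, reverse=True)


# Hypothetical ligands, model scores, and measured yields (%)
predicted = {"L1": 0.9, "L2": 0.4, "L3": 0.7, "L4": 0.2}
measured = {"L1": 92.0, "L2": 45.0, "L3": 60.0, "L4": 10.0}
labels = list(predicted)
rho = spearman([predicted[k] for k in labels], [measured[k] for k in labels])
print(rank_hits(measured), rho)
```

A rank-based metric is used here because the screening decision depends on the ordering of candidates, not on the absolute scale of the predicted scores.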

Visualizations

Title: Closed-Loop ML Catalyst Discovery Workflow

Title: From Sabatier Principle to ML Descriptors

Overcoming Roadblocks: Solving Data Scarcity, Overfitting, and Model Uncertainty in Catalytic ML

Within catalyst screening research guided by the Sabatier principle, which posits an optimal intermediate adsorbate binding energy for maximum catalytic activity, the acquisition of high-fidelity experimental or computational data is a severe bottleneck. This creates a "small data" problem, hindering the development of accurate machine learning (ML) models for rapid discovery. This Application Note details integrated methodologies of Transfer Learning (TL) and Multi-Fidelity (MF) modeling to overcome this constraint, enabling efficient predictive screening of catalyst candidates.

Core Methodologies: Protocols and Application

Protocol 1: Multi-Fidelity Modeling for Catalyst Property Prediction

Objective: To predict high-fidelity adsorption energies (e.g., from DFT) by leveraging abundant low-fidelity data (e.g., from semi-empirical methods or lower-level DFT) and a limited set of high-fidelity data.

Workflow:

- Data Collection Tiers:

  - Low-Fidelity (LF) Data: Calculate adsorption energies for a large candidate set (~10,000) using fast, approximate methods (e.g., PM7, DFTB, or low-precision DFT).

  - High-Fidelity (HF) Data: Calculate adsorption energies for a carefully selected subset (~100-500) using accurate, computationally expensive methods (e.g., hybrid DFT with a large basis set).

- Model Architecture (Autoregressive MF Scheme):

  - Train a primary model (e.g., Gaussian Process or Neural Network) on the large LF dataset to learn the general trends.

  - Train a secondary model that uses the predictions of the LF model as an input feature, along with the original descriptors, to learn the correction needed to map LF to HF values. This model is trained exclusively on the limited HF dataset.

- Prediction: For a new candidate, the LF model provides an initial estimate. The correction model then refines this estimate using the learned discrepancy function.
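
The two-stage scheme above can be illustrated with a deliberately small example. In this sketch, ordinary least squares stands in for both the primary and the correction model (the protocol itself suggests a Gaussian Process or neural network), a single scalar descriptor `x` is assumed, and the synthetic LF and HF functions are invented purely so the result can be checked.

```python
def fit_least_squares(rows, targets):
    """Solve the normal equations (A^T A) w = A^T y by Gauss-Jordan elimination."""
    n = len(rows[0])
    aug = [[sum(r[i] * r[j] for r in rows) for j in range(n)]
           + [sum(r[i] * y for r, y in zip(rows, targets))] for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda k: abs(aug[k][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for row in range(n):
            if row != col:
                factor = aug[row][col] / aug[col][col]
                aug[row] = [a - factor * b for a, b in zip(aug[row], aug[col])]
    return [aug[i][n] / aug[i][i] for i in range(n)]


def lf_features(x):
    """Quadratic basis for the LF surrogate (an illustrative assumption)."""
    return [1.0, x, x * x]


def train_multifidelity(lf_data, hf_data):
    """Return (predict_lf, predict_hf) trained on (descriptor, energy) pairs."""
    w_lf = fit_least_squares([lf_features(x) for x, _ in lf_data],
                             [e for _, e in lf_data])

    def predict_lf(x):
        return sum(w * f for w, f in zip(w_lf, lf_features(x)))

    # Correction model: inputs are the LF prediction and the original
    # descriptor; it is fitted on the small HF set only.
    w_corr = fit_least_squares([[1.0, predict_lf(x), x] for x, _ in hf_data],
                               [e for _, e in hf_data])

    def predict_hf(x):
        return sum(w * f for w, f in zip(w_corr, [1.0, predict_lf(x), x]))

    return predict_lf, predict_hf


# Synthetic data (assumption): HF = 1.2 * LF + 0.3 * x - 0.1
lf_data = [(x, x * x) for x in (-2.0 + 0.1 * i for i in range(41))]
hf_data = [(x, 1.2 * x * x + 0.3 * x - 0.1) for x in (-1.5, -0.5, 0.2, 0.9, 1.7)]
predict_lf, predict_hf = train_multifidelity(lf_data, hf_data)
print(predict_lf(1.0), predict_hf(1.0))
```

Because the correction model only has to learn the LF-to-HF discrepancy, which is usually smoother than the property itself, five HF points are enough in this toy case. Real systems will need more.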

Quantitative Data Summary: Table 1: Representative Performance of Multi-Fidelity vs. Single-Fidelity Models for Adsorption Energy Prediction.

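| Model Type | HF Training Set Size | Mean Absolute Error (eV) | Computational Cost (rel. to 100 HF calculations) |
|---|---|---|---|
| Single-Fidelity (HF only) | 100 | 0.15 | 100% |
| Single-Fidelity (HF only) | 500 | 0.08 | 500% |
| Multi-Fidelity (LF=10k, HF=100) | 100 | 0.10 | ~101% |
| Multi-Fidelity (LF=10k, HF=500) | 500 | 0.05 | ~501% |

Assumptions: Each LF calculation costs ~0.01% of an HF calculation, so the 10,000-point LF tier adds roughly one HF-equivalent calculation (~1 percentage point) to the total. The multi-fidelity benefit is therefore the lower error at an essentially unchanged HF budget. Data is illustrative of trends observed in recent literature.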

Protocol 2: Transfer Learning for Catalytic Activity Prediction

Objective: To predict catalytic activity (e.g., turnover frequency) for a target reaction with limited data by leveraging knowledge from a related source reaction with abundant data.

Workflow:

- Source Task Pre-training:

  - Collect a large dataset of catalyst descriptors and activity labels for a well-studied source reaction (e.g., CO₂ hydrogenation to methanol).

  - Train a deep neural network (DNN) on this source task until convergence. This model learns generalized features of catalyst-adsorbate interactions.

- Target Task Fine-tuning:

  - Collect a small dataset for the target reaction of interest (e.g., methane oxidation).

  - Remove the final output layer of the pre-trained source model.

  - Replace it with a new, randomly initialized output layer suited to the target task.

  - Freeze the early layers of the network (which encode general features) and fine-tune only the final few layers and the new output layer on the small target dataset. This limits overfitting to the small dataset and helps prevent catastrophic forgetting of the general knowledge learned on the source task.
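
The freeze-and-replace step can be illustrated without a deep learning framework. The sketch below is a simplification under stated assumptions: the "pre-trained" hidden layer is a pair of hypothetical weights rather than the result of real source-task training, all hidden layers are frozen (the protocol also fine-tunes the last few), and only a new, randomly initialized linear output layer is trained by gradient descent on a small synthetic target set.

```python
import math
import random


def hidden_features(x, weights, biases):
    """Frozen early layer: tanh(W x + b) for one descriptor vector x."""
    return [math.tanh(sum(w * xj for w, xj in zip(row, x)) + b)
            for row, b in zip(weights, biases)]


def fine_tune_output_layer(X, y, weights, biases, lr=0.05, epochs=2000, seed=0):
    """Train only a new output layer (v, c); the hidden layer is never updated."""
    rng = random.Random(seed)
    v = [rng.uniform(-0.1, 0.1) for _ in weights]   # new, randomly initialized
    c = 0.0
    feats = [hidden_features(x, weights, biases) for x in X]  # frozen: compute once
    for _ in range(epochs):
        grad_v, grad_c = [0.0] * len(v), 0.0
        for h, target in zip(feats, y):
            err = sum(vi * hi for vi, hi in zip(v, h)) + c - target
            for i, hi in enumerate(h):
                grad_v[i] += 2.0 * err * hi / len(X)
            grad_c += 2.0 * err / len(X)
        v = [vi - lr * g for vi, g in zip(v, grad_v)]
        c -= lr * grad_c
    return v, c


def mse(X, y, weights, biases, v, c):
    """Mean squared error of the frozen-feature model with output layer (v, c)."""
    total = 0.0
    for x, target in zip(X, y):
        h = hidden_features(x, weights, biases)
        total += (sum(vi * hi for vi, hi in zip(v, h)) + c - target) ** 2
    return total / len(X)


# Hypothetical "pre-trained" hidden layer and a tiny synthetic target dataset
W_pre, b_pre = [[0.8], [-0.5]], [0.1, 0.2]
X_target = [[-1.0], [-0.5], [0.0], [0.5], [1.0]]
y_target = [2.0 * h[0] - h[1] + 0.5
            for h in (hidden_features(x, W_pre, b_pre) for x in X_target)]

v0, c0 = fine_tune_output_layer(X_target, y_target, W_pre, b_pre, epochs=0)
v1, c1 = fine_tune_output_layer(X_target, y_target, W_pre, b_pre)
mse_before = mse(X_target, y_target, W_pre, b_pre, v0, c0)
mse_after = mse(X_target, y_target, W_pre, b_pre, v1, c1)
print(mse_before, mse_after)
```

In a framework such as PyTorch the same idea is usually expressed by disabling gradients on the early layers' parameters. The pure-Python version only makes the mechanics explicit.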

Quantitative Data Summary: Table 2: Performance Comparison of Transfer Learning vs. Training from Scratch on Small Datasets.

| Training Approach | Target Dataset Size | R² Score (Target Task) | Notes |
|---|---|---|---|
| From Scratch (No TL) | 50 | 0.30 ± 0.10 | High variance, poor generalization |
| Transfer Learning | 50 | 0.75 ± 0.05 | Stable, good generalization |
| From Scratch (No TL) | 200 | 0.65 ± 0.07 | Requires 4x more target data |
| Transfer Learning | 200 | 0.88 ± 0.03 | Near-optimal performance |

Protocol 3: Integrated TL-MF Workflow for Sabatier-Based Screening

Objective: To create a robust pipeline for predicting the peak of a Sabatier activity volcano using minimal high-quality data.

Integrated Protocol Steps:

- Multi-Fidelity Data Generation: Execute Protocol 1 to generate a large, corrected dataset of adsorption energies for key intermediates (e.g., *O, *COOH) across a catalyst library.

- Descriptor Calculation: Compute catalyst descriptors (e.g., d-band center, coordination number, elemental properties) for all candidates.

- Transfer Learning for Activity Model:

  - Source Pre-training: Use a large, public dataset (e.g., CatApp, NOMAD) linking descriptors to a different but related catalytic activity to pre-train an activity DNN.

  - Target Fine-tuning: Use a small set of experimental turnover frequencies (TOF) for your target reaction to fine-tune the pre-trained model, using the MF-corrected adsorption energies as key inputs.